During the 20th century, millions of tons of munitions were dumped into the oceans worldwide. After decades of decay, the problems caused by this unexploded ordnance (UXO) are becoming apparent. To enable more efficient salvage efforts, for example with autonomous underwater vehicles, access to representative data is paramount. However, such data has so far not been publicly available.

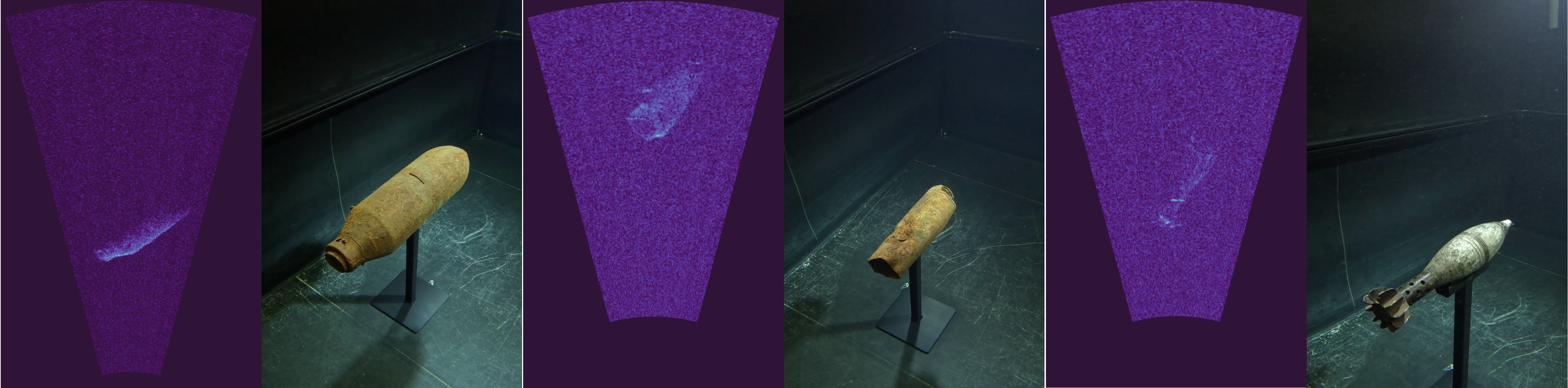

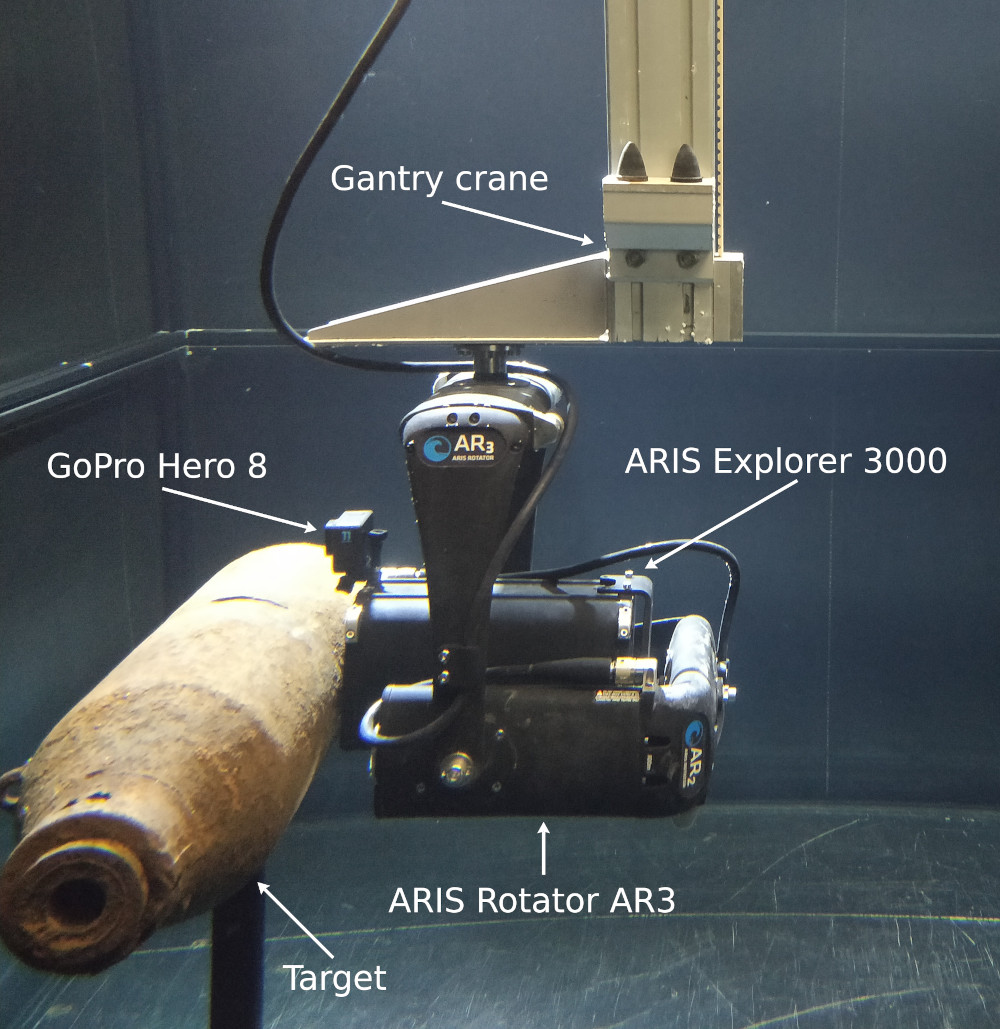

We present a dataset of synchronized multimodal data for the acoustic and optical sensing of UXO underwater. Using an ARIS 3000 imaging sonar, a GoPro Hero 8, and a custom-designed gantry crane, we recorded close to 100 trajectories and over 74,000 frames of three distinct types of UXO in a controlled experimental environment. The dataset includes raw and polar-transformed sonar frames, annotated camera frames, sonar and target poses, textured 3D models, calibration matrices, and more. It is intended for research on the acoustic and optical perception of UXO underwater.

This dataset has the following properties:

- Sonar scans of multiple UXO using an ARIS Explorer 3000 imaging sonar.

- Matched GoPro UHD frames for most sonar frames.

- Known and accurate transforms between sonar and targets.

- Known details of the UXO targets, including munition types, dimensions, and 3D models.

- Tracked scan trajectories that are typical and achievable for non-experimental environments.

- Publicly available at <https://zenodo.org/records/11068046>.

- Export scripts available at <https://github.com/dfki-ric/uxo-dataset2024>.
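Because both the sonar and the target poses are known, the pose of a target relative to the sonar follows from composing rigid transforms. The sketch below assumes both poses are given as 4x4 homogeneous matrices in a common world (gantry) frame; the helper names are hypothetical, and the actual layout of the dataset's pose and calibration files is not reproduced here.

```python
# Minimal sketch, ASSUMING poses are 4x4 homogeneous rigid transforms expressed
# in a shared world/gantry frame. Helper names are hypothetical, not dataset APIs.
Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two 4x4 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def invert_rigid(t: Matrix) -> Matrix:
    """Invert a rigid transform [R | p] as [R^T | -R^T p] (no general inverse needed)."""
    r_t = [[t[j][i] for j in range(3)] for i in range(3)]  # transpose of rotation
    p = [t[i][3] for i in range(3)]
    p_inv = [-sum(r_t[i][k] * p[k] for k in range(3)) for i in range(3)]
    return [r_t[i] + [p_inv[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def target_in_sonar(t_world_sonar: Matrix, t_world_target: Matrix) -> Matrix:
    """Pose of the target expressed in the sonar frame: inv(T_ws) @ T_wt."""
    return matmul(invert_rigid(t_world_sonar), t_world_target)
```

For example, a sonar rotated 90° about the vertical axis at the origin sees a target located at world position (0, 1, 0) at (1, 0, 0) in its own frame, i.e. straight along its x-axis.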

This repository contains the code we used to prepare the raw recordings for export. A more in-depth description of the data can be found with the dataset itself and in the accompanying paper.

When using this dataset or the code used to process it, please cite the following paper:

```bibtex
@inproceedings{Dahn2024-uxo,
  title = {An Acoustic and Optical Dataset for the Perception of Underwater Unexploded Ordnance (UXO)},
  author = {Dahn, Nikolas and Bande Firvida, Miguel and Sharma, Proneet and Christensen, Leif and Geisler, Oliver and Mohrmann, Jochen and Frey, Torsten and Sanghamreddy, Prithvi Kumar and Kirchner, Frank},
  keywords = {UXO, unexploded ordnance, dataset, imaging sonar},
  booktitle = {2024 IEEE OCEANS},
  year = {2024},
  pages = {},
  doi = {},
  url = {},
}
```

The exported data uses simple, well-established formats, namely .pgm, .jpg, .csv, .yaml, .json, and .txt. For convenience, we provide a script that loads an exported recording and lets you step through the data points in a synchronized fashion using the arrow keys. To view a recording, use the following command:

```bash
python scripts/view_recording.py data_export/recordings/<target_type>/<recording-folder>
```

Reducing the dataset to the parts relevant to us posed some challenges, largely due to oversights on our part. First, the GoPro does not record a synchronized timestamp, so the best way for us to align its footage with the ARIS data was to match the motion visible in both. Second, the GoPro's recording and file-naming scheme, combined with some dropouts due to low battery, made it difficult to find the corresponding clip for every recording. Computing the optical flow of both data streams helped with both issues. After matching, the motion onset identified in the ARIS data was used to trim the data of the other sensors. In general, decisions were always made based on, and in favor of, the ARIS data.
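Matching by motion can be illustrated by cross-correlating the two optical-flow magnitude series and picking the lag with the strongest agreement. This is a minimal sketch, not the procedure in prep_x_match_recordings.py (which lets the user adjust offsets interactively); it assumes both series have already been resampled to a common rate, and `estimate_lag` is a hypothetical helper.

```python
import math


def zscore(x: list[float]) -> list[float]:
    """Shift to zero mean and scale to unit variance (a constant signal keeps scale 1)."""
    mean = sum(x) / len(x)
    std = math.sqrt(sum((v - mean) ** 2 for v in x) / len(x)) or 1.0
    return [(v - mean) / std for v in x]


def estimate_lag(aris_flow: list[float], gopro_flow: list[float], max_lag: int) -> int:
    """Return the lag (in samples) at which gopro_flow best matches aris_flow.

    A lag L means gopro_flow[i + L] lines up with aris_flow[i]; a positive lag
    therefore means the motion shows up L samples later in the GoPro series.
    ASSUMPTION: both series share the same (hypothetical) sampling rate.
    """
    a, g = zscore(aris_flow), zscore(gopro_flow)
    best_lag, best_score = 0, -math.inf
    for lag in range(-max_lag, max_lag + 1):
        # Only compare samples where both series overlap at this lag.
        products = [a[i] * g[i + lag] for i in range(len(a)) if 0 <= i + lag < len(g)]
        if len(products) < 2:
            continue
        score = sum(products) / len(products)  # mean product over the overlap
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag
```

Dividing the returned lag by the common sampling rate gives the time offset in seconds, which could serve as a starting point for manual fine-tuning.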

To extract and prepare the data from the raw recordings, we ran the scripts in this repository in filename order. Relevant options are read from, and documented in, the accompanying config.yaml file. In particular, we used the following scripts:

- prep_1_aris_extract.py: extract individual frames as .pgm files and metadata as .csv from the ARIS recordings.

- prep_2_aris_to_polar.py: convert the extracted ARIS data into other formats, namely polar-transformed .png images representing what the sonar was actually "seeing". Export to .csv point clouds is also possible.

- prep_3_aris_calc_optical_flow.py: calculate the optical flow magnitudes for each ARIS recording, saved as .csv files.

- prep_4_aris_find_offsets.py: graphical user interface to manually mark the motion onset and end for each ARIS recording.

- prep_5_gantry_extract.py: extract the gantry crane trajectories as .csv files from the recorded ROS bags.

- prep_6_gantry_find_offsets.py: automatically extract the motion onsets and ends from each gantry crane trajectory.

- prep_7_gopro_cut.bash: cut the GoPro recordings into clips according to the timestamps extracted from the audio tracks.

- prep_8_gopro_downsample.bash: re-encode the previously cut GoPro clips at lower resolutions.

- prep_9_gopro_calc_optical_flow.py: calculate the GoPro clips' optical flow magnitudes, saved as .csv files.

- prep_x_match_recordings.py: graphical user interface to pair ARIS recordings and GoPro clips and adjust the time offsets between them. Output is a .csv file.

- release_1_export.py: assemble the dataset for export based on the previous preprocessing steps.

- release_2_archive.bash: pack the preprocessed and exported files into archives.
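The polar transform performed by prep_2_aris_to_polar.py turns a grid of range bins by beams into the fan-shaped view the sonar actually covers. The following is a minimal nearest-neighbour sketch of that idea, not the script's implementation: it assumes beams uniformly spaced across the field of view and range bins uniformly spaced between `r_min` and `r_max`. The beam ordering and exact beam angles of real ARIS frames are not taken into account, and `polar_to_cartesian` is a hypothetical name.

```python
import math


def polar_to_cartesian(frame, fov_deg, r_min, r_max, width, height):
    """Render a fan image from frame[bin][beam] by inverse nearest-neighbour lookup.

    ASSUMPTIONS: bin 0 is nearest to the sonar, beam 0 lies at -fov/2, and both
    beams and bins are uniformly spaced. The sonar sits at the bottom centre of
    the output (height rows x width columns); pixels outside the fan stay 0.
    """
    n_bins, n_beams = len(frame), len(frame[0])
    half_fov = math.radians(fov_deg) / 2
    x_extent = r_max * math.sin(half_fov)  # half-width of the fan in metres
    img = [[0] * width for _ in range(height)]
    for row in range(height):
        y = r_max * (height - 1 - row) / (height - 1)  # distance ahead of the sonar
        for col in range(width):
            x = x_extent * (2 * col / (width - 1) - 1)  # lateral offset
            r = math.hypot(x, y)
            theta = math.atan2(x, y)  # angle from the sonar's forward axis
            if not (r_min <= r <= r_max and -half_fov <= theta <= half_fov):
                continue
            b = round((r - r_min) / (r_max - r_min) * (n_bins - 1))
            k = round((theta + half_fov) / (2 * half_fov) * (n_beams - 1))
            img[row][col] = frame[b][k]
    return img
```

Looking up source samples for every output pixel (rather than projecting samples forward) avoids holes in the fan at long ranges, where neighbouring beams spread far apart.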

Further details and some documentation can be found in the scripts themselves. Since some of the packages interact with ROS1 (e.g. for extracting data from rosbags), you may have to set up an Ubuntu 20.04 Docker container. Alternatively, you can try RoboStack to set up your ROS1 environment.
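The automatic onset and end detection that prep_6_gantry_find_offsets.py performs on the gantry trajectories can be approximated by thresholding the crane's speed. This is a sketch under stated assumptions rather than the script's actual logic: timestamps are in seconds, positions are (x, y, z) in metres, and the `speed_threshold` default is a hypothetical value that would need tuning against real, noisy trajectories.

```python
import math


def find_motion_window(t, pos, speed_threshold=0.005):
    """Return (onset_time, end_time) of gantry motion, or None if it never moves.

    t: timestamps in seconds; pos: (x, y, z) positions in metres of equal length.
    speed_threshold (m/s) is a HYPOTHETICAL default, not taken from the repository.
    """
    moving = []  # indices i whose segment (i-1 -> i) exceeds the threshold
    for i in range(1, len(t)):
        dt = t[i] - t[i - 1]
        if dt <= 0:
            continue  # skip duplicate or out-of-order timestamps
        if math.dist(pos[i], pos[i - 1]) / dt > speed_threshold:
            moving.append(i)
    if not moving:
        return None
    # Motion starts at the beginning of the first moving segment and
    # ends at the end of the last one.
    return t[moving[0] - 1], t[moving[-1]]
```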