Viblo tutorial: https://viblo.asia/p/cung-tim-hieu-he-thong-dich-may-mang-no-ron-tu-dau-tu-bleu-score-den-beam-search-decoding-oK9VyxDXLQR

English-Vietnamese Neural Machine Translation implemented from scratch with PyTorch.

Create a virtual environment, then install the required packages:

python -m venv venv

venv\Scripts\activate

pip install -r requirements.txt

If you would like to utilize your own dataset and hyperparameters, there are two methods to achieve this:

- 1. Modifying the default config.yml file: Open the config.yml file and adjust the variables as needed.

- 2. Specifying a custom configuration file: By default, the configuration file path is set to config.yml. To run several experiments side by side, create your own configuration file in YAML format and pass its path with the --config flag. Note that the code currently reads configuration files in YAML format only. For instance:

python train.py --config my_config.yml
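As a rough illustration of how a script might honor the --config flag, the sketch below parses the flag with argparse and reads the file with PyYAML. It is not the repository's actual code: the function names `parse_args`, `load_config`, and `main` are hypothetical, and the only details taken from this README are the flag name and the default path config.yml.

```python
import argparse


def parse_args(argv=None):
    """Parse the command line; --config falls back to config.yml."""
    parser = argparse.ArgumentParser(description="Hypothetical config-aware entry point")
    parser.add_argument("--config", default="config.yml",
                        help="path to a YAML configuration file")
    return parser.parse_args(argv)


def load_config(path):
    """Read a YAML file into a dict (needs the third-party PyYAML package)."""
    import yaml  # imported lazily so argument parsing works without PyYAML

    with open(path, encoding="utf-8") as f:
        # safe_load returns None for an empty file, so fall back to {}
        return yaml.safe_load(f) or {}


def main(argv=None):
    """Hypothetical entry point: load the chosen config and report its size."""
    args = parse_args(argv)
    config = load_config(args.config)
    print(f"Loaded {len(config)} top-level settings from {args.config}")
```

Calling `main(["--config", "my_config.yml"])` would mirror the command above, while `main([])` would fall back to config.yml.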

The complete pipeline encompasses three primary processes:

- 1. ⚙️ Preprocessing: This involves reading English-Vietnamese parallel corpora, tokenizing the sentences, building vocabularies, and mapping the tokenized sentences to tensors. The resulting tensors are then saved into a DataLoader, along with the trained tokenizers containing the vocabulary for both languages. To execute the preprocessing step, run the following command:

python preprocess.py --config config.yml
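To make the preprocessing idea concrete, here is a minimal pure-Python sketch of tokenizing, building a vocabulary, and mapping a sentence to a fixed-length list of ids. It is only an illustration under assumptions: the special tokens, the whitespace tokenizer, and the function names are hypothetical, and the real preprocess.py may use different tokenizers and would wrap the ids in PyTorch tensors.

```python
from collections import Counter

# Hypothetical special tokens; the project's tokenizers may use others.
PAD, UNK, SOS, EOS = "<pad>", "<unk>", "<sos>", "<eos>"


def tokenize(sentence):
    """Lowercase and split on whitespace (a stand-in for a real tokenizer)."""
    return sentence.lower().split()


def build_vocab(sentences, min_freq=1):
    """Map every token seen at least min_freq times to an integer id."""
    counts = Counter(tok for s in sentences for tok in tokenize(s))
    vocab = {tok: i for i, tok in enumerate([PAD, UNK, SOS, EOS])}
    for tok, freq in counts.most_common():
        if freq >= min_freq and tok not in vocab:
            vocab[tok] = len(vocab)
    return vocab


def encode(sentence, vocab, max_len):
    """Turn a sentence into max_len ids: <sos> tokens <eos>, then padding."""
    tokens = tokenize(sentence)[: max_len - 2]  # leave room for <sos> and <eos>
    ids = [vocab[SOS]] + [vocab.get(t, vocab[UNK]) for t in tokens] + [vocab[EOS]]
    return ids + [vocab[PAD]] * (max_len - len(ids))


vocab = build_vocab(["I love machine translation", "I love PyTorch"])
print(encode("I love NMT", vocab, max_len=8))  # [2, 4, 5, 1, 3, 0, 0, 0]
```

The unseen word "NMT" maps to the `<unk>` id, and the trailing zeros are padding so that sentences of different lengths can be batched together.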

- 2. 🚄 Training and Validation: In this step, the prepared tokenizers and DataLoaders are loaded. A Seq2Seq model is created, and if available, its checkpoint is loaded. The training process is initiated, and the results are recorded in a CSV file. To train and validate the model, use the following command:

python train.py --config config.yml
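Recording results in a CSV file can be sketched with the standard library alone. The snippet below is an assumption-laden illustration, not the project's train.py: the helper names and the column names (`epoch`, `train_loss`, `valid_loss`) are hypothetical, and the actual file layout may differ.

```python
import csv
import os


def append_results(csv_path, row):
    """Append one epoch's metrics, writing the header if the file is new."""
    is_new = not os.path.exists(csv_path) or os.path.getsize(csv_path) == 0
    with open(csv_path, "a", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=list(row))
        if is_new:
            writer.writeheader()
        writer.writerow(row)


def read_results(csv_path):
    """Return all recorded rows as dicts (values come back as strings)."""
    if not os.path.exists(csv_path):
        return []
    with open(csv_path, newline="", encoding="utf-8") as f:
        return list(csv.DictReader(f))
```

Appending rather than overwriting keeps earlier epochs in the file when training resumes from a checkpoint, for example `append_results("results.csv", {"epoch": 1, "train_loss": 2.5, "valid_loss": 2.7})`.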

- 3. 🧪 Testing: This step involves testing the pretrained model on the testing DataLoader. For each pair of sentences, the source, target, predicted sentences, and their respective scores are printed. To perform the testing, execute the following command:

python test.py --config config.yml
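This README does not say which score test.py prints. Assuming it is a BLEU-style score, as the linked tutorial and references suggest, a simplified sentence-level BLEU can be sketched as follows. The function names are hypothetical, and this version uses no smoothing, so any sentence with no matching 4-gram scores 0.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Count the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(reference, candidate, max_n=4):
    """Unsmoothed BLEU of one candidate against one reference (token lists)."""
    if not candidate:
        return 0.0
    log_precisions = []
    for n in range(1, max_n + 1):
        cand, ref = ngrams(candidate, n), ngrams(reference, n)
        total = sum(cand.values())
        # Clip each candidate n-gram count by its count in the reference.
        clipped = sum(min(count, ref[gram]) for gram, count in cand.items())
        if total == 0 or clipped == 0:
            return 0.0
        log_precisions.append(math.log(clipped / total))
    # Brevity penalty: penalize candidates shorter than the reference.
    if len(candidate) > len(reference):
        bp = 1.0
    else:
        bp = math.exp(1 - len(reference) / len(candidate))
    return bp * math.exp(sum(log_precisions) / max_n)


target = "tôi thích dịch máy nơ ron".split()
print(sentence_bleu(target, target))                  # 1.0 for a perfect match
print(sentence_bleu(target, "xin chào bạn".split()))  # 0.0, no overlap
```

Corpus-level evaluation normally aggregates n-gram counts over all sentence pairs before computing the score, which is why per-sentence values like these tend to be harsher than the reported corpus BLEU.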

Alternatively, you can run the full pipeline with a single command using the following:

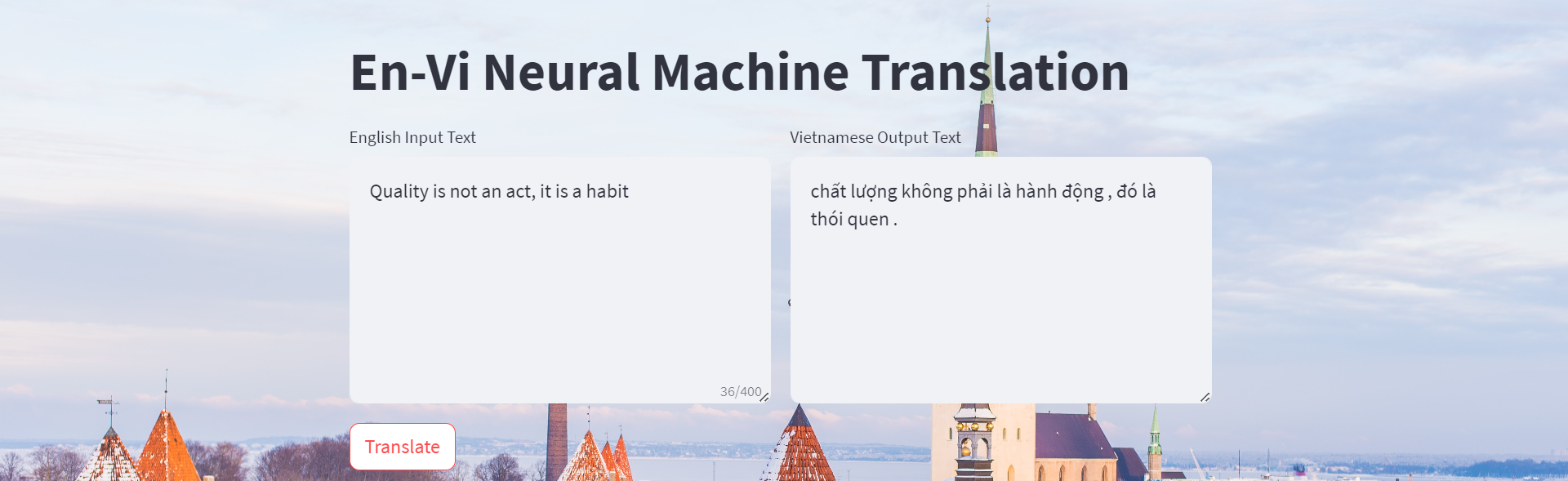

bash full_pipeline.sh --config config.yml

To facilitate an intuitive interaction with your trained model, follow the steps below to host a web server using Streamlit:

- Run the command below to start the server:

streamlit run inference_streamlit.py -- --config config.yml

You can replace config.yml with your own configuration file.

- If you prefer to test the server on Google Colab or do not have access to a GPU device, you can conveniently host your server there. Simply access the demo Google Colab Streamlit link:

This link will allow you to utilize my trained model and tokenizers.

Make sure the required dependencies are installed before starting the Streamlit server. You can then interact with your trained model through the web interface.

- https://github.com/bentrevett/pytorch-seq2seq

- https://github.com/pbcquoc/transformer

- https://web.stanford.edu/class/archive/cs/cs224n/cs224n.1214/

- https://github.com/hyunwoongko/transformer

- https://machinelearningmastery.com/beam-search-decoder-natural-language-processing/

- https://en.wikipedia.org/wiki/BLEU