Tai Wang*

Xiaohan Mao*

Chenming Zhu*

Runsen Xu

Ruiyuan Lyu

Peisen Li

Xiao Chen

Wenwei Zhang

Kai Chen

Tianfan Xue

Xihui Liu

Cewu Lu

Dahua Lin

Jiangmiao Pang

Shanghai AI Laboratory, Shanghai Jiao Tong University, The University of Hong Kong, The Chinese University of Hong Kong, Tsinghua University

🤖 Demo

We tested our code under the following environment:

- Ubuntu 20.04

- NVIDIA Driver: 525.147.05

- CUDA 12.0

- Python 3.8.18

- PyTorch 1.11.0+cu113

- PyTorch3D 0.7.2
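To compare a local setup against the versions listed above, a small check along the following lines may help. It is only a sketch: the helper names are hypothetical, the distribution names `torch` and `pytorch3d` are assumed to match how the packages were installed with pip, and a version that differs from the tested one is not necessarily incompatible.

```python
import platform
from importlib import metadata

# Versions copied from the tested environment listed above.
TESTED_PYTHON = "3.8.18"
TESTED_PACKAGES = {"torch": "1.11.0+cu113", "pytorch3d": "0.7.2"}


def installed_version(dist_name):
    """Return the installed version of a pip distribution, or None if absent."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


def report_environment():
    """Return (name, tested_version, found_version) rows for Python and each package."""
    rows = [("python", TESTED_PYTHON, platform.python_version())]
    for name, tested in TESTED_PACKAGES.items():
        rows.append((name, tested, installed_version(name)))
    return rows


for name, tested, found in report_environment():
    shown = found if found is not None else "not installed"
    status = "matches" if found == tested else "differs"
    print(f"{name:10s} tested={tested:14s} found={shown:14s} {status}")
```

The NVIDIA driver and system CUDA versions are not covered here; `nvidia-smi` reports both.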

- Clone this repository.

git clone https://github.com/OpenRobotLab/EmbodiedScan.git

cd EmbodiedScan

- Install PyTorch3D.

conda create -n embodiedscan python=3.8 -y # pytorch3d needs python>3.7

conda activate embodiedscan

# We recommend installing pytorch3d with pre-compiled packages

# For example, to install for Python 3.8, PyTorch 1.11.0 and CUDA 11.3

# For more information, please refer to https://github.com/facebookresearch/pytorch3d/blob/main/INSTALL.md#2-install-wheels-for-linux

pip install --no-index --no-cache-dir pytorch3d -f https://dl.fbaipublicfiles.com/pytorch3d/packaging/wheels/py38_cu113_pyt1110/download.html

- Install EmbodiedScan.

# We plan to make EmbodiedScan easier to install by "pip install EmbodiedScan".

# Please stay tuned for the future official release.

# Make sure you are under ./EmbodiedScan/

pip install -e .

Please download ScanNet, 3RScan and Matterport3D from their official websites.
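The wheel index in the PyTorch3D installation step encodes the Python, CUDA and PyTorch versions in its directory name (`py38_cu113_pyt1110`). If your versions differ, the tag can be derived as sketched below. The function names are hypothetical, and the naming scheme is inferred from the URL above and the PyTorch3D install notes; not every combination has a pre-built wheel, so check that the resulting URL exists before relying on it.

```python
WHEEL_ROOT = "https://dl.fbaipublicfiles.com/pytorch3d/packaging/wheels"


def pytorch3d_wheel_tag(python_version, torch_version, cuda_version):
    """Build the directory tag used in the pre-built wheel URL.

    python_version: (major, minor) tuple, e.g. (3, 8)
    torch_version:  e.g. "1.11.0+cu113" (any local suffix after "+" is dropped)
    cuda_version:   CUDA version PyTorch was built with, e.g. "11.3"
    """
    major, minor = python_version
    torch_digits = torch_version.split("+")[0].replace(".", "")
    cuda_digits = cuda_version.replace(".", "")
    return f"py{major}{minor}_cu{cuda_digits}_pyt{torch_digits}"


def pytorch3d_wheel_index(tag):
    """Return the index page to pass to `pip install ... -f <url>`."""
    return f"{WHEEL_ROOT}/{tag}/download.html"


# Reproduces the tag used in the install command above.
tag = pytorch3d_wheel_tag((3, 8), "1.11.0+cu113", "11.3")
print(tag)
print(pytorch3d_wheel_index(tag))
```

With PyTorch already installed, the inputs can be read from `sys.version_info`, `torch.__version__` and `torch.version.cuda`.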

We will release the demo data, re-organized file structure, post-processing script and annotation files in the near future. Please stay tuned.

We provide a simple tutorial as a guideline for basic analysis and visualization of our dataset. You are welcome to try it and post your suggestions!

We will release the code for model training and benchmarking, together with pretrained checkpoints, in 2024 Q1.

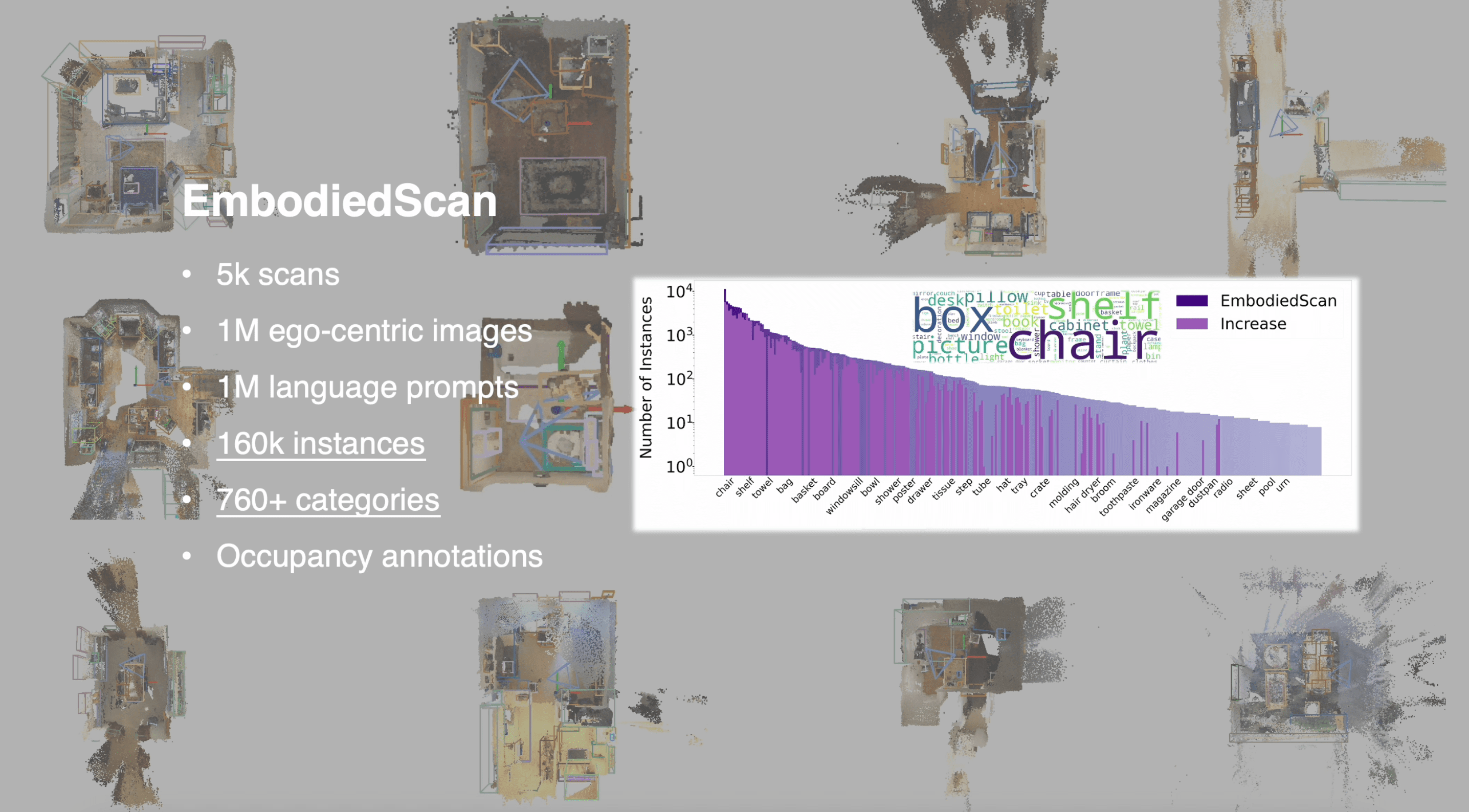

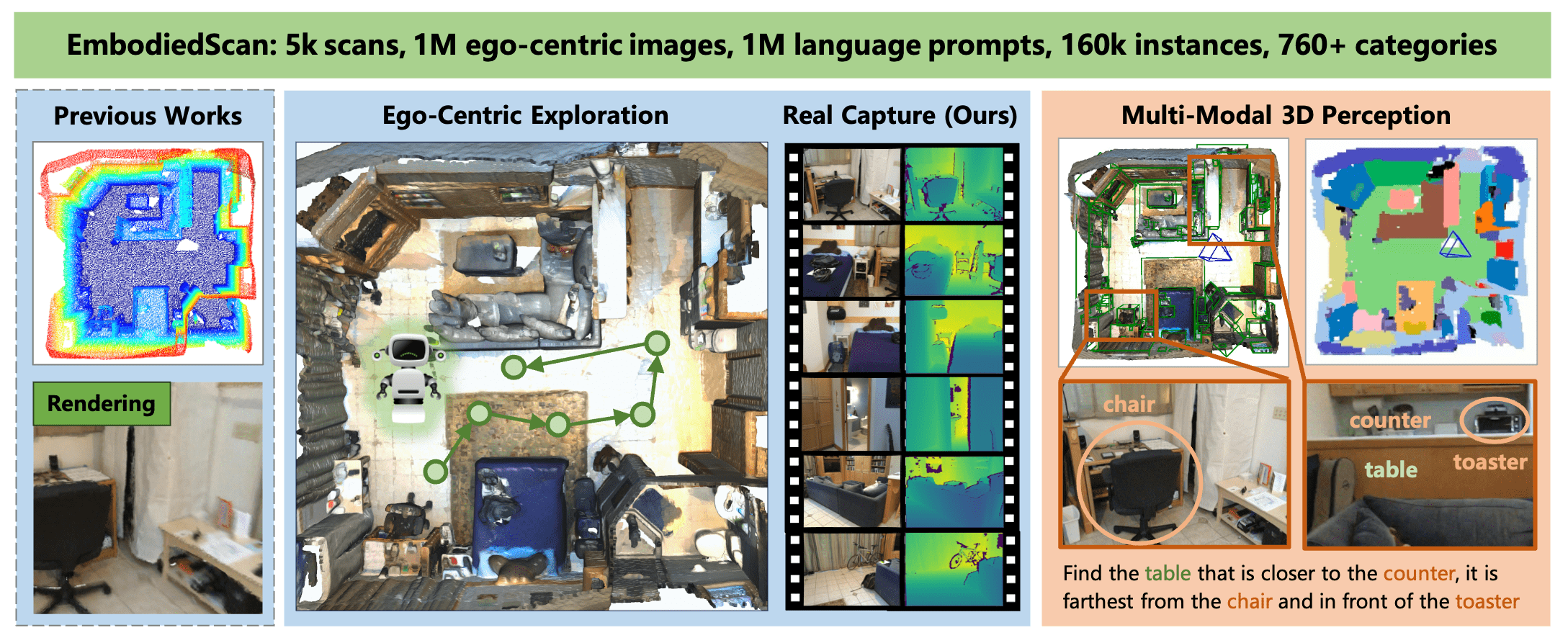

Embodied Perceptron accepts an RGB-D sequence with any number of views, along with text, as multi-modal input. It uses classical encoders to extract features for each modality and adopts dense and isomorphic sparse fusion with corresponding decoders for different predictions. The 3D features integrated with the text features can be further used for language-grounded understanding.

Please see the paper for details of our two benchmarks: the fundamental 3D perception benchmark and the language-grounded benchmark. The dataset is still scaling up, and the benchmarks are being polished and extended. Please stay tuned for our updates.

- Paper and partial code release.

- Release EmbodiedScan annotation files.

- Polish dataset APIs and related code.

- Release Embodied Perceptron pretrained models.

- Release code for baselines and benchmarks.

- Full release and further updates.

If you find our work helpful, please cite:

@article{wang2023embodiedscan,

author={Wang, Tai and Mao, Xiaohan and Zhu, Chenming and Xu, Runsen and Lyu, Ruiyuan and Li, Peisen and Chen, Xiao and Zhang, Wenwei and Chen, Kai and Xue, Tianfan and Liu, Xihui and Lu, Cewu and Lin, Dahua and Pang, Jiangmiao},

title={EmbodiedScan: A Holistic Multi-Modal 3D Perception Suite Towards Embodied AI},

journal={Arxiv},

year={2023},

}

If you use our dataset and benchmark, please kindly cite the original datasets involved in our work. BibTeX entries are provided below.

Dataset BibTeX

@inproceedings{dai2017scannet,

title={ScanNet: Richly-annotated 3D Reconstructions of Indoor Scenes},

author={Dai, Angela and Chang, Angel X. and Savva, Manolis and Halber, Maciej and Funkhouser, Thomas and Nie{\ss}ner, Matthias},

booktitle = {Proceedings IEEE Computer Vision and Pattern Recognition (CVPR)},

year = {2017}

}

@inproceedings{Wald2019RIO,

title={RIO: 3D Object Instance Re-Localization in Changing Indoor Environments},

author={Wald, Johanna and Avetisyan, Armen and Navab, Nassir and Tombari, Federico and Niessner, Matthias},

booktitle={Proceedings IEEE International Conference on Computer Vision (ICCV)},

year = {2019}

}

@article{Matterport3D,

title={{Matterport3D}: Learning from {RGB-D} Data in Indoor Environments},

author={Chang, Angel and Dai, Angela and Funkhouser, Thomas and Halber, Maciej and Niessner, Matthias and Savva, Manolis and Song, Shuran and Zeng, Andy and Zhang, Yinda},

journal={International Conference on 3D Vision (3DV)},

year={2017}

}

This work is licensed under the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

- OpenMMLab: Our dataset code uses MMEngine and our model is built upon MMDetection3D.

- PyTorch3D: We use some functions supported in PyTorch3D for efficient computations on fundamental 3D data structures.

- ScanNet, 3RScan, Matterport3D: Our dataset uses the raw data from these datasets.

- ReferIt3D: We follow SR3D's approach to obtain the language prompt annotations.

- SUSTechPOINTS: Our annotation tool is developed based on the open-source framework used by SUSTechPOINTS.