Maintainers - Eungbean Lee

A curated list of deep learning resources for computer vision, inspired by awesome-deep-vision, which has not been maintained since 2017.

I am using this list as a milestone for studying computer vision. I have rewritten it to include the latest papers as well as other important papers.

- Fork & Star This Repo.

- After reading a paper, mark its checkbox as checked.

- [ ] Unread Awesome Paper (CONF 2019), Someone Genius [Paper]

- [x] Read Awesome Paper (CONF 2019), Someone Genius [Paper]

* [ ] Unread Awesome Paper (CONF 2019), Someone Genius [[Paper]](#)

* [x] Read Awesome Paper (CONF 2019), Someone Genius [[Paper]](#)

- If you want to display the day you read a paper, add a badge next to its entry.

I'll give you some useful badges.

Just copy, paste, and modify!

You can make your own badge here.

- Enjoy!

- Awesome Deep Learning by Terry Um

- Awesome Deep Vision by Jiwon Kim, Heesoo Myeong, Myungsub Choi, Jung Kwon Lee, Taeksoo Kim

- awesome-computer-vision by Jia-Bin Huang

- Research Paper Reading List by Ted Xiao

- General Resources

- Paper lists

- Courses

- Lab Blogs

- Personal Blogs

- Books

- Videos

- Papers

- ImageNet Classification

- Object Detection

- Video Object segmentation

- Object Tracking

- Low-Level Vision

- Edge Detection

- Semantic Segmentation

- Visual Attention and Saliency

- Object Recognition

- Human Pose Estimation

- Understanding CNN

- Image and Language

- Image Generation

- GAN

- 3D

- Other Topics

- Software

- Tutorials

- Awesome Deep Learning

- Deep Learning Papers Reading Roadmap

- Awesome Deep Vision

- Deep Reinforcement Learning Papers

- ML@B Summer Reading List

- Machine learning

- [UC Berkeley] CS 189/289A: Introduction to Machine Learning, Fall 2015, [UC Berkeley login required]

- EE 221A: Nonlinear Systems, UC Berkeley, Fall 2016

- Deep learning

- [Udacity] ND101: Deep Learning - $999

- [Oxford] Deep Learning by Prof. Nando de Freitas

- [NYU] Deep Learning by Prof. Yann LeCun

- IEOR 265: Learning and Optimization, UC Berkeley, Spring 2016

- Deep Learning for everybody (Korean), by Hun Kim

- Deep Vision

- More Deep Learning

- [Stanford] CS224d: Deep Learning for Natural Language Processing

- CS 294-129: Designing, Visualizing, and Understanding Deep Neural Networks, UC Berkeley, Fall 2016 [UC Berkeley login required]

- CS 294-112: Deep Reinforcement Learning, UC Berkeley, Spring 2017

- CS 294-131: Special Topics in Deep Learning, UC Berkeley, Spring 2017

- BAIR blog

- DeepMind blog

- OpenAI blog

- Google Research blog

- gBrain blog

- FAIR blog

- NVIDIA blog

- MSR blog

- Facebook's AI Painting@Wired

- Towards Data Science

- Lilian Weng, OpenAI

- Eric Jang, Robotics at Google

- Alex Irpan, Robotics at Google

- Deep down the rabbit hole: CVPR 2015 and beyond@Tombone's Computer Vision Blog

- CVPR recap and where we're going@Zoya Bylinskii (MIT PhD Student)'s Blog

- Inceptionism: Going Deeper into Neural Networks@Google Research

- Implementing Neural networks

- Free Online Books
  - Deep Learning by Ian Goodfellow, Yoshua Bengio, and Aaron Courville
  - Neural Networks and Deep Learning by Michael Nielsen
  - Deep Learning Tutorial by LISA lab, University of Montreal

- Books

- Talks

- Deep Learning, Self-Taught Learning and Unsupervised Feature Learning By Andrew Ng

- Recent Developments in Deep Learning By Geoff Hinton

- The Unreasonable Effectiveness of Deep Learning by Yann LeCun

- Deep Learning of Representations by Yoshua Bengio

- Siraj Raval

- [Terry's Deep Learning Talk (Korean)](https://www.youtube.com/playlist?list=PL0oFI08O71gKEXITQ7OG2SCCXkrtid7Fq) by Terry Um

- SIFT (IJCV 2004), DG Lowe [Paper] [Post]

- HOG (CVPR 2005), N Dalal [Paper]

- Recovering High Dynamic Range Radiance Maps from Photographs (SIGGRAPH 1997), PE Debevec [Paper]

- Poisson Image Editing (SIGGRAPH 2003), P Pérez [Paper]

- Single Image Haze Removal Using Dark Channel Prior (TPAMI 2011), Kaiming He [Paper]

- Digital Photography with Flash and No-Flash Image Pairs (2004), Georg Petschnigg [Paper]

- Bilateral Filter (1998), C Tomasi [Paper]

- Guided Filter (ECCV 2010), Kaiming He [Paper] [Project]

- Rolling Guidance Filter (ECCV 2014), Q Zhang [[Paper]](https://pdfs.semanticscholar.org/.../c4000f5c71c22fb4a22fcf5dd0...) [Project]

- WLS Filter (SIGGRAPH 2008), Z Farbman [Paper] [Project]

- Deep Joint Filtering (ECCV 2016), Y Li [Paper]

- Deep Multi-scale Convolutional Neural Network for Dynamic Scene Deblurring (arXiv:1612.02177), Seungjun Nah [Paper]

- Colorization using optimization (ACM TOG 2004), A Levin [Paper]

- Domain transform for edge-aware image and video processing (ACM TOG 2011), ESL Gastal [Paper]

(from Alex Krizhevsky, Ilya Sutskever, Geoffrey E. Hinton, ImageNet Classification with Deep Convolutional Neural Networks, NIPS, 2012.)

- ResNet (arXiv:1512.03385), K He [Paper][Slide]

- PReLU/Weight Initialization (arXiv:1502.01852), Kaiming He, Xiangyu Zhang, Shaoqing Ren, Jian Sun [Paper]

- GoogLeNet (CVPR 2015), Christian Szegedy, Wei Liu, Yangqing Jia, Pierre Sermanet, Scott Reed, Dragomir Anguelov, Dumitru Erhan, Vincent Vanhoucke, Andrew Rabinovich [Paper]

- VGG-Net (ICLR 2015), Karen Simonyan and Andrew Zisserman [Web] [Paper]

- AlexNet (NIPS 2012), Alex Krizhevsky, Ilya Sutskever, Geoffrey E. Hinton [Paper]

(from Shaoqing Ren, Kaiming He, Ross Girshick, Jian Sun, Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks, arXiv:1506.01497.)

- PVANET (arXiv:1608.08021), Kye-Hyeon Kim [Paper] [Code]

- OverFeat (ICLR 2014), P Sermanet [Paper]

- R-CNN (CVPR 2014), Ross Girshick [Paper-CVPR14] [Paper-arXiv14]

- SPP: Spatial Pyramid Pooling (ECCV 2014), Kaiming He [Paper]

- Fast R-CNN (arXiv:1504.08083), Ross Girshick [Paper]

- Faster R-CNN (arXiv:1506.01497), Shaoqing Ren [Paper]

- R-CNN minus R (arXiv:1506.06981), Karel Lenc [Paper]

- End-to-end people detection in crowded scenes (arXiv:1506.04878), Russell Stewart [Paper]

- YOLO: Real-Time Object Detection, Joseph Redmon [Project] [C Code] [TF Code]

- Inside-Outside Net (arXiv:1512.04143), Sean Bell [Paper]

- Deep Residual Network (arXiv:1512.03385), Kaiming He [Paper]

- Weakly Supervised Object Localization with Multi-fold Multiple Instance Learning (arXiv:1503.00949), [Paper]

- R-FCN (arXiv:1605.06409), Jifeng Dai [Paper] [Code]

- SSD: Single Shot MultiBox Detector (arXiv:1512.02325v2) [Paper] [Code]

- Speed/accuracy trade-offs for modern convolutional object detectors (arXiv:1611.10012), Jonathan Huang [Paper]

- Multispectral Deep Neural Networks for Pedestrian Detection (arXiv:1611.02644), J Liu [Paper]

- R-FCN (NIPS 2016), Jifeng Dai [Paper]

- FPN (CVPR 2017), TY. Lin [Paper]

- Mask R-CNN (ICCV 2017), K. He [Paper]

- RetinaNet (ICCV 2017), TY. Lin [Paper]

- SNIP (arXiv:1711), B Singh [Paper]

- SNIPER (arXiv:1805), B Singh [Paper]

- Delving Deeper into Convolutional Networks for Learning Video Representations (ICLR 2016), Nicolas Ballas [Paper]

- Deep Multi-Scale Video Prediction Beyond Mean Square Error (ICLR 2016), Michael Mathieu [Paper]

- Two-Stream Convolutional Networks for Action Recognition in Videos (arXiv:1406.2199), K Simonyan [Paper]

- Learning Spatiotemporal Features with 3D Convolutional Networks (arXiv:1412.0767), D Tran [Paper]

- (CVPR2017) Tokmakov et al., “Learning motion patterns in videos”
  - MP-Net: takes the optical flow field of two consecutive frames of a video sequence as input and produces per-pixel motion labels.

- (arXiv2017) Tokmakov et al., “Learning video object segmentation with visual memory”
  - Integrates a stream of appearance information with a visual memory module based on a C-GRU (convolutional gated recurrent unit).

- (CVPR2017) Jain et al., “FusionSeg: Learning to combine motion and appearance for fully automatic segmentation of generic objects in videos.”
  - FSEG: designs a two-stream fully convolutional network to combine appearance and motion information.

- (arXiv2017) Vijayanarasimhan et al., “SfM-Net: Learning of structure and motion from video.”
  - A geometry-aware CNN that predicts depth, segmentation, camera motion, and rigid object motions.

- (ECCV2018) Song et al., “Pyramid dilated deeper ConvLSTM for video salient object detection.”
  - A pyramid dilated bidirectional ConvLSTM architecture with CRF-based post-processing.

- (CVPR2018) Li et al., “Instance embedding transfer to unsupervised video object segmentation.”
  - Transfers the knowledge encapsulated in image-based instance embedding networks and adapts them to video object segmentation. The authors propose a motion-based bilateral network, then build a graph cut model to propagate the pixel-wise labels.

- (NIPS2018) Goel et al., “Unsupervised video object segmentation for deep reinforcement learning.”
  - Automatically detects moving objects that carry the information relevant for action selection, to support deep reinforcement learning.

- (CVPR2019) Wang et al., “Learning Unsupervised Video Object Segmentation through Visual Attention.”
  - A visual-attention-driven unsupervised VOS model based on a CNN-ConvLSTM architecture.

(i) Train a network to incorporate optical flow.

2-Branch Based Papers (color segmentation + optical flow (FlowNet)):

- (ICCV2017) Cheng et al., “SegFlow: Joint learning for video object segmentation and optical flow”

- (CVPR2018) Xiao et al., “MoNet: Deep Motion Exploitation for Video Object Segmentation.”

- (CVPR2018) Luiten et al., “PReMVOS: Proposal-generation, refinement and merging for the DAVIS challenge on video object segmentation.”

- (CVPR2017) Khoreva et al., “LucidTrack: Lucid Data Dreaming for Object Tracking.”

- (ECCV2018) Li et al., “VS-ReID: Video object segmentation with joint re-identification and attention-aware mask propagation.”

- (CVPR2017) Jampani et al., “Video propagation networks”
  - Uses a temporal bilateral network to propagate information across video frames adaptively, with optical flow as an additional feature.

- (CVPR2018) Bao et al., “CNN in MRF: Video Object Segmentation via Inference in a CNN-Based Higher-Order Spatio-Temporal MRF”
  - Performs inference in a CNN-based higher-order spatio-temporal MRF.

- (NIPS2017) Hu et al., “Motion-Guided Cascaded Refinement Network for Video Object Segmentation.”
  - Employs an active contour model on optical flow to segment the moving object.

RNN-Based Papers:

- (NIPS2017) Hu et al., “MaskRNN: Instance level video object segmentation.”
  - Builds an RNN that, in each frame, fuses the outputs of a binary segmentation net and a localization net with optical flow.

- (ECCV2018) Li and Loy, “Video object segmentation with joint re-identification and attention-aware mask propagation.”
  - Combines temporal propagation and re-identification into a single framework.

(ii) Learn to refine an object's mask from the current frame to the next one.

- (CVPR2017) Perazzi et al., “Learning video object segmentation from static images.”
  - Trains a network that refines the previous frame's mask into the current frame's mask, and directly infers the results from optical flow.

- (CVPR2018) Yang et al., “Efficient video object segmentation via network modulation”
  - Uses a very coarse location prior with visual and spatial modulation.

- (CVPR2018) Oh et al., “Fast video object segmentation by reference-guided mask propagation.”
  - Feeds both the annotated reference frame and the current frame with the previous mask estimate into a deep network.

- (CVPR2018) Han et al., “Reinforcement Cutting-Agent Learning for Video Object Segmentation.”
  - A reinforcement cutting-agent learning framework that obtains the object box from the segmentation mask and propagates it to the next frame.

- (CVPR2019) Voigtlaender et al., “FEELVOS: Fast End-To-End Embedding Learning for Video Object Segmentation”
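
The network-modulation entry above (Yang et al.) conditions a segmentation network on the target through visual and spatial modulation driven by a coarse location prior. A minimal NumPy sketch of that idea follows, assuming the modulation acts as a per-channel scale plus a spatial bias built from a 2D Gaussian location prior. The function names and the hand-set scale and weight arrays are hypothetical stand-ins for what learned modulator networks would predict; this is not the authors' implementation.

```python
import numpy as np


def gaussian_location_prior(h, w, center, sigma):
    """2D Gaussian heatmap (peak value 1.0) centred on a coarse (row, col) location."""
    rows = np.arange(h)[:, None]
    cols = np.arange(w)[None, :]
    cy, cx = center
    return np.exp(-((rows - cy) ** 2 + (cols - cx) ** 2) / (2.0 * sigma ** 2))


def modulate(features, channel_scale, spatial_prior, spatial_weight):
    """Modulate a (C, H, W) feature map with a channel-wise scale and a spatial bias.

    channel_scale:  (C,)   hypothetical output of a 'visual' modulator
    spatial_prior:  (H, W) coarse location prior, e.g. a Gaussian heatmap
    spatial_weight: (C,)   hypothetical per-channel weights applied to the prior
    """
    scale = channel_scale[:, None, None]                         # broadcast over H and W
    bias = spatial_weight[:, None, None] * spatial_prior[None]   # (C, H, W) spatial bias
    return scale * features + bias


# Usage: two feature channels on a 5x5 grid, object assumed near the centre.
feats = np.ones((2, 5, 5))
prior = gaussian_location_prior(5, 5, center=(2, 2), sigma=1.0)
out = modulate(feats, np.array([0.5, 2.0]), prior, np.array([1.0, 0.0]))
print(out[0, 2, 2], out[1, 2, 2])  # centre responses of the two channels
```

Both inputs are fixed by hand here so the arithmetic is easy to check; the first channel gets a boost that peaks at the assumed object location, while the second is only rescaled.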

Some methods leverage temporal information on the bounding boxes by tracking objects across frames.

- (arXiv2017) Sharir et al., “Video object segmentation using tracked object proposals”
  - Presents a temporal tracking method to enforce coherent segmentation throughout the video.

- (arXiv2018) Cheng et al., “Fast and Accurate Online Video Object Segmentation via Tracking Parts”
  - Utilizes a part-based tracking method on the bounding boxes, and constructs a region-of-interest segmentation network to generate part masks.

- (WACV2017) Valipour et al., “Recurrent fully convolutional networks for video segmentation”
  - Combines a CNN and an RNN for video object segmentation.

- (ECCV2018) Xu et al., “YouTube-VOS: Sequence-to-Sequence Video Object Segmentation”
  - Uses a feed-forward network (FFNN) to encode both the first frame and its segmentation mask, generating the initial states of the C-LSTM.

- (ICCV2019) “Video Object Segmentation using Space-Time Memory Networks”

- (CVPR2019) “RVOS: End-to-End Recurrent Network for Video Object Segmentation”

(i) Without using temporal information, some methods learn an appearance model to perform pixel-level detection and segmentation of the object in each frame.

- (CVPR2017) Caelles et al., “OSVOS: One-Shot Video Object Segmentation.”
  - Offline and online training of an FCN on static images for one-shot video object segmentation.

- (TPAMI2018) Maninis et al., “VOSWTI: Video Object Segmentation Without Temporal Information.”
  - Extends the object model with explicit semantic information.

- (BMVC2017) Voigtlaender et al., “Online adaptation of convolutional neural networks for video object segmentation”
  - Adapts the network online during video object segmentation.

- (arXiv2018) Cheng et al., “Fast and Accurate Online Video Object Segmentation via Tracking Parts.”
  - Propagates a coarse segmentation mask spatially based on the pairwise similarities in each frame.

(ii) Other approaches formulate video object segmentation as a pixel-wise matching problem, estimating the object of interest in subsequent images until the end of a sequence.

- (CVPR2017) Yoon et al., “Pixel-level matching for video object segmentation using convolutional neural networks.”
  - A pixel-level matching network that distinguishes the object from the background based on the pixel-level similarity between two object units.

- (CVPR2018) Chen et al., “PML: Blazingly fast video object segmentation with pixel-wise metric learning.”
  - Formulates video object segmentation as a pixel-wise retrieval problem in an embedding space.

- (ECCV2018) Hu et al., “VideoMatch: Matching based Video Object Segmentation.”
  - Matches extracted features to a provided template without memorizing the appearance of the objects.

- (CVPR2019) “Fast Online Object Tracking and Segmentation: A Unifying Approach”

- (CVPR2019) “BubbleNets: Learning to Select the Guidance Frame in Video Object Segmentation by Deep Sorting Frames”
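
The matching-based entries above (for example PML) treat segmentation as retrieving, for every pixel of the current frame, the most similar annotated pixels of a reference frame. The sketch below illustrates that retrieval step with a k-nearest-neighbour label transfer, assuming per-pixel embeddings have already been computed. The function names, the cosine similarity, and the majority vote are illustrative choices, not the exact procedure of any listed paper.

```python
import numpy as np


def l2_normalize(x, eps=1e-8):
    """Scale each row to unit length so dot products become cosine similarities."""
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)


def retrieve_labels(ref_emb, ref_labels, query_emb, k=1):
    """Label each query pixel by a k-nearest-neighbour vote over reference pixels.

    ref_emb:    (N, D) embeddings of annotated reference-frame pixels
    ref_labels: (N,)   integer labels, 0 = background, 1..K = objects
    query_emb:  (M, D) embeddings of current-frame pixels
    Returns an (M,) array of predicted labels (ties go to the smaller label).
    """
    sim = l2_normalize(query_emb) @ l2_normalize(ref_emb).T   # (M, N) cosine similarities
    nn_idx = np.argsort(-sim, axis=1)[:, :k]                  # k most similar reference pixels
    votes = ref_labels[nn_idx]                                # (M, k) neighbour labels
    n_classes = int(ref_labels.max()) + 1
    counts = np.stack([(votes == c).sum(axis=1) for c in range(n_classes)], axis=1)
    return counts.argmax(axis=1)


# Usage: 2-D toy embeddings; the first two reference pixels belong to object 1.
ref = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]])
labels = np.array([1, 1, 0, 0])
query = np.array([[0.95, 0.05], [0.05, 0.95]])
print(retrieve_labels(ref, labels, query, k=3))
```

Because nothing is fine-tuned at test time, adding a user correction in this formulation amounts to appending new labelled embeddings to `ref_emb`, which is one reason retrieval-style methods suit interactive use.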

- (arXiv2017) Benard et al., “Interactive video object segmentation in the wild”
  - Builds on OSVOS and refines the initial predictions with a fully connected CRF.

- (arXiv2018) Caelles et al., “The 2018 DAVIS challenge on video object segmentation.”
  - Builds on OSVOS to define a baseline method (Scribble-OSVOS).

- (CVPR2018) Chen et al., “Blazingly fast video object segmentation with pixel-wise metric learning”
  - Formulates video object segmentation as a pixel-wise retrieval problem; the method allows fast user interaction.

- (CVPR2016) Xu et al., “Deep interactive object selection.”
  - iFCN: guides a CNN with positive and negative points acquired from the ground-truth masks.

- (CVPR2018) Maninis et al., “Deep extreme cut: From extreme points to object segmentation”
  - Builds on iFCN, using four extreme points of an object as input to obtain precise object segmentation for images and videos.

- (CVPR2019) “Fast User-Guided Video Object Segmentation by Interaction-and-Propagation Networks”
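
The iFCN entry above guides a CNN with positive and negative points. One way to present clicks to a network, and the encoding assumed in this sketch, is to convert each click set into a map of Euclidean distances to the nearest click, truncated at 255, and stack the two maps with the RGB channels into a 5-channel input. The function names and the truncation value are illustrative assumptions; DEXTR's extreme-point input is not covered here.

```python
import numpy as np


def click_distance_map(h, w, clicks, truncate=255.0):
    """Per-pixel Euclidean distance to the nearest click, capped at `truncate`.

    clicks: iterable of (row, col) positions; with no clicks every pixel is `truncate`.
    """
    rows, cols = np.mgrid[0:h, 0:w]
    dist = np.full((h, w), truncate, dtype=np.float64)
    for r, c in clicks:
        dist = np.minimum(dist, np.sqrt((rows - r) ** 2 + (cols - c) ** 2))
    return dist


def build_interactive_input(image, pos_clicks, neg_clicks):
    """Stack an (H, W, 3) image with positive/negative click maps into (H, W, 5)."""
    h, w = image.shape[:2]
    pos = click_distance_map(h, w, pos_clicks)
    neg = click_distance_map(h, w, neg_clicks)
    return np.concatenate(
        [image.astype(np.float64), pos[..., None], neg[..., None]], axis=-1
    )


# Usage: one positive click in the top-left corner, no negative clicks yet.
img = np.zeros((4, 4, 3))
x = build_interactive_input(img, pos_clicks=[(0, 0)], neg_clicks=[])
print(x.shape)
```

Encoding clicks as dense maps rather than sparse points lets an ordinary fully convolutional network consume them as extra input channels.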

- (ECCV2012) Hartmann et al., “Weakly supervised learning of object segmentations from web-scale video”
  - Training segment classifiers: formulates pixel-level segmentation as multiple-instance learning with weakly supervised classifiers.

- (CVPR2013) Tang et al., “Discriminative segment annotation in weakly labeled video.”
  - Training segment classifiers: scores the segments of positive videos against a large number of negative samples, and regards segments with a distinct appearance as the foreground.

- (CVPR2014) Liu et al., “Weakly supervised multiclass video segmentation.”
  - Performing label transfer: weakly supervised multiclass video segmentation.

- (CVPR2015) Zhang et al., “Semantic object segmentation via detection in weakly labeled video.”
  - Uses object detection without requiring a training process.

- (ECCV2016) Tsai et al., “Semantic co-segmentation in videos.”
  - Requires neither object proposals nor video-level annotations; links objects across different videos and constructs a graph for optimization.

- (ACCV2016) Wang et al., “Semi-supervised domain adaptation for weakly labeled semantic video object segmentation.”
  - Combines the recognition and representation power of CNNs with the intrinsic structure of unlabelled data.

- (arXiv2018) Khoreva et al., “Video object segmentation with language referring expressions.”
  - Employs natural language expressions to identify the target object in a video, integrating textual descriptions of the object of interest into convnet-based techniques.

- (CVPR2019) Wang et al., “Fast Online Object Tracking and Segmentation: A Unifying Approach”
  - A Siamese network that simultaneously estimates a binary segmentation mask, a bounding box, and the corresponding object/background scores.

- (ACMMCMC2018) Zhang et al., “Tracking-Assisted Weakly Supervised Online Visual Object Segmentation in Unconstrained Videos.”
  - Builds a two-branch network: an appearance network and a contour network.

- (arXiv2018) “Distractor-aware Siamese Networks for Visual Object Tracking”

- (CVPR2019) “SiamRPN++: Evolution of Siamese Visual Tracking with Very Deep Networks” (state of the art as of 2019)
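
The Siamese trackers above (SiamMask, DaSiamRPN, SiamRPN++) locate the target by correlating features of a template crop with features of a larger search region. Below is a naive sketch of plain "valid" cross-correlation over pre-extracted feature maps, assuming NumPy arrays shaped (channels, height, width); the feature extractor is out of scope, and the depth-wise correlation used by SiamRPN++ and SiamMask differs in keeping one response map per channel instead of summing over channels.

```python
import numpy as np


def cross_correlation(search, template):
    """'Valid' cross-correlation of a (C, th, tw) template over a (C, H, W) search map.

    Each output entry sums the element-wise products over all channels, so the
    result is a single (H - th + 1, W - tw + 1) response map.
    """
    _, th, tw = template.shape
    _, h, w = search.shape
    out = np.empty((h - th + 1, w - tw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(search[:, i:i + th, j:j + tw] * template)
    return out


# Usage: hide the template pattern in an otherwise empty search map.
template = np.array([[[1.0, 2.0], [3.0, 4.0]]])   # shape (1, 2, 2)
search = np.zeros((1, 5, 5))
search[:, 2:4, 1:3] = template
response = cross_correlation(search, template)
peak = np.unravel_index(np.argmax(response), response.shape)
print(peak)
```

The peak of the response map gives the template's offset inside the search region; real trackers compute this with a convolution on learned features rather than explicit Python loops.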

- Online Tracking by Learning Discriminative Saliency Map with Convolutional Neural Network (arXiv:1502.06796), Seunghoon Hong [Paper]

- DeepTrack: Learning Discriminative Feature Representations by Convolutional Neural Networks for Visual Tracking (BMVC 2014), Hanxi Li [Paper]

- Learning a Deep Compact Image Representation for Visual Tracking (NIPS 2013), N Wang [Paper]

- Hierarchical Convolutional Features for Visual Tracking (ICCV 2015), Chao Ma [Paper] [Code]

- Visual Tracking with Fully Convolutional Networks (ICCV 2015), Lijun Wang [Paper] [Code]

- Learning Multi-Domain Convolutional Neural Networks for Visual Tracking (arXiv:1510.07945), Hyeonseob Nam, Bohyung Han [Paper] [Code] [Project Page]

- Iterative Image Reconstruction (IJCAI 2001), Sven Behnke [Paper]

- SRCNN: Super-Resolution (ECCV 2014), Chao Dong [Web] [Paper-ECCV14] [Paper-arXiv15]

- Very Deep Super-Resolution (arXiv:1511.04587), Jiwon Kim [Paper]

- Deeply-Recursive Convolutional Network (arXiv:1511.04491), Jiwon Kim [Paper]

- Cascade-Sparse-Coding-Network (ICCV 2015), Zhaowen Wang [Paper] [Code]

- Perceptual Losses for Super-Resolution (arXiv:1603.08155), Justin Johnson [Paper] [Supplementary]

- SRGAN (arXiv:1609.04802v3), Christian Ledig [Paper]

- Image Super-Resolution with Fast Approximate Convolutional Sparse Coding (ICONIP 2014), Osendorfer [Paper 2014]

- Convolutional Neural Network to Compare Image Patches (arXiv:1510.05970), S Zagoruyko [Paper]

- Discriminative Learning of Deep Convolutional Feature Point Descriptors (ICCV 2015), E Simo-Serra [Paper]

- MatchNet (CVPR 2015), X Han [Paper]

- Computing the Stereo Matching Cost with a Convolutional Neural Network (CVPR 2015), Jure Žbontar [Paper]

- Colorful Image Colorization (ECCV 2016), Richard Zhang [Paper] [Code]

- Stereo matching

- Adaptive Support-Weight (TPAMI 2006), Kuk-Jin Yoon [Paper]

- Fast Cost-Volume Filtering (2012), Christoph Rhemann [Paper]

- Non-Rigid Dense Correspondence with Applications for Image Enhancement (TOG 2011), Y HaCohen [Paper]

- Multi-modal and Multi-spectral Registration for Natural Images (ECCV 2014), X Shen [Paper]

- FlowNet (arXiv:1504.06852), P Fischer [Paper]

- Compression Artifacts Reduction (arXiv:1504.06993), Chao Dong [Paper]

- Blur Removal

- Image Deconvolution (NIPS 2014), Li Xu [Web] [Paper]

- Deep Edge-Aware Filter (ICMR 2015), Li Xu [Paper]

- Colorful Image Colorization (ECCV 2016), [Paper] [Project] [Blog-Ryan Dahl]

- Feature Learning by Inpainting (CVPR 2016), Deepak Pathak [Paper] [Code]

(from Gedas Bertasius, Jianbo Shi, Lorenzo Torresani, DeepEdge: A Multi-Scale Bifurcated Deep Network for Top-Down Contour Detection, CVPR, 2015.)

- Holistically-Nested Edge Detection (arXiv:1504.06375), Saining Xie [Paper] [Code]

- DeepEdge (CVPR 2015), Gedas Bertasius [Paper]

- DeepContour (CVPR 2015), Wei Shen [Paper]

(from Jifeng Dai, Kaiming He, Jian Sun, BoxSup: Exploiting Bounding Boxes to Supervise Convolutional Networks for Semantic Segmentation, arXiv:1503.01640.)

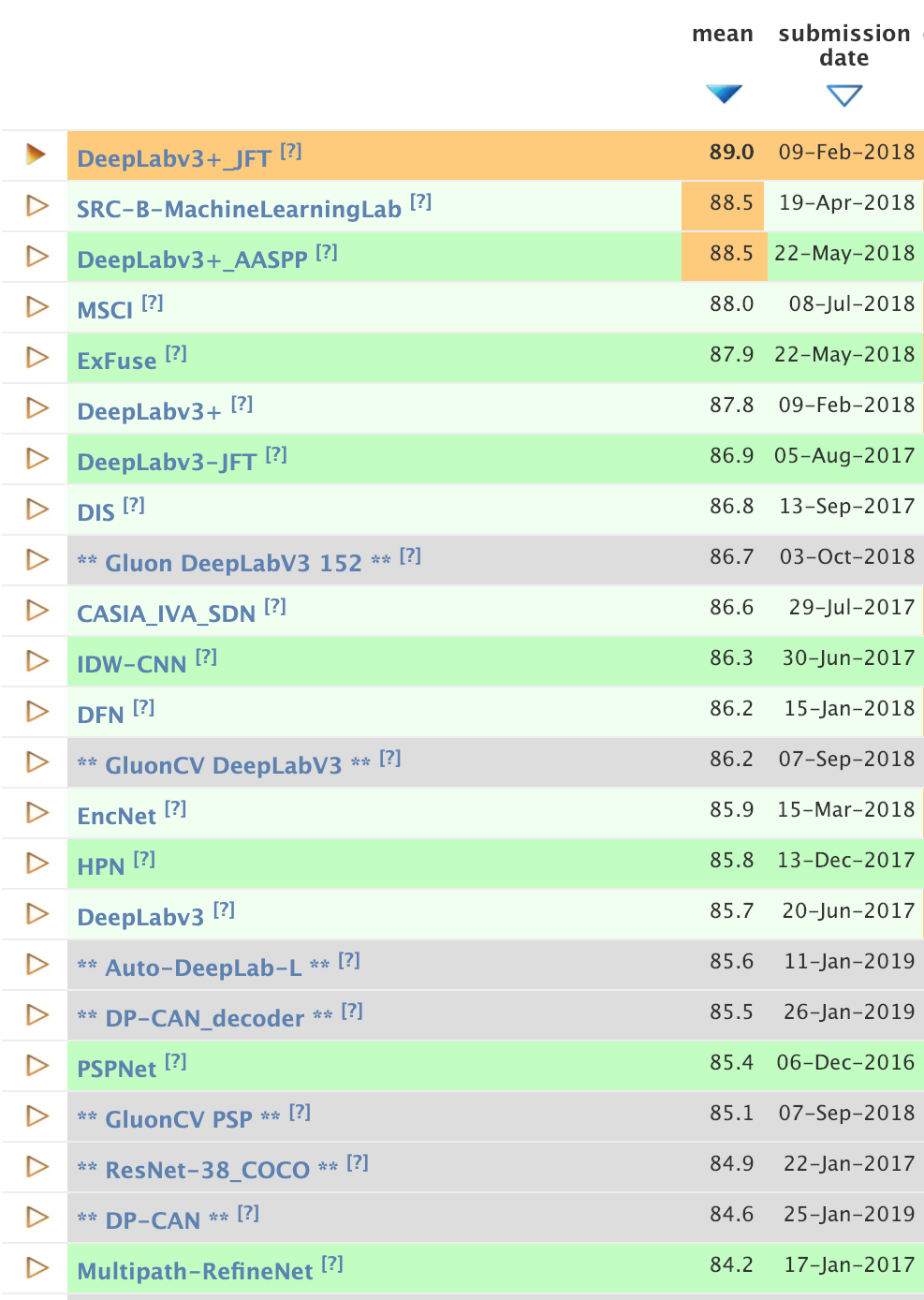

(from PASCAL VOC2012 leaderboards)


- DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs (arXiv:1606.00915v2), Liang-Chieh Chen [Paper]

- Fully Convolutional Networks for Semantic Segmentation (CVPR 2015), Jonathan Long [Paper-CVPR15] [Paper-arXiv15]

- Learning Deconvolution Network for Semantic Segmentation (arXiv:1505.04366), Hyeonwoo Noh [Paper] (7th ranked in VOC2012)

- SEC: Seed, Expand and Constrain (ECCV 2016), Alexander Kolesnikov [Paper] [Code]

- Adelaide, Guosheng Lin

- Deep Parsing Network (DPN) (ICCV 2015), Ziwei Liu [Paper] (2nd ranked in VOC 2012)

- CentraleSuperBoundaries (arXiv:1511.07386), Iasonas Kokkinos [Paper] (4th ranked in VOC 2012)

- BoxSup (arXiv:1503.01640), Jifeng Dai [Paper] (6th ranked in VOC2012)

- POSTECH

- Decoupled Deep Neural Network for Semi-supervised Semantic Segmentation (arXiv:1506.04924), Seunghoon Hong [Paper]

- Learning Transferrable Knowledge for Semantic Segmentation with Deep Convolutional Neural Network (arXiv:1512.07928), Seunghoon Hong [Paper] [Project Page]

- Conditional Random Fields as Recurrent Neural Networks (arXiv:1502.03240), Shuai Zheng [Paper] (8th ranked in VOC2012)

- DeepLab (arXiv:1502.02734), Liang-Chieh Chen [Paper] (9th ranked in VOC2012)

- Zoom-out (CVPR 2015), Mohammadreza Mostajabi [Paper]

- Joint Calibration (arXiv:1507.01581), Holger Caesar [Paper]

- Hypercolumn (CVPR 2015), Bharath Hariharan [Paper]

- Deep Hierarchical Parsing (CVPR 2015), Abhishek Sharma [Paper]

- Learning Hierarchical Features for Scene Labeling, Clement Farabet [Paper-ICML12] [Paper-PAMI13]

- SegNet [Web]

- Multi-Scale Context Aggregation by Dilated Convolutions (ICLR 2016), Fisher Yu [Paper]

- Segment-Phrase Table for Semantic Segmentation, Visual Entailment and Paraphrasing (ICCV 2015), Hamid Izadinia [Paper]

- Pushing the Boundaries of Boundary Detection Using Deep Learning (ICLR 2016), Iasonas Kokkinos [Paper]

- Weakly supervised graph based semantic segmentation by learning communities of image-parts (ICCV 2015), Niloufar Pourian [Paper]

- Rich feature hierarchies for accurate object detection and semantic segmentation (CVPR 2014), R Girshick [Web]

(from Nian Liu, Junwei Han, Dingwen Zhang, Shifeng Wen, Tianming Liu, Predicting Eye Fixations using Convolutional Neural Networks, CVPR, 2015.)


- Mr-CNN (CVPR 2015), Nian Liu [Paper]

- Learning a Sequential Search for Landmarks (CVPR 2015), Saurabh Singh [Paper]

- Multiple Object Recognition with Visual Attention (ICLR 2015), Jimmy Lei Ba [Paper]

- Recurrent Models of Visual Attention (NIPS 2014), Volodymyr Mnih [Paper]

- Weakly-supervised learning with convolutional neural networks (CVPR 2015), Maxime Oquab [Paper]

- FV-CNN (CVPR 2015), Mircea Cimpoi [Paper]

- Realtime Multi-Person 2D Pose Estimation using Part Affinity Fields (CVPR 2017), Zhe Cao

- Deepcut: Joint subset partition and labeling for multi person pose estimation (CVPR 2016), Leonid Pishchulin

- Convolutional pose machines (CVPR 2016), Shih-En Wei

- Stacked hourglass networks for human pose estimation (ECCV 2016), Alejandro Newell

- Flowing convnets for human pose estimation in videos (ICCV 2015), T Pfister

- Joint training of a convolutional network and a graphical model for human pose estimation (NIPS 2014), Jonathan J. Tompson

(from Aravindh Mahendran, Andrea Vedaldi, Understanding Deep Image Representations by Inverting Them, CVPR, 2015.)

- Understanding image representations by measuring their equivariance and equivalence (CVPR 2015), Karel Lenc [Paper]

- Deep Neural Networks are Easily Fooled: High Confidence Predictions for Unrecognizable Images (CVPR 2015), Anh Nguyen [Paper]

- Understanding Deep Image Representations by Inverting Them (CVPR 2015), Aravindh Mahendran [Paper]

- Object Detectors Emerge in Deep Scene CNNs (ICLR 2015), Bolei Zhou [arXiv Paper]

- Inverting Visual Representations with Convolutional Networks (arXiv:1506.02753), Alexey Dosovitskiy [Paper]

- Visualizing and Understanding Convolutional Networks (ECCV 2014), Matthew Zeiler [Paper]

(from Andrej Karpathy, Li Fei-Fei, Deep Visual-Semantic Alignments for Generating Image Description, CVPR, 2015.)

- UCLA / Baidu [Paper]

- Junhua Mao, Wei Xu, Yi Yang, Jiang Wang, Alan L. Yuille, Explain Images with Multimodal Recurrent Neural Networks, arXiv:1410.1090.

- Toronto [Paper]

- Ryan Kiros, Ruslan Salakhutdinov, Richard S. Zemel, Unifying Visual-Semantic Embeddings with Multimodal Neural Language Models, arXiv:1411.2539.

- Berkeley [Paper]

- Jeff Donahue, Lisa Anne Hendricks, Sergio Guadarrama, Marcus Rohrbach, Subhashini Venugopalan, Kate Saenko, Trevor Darrell, Long-term Recurrent Convolutional Networks for Visual Recognition and Description, arXiv:1411.4389.

- Google [Paper]

- Oriol Vinyals, Alexander Toshev, Samy Bengio, Dumitru Erhan, Show and Tell: A Neural Image Caption Generator, arXiv:1411.4555.

- Stanford [Web] [Paper]

- Andrej Karpathy, Li Fei-Fei, Deep Visual-Semantic Alignments for Generating Image Description, CVPR, 2015.

- UML / UT [Paper]

- Subhashini Venugopalan, Huijuan Xu, Jeff Donahue, Marcus Rohrbach, Raymond Mooney, Kate Saenko, Translating Videos to Natural Language Using Deep Recurrent Neural Networks, NAACL-HLT, 2015.

- CMU / Microsoft [Paper-arXiv] [Paper-CVPR]

- Xinlei Chen, C. Lawrence Zitnick, Learning a Recurrent Visual Representation for Image Caption Generation, arXiv:1411.5654.

- Xinlei Chen, C. Lawrence Zitnick, Mind’s Eye: A Recurrent Visual Representation for Image Caption Generation, CVPR 2015

- Microsoft [Paper]

- Hao Fang, Saurabh Gupta, Forrest Iandola, Rupesh Srivastava, Li Deng, Piotr Dollár, Jianfeng Gao, Xiaodong He, Margaret Mitchell, John C. Platt, C. Lawrence Zitnick, Geoffrey Zweig, From Captions to Visual Concepts and Back, CVPR, 2015.

- Univ. Montreal / Univ. Toronto [Web] [Paper]

- Kelvin Xu, Jimmy Lei Ba, Ryan Kiros, Kyunghyun Cho, Aaron Courville, Ruslan Salakhutdinov, Richard S. Zemel, Yoshua Bengio, Show, Attend, and Tell: Neural Image Caption Generation with Visual Attention, arXiv:1502.03044 / ICML 2015

- Idiap / EPFL / Facebook [Paper]

- Remi Lebret, Pedro O. Pinheiro, Ronan Collobert, Phrase-based Image Captioning, arXiv:1502.03671 / ICML 2015

- UCLA / Baidu [Paper]

- Junhua Mao, Wei Xu, Yi Yang, Jiang Wang, Zhiheng Huang, Alan L. Yuille, Learning like a Child: Fast Novel Visual Concept Learning from Sentence Descriptions of Images, arXiv:1504.06692

- MS + Berkeley

- Jacob Devlin, Saurabh Gupta, Ross Girshick, Margaret Mitchell, C. Lawrence Zitnick, Exploring Nearest Neighbor Approaches for Image Captioning, arXiv:1505.04467 [Paper]

- Jacob Devlin, Hao Cheng, Hao Fang, Saurabh Gupta, Li Deng, Xiaodong He, Geoffrey Zweig, Margaret Mitchell, Language Models for Image Captioning: The Quirks and What Works, arXiv:1505.01809 [Paper]

- Adelaide [Paper]

- Qi Wu, Chunhua Shen, Anton van den Hengel, Lingqiao Liu, Anthony Dick, Image Captioning with an Intermediate Attributes Layer, arXiv:1506.01144

- Tilburg [Paper]

- Grzegorz Chrupala, Akos Kadar, Afra Alishahi, Learning language through pictures, arXiv:1506.03694

- Univ. Montreal [Paper]

- Kyunghyun Cho, Aaron Courville, Yoshua Bengio, Describing Multimedia Content using Attention-based Encoder-Decoder Networks, arXiv:1507.01053

- Cornell [Paper]

- Jack Hessel, Nicolas Savva, Michael J. Wilber, Image Representations and New Domains in Neural Image Captioning, arXiv:1508.02091

- MS + City Univ. of Hong Kong [Paper]

- Ting Yao, Tao Mei, and Chong-Wah Ngo, "Learning Query and Image Similarities with Ranking Canonical Correlation Analysis", ICCV, 2015

- Berkeley [Web] [Paper]

- Jeff Donahue, Lisa Anne Hendricks, Sergio Guadarrama, Marcus Rohrbach, Subhashini Venugopalan, Kate Saenko, Trevor Darrell, Long-term Recurrent Convolutional Networks for Visual Recognition and Description, CVPR, 2015.

- UT / UML / Berkeley [Paper]

- Subhashini Venugopalan, Huijuan Xu, Jeff Donahue, Marcus Rohrbach, Raymond Mooney, Kate Saenko, Translating Videos to Natural Language Using Deep Recurrent Neural Networks, arXiv:1412.4729.

- Microsoft [Paper]

- Yingwei Pan, Tao Mei, Ting Yao, Houqiang Li, Yong Rui, Joint Modeling Embedding and Translation to Bridge Video and Language, arXiv:1505.01861.

- UT / Berkeley / UML [Paper]

- Subhashini Venugopalan, Marcus Rohrbach, Jeff Donahue, Raymond Mooney, Trevor Darrell, Kate Saenko, Sequence to Sequence--Video to Text, arXiv:1505.00487.

- Univ. Montreal / Univ. Sherbrooke [Paper]

- Li Yao, Atousa Torabi, Kyunghyun Cho, Nicolas Ballas, Christopher Pal, Hugo Larochelle, Aaron Courville, Describing Videos by Exploiting Temporal Structure, arXiv:1502.08029

- MPI / Berkeley [Paper]

- Anna Rohrbach, Marcus Rohrbach, Bernt Schiele, The Long-Short Story of Movie Description, arXiv:1506.01698

- Univ. Toronto / MIT [Paper]

- Yukun Zhu, Ryan Kiros, Richard Zemel, Ruslan Salakhutdinov, Raquel Urtasun, Antonio Torralba, Sanja Fidler, Aligning Books and Movies: Towards Story-like Visual Explanations by Watching Movies and Reading Books, arXiv:1506.06724

- Univ. Montreal [Paper]

- Kyunghyun Cho, Aaron Courville, Yoshua Bengio, Describing Multimedia Content using Attention-based Encoder-Decoder Networks, arXiv:1507.01053

- TAU / USC [Paper]

- Dotan Kaufman, Gil Levi, Tal Hassner, Lior Wolf, Temporal Tessellation for Video Annotation and Summarization, arXiv:1612.06950.

(from Stanislaw Antol, Aishwarya Agrawal, Jiasen Lu, Margaret Mitchell, Dhruv Batra, C. Lawrence Zitnick, Devi Parikh, VQA: Visual Question Answering, CVPR, 2015 SUNw:Scene Understanding workshop)

- Virginia Tech / MSR [Web] [Paper]

- Stanislaw Antol, Aishwarya Agrawal, Jiasen Lu, Margaret Mitchell, Dhruv Batra, C. Lawrence Zitnick, Devi Parikh, VQA: Visual Question Answering, CVPR, 2015 SUNw:Scene Understanding workshop.


- Mateusz Malinowski, Marcus Rohrbach, Mario Fritz, Ask Your Neurons: A Neural-based Approach to Answering Questions about Images, arXiv:1505.01121.


- Mengye Ren, Ryan Kiros, Richard Zemel, Image Question Answering: A Visual Semantic Embedding Model and a New Dataset, arXiv:1505.02074 / ICML 2015 deep learning workshop.

- Baidu / UCLA [Paper] [Dataset]

- Haoyuan Gao, Junhua Mao, Jie Zhou, Zhiheng Huang, Lei Wang, Wei Xu, Are You Talking to a Machine? Dataset and Methods for Multilingual Image Question Answering, arXiv:1505.05612.

- POSTECH [Paper] [Project Page]

- Hyeonwoo Noh, Paul Hongsuck Seo, and Bohyung Han, Image Question Answering using Convolutional Neural Network with Dynamic Parameter Prediction, arXiv:1511.05765

- CMU / Microsoft Research [Paper]

- Yang, Z., He, X., Gao, J., Deng, L., & Smola, A. (2015). Stacked Attention Networks for Image Question Answering. arXiv:1511.02274.

- MetaMind [Paper]

- Xiong, Caiming, Stephen Merity, and Richard Socher. "Dynamic Memory Networks for Visual and Textual Question Answering." arXiv:1603.01417 (2016).

- SNU + NAVER [Paper]

- Jin-Hwa Kim, Sang-Woo Lee, Dong-Hyun Kwak, Min-Oh Heo, Jeonghee Kim, Jung-Woo Ha, Byoung-Tak Zhang, Multimodal Residual Learning for Visual QA, arXiv:1606.01455

- UC Berkeley + Sony [Paper]

- Akira Fukui, Dong Huk Park, Daylen Yang, Anna Rohrbach, Trevor Darrell, and Marcus Rohrbach, Multimodal Compact Bilinear Pooling for Visual Question Answering and Visual Grounding, arXiv:1606.01847

- POSTECH [Paper]

- Hyeonwoo Noh and Bohyung Han, Recurrent Answering Units with Joint Loss Minimization for VQA, arXiv:1606.03647

- SNU + NAVER [Paper]

- Jin-Hwa Kim, Kyoung Woon On, Jeonghee Kim, Jung-Woo Ha, Byoung-Tak Zhang, Hadamard Product for Low-rank Bilinear Pooling, arXiv:1610.04325.

- Convolutional / Recurrent Networks

- Adversarial Networks

- Vanilla GAN (NIPS 2014), Ian J. Goodfellow [Paper]

- Deep Generative Image Models using a Laplacian Pyramid of Adversarial Networks (NIPS 2015), Emily Denton [Paper]

- A note on the evaluation of generative models (ICLR 2016), Lucas Theis [Paper]

- Variationally Auto-Encoded Deep Gaussian Processes (ICLR 2016), Zhenwen Dai [Paper]

- Generating Images from Captions with Attention (ICLR 2016), Elman Mansimov [Paper]

- Unsupervised and Semi-supervised Learning with Categorical Generative Adversarial Networks (ICLR 2016), Jost Tobias Springenberg [Paper]

- Censoring Representations with an Adversary (ICLR 2016), Harrison Edwards [Paper]

- Distributional Smoothing with Virtual Adversarial Training (ICLR 2016), Takeru Miyato [Paper]

- Generative Visual Manipulation on the Natural Image Manifold (ECCV 2016), Jun-Yan Zhu [Paper] [Code] [Video]

- Mixing Convolutional and Adversarial Networks (ICLR 2016), Alec Radford [Paper]

- Unsupervised Monocular Depth Estimation with Left-Right Consistency (arXiv:1609.03677), Clément Godard [Paper]

- Depth Map Prediction from a Single Image using a Multi-Scale Deep Network (NIPS 2014), David Eigen [Paper]

- 3D Shape Retrieval (CVPR 2015), Fang Wang [Paper]

- Vanilla GAN (NIPS 2014), I Goodfellow [Paper]

- Conditional GAN (2014), M Mirza [Paper]

- InfoGAN (2016), Xi Chen [Paper]

- Wasserstein GAN (2017), M Arjovsky [Paper]

- Mode Regularized GAN (2017), T Che [Paper]

- Coupled GAN (2016), MY Liu [Paper]

- Auxiliary Classifier GAN (2017), A Odena [Paper]

- Least Squares GAN (2017), X Mao [Paper]

- Boundary Seeking GAN (2017), RD Hjelm [Paper]

- Energy Based GAN (2016), J Zhao [Paper]

- f-GAN (2016), S Nowozin [Paper]

- Generative Adversarial Parallelization (2016), DJ Im [Paper]

- DiscoGAN (2017), TS Kim [Paper]

- Adversarial Feature Learning (2016), J Donahue [Paper]

- Adversarially Learned Inference (2016), V Dumoulin [Paper]

- Boundary Equilibrium GAN (2017), D Berthelot [Paper]

- Improved Training of Wasserstein GANs [Paper]

- DualGAN (2017), Z Yi [Paper]

- MAGAN: Margin Adaptation for GAN (2017), R Wang [Paper]

- Softmax GAN (2017), M Lin [Paper]

- GibbsNet (NIPS2017), A Lamb [Paper]

- Big GAN (2018), A Brock [Paper]

- MoCo GAN (2018), S Tulyakov [Paper]

- Batch Normalization (arXiv:1502.03167), S Ioffe [Paper]

- Weight Standardization (arXiv 2019), S Qiao [Paper] [Code]

- Visual Analogy [Paper]

- Scott Reed, Yi Zhang, Yuting Zhang, Honglak Lee, Deep Visual Analogy Making, NIPS, 2015

- Surface Normal Estimation [Paper]

- Xiaolong Wang, David F. Fouhey, Abhinav Gupta, Designing Deep Networks for Surface Normal Estimation, CVPR, 2015.

- Action Detection [Paper]

- Georgia Gkioxari, Jitendra Malik, Finding Action Tubes, CVPR, 2015.

- Crowd Counting [Paper]

- Cong Zhang, Hongsheng Li, Xiaogang Wang, Xiaokang Yang, Cross-scene Crowd Counting via Deep Convolutional Neural Networks, CVPR, 2015.

- Weakly-supervised Classification

- Samaneh Azadi, Jiashi Feng, Stefanie Jegelka, Trevor Darrell, "Auxiliary Image Regularization for Deep CNNs with Noisy Labels", ICLR 2016, [Paper]

- Artistic Style [Paper] [Code]

- Leon A. Gatys, Alexander S. Ecker, Matthias Bethge, A Neural Algorithm of Artistic Style.

- Human Gaze Estimation

- Face Recognition

- Yaniv Taigman, Ming Yang, Marc'Aurelio Ranzato, Lior Wolf, DeepFace: Closing the Gap to Human-Level Performance in Face Verification, CVPR, 2014. [Paper]

- Yi Sun, Ding Liang, Xiaogang Wang, Xiaoou Tang, DeepID3: Face Recognition with Very Deep Neural Networks, 2015. [Paper]

- Florian Schroff, Dmitry Kalenichenko, James Philbin, FaceNet: A Unified Embedding for Face Recognition and Clustering, CVPR, 2015. [Paper]

- Facial Landmark Detection

- Yue Wu, Tal Hassner, KangGeon Kim, Gerard Medioni, Prem Natarajan, Facial Landmark Detection with Tweaked Convolutional Neural Networks, 2015. [Paper] [Project]

- TensorFlow: An open-source software library for numerical computation using data flow graphs, by Google [Web]

- Torch7: Deep learning library in Lua, used by Facebook and Google DeepMind [Web]

- Torch-based deep learning libraries: [torchnet]

- Caffe: Deep learning framework by the BVLC [Web]

- Theano: Mathematical library in Python, maintained by LISA lab [Web]

- MatConvNet: CNNs for MATLAB [Web]

- MXNet: A flexible and efficient deep learning library for heterogeneous distributed systems with multi-language support [Web]

- Deepgaze: A computer vision library for human-computer interaction based on CNNs [Web]

- Adversarial Training

- Code and hyperparameters for the paper "Generative Adversarial Networks" [Web]

- Understanding and Visualizing

- Source code for "Understanding Deep Image Representations by Inverting Them," CVPR, 2015. [Web]

- Semantic Segmentation

- Source code for the paper "Fully Convolutional Networks for Semantic Segmentation," CVPR, 2015. [Web]

- Super-Resolution

- Image Super-Resolution for Anime-Style-Art [Web]

- Edge Detection

- [CVPR 2014] Tutorial on Deep Learning in Computer Vision

- [CVPR 2015] Applied Deep Learning for Computer Vision with Torch