Java JNI bindings for Nvidia PhysX 5.2.1.

The library is published on Maven Central, so you can easily add it to your dependencies:

dependencies {

// java bindings

implementation("de.fabmax:physx-jni:2.2.1")

// native libraries - you can add the one matching your system or all

runtimeOnly("de.fabmax:physx-jni:2.2.1:natives-windows")

runtimeOnly("de.fabmax:physx-jni:2.2.1:natives-linux")

runtimeOnly("de.fabmax:physx-jni:2.2.1:natives-macos")

runtimeOnly("de.fabmax:physx-jni:2.2.1:natives-macos-arm64")

}
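If you would rather pick the matching natives classifier programmatically (for example in a launcher), the mapping from JVM system properties to the four classifiers listed above could be sketched as follows. The class and method names are hypothetical, and the matching rules for `os.name` / `os.arch` are assumptions; none of this is provided by the library itself.

```java
import java.util.Locale;

// Hypothetical helper, not part of physx-jni: maps JVM system properties
// to the natives classifiers listed in the dependency block above.
final class NativesClassifier {

    static String classifierFor(String osName, String osArch) {
        String os = osName.toLowerCase(Locale.ROOT);
        String arch = osArch.toLowerCase(Locale.ROOT);
        // assumption: 64-bit x86 JVMs report "amd64" or "x86_64",
        // Apple Silicon JVMs report "aarch64" (or "arm64")
        boolean x64 = arch.equals("amd64") || arch.equals("x86_64");
        boolean arm64 = arch.equals("aarch64") || arch.equals("arm64");

        if (os.startsWith("windows") && x64) {
            return "natives-windows";
        }
        if (os.startsWith("linux") && x64) {
            return "natives-linux";
        }
        if (os.startsWith("mac") && x64) {
            return "natives-macos";
        }
        if (os.startsWith("mac") && arm64) {
            return "natives-macos-arm64";
        }
        throw new IllegalArgumentException("Unsupported platform: " + osName + " / " + osArch);
    }

    public static void main(String[] args) {
        String classifier = classifierFor(
                System.getProperty("os.name"), System.getProperty("os.arch"));
        // prints the full dependency coordinate for the current machine
        System.out.println("de.fabmax:physx-jni:2.2.1:" + classifier);
    }
}
```

Adding all four `runtimeOnly` entries, as shown above, remains the simplest option; a helper like this is only useful if you want to ship a single platform's natives.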

This is still work in progress, but the bindings already include most major parts of the PhysX SDK:

- Basics

  - Static and dynamic actors

  - All geometry types (box, capsule, sphere, plane, convex mesh, triangle mesh and height field)

- All joint types (revolute, spherical, prismatic, fixed, distance and D6)

- Articulations

- Vehicles

- Character controllers

- CUDA (requires a platform with CUDA support, see below)

  - Rigid bodies

  - Particles (Fluids + Cloth)

  - Soft bodies

- Scene serialization

The detailed list of mapped functions is given by the interface definition files. The Java classes containing the actual bindings are generated from these files during build.

After build (or after running the corresponding Gradle task generateJniBindings), the generated Java classes are located under physx-jni/src/main/generated.

Supported platforms:

- Windows (x86_64)

- Linux (x86_64)

- macOS (x86_64 and arm64)

Moreover, there is also a version for JavaScript/WebAssembly: physx-js-webidl.

You can take a look at HelloPhysX.java for a hello world example of how to use the library. There are also a few tests with slightly more advanced examples (custom simulation callbacks, triangle mesh collision, custom filter shader, etc.).

To see a few real-life demos, you can take a look at my kool demos:

- Vehicle: Vehicle demo with a racetrack and a few obstacles.

- Character: 3rd person character demo on an island.

- Ragdolls: Simple Ragdoll demo.

- Joints: A chain running over two gears.

- Collision: Various collision shapes.

Note: These demos run directly in the browser, so they don't use this library but the WebAssembly version mentioned above. However, the two are functionally identical, so it shouldn't matter too much. The JNI version is much faster though.

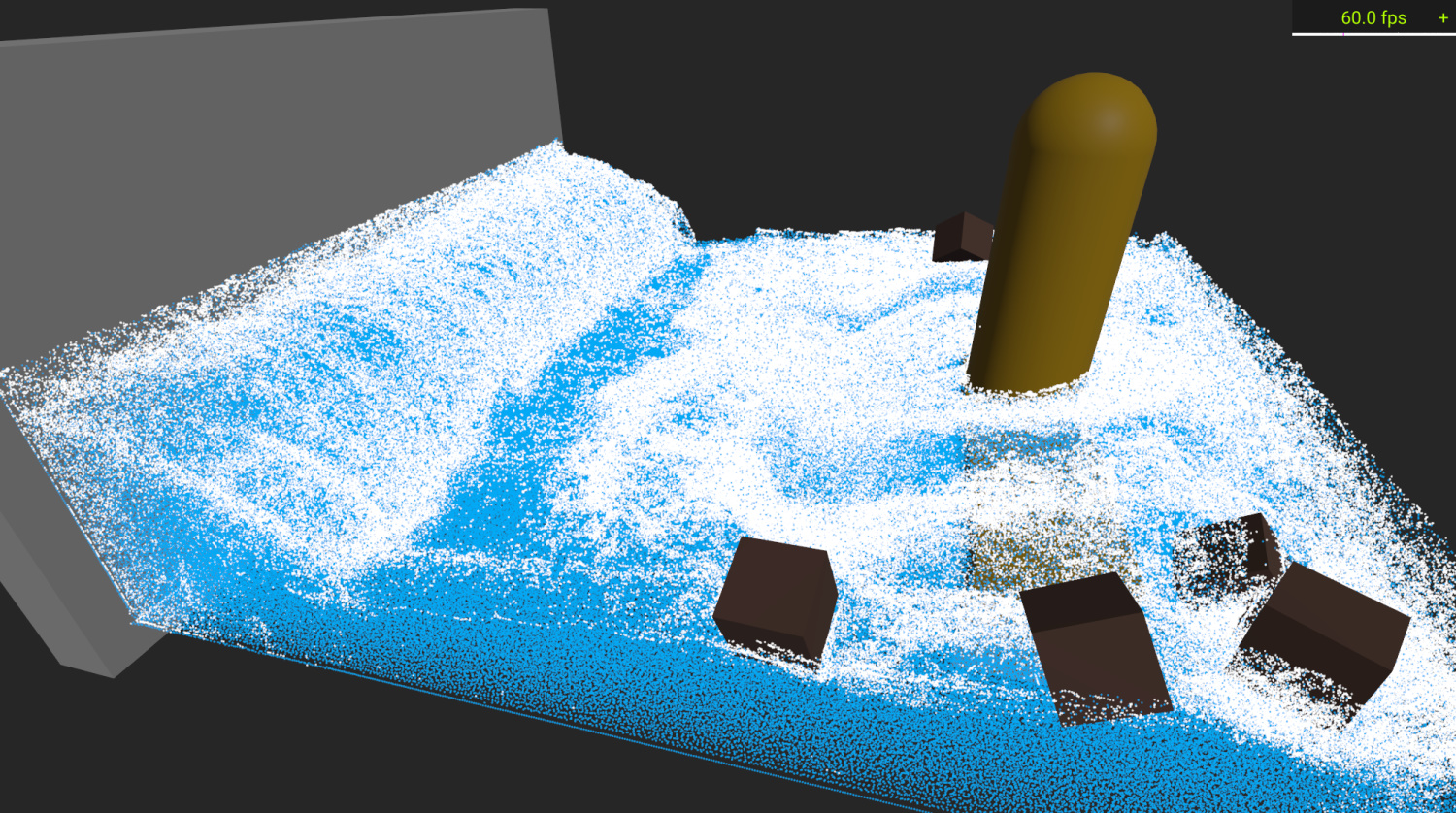

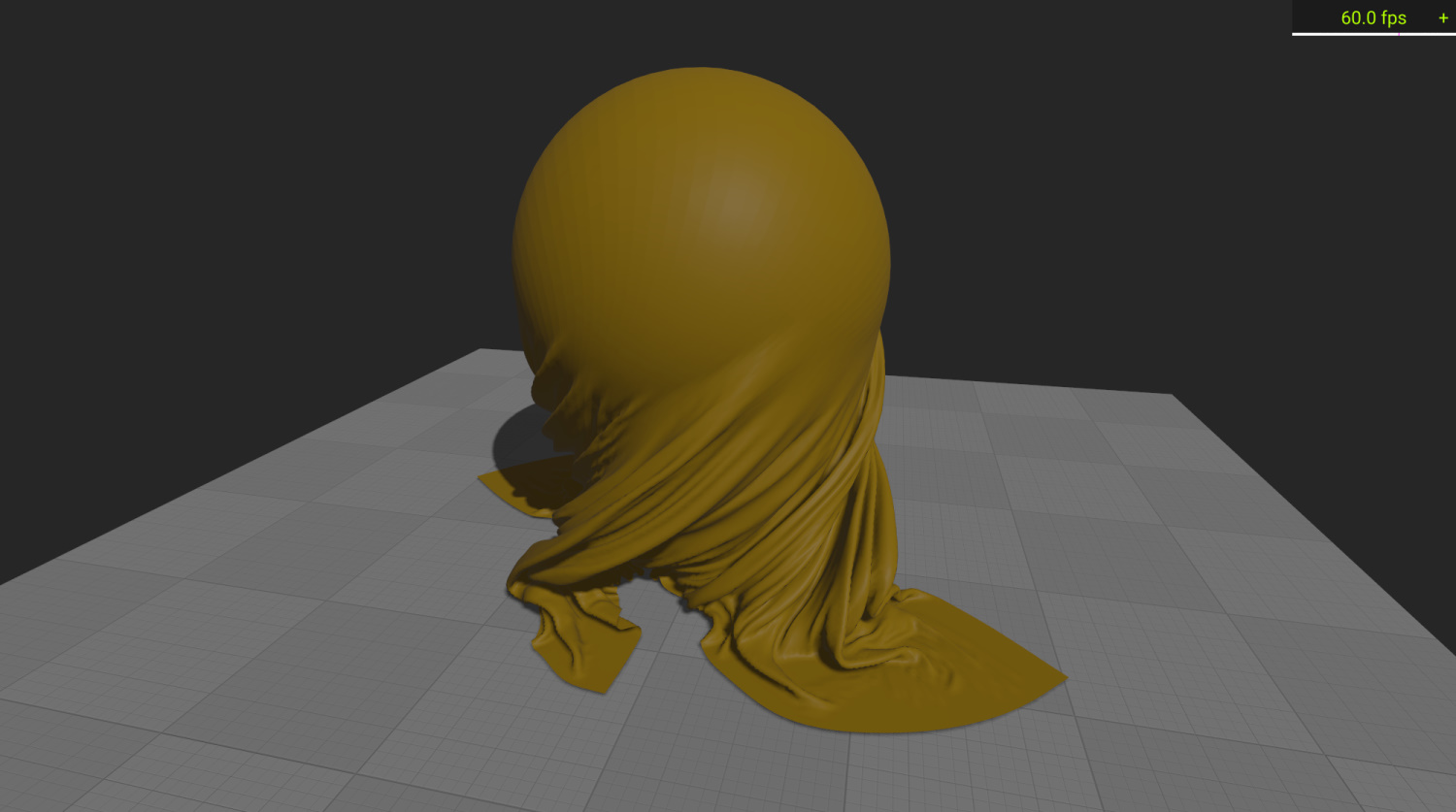

The particle simulation unfortunately requires CUDA and therefore only works on Windows / Linux systems with an Nvidia GPU. Here are a few images of what this can look like:

| Fluid Simulation | Cloth Simulation |
|---|---|
| | |

Simplified non-graphical versions of the two scenes are available in source as tests: FluidTest and ClothTest. They are more or less 1:1 translations of the corresponding PhysX example snippets.

The generated bindings contain most of the original documentation converted to javadoc. For further reading there is also the official PhysX documentation.

Whenever you create an instance of a wrapper class within this library, this also creates an object on the native side. Native objects are not covered by the garbage collector, so, in order to avoid a memory leak, you have to clean up these objects yourself when you are done with them.

Here is an example:

// create an object of PxVec3, this also creates a native PxVec3

// object behind the scenes.

PxVec3 vector = new PxVec3(1f, 2f, 3f);

// do something with vector...

// destroy the object once you are done with it

vector.destroy();

This approach has two potential problems: First, as mentioned, if you forget to call destroy(), the memory on the native heap is not released, resulting in a memory leak. Second, creating new objects on the native heap comes with a lot of overhead and is much slower than creating a new object on the Java side.
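One way to make a forgotten destroy() less likely for heap-allocated objects is to tie the cleanup to try-with-resources. The following is a minimal sketch of that idea: `Scoped` is a hypothetical helper that is not part of this library, and `FakeNativeObject` is only a stand-in for a wrapper class such as PxVec3 so that the example runs without the native libraries.

```java
import java.util.function.Consumer;

final class ScopedDemo {
    public static void main(String[] args) {
        FakeNativeObject obj = new FakeNativeObject();
        try (Scoped<FakeNativeObject> scoped = new Scoped<>(obj, FakeNativeObject::destroy)) {
            // do something with scoped.get()...
            System.out.println("inside scope, destroyed: " + scoped.get().destroyed);
        }
        // close() has run at this point, even if the body had thrown
        System.out.println("after scope, destroyed: " + obj.destroyed);
    }
}

// Hypothetical helper: runs the given cleanup action when the
// try-with-resources scope is left, normally or via an exception.
final class Scoped<T> implements AutoCloseable {
    private final T value;
    private final Consumer<? super T> cleanup;

    Scoped(T value, Consumer<? super T> cleanup) {
        this.value = value;
        this.cleanup = cleanup;
    }

    T get() {
        return value;
    }

    @Override
    public void close() {
        cleanup.accept(value);
    }
}

// Stand-in for a wrapper class with a native counterpart.
final class FakeNativeObject {
    boolean destroyed = false;

    void destroy() {
        destroyed = true;
    }
}
```

With the real bindings, the same helper could wrap `new PxVec3(1f, 2f, 3f)` with `PxVec3::destroy` as the cleanup action. This only addresses the leak risk; the allocation overhead of the native heap remains.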

These issues aren't a big problem for long-lived objects, which you create on start-up and use until you exit the program. However, for short-lived objects (in many cases, PxVec3 is one of them) this can have a large impact. Therefore, there is a second method to allocate these objects: stack allocation. To use this, you will need some sort of memory allocator like LWJGL's MemoryStack. With that, the above example could look like this:

try (MemoryStack mem = MemoryStack.stackPush()) {

// create an object of PxVec3. The native object is allocated in memory

// provided by MemoryStack

PxVec3 vector = PxVec3.createAt(mem, MemoryStack::nmalloc, 1f, 2f, 3f);

// do something with vector...

// no explicit destroy needed, memory is released when we leave the scope

}

While the PxVec3.createAt() call looks a bit more complicated, this approach is much faster and comes without the risk of leaking memory, so it should be preferred whenever possible.
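To illustrate why stack allocation is cheap and cannot leak, here is a simplified, pure-Java model of a stack allocator. It is a conceptual sketch only: it hands out offsets into an imaginary fixed-size buffer instead of real native addresses, the class `ToyStack` is hypothetical, and it is not how LWJGL's MemoryStack is actually implemented. Only the `nmalloc(alignment, size)` shape mirrors the method reference used in the example above.

```java
// Conceptual model of a stack (bump) allocator; hypothetical class.
final class ToyStack implements AutoCloseable {
    private final int capacity;
    private final int[] frames = new int[16]; // saved tops of nested frames
    private int frameCount = 0;
    private int top = 0;                      // offset of the next free byte

    ToyStack(int capacity) {
        this.capacity = capacity;
    }

    // Start a new frame: remember the current top so close() can rewind to it.
    ToyStack push() {
        if (frameCount == frames.length) {
            throw new IllegalStateException("too many nested frames");
        }
        frames[frameCount++] = top;
        return this;
    }

    // Allocation is just an aligned pointer bump (alignment must be a power of two).
    int nmalloc(int alignment, int size) {
        int aligned = (top + alignment - 1) & -alignment;
        if (aligned + size > capacity) {
            throw new IllegalStateException("stack exhausted");
        }
        top = aligned + size;
        return aligned;
    }

    int used() {
        return top;
    }

    // Leaving the frame releases everything allocated in it at once.
    @Override
    public void close() {
        if (frameCount == 0) {
            throw new IllegalStateException("no frame to pop");
        }
        top = frames[--frameCount];
    }

    public static void main(String[] args) {
        ToyStack stack = new ToyStack(1024);
        try (ToyStack frame = stack.push()) {
            int a = frame.nmalloc(4, 12); // room for three floats, like a PxVec3
            int b = frame.nmalloc(4, 12);
            System.out.println("offsets: " + a + ", " + b + ", used: " + stack.used());
        }
        System.out.println("used after scope: " + stack.used());
    }
}
```

In this model an allocation costs a few integer operations and leaving the scope resets a single counter, which is why short-lived objects are a good fit for this style of allocation.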

In a few places it is possible to register callbacks, e.g., PxErrorCallback or PxSimulationEventCallback. In order to implement a callback, the corresponding Java callback class has to be extended. The implementing class can then be passed into the corresponding PhysX API.

Here's an example of how this might look:

// implement callback

public class CustomErrorCallback extends PxErrorCallbackImpl {

@Override

public void reportError(PxErrorCodeEnum code, String message, String file, int line) {

System.out.println(code + ": " + message);

}

}

// register / use callback

CustomErrorCallback errorCb = new CustomErrorCallback();

PxFoundation foundation = PxTopLevelFunctions.CreateFoundation(PX_PHYSICS_VERSION, new PxDefaultAllocator(), errorCb);

Several PhysX classes (e.g. PxActor, PxMaterial, ...) have a userData field, which can be used to store an arbitrary object reference. Since the native userData field is a void pointer, a wrapper class JavaNativeRef is needed to store a Java object reference in it:

PxRigidDynamic myActor = ...

// set user data, can be any java object, here we use a String:

myActor.setUserData(new JavaNativeRef<>("Arbitrary data"));

// get user data, here we expect it to be a String:

JavaNativeRef<String> userData = JavaNativeRef.fromNativeObject(myActor.getUserData());

System.out.println(userData.get());

PhysX supports accelerating physics simulation with CUDA (this, of course, requires an Nvidia GPU). However, using CUDA requires different runtime libraries, which are not available via Maven Central. Instead, you can grab them from the releases section (those suffixed with -cuda).

Apart from that, enabling CUDA acceleration for a scene is straightforward:

// Setup your scene as usual

PxSceneDesc sceneDesc = new PxSceneDesc(physics.getTolerancesScale());

sceneDesc.setCpuDispatcher(PxTopLevelFunctions.DefaultCpuDispatcherCreate(8));

sceneDesc.setFilterShader(PxTopLevelFunctions.DefaultFilterShader());

// Create the PxCudaContextManager

PxCudaContextManagerDesc desc = new PxCudaContextManagerDesc();

desc.setInteropMode(PxCudaInteropModeEnum.NO_INTEROP);

PxCudaContextManager cudaMgr = PxCudaTopLevelFunctions.CreateCudaContextManager(foundation, desc);

// Check if CUDA context is valid / CUDA support is available

if (cudaMgr != null && cudaMgr.contextIsValid()) {

// enable CUDA!

sceneDesc.setCudaContextManager(cudaMgr);

sceneDesc.getFlags().set(PxSceneFlagEnum.eENABLE_GPU_DYNAMICS);

sceneDesc.setBroadPhaseType(PxBroadPhaseTypeEnum.eGPU);

// optionally fine tune amount of allocated CUDA memory

// PxgDynamicsMemoryConfig memCfg = new PxgDynamicsMemoryConfig();

// memCfg.setStuff...

// sceneDesc.setGpuDynamicsConfig(memCfg);

} else {

System.err.println("No CUDA support!");

}

// Create scene as usual

PxScene scene = physics.createScene(sceneDesc);

CUDA comes with some additional overhead (a lot of data has to be copied around between CPU and GPU). For smaller scenes this overhead seems to outweigh the benefits, and physics computation might actually be slower than with CPU only. I wrote a simple CudaTest, which runs a few simulations with an increasing number of bodies. According to this, the break-even point is around 5k bodies. At 20k boxes the CUDA version runs about 3 times faster than the CPU version (with an RTX 2080 / Ryzen 2700X). The results may be different when using other body shapes (the test uses boxes), joints, etc.
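If your application knows roughly how many bodies a scene will contain, the break-even figure above can be turned into a simple switch. This is a minimal sketch with hypothetical names; the 5,000 threshold is taken from the benchmark described above (boxes only, RTX 2080 / Ryzen 2700X) and should be treated as a starting point to re-measure on your own hardware and scene content.

```java
// Hypothetical helper, not part of physx-jni.
final class GpuHeuristic {
    // Approximate break-even body count from the CudaTest results quoted above;
    // an assumption for any other hardware or body shape.
    static final int BREAK_EVEN_BODIES = 5_000;

    // Prefer the GPU only if CUDA is usable and the scene is large enough
    // for the CPU/GPU copy overhead to pay off.
    static boolean preferGpu(int expectedBodies, boolean cudaAvailable) {
        return cudaAvailable && expectedBodies >= BREAK_EVEN_BODIES;
    }

    public static void main(String[] args) {
        System.out.println("1,000 bodies, CUDA available: " + preferGpu(1_000, true));
        System.out.println("20,000 bodies, CUDA available: " + preferGpu(20_000, true));
        System.out.println("20,000 bodies, no CUDA: " + preferGpu(20_000, false));
    }
}
```

In the scene setup shown earlier, the `cudaAvailable` argument would correspond to the `cudaMgr != null && cudaMgr.contextIsValid()` check, and the result would decide whether the GPU-specific scene flags are set at all.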

You can build the bindings yourself. However, this requires cmake, python3 and the C++ compiler appropriate to your platform (Visual Studio 2022 (Community) on Windows / clang on Linux):

# Clone this repo

git clone https://github.com/fabmax/physx-jni.git

# Enter that directory

cd physx-jni

# Download submodule containing the PhysX source code

git submodule update --init

# Optional, but might help in case build fails

./gradlew generateNativeProject

# Build native PhysX (requires Visual Studio 2022 (Community) on Windows / clang on Linux)

./gradlew buildNativeProject

# Generate Java/JNI code and build library

./gradlew build