Welcome to the Awesome-LLM-Quantization repository! This is a curated list of resources related to quantization techniques for Large Language Models (LLMs). Quantization is a crucial step in deploying LLMs on resource-constrained devices, such as mobile phones or edge devices, by reducing the model's size and computational requirements.
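
As a quick orientation for the tags used in the table below (for example `#4-bit` or `#W4A16`, i.e. 4-bit weights with 16-bit activations), here is a minimal sketch of symmetric round-to-nearest quantization. It is not taken from any listed paper; the function names `quantize_rtn`, `dequantize` and `weight_memory_gib` and the toy weights are assumptions made for illustration, and the memory estimate ignores the storage needed for scales.

```python
def quantize_rtn(weights, n_bits=4):
    """Symmetric round-to-nearest quantization of one group of weights.

    Returns the integer codes and the scale needed to dequantize them.
    """
    q_max = 2 ** (n_bits - 1) - 1            # 7 for signed 4-bit integers
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / q_max if max_abs > 0 else 1.0
    # Round to the nearest integer code and clamp to the signed range.
    codes = [max(-q_max - 1, min(q_max, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Map integer codes back to approximate floating-point weights."""
    return [c * scale for c in codes]


def weight_memory_gib(n_params, bits_per_weight):
    """Rough weight storage in GiB, ignoring scales and zero points."""
    return n_params * bits_per_weight / 8 / 2 ** 30


# Hypothetical toy weights, chosen only for illustration.
weights = [0.12, -0.40, 0.03, 0.70, -0.26]
codes, scale = quantize_rtn(weights, n_bits=4)
restored = dequantize(codes, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("codes:", codes)
print("max abs error:", max_error)
print("7B params, 16-bit:", round(weight_memory_gib(7_000_000_000, 16), 1), "GiB")
print("7B params,  4-bit:", round(weight_memory_gib(7_000_000_000, 4), 1), "GiB")
```

The rounding error of every unclipped weight is at most half the scale, which is why a single large outlier in a group (it inflates the scale) hurts all other weights. Many of the methods listed below are different ways of dealing with that effect.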

| Title & Author & Link | Introduction | Summary |
|---|---|---|
| ICLR23 GPTQ: Accurate Post-Training Quantization for Generative Pre-trained Transformers Elias Frantar, Saleh Ashkboos, Torsten Hoefler, Dan Alistarh Github | | This paper is among the first to apply post-training quantization to GPT-scale models. GPTQ is a one-shot weight quantization method based on approximate second-order (Hessian) information. It reduces the bit-width to 3-4 bits per weight. More extreme experiments with 2-bit and ternary quantization are also provided. #PTQ #3-bit #4-bit #2-bit |
| ICML23 SmoothQuant: Accurate and Efficient Post-Training Quantization for Large Language Models Guangxuan Xiao, Ji Lin, Mickael Seznec, Hao Wu, Julien Demouth, Song Han Github | | SmoothQuant is a post-training quantization framework targeting W8A8 (INT8). In general, weights are easier to quantize than activations. It proposes migrating the quantization difficulty from activations to weights via a mathematically equivalent transformation. #PTQ #W8A8 #Outlier |
| MLSys24_BestPaper AWQ: Activation-aware Weight Quantization for LLM Compression and Acceleration Ji Lin, Jiaming Tang, Haotian Tang, Shang Yang, Wei-Ming Chen, Wei-Chen Wang, Guangxuan Xiao, Xingyu Dang, Chuang Gan, Song Han Github | | Activation-aware Weight Quantization (AWQ) is a low-bit, weight-only quantization method targeting edge devices with W4A16. The motivation is that protecting only 1% of salient weights can largely retain performance. AWQ therefore searches for the optimal per-channel scaling. #PTQ #W4A16 #Outlier |
| AAAI24 Oral OWQ: Outlier-Aware Weight Quantization for Efficient Fine-Tuning and Inference of Large Language Models Changhun Lee, Jungyu Jin, Taesu Kim, Hyungjun Kim, Eunhyeok Park [Github](https://github.com/xvyaward/owq) | | Outlier-aware weight quantization (OWQ) aims to minimize the memory footprint through low-precision representation. It uses Hessian matrices to prioritize a small, structured subset of weights and keeps this subset in high precision, which makes it a mixed-precision quantization method. The final model uses 3.1 bits and achieves performance comparable to 4-bit OPTQ. Moreover, it incorporates Weak Column Tuning, based on PEFT, to further boost quality on zero-shot tasks. #PTQ #Mixed-Precision #3-bit #PEFT |
| EMNLP23 Outlier Suppression+: Accurate quantization of large language models by equivalent and optimal shifting and scaling Xiuying Wei, Yunchen Zhang, Yuhang Li, Xiangguo Zhang, Ruihao Gong, Jinyang Guo, Xianglong Liu Github | | In PTQ of LLMs, outliers are concentrated in specific channels and are asymmetric across channels. To address this, Outlier Suppression+ (OS+), a PTQ framework, applies channel-wise shifting for asymmetry and channel-wise scaling for concentration, together with a fast and stable scheme for calculating the shifting and scaling values. (1) Channel-wise shifting reshapes asymmetric distributions into symmetric ones. (2) Channel-wise scaling scales the outliers down to a threshold, resulting in a unified range across channels. (3) A unified migration pattern ensures equivalence to the original results. #PTQ #Outlier |
| EMNLP23 LLM-FP4: 4-Bit Floating-Point Quantized Transformers Shih-yang Liu, Zechun Liu, Xijie Huang, Pingcheng Dong, Kwang-Ting Cheng Github | | LLM-FP4 quantizes both weights and activations to FP4 in a post-training manner. Compared with INT quantization, FP quantization is more flexible and can better handle long-tail or bell-shaped distributions. However, FP quantization is sensitive to the exponent bits and clipping range, so LLM-FP4 searches for the optimal quantization parameters. The paper also observes a pattern of high inter-channel variance and low intra-channel variance in activations, and therefore employs per-channel activation quantization. #PTQ #FP4 |
| Arxiv23 LLM-QAT: Data-Free Quantization Aware Training for Large Language Models Zechun Liu, Barlas Oguz, Changsheng Zhao, Ernie Chang, Pierre Stock, Yashar Mehdad, Yangyang Shi, Raghuraman Krishnamoorthi, Vikas Chandra | | LLM-QAT is a data-free distillation method that leverages generations produced by the pre-trained model, which also serves as the teacher model. Besides quantizing the weights and activations, LLM-QAT also quantizes the KV cache, which helps increase throughput and support long sequences. The experiments go down to 4 bits and are conducted on a single 8-GPU training node. #QAT #KVQuant #KD |
| Arxiv23 BitNet: Scaling 1-bit Transformers for Large Language Models Hongyu Wang, Shuming Ma, Li Dong, Shaohan Huang, Huaijie Wang, Lingxiao Ma, Fan Yang, Ruiping Wang, Yi Wu, Furu Wei Github | | BitNet focuses on QAT for 1-bit LLMs. It employs low-precision binary weights and quantized 8-bit activations, while maintaining high precision for the optimizer states and gradients during training. BitLinear is proposed as a plug-and-play module that replaces the linear modules in the Transformer. For training, (1) it employs the straight-through estimator (STE) to approximate the gradient; (2) it maintains latent weights in a high-precision format to accumulate the parameter updates; (3) it employs a large learning rate to mitigate the biased gradient. #QAT #1-bit |
| NeurIPS23 QLoRA: Efficient Finetuning of Quantized LLMs Tim Dettmers, Artidoro Pagnoni, Ari Holtzman, Luke Zettlemoyer Github | | QLoRA aims to reduce the memory usage of LLM fine-tuning by combining LoRA with a 4-bit quantized pretrained model. Specifically, QLoRA introduces (1) 4-bit NormalFloat (NF4), a data type that is information-theoretically optimal for normally distributed weights; (2) double quantization to reduce the memory footprint; (3) paged optimizers to manage memory spikes. #NF4 #4-bit #LoRA |
| NeurIPS23 QuIP: 2-Bit Quantization of Large Language Models with Guarantees Jerry Chee, Yaohui Cai, Volodymyr Kuleshov, Christopher De Sa Github | | This paper introduces Quantization with Incoherence Processing (QuIP), which is based on the insight that quantization benefits from incoherent weight and Hessian matrices. It consists of two steps: (1) an adaptive rounding procedure that minimizes a quadratic proxy objective; (2) pre- and post-processing that ensures weight and Hessian incoherence using random orthogonal matrices. According to the paper, QuIP makes two-bit LLM compression viable for the first time. #PTQ #2-bit #Rotation |
| ICLR24 Spotlight OmniQuant: Omnidirectionally Calibrated Quantization for Large Language Models Wenqi Shao, Mengzhao Chen, Zhaoyang Zhang, Peng Xu, Lirui Zhao, Zhiqian Li, Kaipeng Zhang, Peng Gao, Yu Qiao, Ping Luo Github | | OmniQuant is a framework between PTQ and QAT, aiming to attain the performance of QAT while maintaining the time and data efficiency of PTQ. OmniQuant requires only 1.6h for LLaMA-7B, while LLM-QAT requires 90h and SmoothQuant requires 10min. Specifically, OmniQuant freezes the original full-precision weights and trains only a few learnable quantization parameters, through Learnable Weight Clipping (LWC) and Learnable Equivalent Transformation (LET). #PTQ #QAT #Learnable #Transformation |
| ICLR24 PB-LLM: Partially Binarized Large Language Models Yuzhang Shang, Zhihang Yuan, Qiang Wu, Zhen Dong Github | | PB-LLM is a mixed-precision quantization framework that filters out a small ratio of salient weights and keeps them at a higher bit-width. It analyzes performance under both PTQ and QAT settings. Under PTQ, it reconstructs the binarized weight matrix in the manner of GPTQ. Under QAT, it freezes the salient weights during training. #PTQ #QAT #1-bit |
| ICML24 BiLLM: Pushing the Limit of Post-Training Quantization for LLMs Wei Huang, Yangdong Liu, Haotong Qin, Ying Li, Shiming Zhang, Xianglong Liu, Michele Magno, Xiaojuan Qi Github | | BiLLM is the first 1-bit post-training quantization framework for pretrained LLMs. BiLLM splits the weights into salient and non-salient ones. For the salient weights, it proposes a binary residual approximation strategy. For the non-salient weights, it proposes an optimal splitting search to group and binarize them independently. BiLLM achieves a perplexity of 8.41 on LLaMA2-70B with only 1.08 bits. #PTQ #1-bit |
| ICML24 AQLM: Extreme Compression of Large Language Models via Additive Quantization Github | | |
| ACL24 BitDistiller: Unleashing the Potential of Sub 4-bit LLMs via Self-Distillation Dayou Du, Yijia Zhang, Shijie Cao, Jiaqi Guo, Ting Cao, Xiaowen Chu, Ningyi Xu Github | | BitDistiller is a QAT framework that uses knowledge distillation to boost performance at sub-4-bit precision. BitDistiller (1) incorporates a tailored asymmetric quantization and clipping technique to preserve the fidelity of the quantized weights and (2) proposes a Confidence-Aware Kullback-Leibler Divergence (CAKLD) as the self-distillation loss. Experiments cover 3-bit and 2-bit configurations. #QAT #2-bit #3-bit #KD |
| ACL24 DB-LLM: Accurate Dual-Binarization for Efficient LLMs Hong Chen, Chengtao Lv, Liang Ding, Haotong Qin, Xiabin Zhou, Yifu Ding, Xuebo Liu, Min Zhang, Jinyang Guo, Xianglong Liu, Dacheng Tao | | DB-LLM introduces Flexible Dual Binarization (FDB), which splits 2-bit quantized weights into two independent sets of binaries (similar to BiLLM). It also proposes Deviation-Aware Distillation to focus differently on various samples. DB-LLM is a QAT framework targeting the W2A16 setting. #QAT #Binarization #2-bit |
| ACL24 Findings IntactKV: Improving Large Language Model Quantization by Keeping Pivot Tokens Intact Ruikang Liu, Haoli Bai, Haokun Lin, Yuening Li, Han Gao, Zhengzhuo Xu, Lu Hou, Jun Yao, Chun Yuan | | This paper unveils a previously overlooked type of outlier in LLMs. Such outliers are found to allocate most of the attention scores to the initial tokens of the input, termed pivot tokens, which are crucial to the performance of quantized LLMs. Given that, the paper proposes IntactKV, which generates the KV cache of pivot tokens losslessly from the full-precision model. The approach is simple and easy to combine with existing quantization solutions, with no extra inference overhead. In addition, IntactKV can be calibrated as additional LLM parameters to further boost quantized LLMs at minimal training cost. #Weight #Outliers |
| Arxiv24 SliM-LLM: Salience-Driven Mixed-Precision Quantization for Large Language Models Wei Huang, Haotong Qin, Yangdong Liu, Yawei Li, Xianglong Liu, Luca Benini, Michele Magno, Xiaojuan Qi Github | | This paper presents Salience-Driven Mixed-Precision Quantization for LLMs, called SliM-LLM, targeting 2-bit mixed-precision quantization. Specifically, SliM-LLM involves two techniques: (1) Salience-Determined Bit Allocation (SBA): by minimizing the KL divergence between the original output and the quantized output, it finds the best bit assignment for each group. (2) Salience-Weighted Quantizer Calibration: by considering the element-wise salience within each group, it searches for the calibration parameters. #MixedPrecision #2-bit |
| Arxiv24 AdpQ: A Zero-shot Calibration Free Adaptive Post Training Quantization Method for LLMs | | This paper formulates the outlier weight identification problem in PTQ in terms of shrinkage from statistical ML. By applying an Adaptive LASSO regression model, AdpQ ensures that the distribution of the quantized weights stays close to that of the original weights, thus eliminating the need for calibration data. The LASSO regression employs L1 regularization and minimizes the KL divergence between the original and quantized weights. The experiments mainly focus on 3-bit and 4-bit quantization. #PTQ #Regression |
| Arxiv24 Integer Scale: A Free Lunch for Faster Fine-grained Quantization for LLMs Qingyuan Li, Ran Meng, Yiduo Li, Bo Zhang, Yifan Lu, Yerui Sun, Lin Ma, Yuchen Xie | | Integer Scale is a PTQ framework that requires no extra calibration and maintains performance. It is a fine-grained quantization method that can be used plug-and-play. Specifically, it reorders the sequence of costly I32toF32 type conversions. #PTQ #W4A16 #W4A8 |
| Arxiv24 Outliers and Calibration Sets have Diminishing Effect on Quantization of Modern LLMs Davide Paglieri, Saurabh Dash, Tim Rocktäschel, Jack Parker-Holder | | This paper evaluates the effect of the calibration set on the performance of LLM quantization, especially on hidden activations. A calibration set can distort the quantization range and negatively impact performance. The paper shows that different models respond differently to quantization: (1) OPT is highly susceptible to outliers, with results varying across calibration sets. (2) Newer models such as Llama-2-7B, Llama-3-8B, and Mistral-7B are more robust. These findings suggest a shift in PTQ strategies: more emphasis should be placed on optimizing inference speed than on outlier preservation. #Analysis #Evaluation #Finding |
| Arxiv24 Effective Interplay between Sparsity and Quantization: From Theory to Practice Simla Burcu Harma, Ayan Chakraborty, Elizaveta Kostenok, Danila Mishin, et al. Github | | This paper examines the interplay between sparsity and quantization and evaluates whether their combination affects the final performance of LLMs. It proves theoretically that applying sparsity before quantization is the optimal order, minimizing the computation error. The experiments involve OPT, LLaMA, and ViT. Findings: (1) sparsity and quantization are not orthogonal; (2) the interaction between sparsity and quantization significantly harms performance, with quantization error playing the dominant role in the degradation. #Theory #Sparsity |
| Arxiv24 PV-Tuning: Beyond Straight-Through Estimation for Extreme LLM Compression Github | | |
| Arxiv24 I-LLM: Efficient Integer-Only Inference for Fully-Quantized Low-Bit Large Language Models Xing Hu, Yuan Chen, Dawei Yang, Zhihang Yuan, Jiangyong Yu, Chen Xu, Sifan Zhou | | I-LLM is a PTQ framework targeting integer-only quantization. The authors identify large fluctuations of activations across channels and tokens. (1) It develops Fully-Smooth Block-Reconstruction (FSBR) to aggressively smooth inter-channel variations of all activations and weights. (2) It proposes Dynamic Integer-only MatMul (DI-MatMul) to dynamically quantize the input and output with integer-only operations. (3) It proposes a series of bit-shift-based operators to execute non-linear operations, including DI-ClippedSoftmax, DI-Exp, and DI-Normalization. #PTQ #Int-Only #W4A4 |
| ICCAD24 Fast and Efficient 2-bit LLM Inference on GPU: 2/4/16-bit in a Weight Matrix with Asynchronous Dequantization Jinhao Li, Jiaming Xu, Shiyao Li, Shan Huang, Jun Liu, Yaoxiu Lian, Guohao Dai | | This paper first identifies three challenges: (1) uneven distribution in weights; (2) speed degradation caused by sparse outliers; (3) time-consuming dequantization on GPUs. To tackle these, it proposes three techniques: (1) intra-weight mixed-precision quantization; (2) exclusive 2-bit sparse outliers; (3) asynchronous dequantization. #QAT #2-bit |
| Arxiv24 Mixture of Scales: Memory-Efficient Token-Adaptive Binarization for Large Language Models Dongwon Jo, Taesu Kim, Yulhwa Kim, Jae-Joon Kim | | The paper proposes a novel binarization technique for LLMs called Mixture of Scales (BinaryMoS). Unlike conventional binarization methods that use a single scaling factor, BinaryMoS employs multiple scaling experts that are dynamically combined based on the input token to generate token-adaptive scaling factors. This token-adaptive approach enhances the representational power of binarized LLMs while maintaining the memory efficiency of traditional binarization techniques. Experiments show that BinaryMoS outperforms previous binarization methods, and even 2-bit quantization approaches, on various NLP tasks, while keeping a model size similar to that of static binarization techniques. #1-bit #PTQ #QAT |
| Arxiv24 QuaRot: Outlier-Free 4-Bit Inference in Rotated LLMs Saleh Ashkboos, Amirkeivan Mohtashami, Maximilian L. Croci, Bo Li, Martin Jaggi, Dan Alistarh, Torsten Hoefler, James Hensman Github | | This paper introduces a novel rotation-based quantization scheme that can quantize the weights, activations, and KV cache of LLMs to 4 bits. QuaRot rotates the LLM to remove outliers from the hidden state. It applies randomized Hadamard transformations to the weight matrices without changing the model output. Applying this transformation to the attention module enables KV cache quantization. #PTQ #4bit #Rotation |
| Arxiv24 SpinQuant:LLM Quantization with Learned Rotations Zechun Liu, Changsheng Zhao etc. |

|

Rotating activation or weight matrices heps remove outliers and benefits quantizaion (rotational invariance property). They first identify a collection of applicable rotation parameterizations that lead to identical outputs in full-precision Transformer. They find that soem random rotations lead to better quantization than others. Then, SpinQuant was proposed to optimize the rotation matrices with Cayley optimization on validation dataset. Specifically, them employ Cayley SGD method to optimize the rotation matrix on the Stiefel manifold. #PTQ #Rotation #4bit |

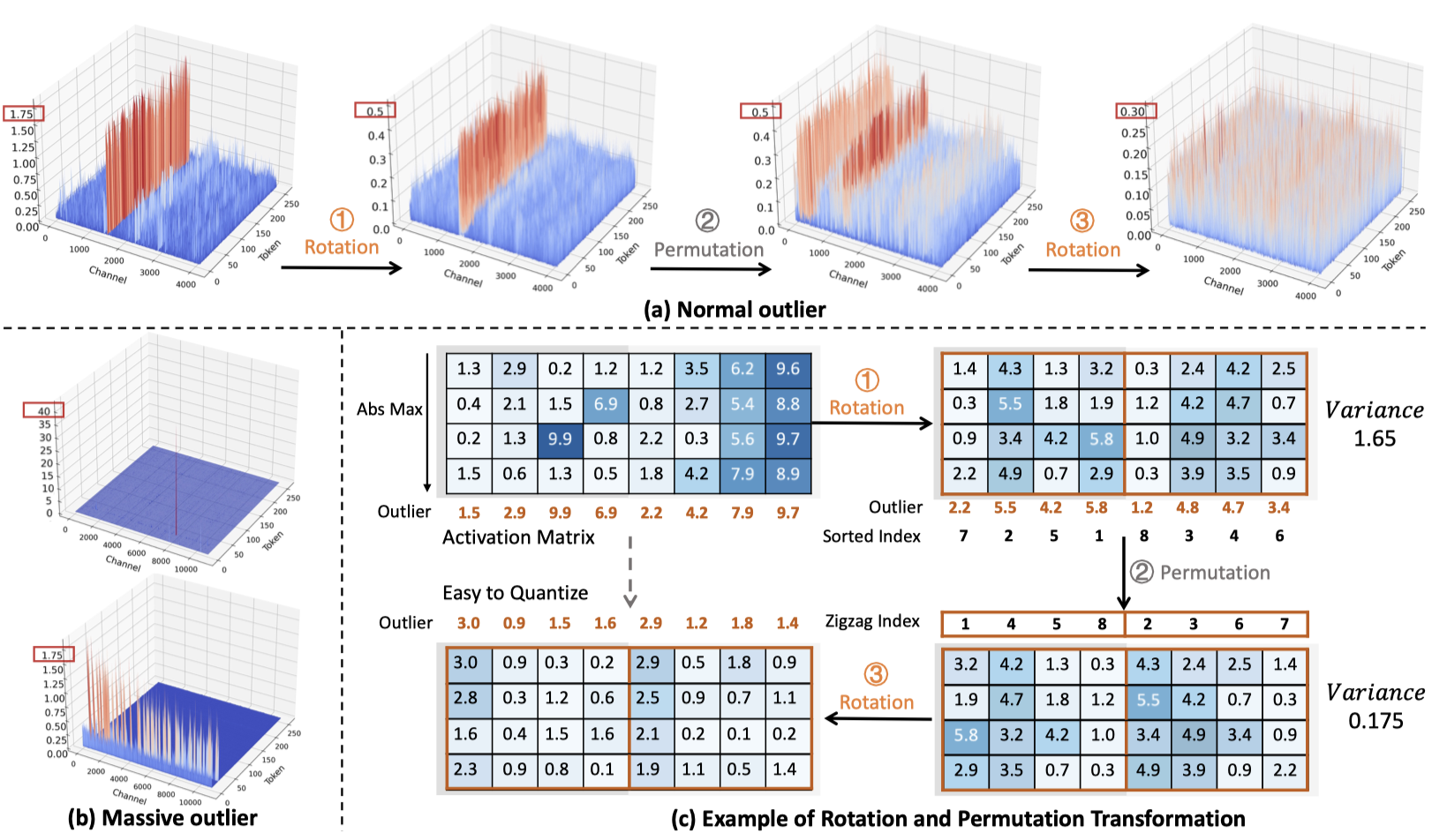

| NeurIPS24 Oral DuQuant: Distributing Outliers via Dual Transformation Makes Stronger Quantized LLMs Haokun Lin, Haobo Xu, Yichen Wu, Jingzhi Cui, Yingtao Zhang, Linzhan Mou, Linqi Song, Zhenan Sun, Ying Wei | | This paper finds that extremely large (massive) outliers exist at the down_proj layer of FFN modules. It introduces DuQuant, a novel approach that uses rotation and permutation transformations to more effectively mitigate both massive and normal outliers. First, DuQuant constructs rotation matrices, using specific outlier dimensions as prior knowledge, to redistribute outliers to adjacent channels by block-wise rotation. Second, it employs a zigzag permutation to balance the distribution of outliers across blocks, thereby reducing block-wise variance. A subsequent rotation further smooths the activation landscape, enhancing model performance. DuQuant establishes new state-of-the-art baselines for 4-bit weight-activation quantization across various model types and downstream tasks. #PTQ #Rotation #4bit #WA |
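
Several entries tagged `#Rotation` above (QuIP, QuaRot, SpinQuant, DuQuant) rely on the same basic observation: multiplying activations by an orthogonal matrix and weights by its transpose leaves the layer output unchanged, while spreading an outlier channel over many channels. The sketch below illustrates only that observation with a fixed, normalized Hadamard matrix. It is not the procedure of any of these papers (which use random, randomized, learned, or block-wise rotations), and the helper names and toy values are assumptions made for illustration.

```python
def hadamard(n):
    """Sylvester construction of an n x n Hadamard matrix (n a power of two),
    scaled by 1/sqrt(n) so that the result is orthogonal."""
    if n < 1 or n & (n - 1):
        raise ValueError("n must be a power of two")
    h = [[1.0]]
    while len(h) < n:
        h = [row + row for row in h] + [row + [-v for v in row] for row in h]
    norm = n ** 0.5
    return [[v / norm for v in row] for row in h]


def matmul(a, b):
    """Plain-Python matrix product of two lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m):
    return [list(col) for col in zip(*m)]


# Hypothetical activations for one token: channel 2 is an outlier.
x = [[0.1, -0.2, 8.0, 0.3]]
# Hypothetical 4 x 2 weight matrix of a linear layer.
w = [[0.5, -0.1], [0.2, 0.4], [-0.3, 0.1], [0.05, 0.6]]

h = hadamard(4)
x_rot = matmul(x, h)                # rotated activations
w_rot = matmul(transpose(h), w)     # weights absorb the inverse rotation

y_ref = matmul(x, w)
y_rot = matmul(x_rot, w_rot)

print("max |x|      :", max(abs(v) for v in x[0]))
print("max |x_rot|  :", max(abs(v) for v in x_rot[0]))
print("outputs match:", all(abs(a - b) < 1e-9 for a, b in zip(y_ref[0], y_rot[0])))
```

In this toy case the largest activation magnitude drops from 8.0 to roughly 4.2 and all rotated channels end up with similar magnitudes, so a single quantization scale fits them better than it fits the original vector.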

2-bit evaluation

3-bit evaluation

Contributions to this repository are welcome! If you have any suggestions for new resources, or if you find any broken links or outdated information, please open an issue or submit a pull request.

This repository is licensed under the MIT License.