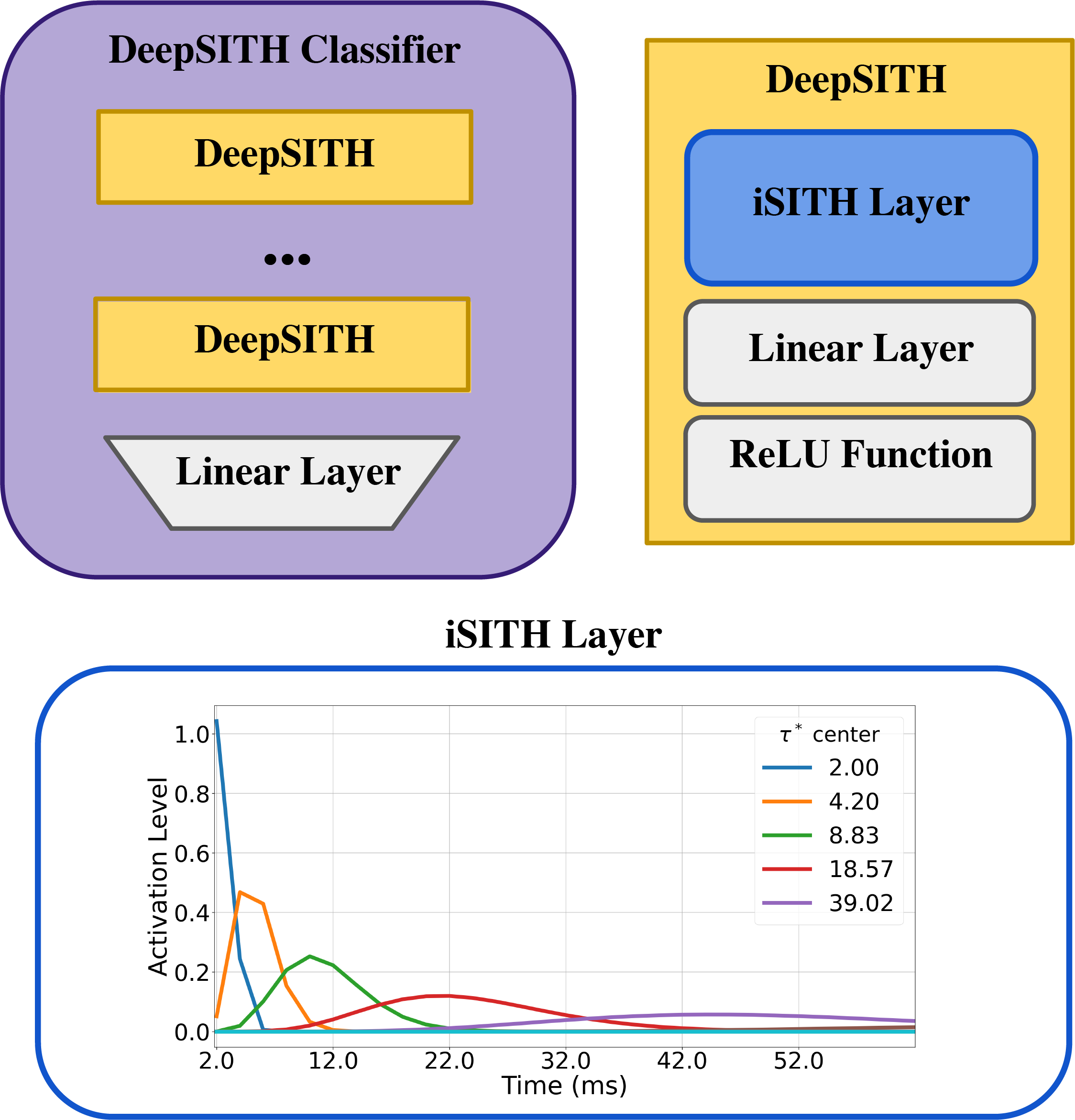

This repository tracks the use and validation of a specialized neural network, Scale Invariant Temporal History (SITH), that mimics the scale-invariant patterns of the brain's time cells. SITH's development was pioneered by the Computational Memory Lab at the University of Virginia; more information and code can be found at https://github.com/compmem/SITH_Layer. Our goal is to quantify how well SITH can identify actions from EEG data, in the hope of improving the prediction accuracy of current brain-computer interface (BCI) technology, by training DeepSITH and comparing it with an LSTM.

All work in this repository belongs to Gaurav Anand ([email protected]), Arshiya Ansari ([email protected]), Beverly Dobrenz ([email protected]), and Yibo Wang ([email protected]).

- Download the Grasp-and-Lift EEG Detection data.

- Download and install the SITH_Layer-master package from https://github.com/compmem/SITH_Layer

- See example_training.ipynb for installation and training. train_all.py trains the models for all subjects. The evaluation notebook evaluates the performance of both models on the holdout set.

- Submit a SLURM job to train on Rivanna (an example is provided in the Slurm_script folder).

Methods

For now, consider only one subject (subject1) for modeling. Predict only one event/channel at a time (since events overlap), and incorporate sliding-window standardization and filtering.
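The sliding-window standardization mentioned above could look roughly like the following. This is a minimal sketch, not the repository's implementation: the function name `sliding_window_standardize`, the trailing (causal) window, and the default window length of 500 samples are all assumptions made for illustration. The filtering step is left out because the README does not give its parameters.

```python
import numpy as np


def sliding_window_standardize(x, window=500, eps=1e-8):
    """Z-score a 1-D signal using only the trailing `window` samples.

    Hypothetical helper: the window length and the causal design are
    assumptions, not values taken from the repository.
    """
    x = np.asarray(x, dtype=np.float64).ravel()
    n = x.shape[0]
    # Prefix sums of x and x**2 give each window's mean and variance in O(1).
    csum = np.concatenate(([0.0], np.cumsum(x)))
    csum2 = np.concatenate(([0.0], np.cumsum(x * x)))
    end = np.arange(1, n + 1)              # exclusive end index of each window
    start = np.maximum(end - window, 0)    # windows are shorter near the start
    count = end - start
    mean = (csum[end] - csum[start]) / count
    var = (csum2[end] - csum2[start]) / count - mean ** 2
    std = np.sqrt(np.maximum(var, 0.0))    # clamp tiny negative rounding errors
    return (x - mean) / (std + eps)
```

Because each sample is normalized with statistics from its own past only, the same function can be applied to training and holdout data without leaking future information. For multi-channel EEG it would be applied to each channel separately.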

Load all eight series and split them into an 80% training set and a 20% validation/holdout set.
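One way to make the 80/20 split is shown below. The README does not say whether the split is random or chronological, so this sketch assumes a chronological split in which the final 20% of samples are held out; `chronological_split` is a hypothetical name, not a function from the repository.

```python
import numpy as np


def chronological_split(signal, labels, train_frac=0.8):
    """Split time-aligned arrays into a leading train part and a trailing holdout part.

    Assumption: the holdout set is the last (1 - train_frac) of the recording.
    """
    if len(signal) != len(labels):
        raise ValueError("signal and labels must have the same number of samples")
    cut = int(len(signal) * train_frac)
    return (signal[:cut], labels[:cut]), (signal[cut:], labels[cut:])
```

Keeping the holdout samples contiguous in time avoids placing overlapping windows from the same stretch of signal on both sides of the split.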

Use PyTorch's Dataset and DataLoader classes to control batch processing.

Use a sequence length of 50000 and a stride of 5000 for each minibatch, so that consecutive sequences overlap.
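The overlapping sequences described above can be produced by a small map-style dataset. The sketch below is an illustration under stated assumptions, not the code in train_util.py: the class name `WindowedEEG`, the array layout `(n_samples, n_channels)`, and the choice to drop a trailing partial window are all hypothetical. It depends only on NumPy, so PyTorch is not needed to run it.

```python
import numpy as np


class WindowedEEG:
    """Map-style dataset of overlapping windows over one recording.

    signal: array of shape (n_samples, n_channels)
    labels: array of shape (n_samples,) for the single event being predicted
    """

    def __init__(self, signal, labels, seq_len=50000, stride=5000):
        if len(signal) != len(labels):
            raise ValueError("signal and labels must have the same number of samples")
        self.signal = np.asarray(signal, dtype=np.float32)
        self.labels = np.asarray(labels, dtype=np.float32)
        self.seq_len = seq_len
        # Start index of every full window; a trailing partial window is dropped.
        self.starts = list(range(0, len(self.signal) - seq_len + 1, stride))

    def __len__(self):
        return len(self.starts)

    def __getitem__(self, i):
        s = self.starts[i]
        e = s + self.seq_len
        return self.signal[s:e], self.labels[s:e]
```

With the defaults, consecutive windows share 45000 of their 50000 samples. Since the class exposes `__len__` and `__getitem__`, it should be usable with `torch.utils.data.DataLoader`; in the repository's setting it would more naturally subclass `torch.utils.data.Dataset`.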

Note

The code was tested on Rivanna with a GPU (it may need some tweaks to run on CPU only).

The train_util.py and Deep_isith_EEG.py helper modules are required, as well as the SITH_Layer_master package.

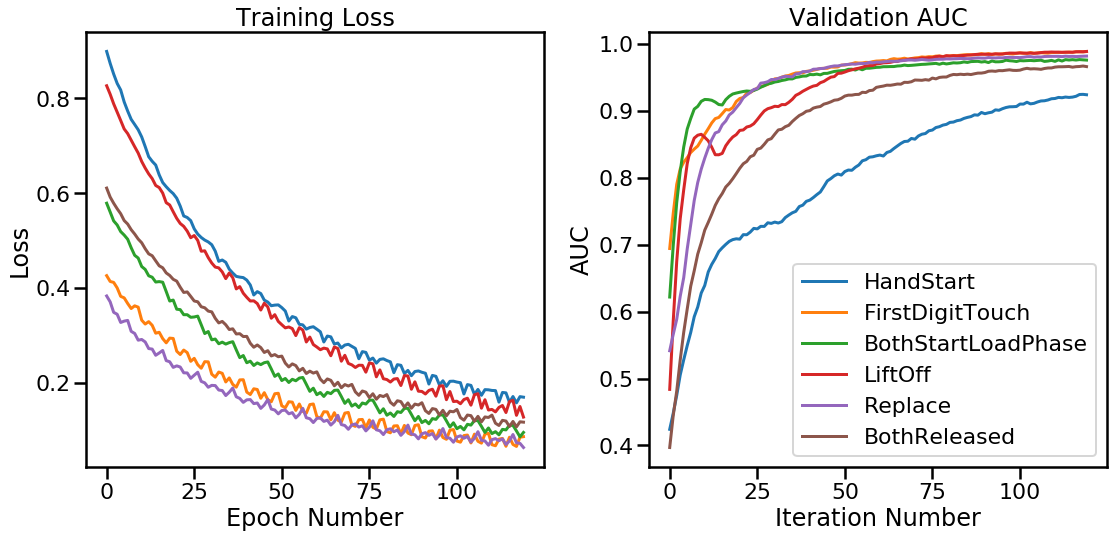

Result

Average AUC on the holdout set across all six events for Subject1 is 0.97.

Average AUC on the holdout set across all events and all subjects is 0.935.
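For readers who want to reproduce this kind of figure, an average AUC over events can be computed as sketched below. This assumes that "average AUC" means the unweighted mean of the per-event ROC AUC; the evaluation notebook may compute it differently. The helper names `roc_auc` and `mean_auc` are hypothetical, and the AUC is obtained from the Mann-Whitney U statistic rather than from a library call.

```python
import numpy as np


def roc_auc(y_true, y_score):
    """ROC AUC via the Mann-Whitney U statistic, with average ranks for ties."""
    y_true = np.asarray(y_true).ravel().astype(bool)
    y_score = np.asarray(y_score, dtype=np.float64).ravel()
    n_pos = int(y_true.sum())
    n_neg = y_true.size - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("AUC is undefined when only one class is present")
    # 1-based average rank of each score; tied scores share one rank.
    _, inverse, counts = np.unique(y_score, return_inverse=True, return_counts=True)
    last_rank = np.cumsum(counts)
    avg_rank = last_rank - (counts - 1) / 2.0
    ranks = avg_rank[inverse]
    u = ranks[y_true].sum() - n_pos * (n_pos + 1) / 2.0
    return float(u / (n_pos * n_neg))


def mean_auc(y_true, y_score):
    """Unweighted mean of per-event AUC over (n_samples, n_events) arrays."""
    y_true = np.asarray(y_true)
    y_score = np.asarray(y_score)
    per_event = [roc_auc(y_true[:, k], y_score[:, k]) for k in range(y_true.shape[1])]
    return float(np.mean(per_event))
```

Here each column of `y_true` holds the binary labels for one event and the matching column of `y_score` holds the model's predicted scores for that event on the holdout set.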

paper