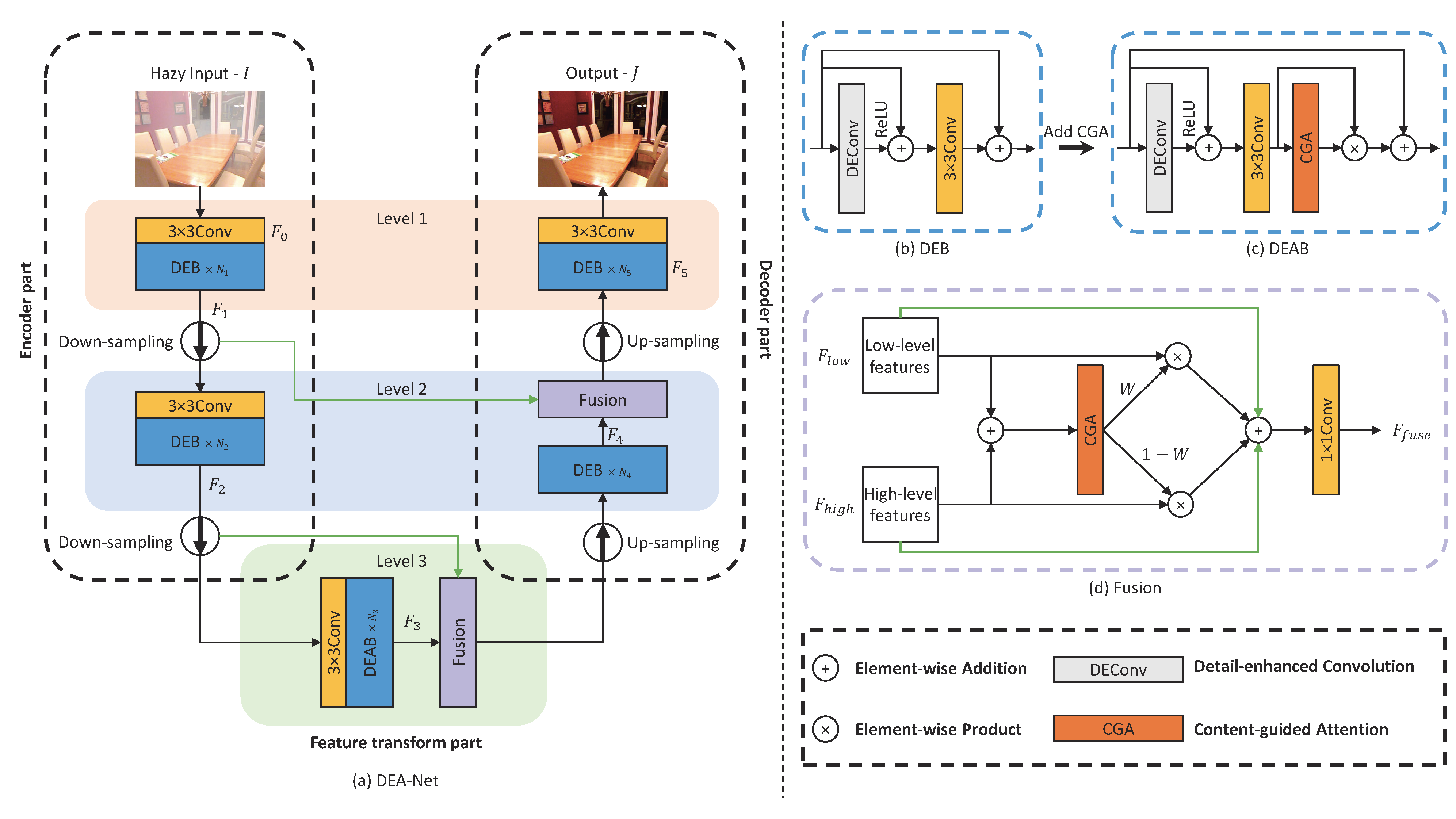

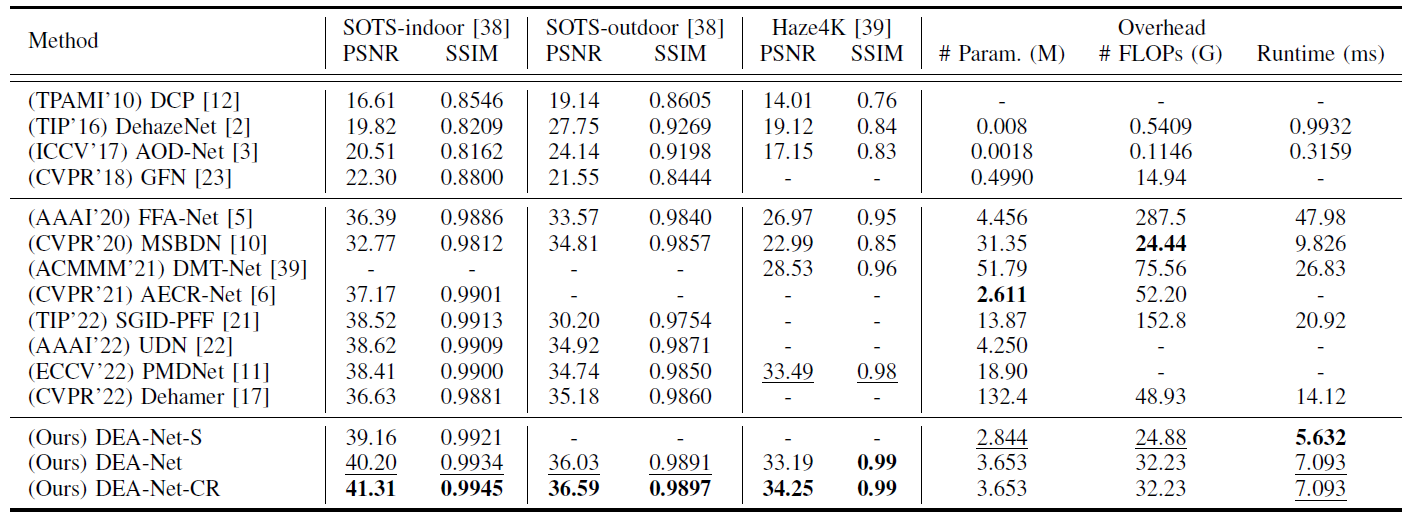

DEA-Net: Single image dehazing based on detail-enhanced convolution and content-guided attention

(IEEE TIP 2024)

Zixuan Chen, Zewei He, Zheming Lu

Zhejiang University

This repo is the official implementation of "DEA-Net: Single image dehazing based on detail-enhanced convolution and content-guided attention".

- Apr 21, 2024: 🔥🔥🔥 The implementation of re-parameterization is now available.

- Jan 10, 2024: 🔥🔥🔥 The implementation of DEConv and the training code for DEA-Net-CR are now available.

- Jan 08, 2024: 🎉🎉🎉 Accepted by IEEE TIP.

- Jan 11, 2023: Released the evaluation code and the re-parameterized pre-trained models.

- Clone this repo:

git clone https://github.com/cecret3350/DEA-Net.git

cd DEA-Net/

- Create a new conda environment and install dependencies:

conda create -n pytorch_1_10 python=3.8

conda activate pytorch_1_10

conda install pytorch==1.10.0 torchvision==0.11.0 torchaudio==0.10.0 cudatoolkit=11.3 -c pytorch -c conda-forge

pip install -r requirements.txt

When evaluating on OTS with JPEG images as input, make sure that Pillow 8.3.2 is installed. This ensures that JPEG images are decoded with the same algorithm during evaluation as during training.
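A quick way to confirm the Pillow pin before evaluating on OTS is sketched below. It is a minimal sketch, not part of the repo: the helper names are hypothetical, and it only reads the installed version through the standard-library importlib.metadata module without changing the environment. If the check fails, pip install pillow==8.3.2 inside the conda environment is one way to fix it.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional

# Version pinned in this README for OTS evaluation with JPEG inputs.
REQUIRED_PILLOW = "8.3.2"


def installed_pillow_version() -> Optional[str]:
    """Return the installed Pillow version string, or None if Pillow is missing."""
    try:
        return version("Pillow")
    except PackageNotFoundError:
        return None


def pillow_matches(installed: Optional[str], required: str = REQUIRED_PILLOW) -> bool:
    """Exact string match, since other Pillow releases may decode JPEGs differently."""
    return installed == required
```

Calling pillow_matches(installed_pillow_version()) returns True only in an environment where Pillow 8.3.2 is installed.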

- Download the datasets: [RESIDE] and [HAZE4K].

- Make sure the file structure is consistent with the following:

dataset/

├── HAZE4K

│ ├── test

│ │ ├── clear

│ │ │ ├── 1.png

│ │ │ ├── 2.png

│ │ │ └── ...

│ │ └── hazy

│ │ ├── 1_0.89_1.56.png

│ │ ├── 2_0.93_1.66.png

│ │ └── ...

│ └── train

│ ├── clear

│ │ ├── 1.png

│ │ ├── 2.png

│ │ └── ...

│ └── hazy

│ ├── 1_0.68_0.66.png

│ ├── 2_0.59_1.95.png

│ └── ...

├── ITS

│ ├── test

│ │ ├── clear

│ │ │ ├── 1400.png

│ │ │ ├── 1401.png

│ │ │ └── ...

│ │ └── hazy

│ │ ├── 1400_1.png

│ │ ├── ...

│ │ ├── 1400_10.png

│ │ ├── 1401_1.png

│ │ └── ...

│ └── train

│ ├── clear

│ │ ├── 1.png

│ │ ├── 2.png

│ │ └── ...

│ └── hazy

│ ├── 1_1_0.90179.png

│ ├── ...

│ ├── 1_10_0.98796.png

│ ├── 2_1_0.99082.png

│ └── ...

└── OTS

├── test

│ ├── clear

│ │ ├── 0001.png

│ │ ├── 0002.png

│ │ └── ...

│ └── hazy

│ ├── 0001_0.8_0.2.jpg

│ ├── 0002_0.8_0.08.jpg

│ └── ...

└── train

├── clear

│ ├── 0005.jpg

│ ├── 0008.jpg

│ └── ...

└── hazy

├── 0005_0.8_0.04.jpg

├── 0005_1_0.2.jpg

├── ...

├── 0008_0.8_0.04.jpg

└── ...
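Before training or evaluating, it can help to check that every hazy image in a split has a matching clear image. The sketch below is hypothetical (clear_stem, pair_hazy_with_clear and check_split are not part of the repo) and assumes, from the example names in the tree, that a hazy image's clear counterpart is identified by the part of its name before the first underscore, so 1400_10.png pairs with 1400.png and 0005_0.8_0.04.jpg pairs with 0005.jpg. Matching is done on the stem only, because the OTS test split pairs .jpg hazy inputs with .png clear images.

```python
from pathlib import Path
from typing import Dict, Iterable


def clear_stem(hazy_name: str) -> str:
    """ASSUMED convention: the clear image id is the hazy name up to the first '_'."""
    return Path(hazy_name).stem.split("_", 1)[0]


def pair_hazy_with_clear(hazy_names: Iterable[str], clear_names: Iterable[str]) -> Dict[str, str]:
    """Return {hazy_name: clear_name}; raise if a hazy image has no clear match."""
    # Index clear images by stem so that .jpg hazy files can match .png clear files.
    by_stem = {Path(name).stem: name for name in clear_names}
    pairs = {}
    for hazy in hazy_names:
        stem = clear_stem(hazy)
        if stem not in by_stem:
            raise FileNotFoundError(f"no clear image for {hazy!r} (expected stem {stem!r})")
        pairs[hazy] = by_stem[stem]
    return pairs


def check_split(root: str, dataset: str, split: str) -> Dict[str, str]:
    """Check that <root>/<dataset>/<split>/{clear,hazy} exist, then pair their files."""
    split_dir = Path(root) / dataset / split
    clear_dir, hazy_dir = split_dir / "clear", split_dir / "hazy"
    for directory in (clear_dir, hazy_dir):
        if not directory.is_dir():
            raise FileNotFoundError(f"missing directory: {directory}")
    clear = sorted(p.name for p in clear_dir.iterdir() if p.is_file())
    hazy = sorted(p.name for p in hazy_dir.iterdir() if p.is_file())
    return pair_hazy_with_clear(hazy, clear)
```

For example, check_split("dataset", "ITS", "test") run from the directory that contains dataset/ raises on the first missing directory or unmatched hazy image, and otherwise returns the full pairing.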

- Run the following script to train DEA-Net-CR from scratch:

CUDA_VISIBLE_DEVICES=0 python train.py --epochs 300 --iters_per_epoch 5000 --finer_eval_step 1400000 --w_loss_L1 1.0 --w_loss_CR 0.1 --start_lr 0.0001 --end_lr 0.000001 --exp_dir ../experiment/ --model_name DEA-Net-CR --dataset ITS
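The flag values in the training command imply a simple schedule, worked out below. The iteration arithmetic follows directly from the flags. Everything else is an assumption, not a description of train.py: the cosine shape of the decay from --start_lr to --end_lr, and a total loss of the form w_L1 · L1 + w_CR · L_CR, which is only suggested by the names --w_loss_L1 and --w_loss_CR. The helper names are hypothetical.

```python
import math

# Values copied from the training command above.
EPOCHS = 300
ITERS_PER_EPOCH = 5000
FINER_EVAL_STEP = 1_400_000
START_LR, END_LR = 1e-4, 1e-6
W_LOSS_L1, W_LOSS_CR = 1.0, 0.1

TOTAL_ITERS = EPOCHS * ITERS_PER_EPOCH                 # 1,500,000 iterations in total
FINER_EVAL_EPOCH = FINER_EVAL_STEP // ITERS_PER_EPOCH  # step 1,400,000 ends epoch 280


def cosine_lr(step: int, total: int = TOTAL_ITERS,
              start: float = START_LR, end: float = END_LR) -> float:
    """ASSUMED cosine decay from start_lr to end_lr over the whole run."""
    progress = min(max(step, 0), total) / total
    return end + 0.5 * (start - end) * (1.0 + math.cos(math.pi * progress))


def weighted_loss(loss_l1: float, loss_cr: float,
                  w_l1: float = W_LOSS_L1, w_cr: float = W_LOSS_CR) -> float:
    """ASSUMED combination suggested by the flag names: w_L1 * L1 + w_CR * CR."""
    return w_l1 * loss_l1 + w_cr * loss_cr
```

With these values, the last 1,500,000 − 1,400,000 = 100,000 iterations (epochs 281 to 300) come after --finer_eval_step. In PyTorch, the same decay shape per iteration is what torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, T_max=TOTAL_ITERS, eta_min=END_LR) produces when stepped once per iteration; whether train.py uses it is not stated here.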

- Download the pre-trained models from [Google Drive] or [Baidu Disk (password: dcyb)].

- Make sure the file structure is consistent with the following:

trained_models/

├── HAZE4K

│ └── PSNR3426_SSIM9885.pth

├── ITS

│ └── PSNR4131_SSIM9945.pth

└── OTS

└── PSNR3659_SSIM9897.pth
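The checkpoint file names appear to encode each model's scores: PSNR4131_SSIM9945.pth reads naturally as PSNR 41.31 dB and SSIM 0.9945. The helper below is a hypothetical sketch built on that assumed naming scheme (four digits each, with two decimal places for PSNR and four for SSIM). It is not part of the repo; it only decodes a name and builds the expected path in the layout above, which can help when checking that each file sits in the right folder.

```python
import re
from pathlib import Path
from typing import NamedTuple

# ASSUMED naming scheme: PSNR<4 digits>_SSIM<4 digits>.pth,
# read as PSNR = dd.dd dB and SSIM = 0.dddd.
_CKPT_NAME = re.compile(r"PSNR(\d{4})_SSIM(\d{4})\.pth$")


class CheckpointScore(NamedTuple):
    psnr_db: float
    ssim: float


def parse_checkpoint_name(name: str) -> CheckpointScore:
    """Decode the scores embedded in a checkpoint file name."""
    match = _CKPT_NAME.search(name)
    if match is None:
        raise ValueError(f"unexpected checkpoint name: {name!r}")
    psnr_digits, ssim_digits = match.groups()
    return CheckpointScore(int(psnr_digits) / 100, int(ssim_digits) / 10_000)


def checkpoint_path(dataset: str, name: str, root: str = "trained_models") -> Path:
    """Expected location of a checkpoint in the trained_models/ layout."""
    return Path(root) / dataset / name
```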

- Run the following script to evaluate the pre-trained models:

cd code/

python3 eval.py --dataset HAZE4K --model_name DEA-Net-CR --pre_trained_model PSNR3426_SSIM9885.pth

python3 eval.py --dataset ITS --model_name DEA-Net-CR --pre_trained_model PSNR4131_SSIM9945.pth

python3 eval.py --dataset OTS --model_name DEA-Net-CR --pre_trained_model PSNR3659_SSIM9897.pth

- (Optional) Run the following script to evaluate the pre-trained models and save the inference results:

cd code/

python3 eval.py --dataset HAZE4K --model_name DEA-Net-CR --pre_trained_model PSNR3426_SSIM9885.pth --save_infer_results

python3 eval.py --dataset ITS --model_name DEA-Net-CR --pre_trained_model PSNR4131_SSIM9945.pth --save_infer_results

python3 eval.py --dataset OTS --model_name DEA-Net-CR --pre_trained_model PSNR3659_SSIM9897.pth --save_infer_results

Inference results will be saved in experiment/<dataset>/<model_name>/<pre_trained_model>/
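Running the three evaluations one after another can be scripted. The sketch below is hypothetical glue, not part of the repo: it only rebuilds the eval.py commands listed above (using the current Python interpreter instead of a bare python3) and runs them from code/ with subprocess.run. The results_dir helper follows the experiment/<dataset>/<model_name>/<pre_trained_model>/ pattern literally; whether the directory name keeps the .pth suffix, and which directory experiment/ is relative to, are assumptions.

```python
import subprocess
import sys
from pathlib import Path
from typing import List

# Checkpoint names copied from the evaluation commands above.
CHECKPOINTS = {
    "HAZE4K": "PSNR3426_SSIM9885.pth",
    "ITS": "PSNR4131_SSIM9945.pth",
    "OTS": "PSNR3659_SSIM9897.pth",
}
MODEL_NAME = "DEA-Net-CR"


def build_eval_cmd(dataset: str, save_results: bool = False) -> List[str]:
    """Argument list equivalent to the README's eval.py command for one dataset."""
    cmd = [sys.executable, "eval.py", "--dataset", dataset,
           "--model_name", MODEL_NAME, "--pre_trained_model", CHECKPOINTS[dataset]]
    if save_results:
        cmd.append("--save_infer_results")
    return cmd


def results_dir(dataset: str, experiment_root: str = "experiment") -> Path:
    """ASSUMED literal reading of experiment/<dataset>/<model_name>/<pre_trained_model>/."""
    return Path(experiment_root) / dataset / MODEL_NAME / CHECKPOINTS[dataset]


def run_all(code_dir: str = "code", save_results: bool = False) -> None:
    """Evaluate every dataset in turn; check=True stops at the first failing run."""
    for dataset in CHECKPOINTS:
        subprocess.run(build_eval_cmd(dataset, save_results), cwd=code_dir, check=True)
```

From the repository root, run_all(save_results=True) would evaluate all three checkpoints and save their inference results. Passing an argument list rather than a shell string avoids quoting issues with the file names.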

If you find our paper and repo helpful for your research, please consider citing:

@article{chen2023dea,

title={DEA-Net: Single image dehazing based on detail-enhanced convolution and content-guided attention},

author={Chen, Zixuan and He, Zewei and Lu, Zhe-Ming},

journal={IEEE Transactions on Image Processing},

year={2024},

volume={33},

pages={1002-1015}

}

If you have any questions or suggestions about our paper and repo, please feel free to contact us via [email protected] or [email protected].