make install

make test1

make clean1 make uninstall

- -o use_path_request_style

- Add the parameter above.
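For context, `use_path_request_style` is an s3fs option that switches to path-style requests, which S3-compatible endpoints outside AWS often require. The sketch below shows how the option might appear on an s3fs command line. The bucket name, mount point, endpoint URL, and password-file path are placeholder assumptions, and the hypothetical helper only prints the command instead of running it.

```bash
#!/usr/bin/env bash
# Sketch: print an s3fs mount command that uses path-style requests.
# s3fs_mount_cmd is a hypothetical helper; all argument values are placeholders.
s3fs_mount_cmd() {
  local bucket=$1 mountpoint=$2 endpoint=$3 passwd_file=$4
  printf 's3fs %s %s -o url=%s -o passwd_file=%s -o use_path_request_style\n' \
    "$bucket" "$mountpoint" "$endpoint" "$passwd_file"
}
```

For example, `s3fs_mount_cmd my-bucket /var/s3 https://s3.example.com /etc/passwd-s3fs` prints a command line that can be reviewed before it is run.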

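apiVersion: v1
kind: ConfigMap
metadata:
  name: s3-config
data:
  ENDPOINT: <YOUR-S3-BUCKET-NAME>
  AK: <YOUR-AWS-TECH-USER-ACCESS-KEY>
  SK: <YOUR-AWS-TECH-USER-SECRET>

If you have demanding performance requirements and need behavior identical to a traditional file system, this approach is not suitable; consider using AWS EBS for Kubernetes persistent storage instead.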

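In general, S3 cannot provide the same functionality as a local file system. Specifically:

- random writes or appends require rewriting the entire file

- metadata operations such as listing directories perform poorly because of network latency

- eventual consistency can temporarily produce stale data (Amazon S3 Data Consistency Model)

- there are no atomic renames of files or directories

- there is no coordination between multiple clients mounting the same bucket

- there are no hard links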

Storage is arguably the most complex and important part of an application setup; once it is in place, roughly 80% of the work is done.

Mounting an S3 bucket into a pod using FUSE allows you to access the data as if it were on the local disk. The mount is a pointer to an S3 location, so the data is never synced locally. Once mounted, any pod can read from or even write to that directory without the need for explicit keys.

A typical use is importing and parsing large amounts of data into a database.

Generally S3 cannot offer the same performance or semantics as a local file system. More specifically:

- random writes or appends to files require rewriting the entire file

- metadata operations such as listing directories have poor performance due to network latency

- eventual consistency can temporarily yield stale data (Amazon S3 Data Consistency Model)

- no atomic renames of files or directories

- no coordination between multiple clients mounting the same bucket

- no hard links
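One way to work within the first limitation is to assemble a file on local disk and copy it to the mount in a single pass, instead of appending to it in place. The following is a minimal sketch under that assumption. The function name and the example paths are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: collect output locally, then copy it to the destination once.
# This avoids repeated appends on an S3-backed path, each of which would
# rewrite the whole object. write_then_copy is a hypothetical helper.
write_then_copy() {
  local dest=$1          # final path, for example under the S3 mount
  local tmp
  tmp=$(mktemp)          # local scratch file
  cat > "$tmp"           # read all of stdin into the scratch file first
  cp "$tmp" "$dest"      # one sequential write to the destination
  rm -f "$tmp"
}
```

For example, `some_generator | write_then_copy /var/s3/report.csv` writes the object once, where `some_generator` stands for any command that produces the data.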

You need a Kubernetes cluster, and the kubectl command-line tool must be configured to communicate with it. If you do not already have a cluster, you can create one by using Gardener.

Ensure that you have created the "imagePullSecret" in your cluster.

kubectl create secret docker-registry artifactory --docker-server=<YOUR-REGISTRY>.docker.repositories.sap.ondemand.com --docker-username=<USERNAME> --docker-password=<PASSWORD> --docker-email=<EMAIL> -n <NAMESPACE>

Change the settings in the build.sh file to match your Docker registry settings.

#!/usr/bin/env bash

########################################################################################################################

# PREREQUISITES

########################################################################################################################

#

# - ensure that you have a valid Artifactory or other Docker registry account

# - Create your image pull secret in your namespace

# kubectl create secret docker-registry artifactory --docker-server=<YOUR-REGISTRY>.docker.repositories.sap.ondemand.com --docker-username=<USERNAME> --docker-password=<PASSWORD> --docker-email=<EMAIL> -n <NAMESPACE>

# - change the settings below according to your setup

#

# usage:

# Call this script with the version to build and push to the registry. After build/push the

# yaml/* files are deployed into your cluster

#

# ./build.sh 1.0

#

VERSION=$1

PROJECT=kube-s3

REPOSITORY=cp-enablement.docker.repositories.sap.ondemand.com

# causes the shell to exit if any subcommand or pipeline returns a non-zero status.

set -e

# set debug mode

#set -x

.

.

.

.
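The script takes the version from its first argument without checking it. A guard along the following lines could catch a missing or malformed version early. It is not part of the original build.sh, and the function name and the accepted version pattern are assumptions.

```bash
#!/usr/bin/env bash
# Hypothetical helper, not from the original build.sh:
# accept only dotted numeric versions such as 1.0 or 2.3.1.
require_version() {
  local version=${1:-}
  if [[ ! $version =~ ^[0-9]+(\.[0-9]+)*$ ]]; then
    echo "usage: ./build.sh <version>, e.g. ./build.sh 1.0" >&2
    return 1
  fi
}
```

Calling `require_version "$1"` right after the `set -e` line would stop the script before any build or push step runs with an empty tag.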

Create the S3Fuse Pod and check the status:

# build and push the image to your docker registry

./build.sh 1.0

# check that the pods are up and running

kubectl get pods

Create a demo Pod and check the status:

kubectl apply -f ./yaml/example_pod.yaml

# wait a few seconds for the pod to be up and running...

kubectl get pods

# open a shell in the pod and check that /var/s3 is mounted with your S3 bucket content inside

kubectl exec -ti test-pd -- sh

# inside the pod

ls -la /var/s3
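Instead of waiting a fixed number of seconds, the check can be retried until it succeeds. The helper below is a generic polling sketch. Its name and parameters are assumptions, and it is not part of the repository.

```bash
#!/usr/bin/env bash
# Hypothetical helper: run a command up to <attempts> times,
# sleeping <delay> seconds after each failure.
wait_until() {
  local attempts=$1 delay=$2
  shift 2
  local i
  for ((i = 1; i <= attempts; i++)); do
    if "$@"; then
      return 0           # the command succeeded
    fi
    sleep "$delay"
  done
  return 1               # the command never succeeded
}
```

For example, `wait_until 30 2 kubectl exec test-pd -- ls /var/s3` retries the listing for up to about a minute. For pod readiness alone, `kubectl wait --for=condition=Ready pod/test-pd --timeout=120s` is a built-in alternative.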

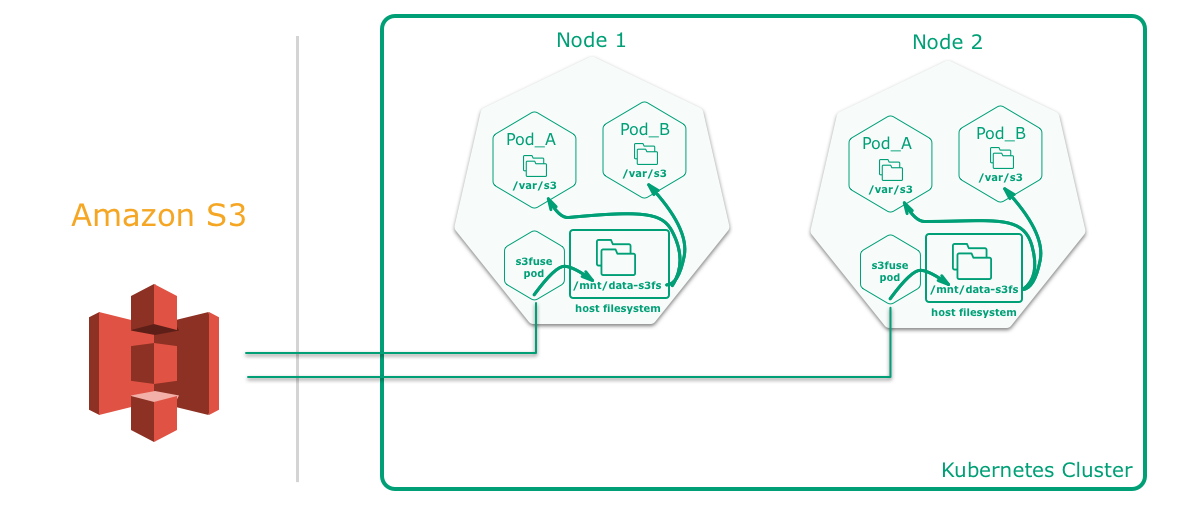

Docker Engine 1.10 added a feature that allows containers to share the host mount namespace. This makes it possible to mount an s3fs container file system to a host file system through a shared mount, providing persistent network storage with an S3 backend.

The key part is mountPath: /var/s3:shared, which mounts the volume as shared inside the pod. When the container starts, it mounts the S3 bucket onto /var/s3; the data then becomes available under /mnt/data-s3fs on the host, and thus to any other container or pod on that host that also has /mnt/data-s3fs mounted.
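To confirm on the host that the path really is a shared mount, the Linux mountinfo table can be inspected. The following sketch assumes a Linux host. The function name is hypothetical, and the optional second argument exists only so the check can be run against a saved copy of the table.

```bash
#!/usr/bin/env bash
# Sketch: succeed if the given mount point is listed as a shared mount.
# is_shared_mount is a hypothetical helper; it reads /proc/self/mountinfo by default.
is_shared_mount() {
  local mountpoint=$1 mountinfo=${2:-/proc/self/mountinfo}
  awk -v mp="$mountpoint" '
    $5 == mp {
      # optional fields start at column 7 and end at the "-" separator
      for (i = 7; i <= NF && $i != "-"; i++)
        if ($i ~ /^shared:/) found = 1
    }
    END { exit found ? 0 : 1 }
  ' "$mountinfo"
}
```

Running `is_shared_mount /mnt/data-s3fs && echo shared` on the node reports whether the propagation setting took effect.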