Large Language Models (LLMs) have revolutionized natural language processing, but their substantial computational and energy requirements pose significant challenges for sustainable deployment. While much attention has focused on reducing pretraining costs, the majority of a model's environmental impact occurs during inference—its primary operational mode. This creates an urgent need for optimization strategies that can reduce the ecological footprint of LLMs while maintaining their performance. Our research introduces a novel post-training pruning methodology that:

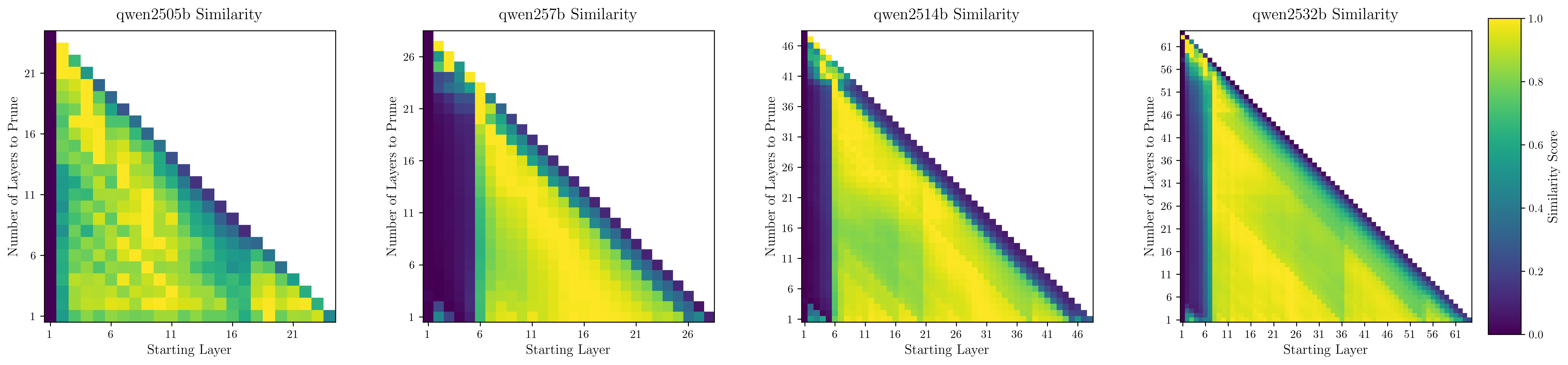

- Strategically identifies optimal pruning points through neighborhood similarity analysis

- Systematically removes architectural elements while preserving model functionality

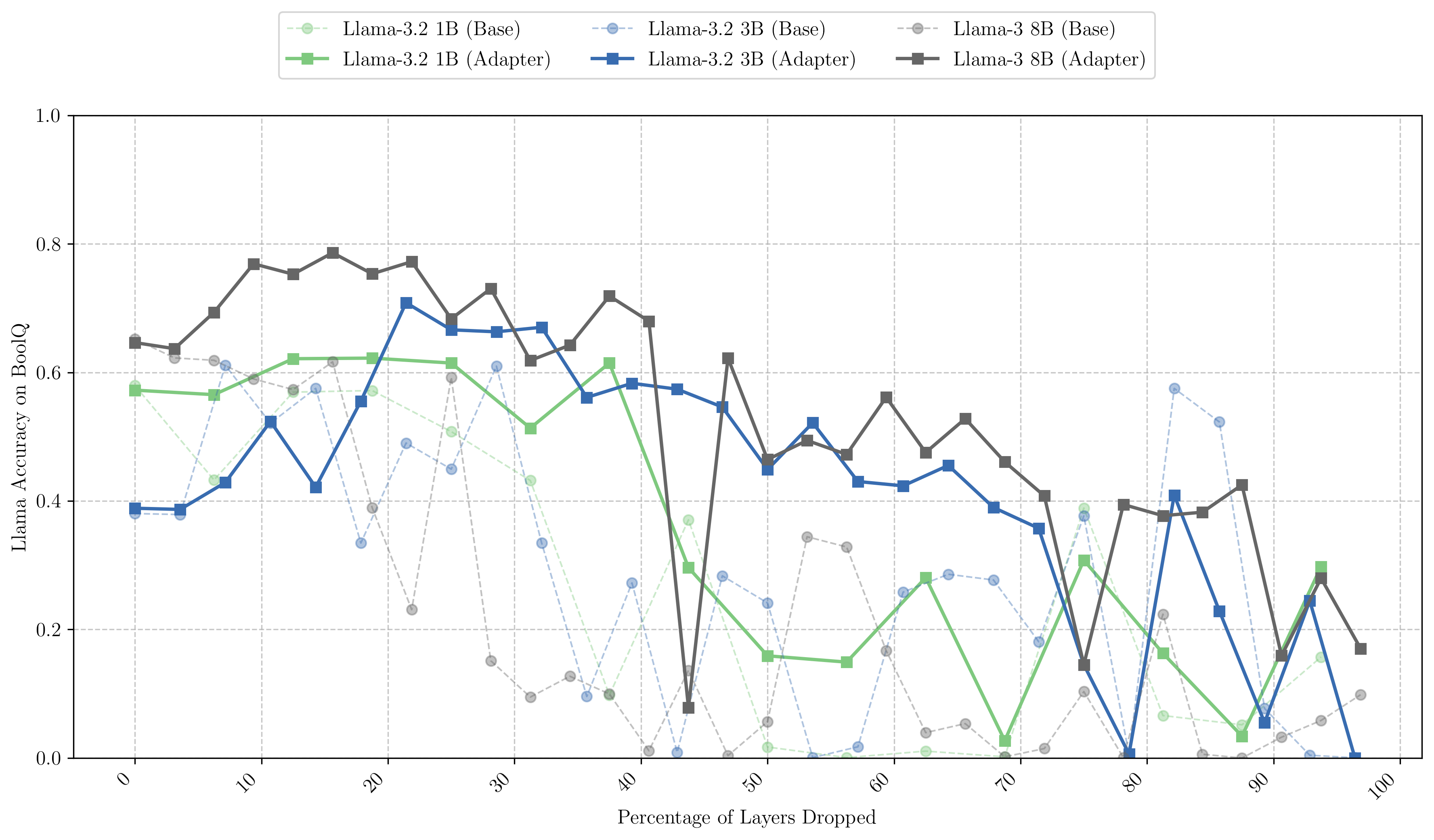

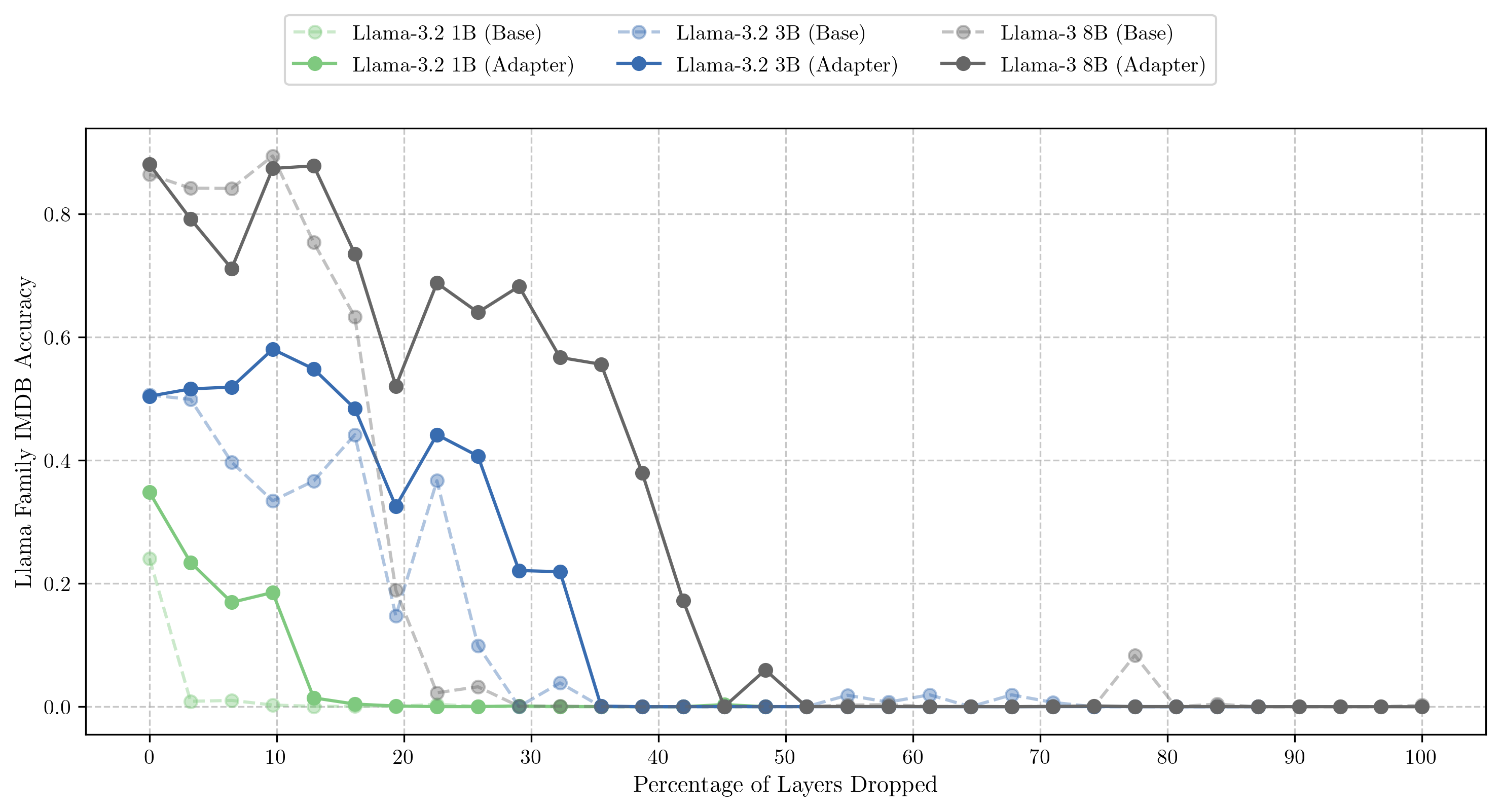

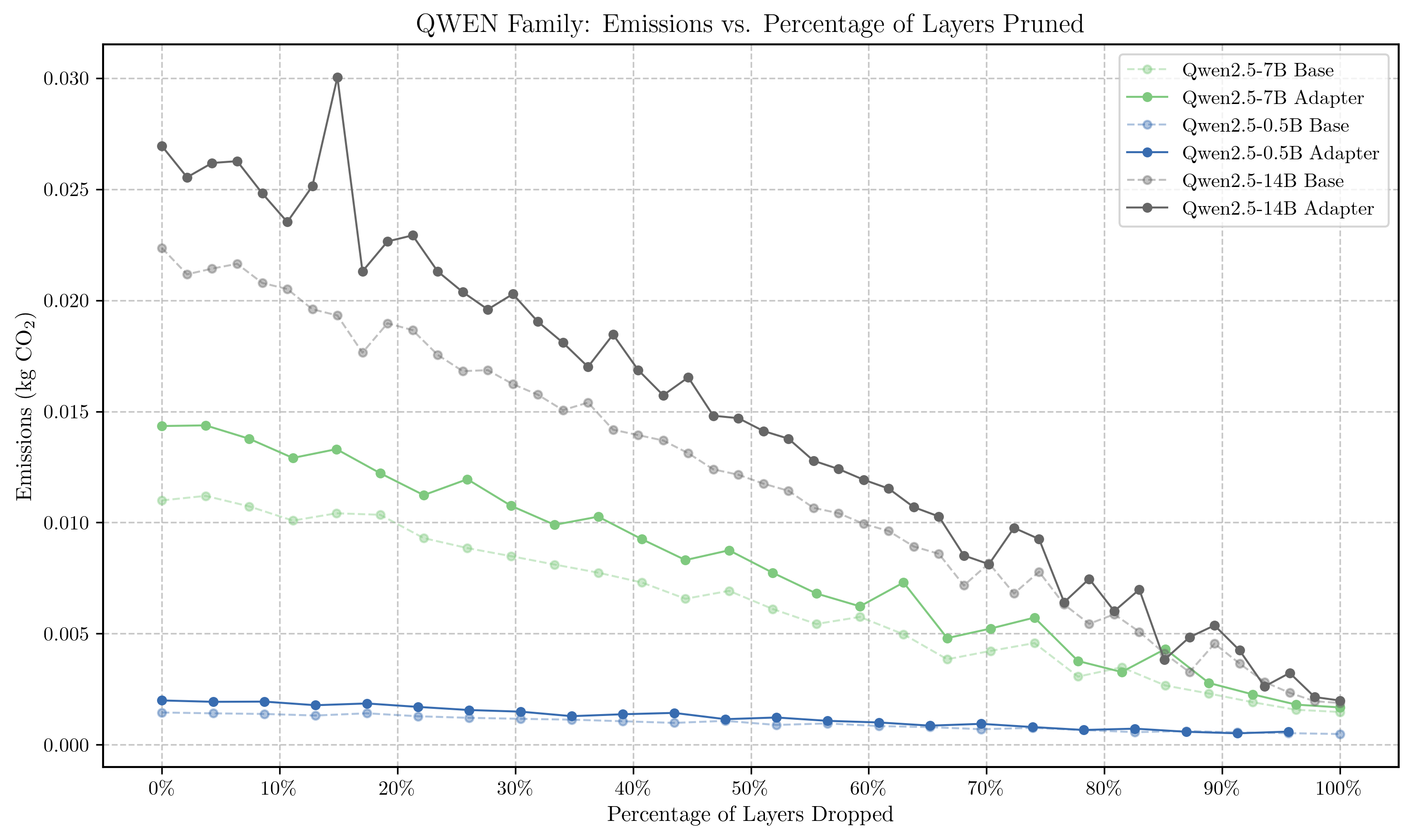

- Quantifies both performance impact and resource efficiency gains
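To make "neighborhood similarity analysis" concrete, the sketch below scores each candidate start layer *l* by the angular distance between the mean hidden state entering layer *l* and the one entering layer *l + n*. It then picks the block of *n* layers whose removal changes the representation least. This is a minimal sketch under assumptions: the angular-distance criterion, the function names (`angular_distance`, `best_prune_start`, `prune_block`), and the toy vectors are hypothetical and may not match what the repository's scripts actually compute.

```python
import math
from typing import List, Sequence


def angular_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Angular distance in [0, 1]: arccos(cosine similarity) / pi."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    cos = dot / (norm_a * norm_b)
    # Clamp to guard against floating-point drift outside [-1, 1].
    cos = max(-1.0, min(1.0, cos))
    return math.acos(cos) / math.pi


def best_prune_start(hidden: List[Sequence[float]], n: int) -> int:
    """Return the start layer l minimizing distance(hidden[l], hidden[l + n]).

    hidden[i] is a (hypothetical) mean hidden-state vector at the input of
    layer i, so len(hidden) == num_layers + 1.
    """
    if not 1 <= n < len(hidden):
        raise ValueError("block size n must satisfy 1 <= n < len(hidden)")
    scores = [
        angular_distance(hidden[l], hidden[l + n])
        for l in range(len(hidden) - n)
    ]
    # Index of the smallest distance; ties resolve to the earliest layer.
    return min(range(len(scores)), key=scores.__getitem__)


def prune_block(layers: list, start: int, n: int) -> list:
    """Drop the n consecutive layers beginning at index start."""
    return layers[:start] + layers[start + n:]


if __name__ == "__main__":
    # Toy 2-D "mean hidden states" for a 4-layer model (5 layer inputs).
    hidden = [[1.0, 0.0], [0.0, 1.0], [0.1, 1.0], [0.1, 0.9], [1.0, 1.0]]
    start = best_prune_start(hidden, n=2)
    print(start, prune_block(["L0", "L1", "L2", "L3"], start, n=2))
    # prints: 1 ['L0', 'L3']
```

In a real model the vectors would be averaged hidden-state tensors collected over a calibration set, and `prune_block` would operate on the model's list of decoder layers.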

The implementation includes a comprehensive evaluation across multiple NLP tasks (question answering, sentiment analysis, and summarization), along with perplexity measurements, to validate our approach. By optimizing models after training, we target the inference phase, where most of a model's environmental impact occurs, and so contribute to more sustainable large language model deployments.

This work was conducted as an undergraduate thesis project by:

- Paul Lambert (Undergraduate Researcher)

- Julia Hockenmaier, PhD (Professor, Research Adviser)

Department of Computer Science, University of Illinois at Urbana-Champaign

This project uses conda for environment management. To set up:

```bash
# Clone the repository
git clone git@github.com:hipml/llm-pruning-thesis.git
cd llm-pruning-thesis

# Create and activate conda environment
conda env create -f environment.yml
conda activate [env-name]
```

The main scripts are:

- `1-gpu-collector.py`: Multi-GPU script for capturing all layer hidden-state tensors for a given model
- `2-meantensor.py`: Processes mean tensors to find optimal pruning start points
- `multitrain.py`: Multi-GPU QLoRA training script that optimally prunes a block of *n* layers from a model and then heals it with an adapter trained on the `allenai/c4` dataset
- `eval.py`: Evaluates the pruned (and healed) models on:
  - Question answering (BoolQ)
  - Sentiment analysis (IMDB)
  - Summarization (xsum)
  - Perplexity measurements
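For reference, perplexity is conventionally the exponential of the mean negative log-likelihood per token. The minimal sketch below assumes you already have per-token natural-log probabilities from the model; the `perplexity` helper is hypothetical and is not taken from `eval.py`.

```python
import math
from typing import Iterable


def perplexity(token_log_probs: Iterable[float]) -> float:
    """PPL = exp(-(1/N) * sum(log p(x_i | x_<i))), using natural-log probabilities."""
    log_probs = list(token_log_probs)
    if not log_probs:
        raise ValueError("need at least one token log-probability")
    mean_nll = -sum(log_probs) / len(log_probs)
    return math.exp(mean_nll)
```

As a sanity check, a model that assigns probability 0.25 to every token has a perplexity of (approximately) 4, so lower values after healing indicate the adapter recovered more of the pruned model's language-modeling ability.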

For high-performance computing cluster execution, refer to the SLURM job scripts in the batch/ directory:

```bash
# Example SLURM job submission
sbatch batch/multitrain.sh llama3170b boolq
```

```
├── docs/          # Additional documentation + Paper
├── batch/         # SLURM batch scripts
├── models/        # Emissions data from QLoRA training
├── output/        # Generated outputs
│   └── viz/       # Visualization outputs
└── src/           # Source code files
    └── support/   # Helper classes and config files
```

Experimental results are organized as follows:

- Task evaluation results: `output/evaluation_results`
- Environmental impact data: `models/adapters/<model_name>/<pruned_layers>/emissions.csv`
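To compare the training footprint of different pruning configurations, the per-run `emissions.csv` files can be totalled. This sketch assumes the files follow CodeCarbon's default output format, with an `emissions` column (kg CO2-equivalent); both that column name and the `total_emissions` helper are assumptions, so check the header of an actual file before relying on it.

```python
import csv
from collections import defaultdict
from pathlib import Path
from typing import Dict


def total_emissions(adapters_root: str, column: str = "emissions") -> Dict[str, float]:
    """Sum one numeric column of every emissions.csv found at
    <adapters_root>/<model_name>/<pruned_layers>/emissions.csv.

    Results are keyed by "<model_name>/<pruned_layers>". The default column
    name is an assumption based on CodeCarbon's output format.
    """
    totals: Dict[str, float] = defaultdict(float)
    for path in sorted(Path(adapters_root).glob("*/*/emissions.csv")):
        key = f"{path.parent.parent.name}/{path.parent.name}"
        with path.open(newline="") as f:
            for row in csv.DictReader(f):
                value = row.get(column)
                if value:  # skip missing or blank cells
                    totals[key] += float(value)
    return dict(totals)
```

For example, `total_emissions("models/adapters")` would return one total per model and pruning configuration, which can be compared directly against the task scores in `output/evaluation_results`.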

While this project has reached its conclusion, we'd love to hear from anyone interested in this work. Please feel free to contact the author or submit a pull request.

This project is licensed under the University of Illinois/NCSA Open Source License - see the LICENSE.txt file for details.

If you use this code in your research, please cite:

```bibtex
@thesis{lambert2024llmpruning,
  author  = {Lambert, Paul},
  title   = {Post-Training Optimizations in Large Language Models: A Novel Pruning Approach},
  school  = {University of Illinois at Urbana-Champaign},
  year    = {2024},
  type    = {Bachelor's Thesis},
  advisor = {Hockenmaier, Julia}
}
```