An R package for robust analysis, plotting and meta analysis of data from A-B Single Case Experimental Designs (SCED), using exact tests, robust effect sizes and meta analysis of those effect sizes.

Ian Hussey ([email protected])

You may use, copy, distribute and modify this software, as long as you (a) track any changes or additions you make to it (e.g., by submitting pull requests) and (b) make your changes or additions available to others.

install.packages("devtools")

library(devtools)

install_github("ianhussey/SCED")

library(SCED)

See the vignette for an example workflow (vignettes/vignette.Rmd).

Simulate data that meets the required format of sced_analysis() and sced_plot() for testing/demonstration (via the simstudy package).
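As a rough illustration of what simulated A-B data might look like, the sketch below builds a long-format data frame in base R. The function name `simulate_ab_data`, its arguments and the column names (`Participant`, `Timepoint`, `Condition`, `Score`) are assumptions made for this example, and it uses `rnorm()` in place of the simstudy package; check the package documentation and the vignette for the format that sced_analysis() and sced_plot() actually require.

```r
# Hypothetical helper: simulate A-B data in long format, one row per
# participant x timepoint. Names and columns are illustrative assumptions.
simulate_ab_data <- function(participants = 4, timepoints_a = 8,
                             timepoints_b = 8, shift = 1.5, seed = 42) {
  set.seed(seed)
  n_per_person <- timepoints_a + timepoints_b
  # Condition labels for one participant: all A timepoints, then all B
  condition <- rep(c("A", "B"), times = c(timepoints_a, timepoints_b))
  # Mean shift applied to scores in condition B (in SD units)
  offset <- rep(c(0, shift), times = c(timepoints_a, timepoints_b))
  data.frame(
    Participant = rep(seq_len(participants), each = n_per_person),
    Timepoint   = rep(seq_len(n_per_person), times = participants),
    Condition   = rep(condition, times = participants),
    Score       = rnorm(participants * n_per_person) +
                  rep(offset, times = participants)
  )
}

sim <- simulate_ab_data()
head(sim)
```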

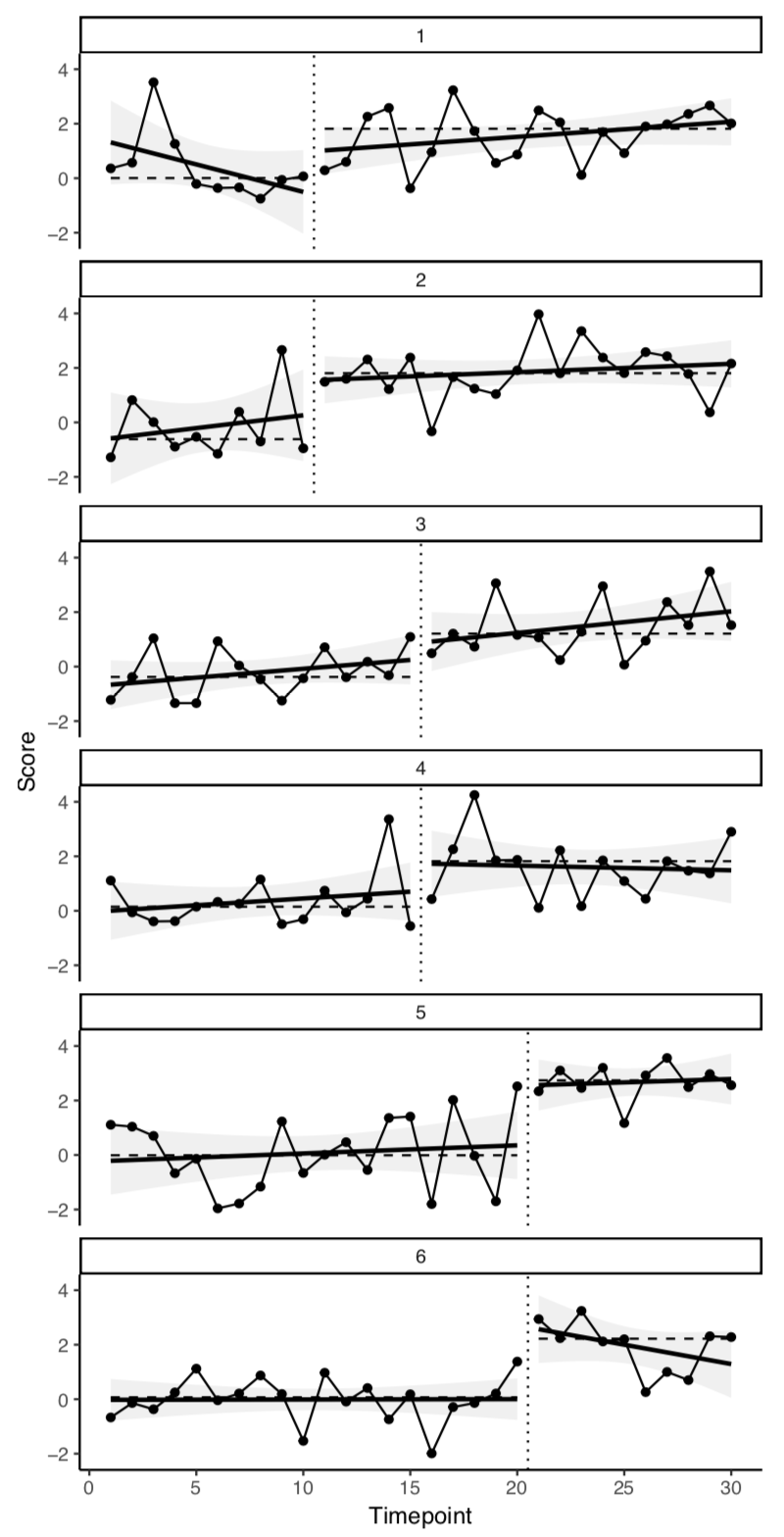

Panes represent individual participants, with ID numbers shown above each. Vertical dotted lines separate experimental conditions A and B (e.g., pre and post intervention). Horizontal dashed lines represent median values in each experimental condition.

Calculates the following statistics:

- An exact p value from a non-parametric permutation test (via the coin package). Permutation tests are insensitive to distributions and outliers. False positive rates are therefore equal to the alpha value, rather than approximate to it. The test assesses whether the values of the data points in condition B differ from those in condition A.

- Median difference score. Difference score between the median value of condition A and condition B. Median values are employed given their greater robustness. Unstandardized values such as the median difference score can be useful in order to assess clinical significance, and when used in conjunction with non-parametric effect sizes (such as A, below), which can suffer from floor/ceiling effects.

- Effect size A (Ruscio, 2008), a non-parametric, probabilistic effect size. Bootstrapping is used to calculate the median value and its 95% confidence intervals. This is also calculated via a permutation method, and is therefore robust/insensitive to outliers and distributions. The original, parametric versions of this test are referred to as the Area Under the Curve (AUC), the Common Language Effect Size (CLES), or the probability of superiority. A has an identical range (i.e., a probability between 0 and 1) and interpretation (i.e., the probability of a randomly selected time point in condition B being greater than a randomly selected time point in condition A). A is therefore also more robust than traditional effect size metrics for SCED such as the % overlap and the % greater than the median.

- Hedges' g effect size and its bootstrapped 95% confidence intervals. g is a variation of Cohen's d that is bias corrected for small sample sizes. It is included here for the sake of familiarity/comparison. However, it is sensitive to the ratio of data points in A vs. B, the normality of the distributions of data in each condition, and the equality of variances of A and B. Bootstrapped CIs serve to mitigate these assumptions somewhat.
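The statistics listed above can be sketched in base R for a single participant. This is a minimal illustration, not the package's implementation: the scores are invented, the function names are hypothetical, the permutation test enumerates group assignments directly rather than calling the coin package, and the bootstrap resamples within each condition, which may differ from what sced_analysis() does.

```r
# Scores for one hypothetical participant (values invented for illustration)
a <- c(3, 4, 2, 5, 4)      # condition A (e.g., pre intervention)
b <- c(6, 7, 5, 8, 4, 7)   # condition B (e.g., post intervention)

# Median difference score (B minus A), in the original units
median_difference <- median(b) - median(a)

# Ruscio's A: probability that a random B point exceeds a random A point,
# with ties counted as one half
ruscio_a <- function(a, b) {
  diffs <- outer(b, a, "-")
  (sum(diffs > 0) + 0.5 * sum(diffs == 0)) / length(diffs)
}

# Hedges' g: Cohen's d with the pooled SD, times a small-sample correction
hedges_g <- function(a, b) {
  n_a <- length(a)
  n_b <- length(b)
  pooled_sd <- sqrt(((n_a - 1) * var(a) + (n_b - 1) * var(b)) /
                      (n_a + n_b - 2))
  d <- (mean(b) - mean(a)) / pooled_sd
  d * (1 - 3 / (4 * (n_a + n_b) - 9))
}

# Exact two-sided permutation p value on the difference in means: enumerate
# every way of assigning the pooled scores to a group of size length(b)
exact_perm_p <- function(a, b) {
  pooled <- c(a, b)
  observed <- mean(b) - mean(a)
  idx <- combn(length(pooled), length(b))
  perm_diffs <- apply(idx, 2, function(i) mean(pooled[i]) - mean(pooled[-i]))
  # Small tolerance guards against floating point ties
  mean(abs(perm_diffs) >= abs(observed) - 1e-12)
}

# Percentile bootstrap for A: median and 95% confidence limits
set.seed(1)
boot_a <- replicate(2000, ruscio_a(sample(a, replace = TRUE),
                                   sample(b, replace = TRUE)))
ci_a <- quantile(boot_a, c(0.025, 0.5, 0.975))
```

Full enumeration is only practical for short series, since the number of assignments grows as `choose(length(a) + length(b), length(b))`.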

Conduct a random effects meta analysis of the robust standardized effect sizes (Ruscio's A values) from sced_analysis(). Produces a meta analytic effect size, 95% confidence intervals, 95% credibility intervals (also referred to as prediction intervals), measures of heterogeneity between participants, and a forest plot of the effect sizes and meta analysed effect size.
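To show how these outputs relate to one another, here is a base R sketch of a random effects meta analysis using the DerSimonian-Laird estimator. It is an assumption-laden illustration rather than the package's method: the effect sizes and sampling variances are invented, the source does not say which estimator or scale the package uses, and conventions for the prediction interval differ between tools (a t distribution with k - 2 degrees of freedom is used here).

```r
# Hypothetical per-participant effect sizes (yi) and sampling variances (vi);
# values are invented for illustration
yi <- c(0.85, 0.70, 0.92, 0.60, 0.78)
vi <- c(0.010, 0.015, 0.008, 0.020, 0.012)
k  <- length(yi)

# Fixed-effect weights and Cochran's Q
w        <- 1 / vi
mu_fixed <- sum(w * yi) / sum(w)
Q        <- sum(w * (yi - mu_fixed)^2)

# DerSimonian-Laird estimate of between-participant variance (tau^2)
C    <- sum(w) - sum(w^2) / sum(w)
tau2 <- max(0, (Q - (k - 1)) / C)

# Random-effects weights, pooled estimate and its standard error
w_re  <- 1 / (vi + tau2)
mu_re <- sum(w_re * yi) / sum(w_re)
se_re <- sqrt(1 / sum(w_re))

# 95% confidence interval for the pooled effect
conf_int <- mu_re + c(-1, 1) * qnorm(0.975) * se_re

# 95% prediction (credibility) interval for a new participant's true effect
pred_int <- mu_re + c(-1, 1) * qt(0.975, df = k - 2) * sqrt(tau2 + se_re^2)

# I^2: percentage of total variability attributed to heterogeneity
I2 <- max(0, (Q - (k - 1)) / Q) * 100
```

The prediction interval is at least as wide as the confidence interval because it adds the between-participant variance to the uncertainty in the pooled estimate.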

Rough a priori power analysis for SCED's between subjects meta analysis, based on the equations derived from Valentine et al. (2009) by Quintana (2017). Some approximations are made: e.g., Valentine et al. assume a between groups design and the use of Cohen's d rather than the SCED package's Hedges' g. The function therefore likely underestimates power by a small degree. Note that power analysis should not be used in isolation to determine sample size: the number of participants, timepoints, etc. should also take methodological recommendations for SCEDs into account.
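A commonly cited form of these power equations can be sketched as follows. Treat it as an approximation written under assumptions: the function and argument names are hypothetical, the heterogeneity multipliers are a convention rather than something stated here, and mapping "studies" to participants and "group size" to timepoints per condition is an assumption about how the package applies the equations.

```r
# Approximate power for a random effects meta analysis, using a normal
# approximation. All names are hypothetical.
meta_power <- function(es, n_per_group, k, heterogeneity = 1, alpha = 0.05) {
  # Sampling variance of a standardized mean difference in one unit,
  # assuming two equally sized groups
  v_within <- (2 * n_per_group) / (n_per_group^2) +
    es^2 / (2 * (2 * n_per_group))
  # Between-unit variance expressed as a multiple of v_within
  # (by convention 1/3 = low, 1 = moderate, 3 = high heterogeneity)
  v_between <- heterogeneity * v_within
  # Variance of the pooled effect across k units
  v_pooled <- (v_within + v_between) / k
  # Power of a two-sided test, ignoring the negligible opposite tail
  lambda <- es / sqrt(v_pooled)
  1 - pnorm(qnorm(1 - alpha / 2) - lambda)
}

# Example: 8 participants, 10 timepoints per condition, moderate heterogeneity
meta_power(es = 0.5, n_per_group = 10, k = 8)
```

Under this approximation, power rises with the number of units `k` and falls as heterogeneity increases.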

- Make explicit that the expected direction of effect is B > A, and add an option to reverse it (which involves more than inverting the probability in cases of ties).

- Change Ruscio's A point estimate to the whole sample estimate rather than the median bootstrap?

- Update screenshots in readme.

- Add other terminology for A from Parker (2009), and relate A to tau.