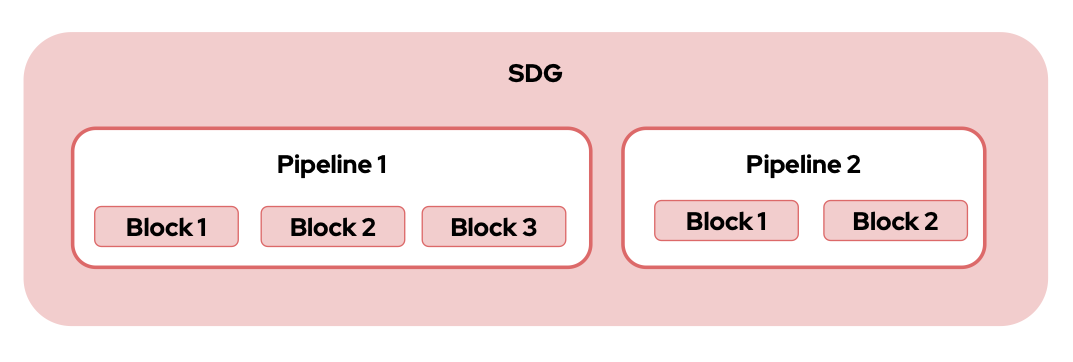

The SDG Framework is a modular, scalable, and efficient solution for creating synthetic data generation workflows in a “no-code” manner. At its core, the framework is designed to simplify data creation for LLMs: users chain computational units into pipelines that generate and process data.

The framework is built around the following principles:

- Modular Design: Highly composable blocks form the building units of the framework, allowing users to build workflows effortlessly.

- No-Code Workflow Creation: Specify workflows using simple YAML configuration files.

- Scalability and Performance: Optimized for handling large-scale workflows with millions of records.

At the heart of the framework is the Block. Each block is a self-contained computational unit that performs specific tasks, such as:

- Making LLM calls

- Performing data transformations

- Applying filters

Blocks are designed to be:

- Modular: Reusable across multiple pipelines.

- Composable: Easily chained together to create workflows.

These blocks are implemented in the src/instructlab/sdg/blocks directory.
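To make the block idea concrete, here is a minimal sketch in plain Python. The `Block` base class, its `generate` method, and the two example blocks are hypothetical stand-ins for illustration, not the classes in src/instructlab/sdg/blocks. Records are plain dicts rather than Hugging Face Datasets so that the sketch has no dependencies.

```python
from abc import ABC, abstractmethod
from typing import Any

# One row of data. Real SDG blocks operate on Hugging Face Datasets instead.
Record = dict[str, Any]


class Block(ABC):
    """Hypothetical base class: a self-contained unit that maps records to records."""

    def __init__(self, name: str) -> None:
        self.name = name

    @abstractmethod
    def generate(self, samples: list[Record]) -> list[Record]:
        """Process a batch of records and return the resulting batch."""


class TemplateBlock(Block):
    """Transformation block: fills a prompt template from each record's columns."""

    def __init__(self, name: str, template: str, output_col: str) -> None:
        super().__init__(name)
        self.template = template
        self.output_col = output_col

    def generate(self, samples: list[Record]) -> list[Record]:
        # Build new dicts so the input batch is left unmodified.
        return [{**s, self.output_col: self.template.format(**s)} for s in samples]


class KeepIfBlock(Block):
    """Filter block: keeps records whose column equals the expected value."""

    def __init__(self, name: str, column: str, value: Any) -> None:
        super().__init__(name)
        self.column = column
        self.value = value

    def generate(self, samples: list[Record]) -> list[Record]:
        return [s for s in samples if s.get(self.column) == self.value]


# Both blocks share one interface, so each can be reused and composed freely.
rows = [{"topic": "tides", "lang": "en"}, {"topic": "Gezeiten", "lang": "de"}]
prompts = TemplateBlock("make_prompt", "Ask a question about {topic}.", "prompt").generate(rows)
english = KeepIfBlock("keep_en", "lang", "en").generate(prompts)
print(english)
```

An LLM-calling block would fit the same interface: its `generate` would send each record's prompt to a model and store the reply in an output column.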

Blocks can be chained together to form a Pipeline. Pipelines enable:

- Linear or recursive chaining of blocks.

- Execution of complex workflows by chaining multiple pipelines together.
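A pipeline can be sketched the same way. In this hypothetical version (again, not SDG's own pipeline class), a pipeline is an ordered list of callables, each of which maps a batch of records to a new batch. Because the pipeline itself is callable, one pipeline can be used as a step inside another. This covers both linear chaining of blocks and chaining of whole pipelines.

```python
from typing import Any, Callable

Record = dict[str, Any]
# Any callable that maps a batch of records to a new batch can serve as a step.
Step = Callable[[list[Record]], list[Record]]


class Pipeline:
    """Hypothetical pipeline: runs its steps in order, feeding each output to the next."""

    def __init__(self, steps: list[Step]) -> None:
        self.steps = steps

    def generate(self, samples: list[Record]) -> list[Record]:
        for step in self.steps:
            samples = step(samples)
        return samples

    # Making a pipeline callable lets it be nested as a step of another pipeline.
    def __call__(self, samples: list[Record]) -> list[Record]:
        return self.generate(samples)


def add_length(samples: list[Record]) -> list[Record]:
    return [{**s, "length": len(s["text"])} for s in samples]


def keep_long(samples: list[Record]) -> list[Record]:
    return [s for s in samples if s["length"] > 3]


def mark_done(samples: list[Record]) -> list[Record]:
    return [{**s, "status": "done"} for s in samples]


inner = Pipeline([add_length, keep_long])  # a linear chain of two steps
outer = Pipeline([inner, mark_done])       # a pipeline that contains a pipeline
result = outer.generate([{"text": "hi"}, {"text": "hello"}])
print(result)  # [{'text': 'hello', 'length': 5, 'status': 'done'}]
```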

SDG ships with three default pipelines: simple, full, and eval. Each pipeline has different hardware requirements.

The simple pipeline is designed to be used with quantized Merlinite as the teacher model. It enables basic data generation on low-end consumer-grade hardware, such as laptops and desktops with small or no discrete GPUs.

The full pipeline is designed to be used with Mixtral-8x7B-Instruct-v0.1 as the teacher model, but it has also been tested successfully with smaller models, such as Mistral-7B-Instruct-v0.2, and even with some quantized versions of these models. It is the preferred data generation pipeline on higher-end consumer-grade hardware and on all enterprise hardware.

The eval pipeline generates MMLU benchmark data that you can later use to evaluate a trained model on your knowledge dataset. It does not generate data for use during model training.
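The recommendations above can be summarized as a small decision helper. The function and its two boolean parameters are hypothetical and only restate the guidance in this section. The returned strings match the names of the three default pipelines.

```python
def choose_pipeline(want_eval_data: bool, high_end_hardware: bool) -> str:
    """Hypothetical helper restating the default-pipeline recommendations."""
    if want_eval_data:
        # eval produces MMLU benchmark data for evaluation, not training data.
        return "eval"
    if high_end_hardware:
        # full targets Mixtral-8x7B-Instruct-v0.1 on higher-end or enterprise hardware.
        return "full"
    # simple targets a quantized Merlinite teacher on laptops and small desktops.
    return "simple"


# A laptop with no discrete GPU that needs training data:
print(choose_pipeline(want_eval_data=False, high_end_hardware=False))  # prints: simple
```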

The Pipeline YAML configuration file is central to defining data generation workflows in the SDG Framework. This configuration file describes how blocks and pipelines are orchestrated to process and generate data efficiently. By leveraging YAML, users can create highly customizable and modular workflows without writing any code.

Pipeline configuration must adhere to our JSON schema to be considered valid.

- Modular Design:
  - Pipelines are composed of blocks, which can be chained together.
  - Each block performs a specific task, such as generating, filtering, or transforming data.
- Reusability:
  - Blocks and their configurations can be reused across different workflows.
  - YAML makes it easy to tweak or extend workflows without significant changes.
- Ease of Configuration:
  - Users can specify block types, configurations, and data processing details in a simple and intuitive manner.
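Valid configurations are defined by the project's JSON schema, which this sketch does not reproduce. As a rough illustration of the kinds of structural checks a schema enforces, the hypothetical `check_pipeline_config` function below inspects a configuration that has already been parsed into Python objects (for example with PyYAML's `yaml.safe_load`). Its rules are assumptions modeled on the keys used in the example configuration that follows, not the real schema.

```python
from typing import Any


def check_pipeline_config(cfg: Any) -> list[str]:
    """Hypothetical structural checks; returns a list of problems (empty means OK)."""
    if not isinstance(cfg, dict):
        return ["top level must be a mapping"]
    errors: list[str] = []
    if not isinstance(cfg.get("version"), str):
        errors.append("'version' must be a string such as \"1.0\"")
    blocks = cfg.get("blocks")
    if not isinstance(blocks, list) or not blocks:
        errors.append("'blocks' must be a non-empty list")
        return errors
    seen_names: set[str] = set()
    for i, block in enumerate(blocks):
        if not isinstance(block, dict):
            errors.append(f"block {i}: must be a mapping")
            continue
        for key in ("name", "type"):
            if not isinstance(block.get(key), str):
                errors.append(f"block {i}: '{key}' must be a string")
        if not isinstance(block.get("config"), dict):
            errors.append(f"block {i}: 'config' must be a mapping")
        name = block.get("name")
        if isinstance(name, str):
            if name in seen_names:
                errors.append(f"block {i}: duplicate name '{name}'")
            seen_names.add(name)
        # Optional column lists must contain only column names.
        for key in ("drop_columns", "drop_duplicates"):
            cols = block.get(key)
            if cols is not None and not (
                isinstance(cols, list) and all(isinstance(c, str) for c in cols)
            ):
                errors.append(f"block {i}: '{key}' must be a list of column names")
    return errors


good = {
    "version": "1.0",
    "blocks": [
        {
            "name": "gen_questions",
            "type": "LLMBlock",
            "config": {"config_path": "configs/skills/freeform_questions.yaml"},
            "drop_duplicates": ["question"],
        }
    ],
}
# Two problems: the version is a number, and the block has no config mapping.
bad = {"version": 1.0, "blocks": [{"name": "x", "type": "LLMBlock"}]}
print(check_pipeline_config(good))  # []
print(check_pipeline_config(bad))
```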

Here is an example of a Pipeline configuration:

```yaml
version: "1.0"
blocks:
  - name: gen_questions
    type: LLMBlock
    config:
      config_path: configs/skills/freeform_questions.yaml
      output_cols:
        - question
      batch_kwargs:
        num_samples: 30
    drop_duplicates:
      - question
  - name: filter_questions
    type: FilterByValueBlock
    config:
      filter_column: score
      filter_value: 1.0
      operation: eq
      convert_dtype: float
    drop_columns:
      - evaluation
      - score
      - num_samples
  - name: gen_responses
    type: LLMBlock
    config:
      config_path: configs/skills/freeform_responses.yaml
      output_cols:
        - response
```
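The behavior of the filter_questions block in the example can be approximated in plain Python. This is a hypothetical re-implementation for illustration, not the code of FilterByValueBlock. It assumes that operation names map onto functions from Python's `operator` module, that convert_dtype names a Python type, and that rows whose value cannot be converted are dropped. The `drop_columns` and `drop_duplicates` helpers mirror the block-level keys of the same names.

```python
import operator
from typing import Any, Callable

Record = dict[str, Any]

# Assumed mappings from configuration strings to Python callables.
OPERATIONS: dict[str, Callable[[Any, Any], bool]] = {
    "eq": operator.eq, "ne": operator.ne,
    "lt": operator.lt, "le": operator.le,
    "gt": operator.gt, "ge": operator.ge,
}
DTYPES: dict[str, Callable[[Any], Any]] = {"float": float, "int": int, "str": str}


def filter_by_value(samples, filter_column, filter_value, operation, convert_dtype=None):
    """Keep rows where OPERATIONS[operation](row[filter_column], filter_value) holds."""
    op = OPERATIONS[operation]
    kept = []
    for row in samples:
        value = row[filter_column]
        if convert_dtype is not None:
            try:
                value = DTYPES[convert_dtype](value)
            except (TypeError, ValueError):
                continue  # assumption: unconvertible rows are discarded
        if op(value, filter_value):
            kept.append({**row, filter_column: value})
    return kept


def drop_columns(samples, columns):
    """Remove the listed columns from every row."""
    return [{k: v for k, v in row.items() if k not in columns} for row in samples]


def drop_duplicates(samples, columns):
    """Keep the first row for each distinct combination of the listed columns."""
    seen = set()
    unique = []
    for row in samples:
        key = tuple(row[c] for c in columns)
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


questions = [
    {"question": "What is a tide?", "evaluation": "clear", "score": "1.0", "num_samples": 30},
    {"question": "What is a tide?", "evaluation": "clear", "score": "1.0", "num_samples": 30},
    {"question": "Tides?", "evaluation": "vague", "score": "0", "num_samples": 30},
    {"question": "Why?", "evaluation": "n/a", "score": "not a number", "num_samples": 30},
]
unique = drop_duplicates(questions, ["question"])             # gen_questions: drop_duplicates
scored = filter_by_value(unique, "score", 1.0, "eq", "float")  # filter_questions: config
result = drop_columns(scored, ["evaluation", "score", "num_samples"])  # filter_questions: drop_columns
print(result)  # [{'question': 'What is a tide?'}]
```

Converting before comparing matters here. The scores arrive as strings, so an `eq` comparison against `1.0` without `convert_dtype: float` would never match.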

- Data Representation: Data flow between blocks and pipelines is handled using Hugging Face Datasets, which are based on Arrow tables. This provides:
  - Native parallelization capabilities (e.g., maps, filters).
  - Support for efficient data transformations.
- Data Checkpoints: Intermediate caches of generated data. Checkpoints allow users to:
  - Resume workflows from the last successful state if interrupted.
  - Improve reliability for long-running workflows.
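The checkpoint idea can be sketched with the standard library alone. The hypothetical `run_with_checkpoints` function below writes each stage's output to a JSON Lines file. On a rerun, it loads the file of any stage that already finished instead of recomputing that stage. SDG's actual checkpoint format and resume logic may differ.

```python
import json
import tempfile
from pathlib import Path
from typing import Any, Callable

Record = dict[str, Any]
Stage = tuple[str, Callable[[list[Record]], list[Record]]]


def run_with_checkpoints(
    stages: list[Stage], samples: list[Record], checkpoint_dir: str
) -> list[Record]:
    """Run stages in order, caching each stage's output as <checkpoint_dir>/<name>.jsonl."""
    directory = Path(checkpoint_dir)
    directory.mkdir(parents=True, exist_ok=True)
    for name, stage in stages:
        path = directory / f"{name}.jsonl"
        if path.exists():
            # Resume: reuse the saved output of a stage that already finished.
            with path.open() as f:
                samples = [json.loads(line) for line in f]
            continue
        samples = stage(samples)
        # Write to a temporary file first, then rename it into place, so an
        # interruption mid-write does not leave a truncated checkpoint behind.
        tmp = directory / f"{name}.jsonl.tmp"
        with tmp.open("w") as f:
            for row in samples:
                f.write(json.dumps(row) + "\n")
        tmp.replace(path)
    return samples


calls: list[str] = []


def double(samples: list[Record]) -> list[Record]:
    calls.append("double")
    return [{"x": s["x"] * 2} for s in samples]


with tempfile.TemporaryDirectory() as tmpdir:
    first = run_with_checkpoints([("double", double)], [{"x": 1}], tmpdir)
    # The second run finds double.jsonl and does not call the stage again.
    second = run_with_checkpoints([("double", double)], [{"x": 1}], tmpdir)

print(first, second, calls)  # [{'x': 2}] [{'x': 2}] ['double']
```

Keying checkpoints only by stage name keeps the sketch short. However, it also means that a changed input or stage definition would still reuse stale files, so a real implementation would need some way to detect that.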

Clone the library and navigate to the repo:

```shell
git clone https://github.com/instructlab/sdg
cd sdg
```

Install the library:

```shell
pip install .
```

You can import SDG into your Python files with the following items:

```python
from instructlab.sdg.generate_data import generate_data
from instructlab.sdg.utils import GenerateException
```

The repository is organized as follows:

```
|-- src/instructlab/ (1)
|-- docs/ (2)
|-- scripts/ (3)
|-- tests/ (4)
```

1. Contains the SDG code that interacts with InstructLab.
2. Contains documentation on various SDG methodologies.
3. Contains utility scripts that are not part of any supported API.
4. Contains all the tests for the SDG repository.