This project will no longer be maintained by Intel. This project has been identified as having known security escapes. Intel has ceased development and contributions including, but not limited to, maintenance, bug fixes, new releases, or updates, to this project. Intel no longer accepts patches to this project.

| Details | |
|---|---|
| Target OS: | Ubuntu* 18.04 LTS |
| Programming Language: | Python* 3.5 |
| Time to Complete: | 1 hour |

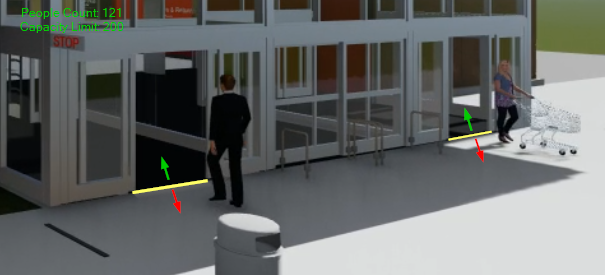

This reference implementation showcases a retail capacity limit application in which a people counter tracks how many people are in the store by detecting people entering and exiting. Virtual lines configured at the entrance and exit areas mark the boundaries of the area of interest.

- 6th generation or later Intel® Core™ processor or Intel® Xeon® processor, with 8 GB of RAM

The application uses the Inference Engine and Model Downloader included in the Intel® Distribution of OpenVINO™ toolkit to perform the following steps:

- Ingests video from a file, processing frame by frame.

- Detects people in the frame using a pre-trained DNN model.

- Extracts features from the detected people using a second pre-trained DNN in order to track them.

- Checks whether people cross the predefined virtual gates based on their coordinates, and identifies the direction in which the gate was crossed to determine whether it is an entry or exit event.

- Updates the people counter based on the entry and exit events.
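The gate-crossing and counting steps above can be sketched in plain Python. This is a minimal illustration, not the application's actual code: the names `side`, `crosses`, and `Counter` are hypothetical, and treating the positive side of the gate as "inside" is an assumption that depends on how the gate points are ordered.

```python
from dataclasses import dataclass


def side(line, point):
    """Signed cross product: >0 or <0 depending on which side of the line the point lies."""
    (x1, y1), (x2, y2) = line
    px, py = point
    return (x2 - x1) * (py - y1) - (y2 - y1) * (px - x1)


def crosses(gate, prev, curr):
    """True if the movement prev -> curr strictly crosses the gate segment."""
    a, b = gate
    # The two track positions must lie on opposite sides of the gate line...
    d1, d2 = side(gate, prev), side(gate, curr)
    # ...and the gate endpoints on opposite sides of the movement line.
    d3, d4 = side((prev, curr), a), side((prev, curr), b)
    return d1 * d2 < 0 and d3 * d4 < 0


@dataclass
class Counter:
    inside: int = 0  # current number of people in the store

    def update(self, gate, prev, curr):
        """Return "entry", "exit", or None, and adjust the count accordingly."""
        if not crosses(gate, prev, curr):
            return None
        # Assumption: ending on the positive side of the gate means entering.
        if side(gate, curr) > 0:
            self.inside += 1
            return "entry"
        self.inside = max(0, self.inside - 1)
        return "exit"
```

For each tracked person, `update` would be called with the person's previous and current positions (for example, the bounding-box center in consecutive frames).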

The DNN models are optimized for Intel® Architecture and are included with the Intel® Distribution of OpenVINO™ toolkit.
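To illustrate how features from the re-identification model can support tracking, the sketch below matches a new feature vector against known tracks using cosine similarity. This is an assumption for illustration only: the source does not state which similarity measure or threshold the application uses, and `cosine_similarity`, `match_track`, and the `0.7` threshold are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def match_track(feature, tracks, threshold=0.7):
    """Return the id of the most similar known track, or None if no track
    reaches the threshold (hypothetical value; tune for real data)."""
    best_id, best_score = None, threshold
    for track_id, known_feature in tracks.items():
        score = cosine_similarity(feature, known_feature)
        if score >= best_score:
            best_id, best_score = track_id, score
    return best_id
```

A detection that matches no existing track would typically start a new track with its own id.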

Clone the reference implementation:

sudo apt-get update && sudo apt-get install git

git clone https://github.com/intel-iot-devkit/capacity-limit.git

Refer to https://software.intel.com/en-us/articles/OpenVINO-Install-Linux for more information about how to install and set up the Intel® Distribution of OpenVINO™ toolkit.

To install the dependencies of the reference implementation, run the following commands:

cd <path_to_capacity-limit-directory>

pip3 install -r requirements.txt

This application uses the person-detection-retail-0013 and person-reidentification-retail-0300 Intel® pre-trained models, which can be downloaded using the model downloader. The model downloader downloads the .xml and .bin files that are used by the application.

To download the Intel® pre-trained models, run the following commands:

mkdir models

cd models

python3 /opt/intel/openvino/deployment_tools/open_model_zoo/tools/downloader/downloader.py --name person-detection-retail-0013 --precisions FP32

python3 /opt/intel/openvino/deployment_tools/open_model_zoo/tools/downloader/downloader.py --name person-reidentification-retail-0300 --precisions FP32

The models will be downloaded inside the following directories:

- models/intel/person-detection-retail-0013/FP32/

- models/intel/person-reidentification-retail-0300/FP32/

The config.json file contains the paths to the video and models used by the application, as well as the coordinates of a virtual line.

The config.json file consists of name/value pairs. Below is an example of the _config.json_ file:

{

"video": "path/to/video/myvideo.mp4",

"pedestrian_model_weights": "models/intel/person-detection-retail-0013/FP32/person-detection-retail-0013.bin",

"pedestrian_model_description": "models/intel/person-detection-retail-0013/FP32/person-detection-retail-0013.xml",

"reidentification_model_weights": "models/intel/person-reidentification-retail-0300/FP32/person-reidentification-retail-0300.bin",

"reidentification_model_description": "models/intel/person-reidentification-retail-0300/FP32/person-reidentification-retail-0300.xml",

"coords": [[50, 45], [80, 45]]

}

Note that coords represents a virtual gate defined by two points, each given as x,y coordinates.
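As a quick sanity check before running the application, a configuration of this form can be parsed and validated with the standard library. The sketch below is illustrative only: `parse_config` and `REQUIRED_KEYS` are hypothetical names, and treating every key from the example as required is an assumption, not documented behavior of the application.

```python
import json

# Keys taken from the example config.json above; assumed to all be required.
REQUIRED_KEYS = (
    "video",
    "pedestrian_model_weights",
    "pedestrian_model_description",
    "reidentification_model_weights",
    "reidentification_model_description",
    "coords",
)


def parse_config(text):
    """Parse config.json content and check that it has the expected shape."""
    config = json.loads(text)
    missing = [key for key in REQUIRED_KEYS if key not in config]
    if missing:
        raise ValueError("missing config keys: " + ", ".join(missing))
    coords = config["coords"]
    # The virtual gate is two points, each an [x, y] pair.
    if len(coords) != 2 or any(len(point) != 2 for point in coords):
        raise ValueError("coords must contain exactly two [x, y] points")
    config["coords"] = [tuple(point) for point in coords]
    return config
```

It could be used as `config = parse_config(open("config.json").read())`, after which `config["coords"]` holds the two gate endpoints as tuples.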

The application works with any input video format supported by OpenCV.

Sample video: https://www.pexels.com/video/black-and-white-video-of-people-853889/

Data set subject to license https://www.pexels.com/license. The terms and conditions of the data set license apply. Intel does not grant any rights to the data files.

To use any other video, specify its path in the config.json file.

You must configure the environment to use the Intel® Distribution of OpenVINO™ toolkit one time per session by running the following command:

source /opt/intel/openvino/bin/setupvars.sh -pyver 3.5

Note: This command needs to be run only once in the terminal where the application will be executed. If the terminal is closed, the command must be run again.

Change the current directory to the project location on your system:

cd <path-to-capacity-limit-directory>

Run the Python script:

python3 capacitylimit.py