Rong Li¹, Shijie Li², Lingdong Kong³, Xulei Yang², Junwei Liang¹,⁴

¹AI Thrust, HKUST(Guangzhou)

²I2R, A*STAR

³National University of Singapore

⁴CSE, HKUST


- [2025.01] The code and model checkpoints have been fully released. Feel free to try it out! 🤗

- [2024.12] Introducing SeeGround 👁️, a new framework for zero-shot 3D visual grounding. For more details, please refer to our Project Page and Preprint. 🚀

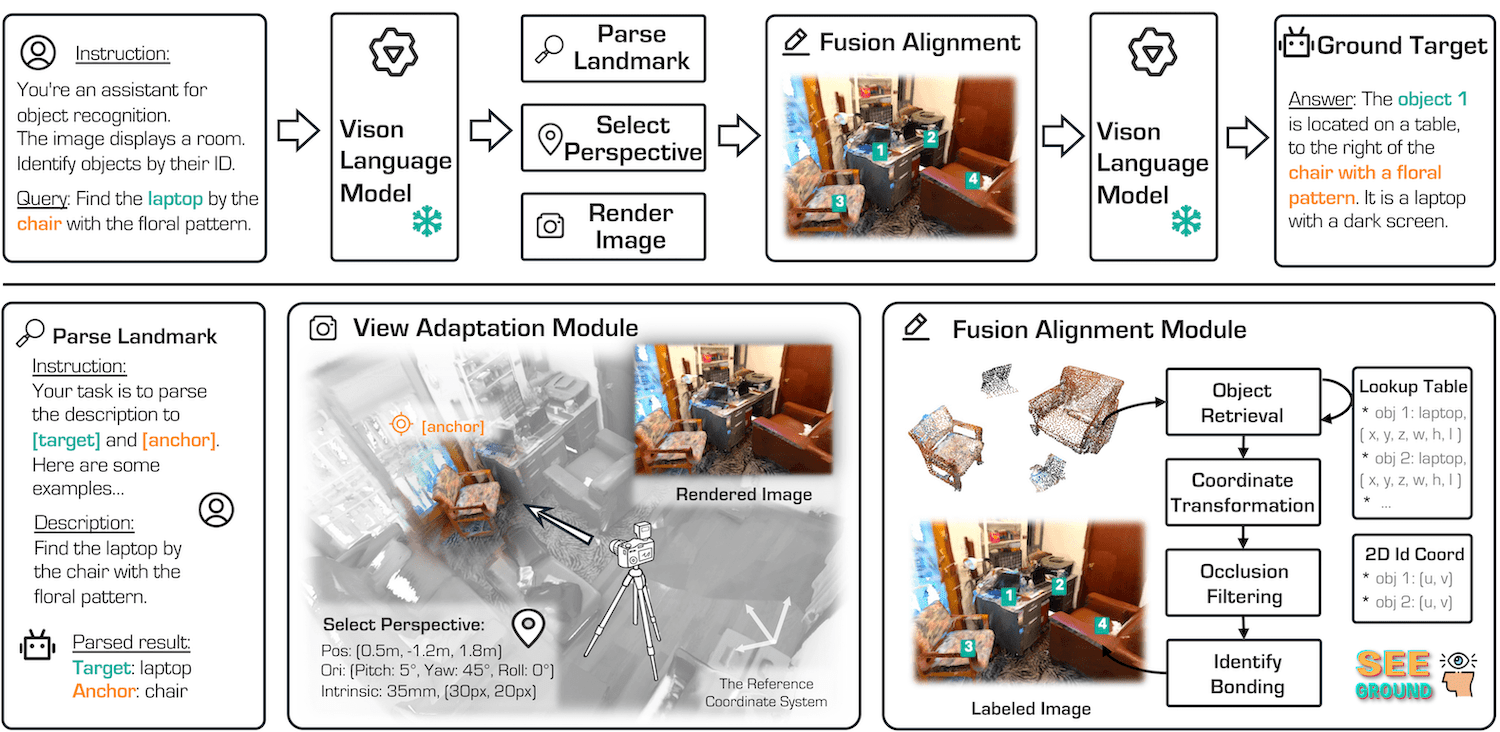

- 0. Framework Overview

- 1. Environment Setup

- 2. Download Model Weights

- 3. Download Datasets

- 4. Data Processing

- 5. Inference

- 6. Reproduction

- 7. License

- 8. Citation

- 9. Acknowledgments

We recommend using the official Qwen-VL Docker image for environment setup:

docker pull qwenllm/qwenvl

You can download the Qwen2-VL model weights from either of the following sources:

Download the ScanRefer dataset from the official repository and place it at:

data/ScanRefer/ScanRefer_filtered_val.json

Download the Nr3D dataset from the official repository and place it at:

data/Nr3D/Nr3D.jsonl
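Once the files are in place, a quick sanity check is to load them and count the entries. The sketch below assumes only that `ScanRefer_filtered_val.json` is a single JSON array and that `Nr3D.jsonl` holds one JSON object per line; the helper names `load_json_array` and `load_jsonl` are hypothetical and not part of the SeeGround code.

```python
import json
from pathlib import Path


def load_json_array(path):
    """Load a file that contains a single JSON array (e.g. ScanRefer_filtered_val.json)."""
    with open(path, "r", encoding="utf-8") as f:
        data = json.load(f)
    if not isinstance(data, list):
        raise ValueError(f"{path}: expected a JSON array, got {type(data).__name__}")
    return data


def load_jsonl(path):
    """Load a JSON Lines file (e.g. Nr3D.jsonl): one JSON object per non-empty line."""
    records = []
    with open(path, "r", encoding="utf-8") as f:
        for line_no, line in enumerate(f, start=1):
            line = line.strip()
            if not line:
                continue  # tolerate blank lines such as a trailing newline
            try:
                records.append(json.loads(line))
            except json.JSONDecodeError as exc:
                raise ValueError(f"{path}:{line_no}: invalid JSON ({exc})") from exc
    return records


if __name__ == "__main__":
    # Paths as given in this README; adjust if your data lives elsewhere.
    checks = [
        (Path("data/ScanRefer/ScanRefer_filtered_val.json"), load_json_array),
        (Path("data/Nr3D/Nr3D.jsonl"), load_jsonl),
    ]
    for path, loader in checks:
        if path.exists():
            print(f"{path}: {len(loader(path))} entries")
        else:
            print(f"{path}: missing")
```

A malformed line in the JSONL file is reported with its line number, which makes a truncated download easy to spot.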

Download the preprocessed Vil3dref data from the vil3dref repository.

The expected structure should look like this:

```
referit3d/
├── annotations
│   ├── meta_data
│   │   ├── cat2glove42b.json
│   │   ├── scannetv2-labels.combined.tsv
│   │   └── scannetv2_raw_categories.json
│   └── ...
├── ...
└── scan_data
    ├── ...
    ├── instance_id_to_name
    └── pcd_with_global_alignment
```
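To confirm the layout before running the processing scripts, a small check like the following can help. It is a sketch that assumes `referit3d/` is relative to the current working directory and covers only the entries named explicitly in the tree (the `...` placeholders are skipped); `missing_entries` is a hypothetical helper.

```python
from pathlib import Path

# Entries named explicitly in the expected layout; "..." entries are omitted
# because their names are not listed.
EXPECTED = [
    "annotations/meta_data/cat2glove42b.json",
    "annotations/meta_data/scannetv2-labels.combined.tsv",
    "annotations/meta_data/scannetv2_raw_categories.json",
    "scan_data/instance_id_to_name",
    "scan_data/pcd_with_global_alignment",
]


def missing_entries(root, expected=EXPECTED):
    """Return the expected relative paths that do not exist under root."""
    root = Path(root)
    return [rel for rel in expected if not (root / rel).exists()]


if __name__ == "__main__":
    missing = missing_entries("referit3d")
    print("layout OK" if not missing else "missing: " + ", ".join(missing))
```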

First, download the Mask3D predictions. Then build the Object Lookup Table for each dataset:

- ScanRefer

python prepare_data/object_lookup_table_scanrefer.py

- Nr3D

python prepare_data/process_feat_3d.py

python prepare_data/object_lookup_table_nr3d.py

Alternatively, you can download the preprocessed Object Lookup Table.
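To give a feel for what such a table contains, here is a hypothetical illustration: one record per predicted instance with an ID, a category label, and an axis-aligned bounding box (center and size) computed from the instance's points, plus a plain-text rendering suitable for a prompt. The field names, the text format and the use of NumPy are assumptions for illustration only; the `prepare_data/` scripts define the actual format.

```python
import numpy as np


def build_lookup_table(points, instance_ids, labels):
    """Build one record per predicted instance (hypothetical format).

    points:       (N, 3) float array of xyz coordinates
    instance_ids: (N,) int array, predicted instance of each point (-1 = unassigned)
    labels:       dict mapping instance id -> category name
    """
    table = []
    for inst in np.unique(instance_ids):
        if inst < 0:
            continue  # skip points not assigned to any instance
        pts = points[instance_ids == inst]
        lo, hi = pts.min(axis=0), pts.max(axis=0)  # axis-aligned box corners
        table.append({
            "object_id": int(inst),
            "label": labels.get(int(inst), "unknown"),
            "center": ((lo + hi) / 2).round(3).tolist(),
            "size": (hi - lo).round(3).tolist(),
        })
    return table


def to_prompt_text(table):
    """Render the table as one line per object, e.g. for inclusion in a text prompt."""
    return "\n".join(
        f"ID {row['object_id']}: {row['label']}, center {row['center']}, size {row['size']}"
        for row in table
    )
```

Keeping the table as plain records makes it easy to both serialize to disk and turn into text for a language model.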

We use vLLM to deploy the VLM. We recommend running the following command in a tmux session on your server so that the server keeps running after you disconnect:

python -m vllm.entrypoints.openai.api_server --model /your/qwen2-vl-model/path --served-model-name Qwen2-VL-72B-Instruct --tensor_parallel_size=8

The --tensor_parallel_size flag sets how many GPUs the model is sharded across using tensor parallelism. Adjust it to match the number of GPUs and the memory you have available.
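As a back-of-the-envelope check when choosing the flag, the weights alone take roughly (parameters × bytes per parameter) / (number of GPUs) on each GPU. The sketch below assumes bf16/fp16 weights (2 bytes per parameter) and a nominal 72B parameters; the KV cache, activations and CUDA context come on top of this, so treat the result as a lower bound. `weight_memory_per_gpu_gb` is a hypothetical helper.

```python
def weight_memory_per_gpu_gb(num_params, tensor_parallel_size, bytes_per_param=2):
    """Rough per-GPU memory (in GB, 1 GB = 1e9 bytes) for model weights alone.

    bytes_per_param=2 corresponds to bf16/fp16 weights. Tensor parallelism
    splits the weights roughly evenly across GPUs.
    """
    total_gb = num_params * bytes_per_param / 1e9
    return total_gb / tensor_parallel_size


if __name__ == "__main__":
    for tp in (2, 4, 8):
        gb = weight_memory_per_gpu_gb(72e9, tp)
        print(f"tensor_parallel_size={tp}: ~{gb:.0f} GB of weights per GPU")
```

With 8 GPUs this gives about 18 GB of weights per GPU (144 GB in total), which leaves headroom on large-memory cards for the KV cache.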

Next, parse the referring queries:

- ScanRefer

python parse_query/generate_query_data_scanrefer.py

- Nr3D

python parse_query/generate_query_data_nr3d.py
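Query parsing turns each free-form description into structured fields, for example which object is the target and which objects serve as anchors. Language models often wrap such output in extra prose, so a tolerant parser is useful. The sketch below is a hypothetical illustration: the reply format, the field names `target` and `anchors`, and `parse_query_reply` are assumptions, and the scripts in `parse_query/` define the actual prompts and outputs.

```python
import json
import re


def parse_query_reply(reply):
    """Extract {'target': str, 'anchors': [str, ...]} from a model reply (hypothetical format).

    Takes the span from the first '{' to the last '}' so that surrounding prose
    is ignored. Returns None if no valid JSON object with a string target is found.
    """
    match = re.search(r"\{.*\}", reply, flags=re.DOTALL)
    if match is None:
        return None
    try:
        data = json.loads(match.group(0))
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict) or not isinstance(data.get("target"), str):
        return None
    anchors = data.get("anchors", [])
    if isinstance(anchors, str):
        anchors = [anchors]  # tolerate a single anchor given as a bare string
    return {"target": data["target"], "anchors": [a for a in anchors if isinstance(a, str)]}
```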

Then run inference:

- ScanRefer

python inference/inference_scanrefer.py

- Nr3D

python inference/inference_nr3d.py
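When debugging, it can help to send a single request to the vLLM server by hand. vLLM's server exposes an OpenAI-compatible `/v1/chat/completions` route (port 8000 by default), and the `model` field must match the `--served-model-name` used above. The sketch below uses only the standard library. The helper names, the base URL and the inline base64 image are assumptions for illustration, not necessarily how the inference scripts build their requests.

```python
import base64
import json
import urllib.request


def build_chat_payload(prompt, image_bytes=None, model="Qwen2-VL-72B-Instruct",
                       mime="image/png", temperature=0.0):
    """Build an OpenAI-style chat-completions body with an optional inline image."""
    content = [{"type": "text", "text": prompt}]
    if image_bytes is not None:
        b64 = base64.b64encode(image_bytes).decode("ascii")
        # Put the image before the text, encoded as a data URL.
        content.insert(0, {"type": "image_url",
                           "image_url": {"url": f"data:{mime};base64,{b64}"}})
    return {"model": model,
            "messages": [{"role": "user", "content": content}],
            "temperature": temperature}


def send_chat(payload, base_url="http://localhost:8000/v1", timeout=600):
    """POST the payload to /chat/completions and return the reply text."""
    req = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

For example, `send_chat(build_chat_payload("Describe this view.", open("view.png", "rb").read()))` sends one rendered image (`view.png` is a placeholder file name) together with a question.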

Finally, evaluate the predictions:

- ScanRefer

python eval/eval_scanrefer.py

- Nr3D

python eval/eval_nr3d.py
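ScanRefer results are conventionally reported as Acc@0.25 and Acc@0.5: the fraction of queries whose predicted box overlaps the ground-truth box with 3D IoU at or above the threshold. Nr3D is instead scored by whether the correct object is selected. The sketch below computes the ScanRefer-style metric for axis-aligned boxes given as (center, size). It illustrates the metric only and is not the code in `eval/`; `box3d_iou` and `accuracy_at_iou` are hypothetical names.

```python
import numpy as np


def box3d_iou(center_a, size_a, center_b, size_b):
    """IoU of two axis-aligned 3D boxes, each given as center (x, y, z) and size (dx, dy, dz)."""
    ca, sa = np.asarray(center_a, float), np.asarray(size_a, float)
    cb, sb = np.asarray(center_b, float), np.asarray(size_b, float)
    lo = np.maximum(ca - sa / 2, cb - sb / 2)  # lower corner of the overlap
    hi = np.minimum(ca + sa / 2, cb + sb / 2)  # upper corner of the overlap
    inter = np.prod(np.clip(hi - lo, 0.0, None))  # zero if boxes do not overlap
    union = np.prod(sa) + np.prod(sb) - inter
    return float(inter / union) if union > 0 else 0.0


def accuracy_at_iou(preds, gts, thresholds=(0.25, 0.5)):
    """Fraction of (pred, gt) box pairs with IoU >= each threshold.

    preds and gts are aligned lists of (center, size) tuples.
    """
    ious = [box3d_iou(p[0], p[1], g[0], g[1]) for p, g in zip(preds, gts)]
    if not ious:
        return {t: 0.0 for t in thresholds}
    return {t: sum(iou >= t for iou in ious) / len(ious) for t in thresholds}
```

For two unit-offset boxes of size 2 the overlap is half of each box, so the IoU is 4 / (8 + 8 − 4) = 1/3: such a prediction counts toward Acc@0.25 but not Acc@0.5.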

This work is released under the Apache 2.0 license.

If you find this work and code repository helpful, please consider starring it and citing the following paper:

```bibtex
@article{li2024seeground,
  title   = {SeeGround: See and Ground for Zero-Shot Open-Vocabulary 3D Visual Grounding},
  author  = {Rong Li and Shijie Li and Lingdong Kong and Xulei Yang and Junwei Liang},
  journal = {arXiv preprint arXiv:2412.04383},
  year    = {2024},
}
```

We would like to thank the following repositories for their contributions: