This is a SwiftUI-based iOS application that demonstrates the use of YOLOv3 (You Only Look Once version 3) for real-time object detection in images captured from the device's camera.

The app utilizes the Vision and CoreML frameworks to integrate the YOLOv3 model for detecting objects in the images.

This project can detect objects in images with either the YOLOv3 model or the YOLOv3 Tiny model. The Swift code is the same for both; the only difference is the model file (its size and name).

The project is not bundled with any of the YOLOv3 models. You can download the models from the Apple Machine Learning Models page and add them to the project by dragging and dropping them into the project navigator.

By default, the project is configured to use the YOLOv3 model. To use the YOLOv3 Tiny model, add it to the project and then update the model class name in the source code.
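The following is a minimal sketch of how Vision and Core ML can be combined for detection; it is not necessarily how this project implements it. It assumes the model file is named `YOLOv3.mlmodel`, so that Xcode generates a class called `YOLOv3` (or `YOLOv3Tiny` for the Tiny model file), and that the model returns `VNRecognizedObjectObservation` results through Vision. The `Detection` type and `detectObjects` function are hypothetical names used only for illustration.

```swift
import CoreML
import Vision

/// Hypothetical value type holding one detected object.
struct Detection {
    let label: String
    let confidence: Float
    let boundingBox: CGRect   // normalized, origin at the bottom-left
}

/// Hypothetical helper: runs an object-detection model on a pixel buffer.
func detectObjects(in pixelBuffer: CVPixelBuffer, using model: MLModel) throws -> [Detection] {
    // Wrap the Core ML model so that Vision can drive it.
    let visionModel = try VNCoreMLModel(for: model)
    let request = VNCoreMLRequest(model: visionModel)
    // Let Vision scale the image to the model's input size.
    request.imageCropAndScaleOption = .scaleFill

    let handler = VNImageRequestHandler(cvPixelBuffer: pixelBuffer, options: [:])
    try handler.perform([request])

    // Assumption: the model produces recognized-object observations.
    let observations = request.results as? [VNRecognizedObjectObservation] ?? []
    return observations.compactMap { observation -> Detection? in
        guard let top = observation.labels.first else { return nil }
        return Detection(label: top.identifier,
                         confidence: top.confidence,
                         boundingBox: observation.boundingBox)
    }
}

// Assumed usage; swap YOLOv3 for YOLOv3Tiny after adding that model file.
// let model = try YOLOv3(configuration: MLModelConfiguration()).model
// let detections = try detectObjects(in: pixelBuffer, using: model)
```

Because the helper takes a plain `MLModel`, switching between the two models only changes the line that instantiates the generated class.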

- Capture images using the device's camera.

- Real-time object detection using the YOLOv3 model.

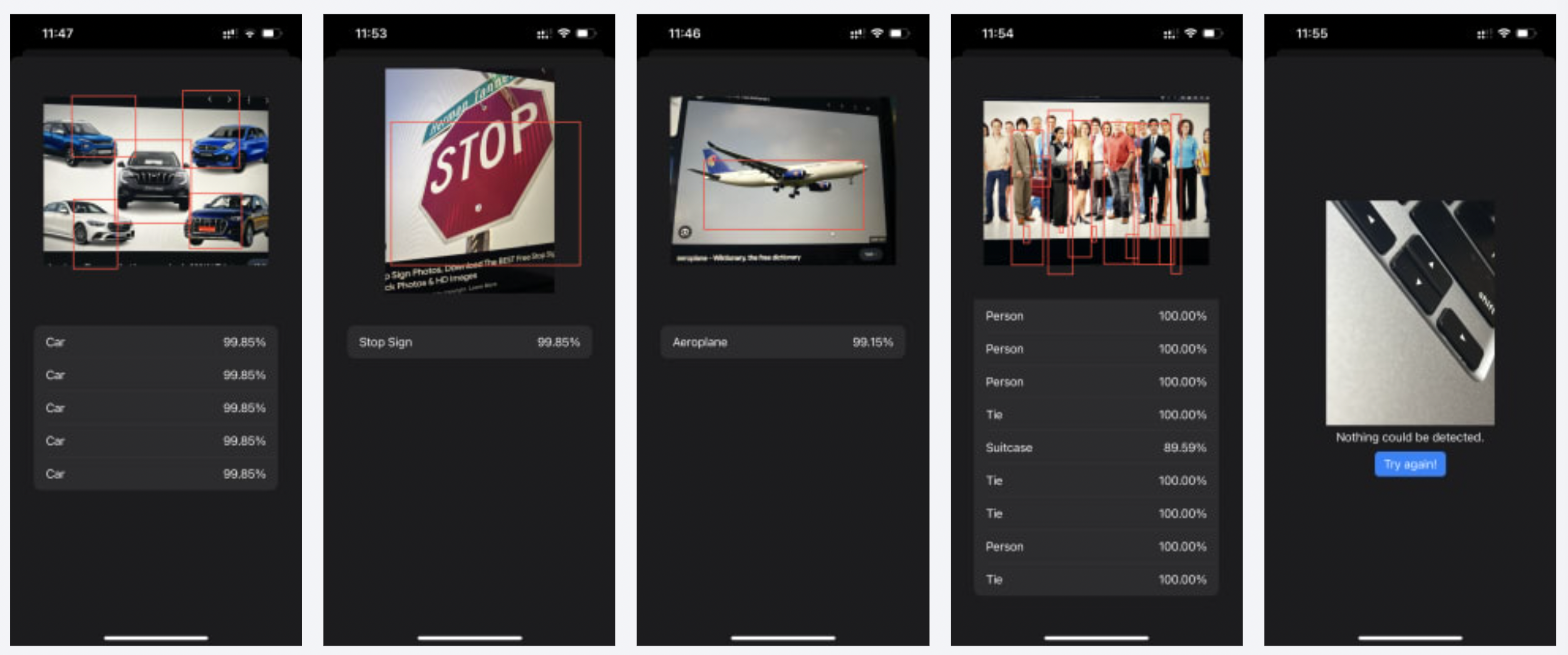

- Display bounding boxes around detected objects.

- Display detected objects with confidence percentages.

- Run the app.

- Tap the "Take a Picture" button to take a picture.

- The app detects objects in the image and displays the results.

- The results appear in a list view, showing each detected object with its confidence percentage, and in the image view, with bounding boxes drawn around the detected objects.
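Displaying the results involves two small conversions, sketched below under stated assumptions: Vision reports bounding boxes in normalized coordinates with the origin at the bottom-left, while image views draw from the top-left, and confidences arrive as values between 0 and 1. The function names `imageRect(forNormalizedRect:imageSize:)` and `confidenceLabel(_:)` are hypothetical and are not taken from this project.

```swift
import Foundation

/// Converts a normalized rect (origin at the bottom-left, values in 0...1)
/// into a rect in image coordinates (origin at the top-left).
func imageRect(forNormalizedRect box: CGRect, imageSize: CGSize) -> CGRect {
    let width = box.width * imageSize.width
    let height = box.height * imageSize.height
    let x = box.minX * imageSize.width
    // Flip the y-axis: the normalized rect is measured from the bottom edge.
    let y = (1 - box.maxY) * imageSize.height
    return CGRect(x: x, y: y, width: width, height: height)
}

/// Formats a 0...1 confidence as a whole-number percentage string.
func confidenceLabel(_ confidence: Float) -> String {
    return "\(Int((confidence * 100).rounded()))%"
}
```

For example, a normalized box of `(x: 0.25, y: 0.5, width: 0.5, height: 0.25)` in a 400x200 image maps to `(x: 100, y: 50, width: 200, height: 50)`.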

YOLOv3 is a neural network for fast object detection that detects 80 different classes of objects. Given a 416x416 RGB image, the model outputs three arrays (one for each layer) of arbitrary length, each containing the confidence scores for each cell and the normalised coordinates of the bounding box around the detected object(s).

Refer to the original paper, "YOLOv3: An Incremental Improvement", for more details.

The image-conversion function builds on a previously described methodology. It is needed because the Core ML model accepts a `CVPixelBuffer` as input, whereas the captured image is a `UIImage`.
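One common way to perform this conversion is sketched below; it is an illustration under stated assumptions, not necessarily the function used in this project. The extension method name `toPixelBuffer(width:height:)` is hypothetical, and the 416x416 default is taken from the model input size described earlier. The image is stretched to fill the buffer, so its aspect ratio is not preserved.

```swift
import UIKit
import CoreVideo

extension UIImage {
    /// Hypothetical helper: renders the image into a new 32-bit ARGB pixel buffer.
    func toPixelBuffer(width: Int = 416, height: Int = 416) -> CVPixelBuffer? {
        let attributes: [CFString: Any] = [
            kCVPixelBufferCGImageCompatibilityKey: true,
            kCVPixelBufferCGBitmapContextCompatibilityKey: true
        ]
        var buffer: CVPixelBuffer?
        let status = CVPixelBufferCreate(kCFAllocatorDefault, width, height,
                                         kCVPixelFormatType_32ARGB,
                                         attributes as CFDictionary, &buffer)
        guard status == kCVReturnSuccess, let pixelBuffer = buffer else { return nil }

        // Lock the buffer while Core Graphics writes into its memory.
        CVPixelBufferLockBaseAddress(pixelBuffer, [])
        defer { CVPixelBufferUnlockBaseAddress(pixelBuffer, []) }

        guard let context = CGContext(
            data: CVPixelBufferGetBaseAddress(pixelBuffer),
            width: width,
            height: height,
            bitsPerComponent: 8,
            bytesPerRow: CVPixelBufferGetBytesPerRow(pixelBuffer),
            space: CGColorSpaceCreateDeviceRGB(),
            bitmapInfo: CGImageAlphaInfo.noneSkipFirst.rawValue
        ) else { return nil }

        // Flip the context so that UIKit drawing ends up the right way round.
        context.translateBy(x: 0, y: CGFloat(height))
        context.scaleBy(x: 1, y: -1)

        // Draw the image, stretched to the requested size.
        UIGraphicsPushContext(context)
        draw(in: CGRect(x: 0, y: 0, width: width, height: height))
        UIGraphicsPopContext()
        return pixelBuffer
    }
}
```

A captured image could then be converted with `capturedImage.toPixelBuffer()`, where `capturedImage` is an assumed `UIImage` variable, and the resulting buffer passed to the model.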