PyTorch implementation of Modularized Textual Grounding for Counterfactual Resilience, CVPR 2019.

Qualitative grounding results can be found on our webpage.

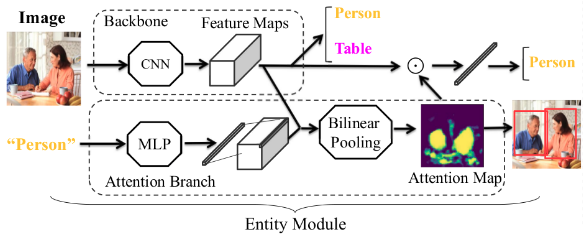

We propose a cross-modal grounding method through weak supervision.

A demonstration of how to load the model and ground an attribute can be found in Demo.ipynb.

Image --> 'Boy' attribute --> 'Lady' attribute

Requirements:

- PyTorch 0.4.

- Python 3.6.

- FFT package (pytorch_fft).

The model is weakly trained on either COCO or Flickr30k.

Training script for attribute grounding:

Train_attr_attention_embedding.py

Attention model for attribute grounding, built on a ResNet-50 network pre-trained for person gender/age classification:

/Models/Model7.py

lib/ contains all the necessary dependencies for our framework. It consists of:

- bilinear pooling module: an implementation of Compact Bilinear Pooling. It requires a Fast Fourier Transform (FFT) package, which must be installed first by running:

pip3 install pytorch_fft

- resnet: we modified the last fully connected layer to project the 2048-d features to a more compact 256-d representation.

- nms/roi_align module: not necessary at this time (used for entity grounding and bounding-box detection).
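The bilinear pooling module compresses the outer product of two feature vectors without ever materialising it. Below is a minimal NumPy sketch of the Tensor Sketch projection behind Compact Bilinear Pooling, using np.fft in place of the pytorch_fft package the repository depends on. The class name, the seeding scheme, and the dense projection matrices are illustrative assumptions, not the repository's code.

```python
import numpy as np


class CompactBilinearPooling:
    """Tensor Sketch of the outer product x (outer) y, in out_dim dimensions.

    Equals the Count Sketch of the outer product with hash
    (h1[i] + h2[j]) mod out_dim and sign s1[i] * s2[j], computed without
    forming the in_dim1 x in_dim2 matrix.
    """

    def __init__(self, in_dim1: int, in_dim2: int, out_dim: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.out_dim = out_dim
        # Random hash h: input index -> output bucket; random sign s in {-1, +1}.
        self.h1 = rng.integers(0, out_dim, size=in_dim1)
        self.h2 = rng.integers(0, out_dim, size=in_dim2)
        self.s1 = rng.choice([-1.0, 1.0], size=in_dim1)
        self.s2 = rng.choice([-1.0, 1.0], size=in_dim2)
        # Count Sketch as a projection: row i holds s[i] in column h[i].
        self.P1 = self._sketch_matrix(self.h1, self.s1)
        self.P2 = self._sketch_matrix(self.h2, self.s2)

    def _sketch_matrix(self, h, s):
        P = np.zeros((len(h), self.out_dim))
        P[np.arange(len(h)), h] = s
        return P

    def __call__(self, x, y):
        # x: (batch, in_dim1), y: (batch, in_dim2) -> (batch, out_dim)
        fx = np.fft.rfft(x @ self.P1, axis=1)
        fy = np.fft.rfft(y @ self.P2, axis=1)
        # Element-wise product in frequency space = circular convolution
        # of the two Count Sketches.
        return np.fft.irfft(fx * fy, n=self.out_dim, axis=1)
```

The FFT is what makes the method cheap: convolving two out_dim-length sketches directly costs O(out_dim^2), while the FFT route costs O(out_dim log out_dim), which is why the module needs an FFT package.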

To retrain our framework, the following may need to be modified:

parser.py

In parser.py, img_path and annotations must be changed to point to your local coco_2017_train directory:

/path/to/your/local/coco17/image path/annotations/
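A hypothetical argparse sketch of these two path arguments. The flag names follow the text above, but the real parser.py may define them differently, and the defaults are placeholders to be replaced with your local paths.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    # Hypothetical sketch: flag names mirror the README; defaults are placeholders.
    parser = argparse.ArgumentParser(description="Attribute grounding training (sketch)")
    parser.add_argument("--img_path", type=str,
                        default="/path/to/your/local/coco17/images/",
                        help="directory with the COCO 2017 train images")
    parser.add_argument("--annotations", type=str,
                        default="/path/to/your/local/coco17/annotations/",
                        help="directory with the COCO 2017 annotation files")
    return parser
```

For example, build_parser().parse_args(["--img_path", "/data/coco17/train2017", "--annotations", "/data/coco17/annotations"]) overrides both placeholders from the command line instead of editing the defaults.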

The resume argument loads a pre-trained model of the overall framework.
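A minimal sketch of how a resume path is commonly consumed, assuming the checkpoint is either a bare state_dict or a dict holding one under a 'state_dict' key. The released checkpoints may be laid out differently, and maybe_resume is a hypothetical helper name.

```python
import os

import torch
import torch.nn as nn


def maybe_resume(model: nn.Module, resume: str = "") -> nn.Module:
    """Load weights from `resume` if a path is given (hypothetical helper)."""
    if not resume:
        return model  # no checkpoint: train from scratch
    if not os.path.isfile(resume):
        raise FileNotFoundError(f"no checkpoint found at {resume}")
    checkpoint = torch.load(resume, map_location="cpu")
    # Assumption: either {'state_dict': ...} or a bare state_dict.
    state_dict = checkpoint.get("state_dict", checkpoint)
    model.load_state_dict(state_dict)
    return model
```

Loading onto the CPU first keeps the helper usable on machines without a GPU; the model can be moved to a device afterwards.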

To download the pre-trained unsupervised network:

- Res50 can be found here.

- Model7 can be found here.