| title | paper link | code link |
|---|---|---|
| Improving Language Understanding by Generative Pre-Training | [paper] | [code(pytorch)] |
| ELMo: Deep contextualized word representations | [paper] | [code(tensorflow)] |
| BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding | [paper] | [code(tensorflow)][code(pytorch)] |
| ALBERT: A LITE BERT FOR SELF-SUPERVISED LEARNING OF LANGUAGE REPRESENTATIONS | [paper] | [code(tensorflow)][code(pytorch)] |
| RoBERTa: A Robustly Optimized BERT Pretraining Approach | [paper] | [code(pytorch)] |
| Language Models are Unsupervised Multitask Learners | [paper] | [code(tensorflow)] |
| Language Models are Few-Shot Learners | [paper] | [code] |
| XLNet: Generalized Autoregressive Pretraining for Language Understanding | [paper] | [code(tensorflow)] |

| title | paper link | code link |
|---|---|---|
| Identity Mappings in Deep Residual Networks | [paper] | [code] |
| Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks | [paper] | [code(pytorch)] |
| Mask R-CNN | [paper] | [code(tensorflow)][code(pytorch)] |
| You Only Look Once: Unified, Real-Time Object Detection | [paper] | [code(tensorflow)] |
| YOLOv3: An Incremental Improvement | [paper] | [code(tensorflow)][code(pytorch)] |
| YOLOv4: Optimal Speed and Accuracy of Object Detection | [paper] | [code(tensorflow)] |
| YOLOv5 | [paper] | [code(pytorch)] |
| Image Transformer | [paper] | [code(pytorch)] |

| title | paper link | code link |
|---|---|---|
| Looking Fast and Slow: Memory-Guided Mobile Video Object Detection | [paper] | [code(tensorflow)] |
| Context R-CNN: Long Term Temporal Context for Per-Camera Object Detection | [paper] | [code(tensorflow)] |
| Optimizing Video Object Detection via a Scale-Time Lattice | [paper] | [code(pytorch)] |
| Mobile Video Object Detection with Temporally-Aware Feature Maps | [paper] | [code(pytorch)] |
| X3D: Expanding Architectures for Efficient Video Recognition | [paper] | [code(pytorch)] |
| SibNet: Sibling Convolutional Encoder for Video Captioning | [paper] | [code] |
| SAM: Modeling Scene, Object and Action with Semantics Attention Modules for Video Recognition | [paper] | [code] |
| Bottleneck Transformers for Visual Recognition | [paper] | [code(pytorch)] |

| title | paper link | code link |

|---|---|---|

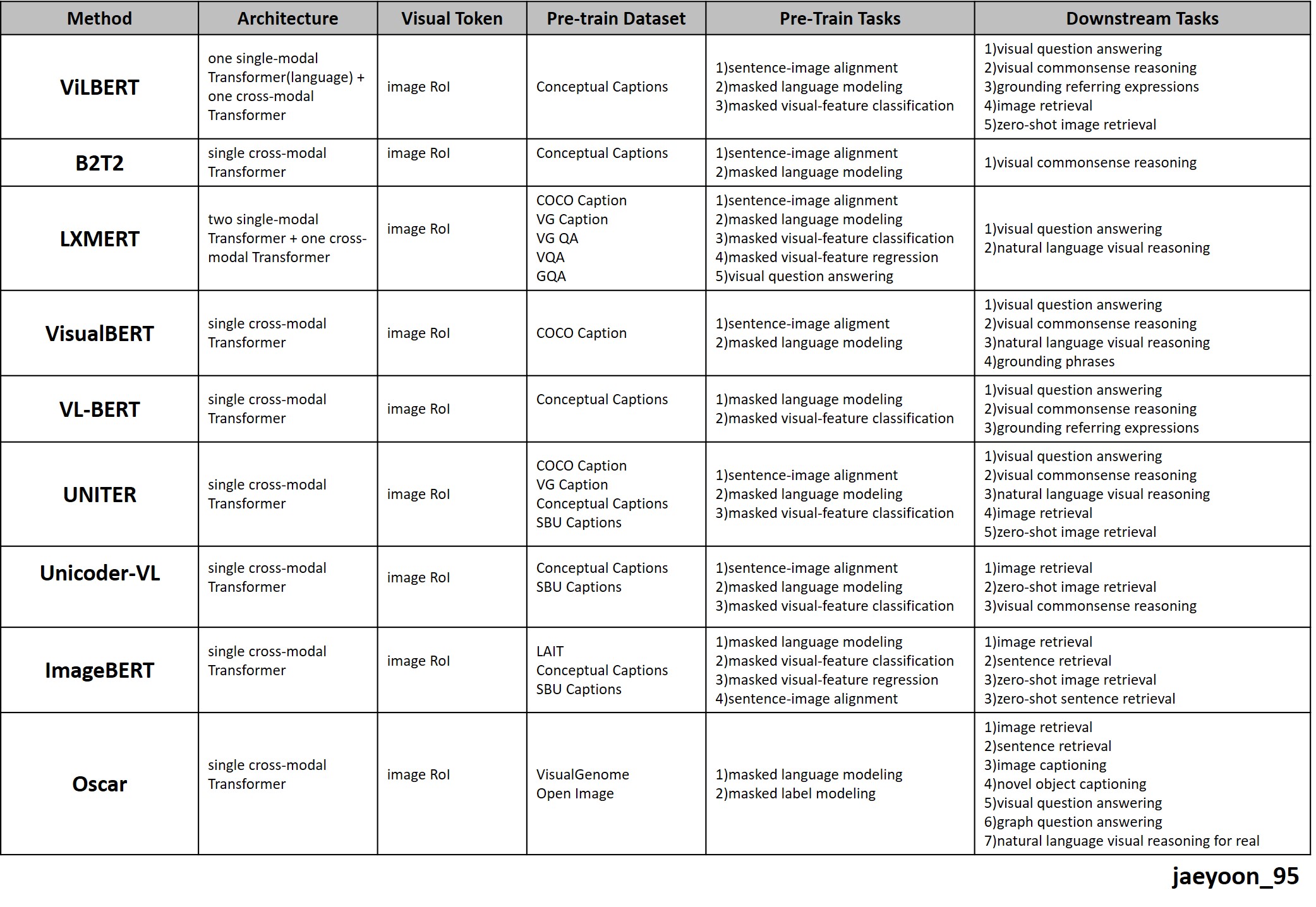

| ViLBERT: Pretraining Task-Agnostic Visiolinguistic Representations for Vision-and-Language Tasks | [paper] | [code(pytorch)] |

| 12-in-1: Multi-Task Vision and Language Representation Learning | [paper] | [code(pytorch)] |

| LXMERT: Learning Cross-Modality Encoder Representations from Transformers | [paper] | [code(pytorch)] |

| VISUALBERT: A SIMPLE AND PERFORMANT BASELINE FOR VISION AND LANGUAGE | [paper] | [code(pytorch)] |

| VL-BERT: Pre-training of Generic Visual-Linguistic Representations | [paper] | [code(pytorch)] |

| UNITER: LEARNING UNIVERSAL IMAGE-TEXT REPRESENTATIONS | [paper] | [code(pytorch)] |

| Unicoder-VL: A Universal Encoder for Vision and Language by Cross-modal Pre-training | [paper] | [code(pytorch)] |

| Large-Scale Adversarial Training for Vision-and-Language Representation Learning | [paper] | [code(pytorch)] |

| Fusion of Detected Objects in Text for Visual Question Answering | [paper] | [code(tensorflow)] |

| ERNIE-ViL: Knowledge Enhanced Vision-Language Representations Through Scene Graph | [paper] | [code] |

| X-LXMERT: Paint, Caption and Answer Questions with Multi-Modal Transformers | [paper] | [code] |

| Pixel-BERT: Aligning Image Pixels with Text by Deep Multi-Modal Transformers | [paper] | [code] |

| M6-v0: Vision-and-Language Interaction for Multi-modal Pretraining | [paper] | [code] |

| Unified Vision-Language Pre-Training for Image Captioning and VQA | [paper] | [code] |

| Multimodal Pretraining Unmasked:Unifying the Vision and Language BERTs | [paper] | [code] |

| VinVL: Making Visual Representations Matter in Vision-Language Models | [paper] | [code] |

| Seeing past words: Testing the cross-modal capabilities of pretrained V&L models | [paper] | [code] |

| Inferring spatial relations from textual descriptions of images | [paper] | [code] |

| DMRFNet: Deep Multimodal Reasoning and Fusion for Visual Question Answering and explanation generation | [paper] | [code] |

| Scheduled Sampling in Vision-Language Pretraining with Decoupled Encoder-Decoder Network | [paper] | [code(pytorch)] |

| Conceptual 12M: Pushing Web-Scale Image-Text Pre-Training To Recognize Long-Tail Visual Concepts | [paper] | [code] |

| Scaling Up Visual and Vision-Language Representation Learning With Noisy Text Supervision | [paper] | [code] |

| Transformer is All You Need:Multimodal Multitask Learning with a Unified Transformer | [paper] | [code] |

| WIT: Wikipedia-based Image Text Dataset for Multimodal Multilingual Machine Learning | [paper] | [code] |

| title | paper link | code link |
|---|---|---|
| VideoBERT: A Joint Model for Video and Language Representation Learning | [paper] | [code] |
| UniVL: A Unified Video and Language Pre-Training Model for Multimodal Understanding and Generation | [paper] | [code] |
| Multi-modal Circulant Fusion for Video-to-Language and Backward | [paper] | [code] |
| Video-Grounded Dialogues with Pretrained Generation Language Models | [paper] | [code] |
| Deep Extreme Cut: From Extreme Points to Object Segmentation | [paper] | [code(pytorch)] |
| Integrating Multimodal Information in Large Pretrained Transformers | [paper] | [code(pytorch)] |
| Improving LSTM-based Video Description with Linguistic Knowledge Mined from Text | [paper] | [code(caffe)] |
| PARAMETER EFFICIENT MULTIMODAL TRANSFORMERS FOR VIDEO REPRESENTATION LEARNING | [paper] | [code] |
| LoGAN: Latent Graph Co-Attention Network for Weakly-Supervised Video Moment Retrieval | [paper] | [code] |
| VX2TEXT: End-to-End Learning of Video-Based Text Generation From Multimodal Inputs | [paper] | [code] |
| HERO: Hierarchical Encoder for Video+Language Omni-representation Pre-training | [paper] | [code(pytorch)] |
| Less is More: CLIPBERT for Video-and-Language Learning via Sparse Sampling | [paper] | [code(pytorch)] |

| title | paper link | code link |
|---|---|---|
| Knowledge Enhanced Contextual Word Representations | [paper] | [code(pytorch)] |
| Why Do Masked Neural Language Models Still Need Commonsense Repositories to Handle Semantic Variations in Question Answering? | [paper] | [code] |
| SentiLARE: Sentiment-Aware Language Representation Learning with Linguistic Knowledge | [paper] | [code] |
| Acquiring Knowledge from Pre-trained Model to Neural Machine Translation | [paper] | [code] |
| Knowledge-Aware Language Model Pretraining | [paper] | [code] |
| Pretrained Encyclopedia: Weakly Supervised Knowledge-Pretrained Language Model | [paper] | [code] |

| title | paper link | code link |
|---|---|---|
| Reasoning over Vision and Language: Exploring the Benefits of Supplemental Knowledge | [paper] | [code] |
| KVL-BERT: Knowledge Enhanced Visual-and-Linguistic BERT for Visual Commonsense Reasoning | [paper] | [code] |