Mohammad Fahes¹, Tuan-Hung Vu¹,², Andrei Bursuc¹,², Patrick Pérez¹,², Raoul de Charette¹

¹ Inria, Paris, France.

² valeo.ai, Paris, France.

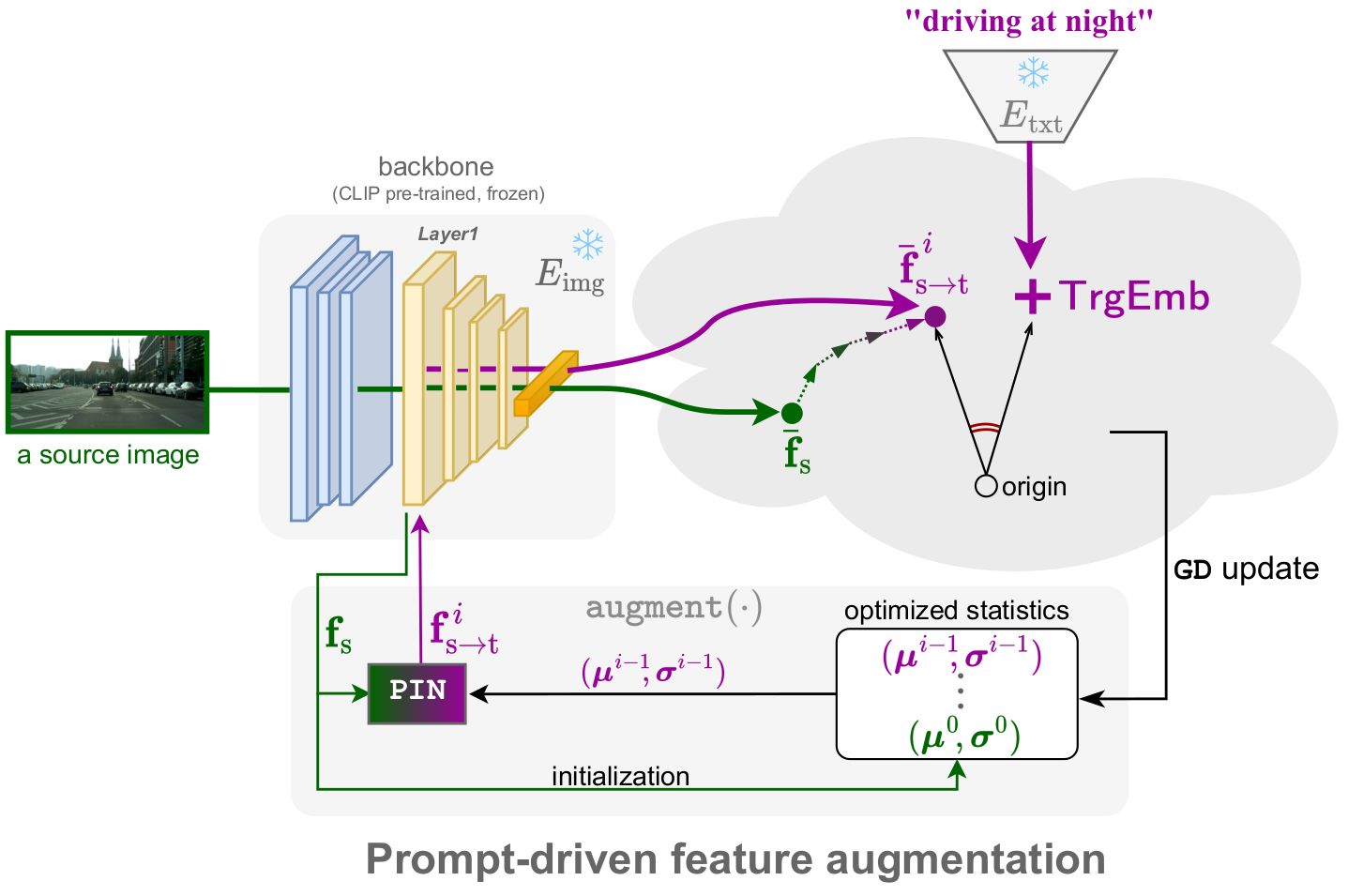

TL;DR: PØDA (or PODA) is a simple feature augmentation method for zero-shot domain adaptation guided by a single textual description of the target domain.

Project page: https://astra-vision.github.io/PODA/

Paper: https://arxiv.org/abs/2212.03241

@InProceedings{fahes2023poda,
  title={P{\O}DA: Prompt-driven Zero-shot Domain Adaptation},
  author={Fahes, Mohammad and Vu, Tuan-Hung and Bursuc, Andrei and P{\'e}rez, Patrick and de Charette, Raoul},
  booktitle={ICCV},
  year={2023}
}

Overview of PØDA

We propose Prompt-driven Instance Normalization (PIN), which augments feature styles based on the similarity between the features and the target domain description.
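As a rough illustration of what augmenting feature styles can look like, the sketch below assumes that PIN takes an AdaIN-like form: each feature channel is re-normalized to a new mean and standard deviation. In PØDA those target statistics would be optimized so that the stylized features move closer to the embedding of the prompt; here they are simply passed in. The function names `pin` and `cosine_similarity` are hypothetical and are not taken from the repository.

```python
import math
from statistics import fmean, pstdev


def pin(channel, target_mu, target_sigma, eps=1e-5):
    """Re-normalize one feature channel to the given mean and std.

    Assumed AdaIN-style form: sigma * (f - mean(f)) / std(f) + mu.
    """
    mu = fmean(channel)
    sigma = pstdev(channel) + eps  # eps avoids division by zero
    return [target_sigma * (x - mu) / sigma + target_mu for x in channel]


def cosine_similarity(a, b):
    """Cosine similarity between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy channel: flattened spatial activations of a single feature map.
source_channel = [0.2, 0.4, 0.6, 0.8]
stylized = pin(source_channel, target_mu=1.0, target_sigma=2.0)
```

In a full pipeline, `target_mu` and `target_sigma` would be the variables updated by gradient descent, with a loss built on something like `1 - cosine_similarity(image_embedding, text_embedding)`. That part requires CLIP and is left out of this sketch.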

Test on an unseen YouTube video of night driving:

Training dataset: Cityscapes

Prompt: "driving at night"

- News

- Installation

- Running PODA

- Inference & Visualization

- Qualitative Results

- PODA for Object Detection

- License

- Acknowledgement

News

- 19/08/2023: Camera-ready version is on arXiv.

- 14/07/2023: PODA is accepted at ICCV 2023.

Installation

First, create a new conda environment with the required packages:

conda env create --file environment.yml

Then activate the environment:

conda activate poda_env

- CITYSCAPES: Follow the instructions on the Cityscapes website to download the images and semantic segmentation ground truths. Please follow this dataset directory structure:

<CITYSCAPES_DIR>/       % Cityscapes dataset root
├── leftImg8bit/        % input images (leftImg8bit_trainvaltest.zip)
└── gtFine/             % semantic segmentation labels (gtFine_trainvaltest.zip)

- ACDC: Download the ACDC images and ground truths from the ACDC website. Please follow this dataset directory structure:

<ACDC_DIR>/             % ACDC dataset root
├── rgb_anon/           % input images (rgb_anon_trainvaltest.zip)
└── gt/                 % semantic segmentation labels (gt_trainval.zip)

- GTA5: Download the GTA5 images and ground truths from the GTA5 dataset page. Please follow this dataset directory structure:

<GTA5_DIR>/             % GTA5 dataset root
├── images/             % input images
└── labels/             % semantic segmentation labels
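Before launching training, it can help to confirm that each dataset root matches the layouts listed above. The following is a minimal sketch that uses only the sub-directory names from those layouts; the function name `missing_subdirs` and the dictionary keys are hypothetical, and the ACDC image folder is assumed to be `rgb_anon`, matching the archive name `rgb_anon_trainvaltest.zip`.

```python
from pathlib import Path

# Sub-directories named in the layouts above; the dictionary keys are
# hypothetical labels chosen for this sketch.
EXPECTED_SUBDIRS = {
    "cityscapes": ("leftImg8bit", "gtFine"),
    "acdc": ("rgb_anon", "gt"),
    "gta5": ("images", "labels"),
}


def missing_subdirs(dataset, root):
    """Return the expected sub-directories that are absent under root."""
    root = Path(root)
    return [
        name for name in EXPECTED_SUBDIRS[dataset]
        if not (root / name).is_dir()
    ]
```

Calling `missing_subdirs("cityscapes", "<CITYSCAPES_DIR>")` returns an empty list when both folders are present, and otherwise the names that still need to be extracted.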

The source models are available here.

Running PODA

Source training:

python3 main.py \
    --dataset <source_dataset> \
    --data_root <path_to_source_dataset> \
    --data_aug \
    --lr 0.1 \
    --crop_size 768 \
    --batch_size 2 \
    --freeze_BB \
    --ckpts_path saved_ckpts

Feature optimization:

python3 PIN_aug.py \
    --dataset <source_dataset> \
    --data_root <path_to_source_dataset> \
    --total_it 100 \
    --resize_feat \
    --domain_desc <target_domain_description> \
    --save_dir <directory_for_saved_statistics>

Model adaptation:

python3 main.py \
    --dataset <source_dataset> \
    --data_root <path_to_source_dataset> \
    --ckpt <path_to_source_checkpoint> \
    --batch_size 8 \
    --lr 0.01 \
    --ckpts_path adapted \
    --freeze_BB \
    --train_aug \
    --total_itrs 2000 \
    --path_mu_sig <path_to_augmented_statistics>
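The three commands above feed into one another: the checkpoint written by source training is what `--ckpt` loads during adaptation, and the statistics saved by `PIN_aug.py` are presumably what `--path_mu_sig` reads. The sketch below only assembles the argument lists from the flags shown above so that this data flow is explicit. The function name `build_poda_commands` and its parameters are hypothetical, and the source checkpoint path must be supplied by the caller because the file name written under `saved_ckpts` is not stated here.

```python
import shlex


def build_poda_commands(dataset, data_root, domain_desc, stats_dir, source_ckpt):
    """Assemble the three PODA training-stage commands as argument lists."""
    common = ["--dataset", dataset, "--data_root", data_root]
    source_training = [
        "python3", "main.py", *common,
        "--data_aug", "--lr", "0.1", "--crop_size", "768",
        "--batch_size", "2", "--freeze_BB", "--ckpts_path", "saved_ckpts",
    ]
    feature_optimization = [
        "python3", "PIN_aug.py", *common,
        "--total_it", "100", "--resize_feat",
        "--domain_desc", domain_desc, "--save_dir", stats_dir,
    ]
    adaptation = [
        "python3", "main.py", *common,
        "--ckpt", source_ckpt, "--batch_size", "8", "--lr", "0.01",
        "--ckpts_path", "adapted", "--freeze_BB", "--train_aug",
        "--total_itrs", "2000",
        # Assumption: adaptation reads the statistics from the directory
        # that PIN_aug.py wrote to.
        "--path_mu_sig", stats_dir,
    ]
    return [source_training, feature_optimization, adaptation]


if __name__ == "__main__":
    commands = build_poda_commands(
        dataset="<source_dataset>",
        data_root="<path_to_source_dataset>",
        domain_desc="driving at night",
        stats_dir="<directory_for_saved_statistics>",
        source_ckpt="<path_to_source_checkpoint>",
    )
    for cmd in commands:
        # shlex.join quotes arguments such as the multi-word prompt.
        print(shlex.join(cmd))
```

Printing the commands instead of executing them keeps the sketch side-effect free; each list could be passed to `subprocess.run(cmd, check=True)` from the repository root once the placeholders are replaced with real values.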

Evaluation:

python3 main.py \
    --dataset <dataset_name> \
    --data_root <dataset_path> \
    --ckpt <path_to_tested_model> \
    --test_only \
    --val_batch_size 1 \
    --ACDC_sub <ACDC_subset_if_tested_on_ACDC>
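When testing on ACDC, the command above is likely to be repeated once per subset. The sketch below builds one argument list per subset from the flags shown above. The helper name `build_eval_commands` is hypothetical, and the subset names are an assumption: they mirror the four ACDC conditions, and `"ACDC"` stands in for whatever dataset name `main.py` actually expects. Check the argument parser in `main.py` for the accepted values.

```python
def build_eval_commands(dataset_name, data_root, ckpt, acdc_subsets=()):
    """Build one evaluation command per ACDC subset, or a single command."""
    base = [
        "python3", "main.py",
        "--dataset", dataset_name, "--data_root", data_root,
        "--ckpt", ckpt, "--test_only", "--val_batch_size", "1",
    ]
    if not acdc_subsets:
        # Datasets other than ACDC do not need the --ACDC_sub flag.
        return [base]
    return [base + ["--ACDC_sub", subset] for subset in acdc_subsets]


# Assumption: subset names follow the four ACDC conditions, and "ACDC"
# is a placeholder for the dataset name accepted by main.py.
ACDC_CONDITIONS = ("night", "snow", "rain", "fog")
commands = build_eval_commands(
    "ACDC", "<ACDC_DIR>", "<path_to_tested_model>", ACDC_CONDITIONS
)
```

Each entry in `commands` is a complete argument list, so results for the different conditions can be collected in one loop.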

Inference & Visualization

To test any model on any image and visualize the output, add the images to the predict_test directory and run:

python3 predict.py \
    --ckpt <ckpt_path> \
    --save_val_results_to <directory_for_saved_output_images>

Qualitative Results

PØDA for uncommon driving situations

PODA for Object Detection

Our feature augmentation is task-agnostic, as it operates at the level of the feature extractor. We show some results of PØDA for object detection. The metric is mAP (%).

License

PØDA is released under the Apache 2.0 license.

Acknowledgement

The code borrows heavily from an existing implementation of DeepLabv3+ and uses code from CLIP.