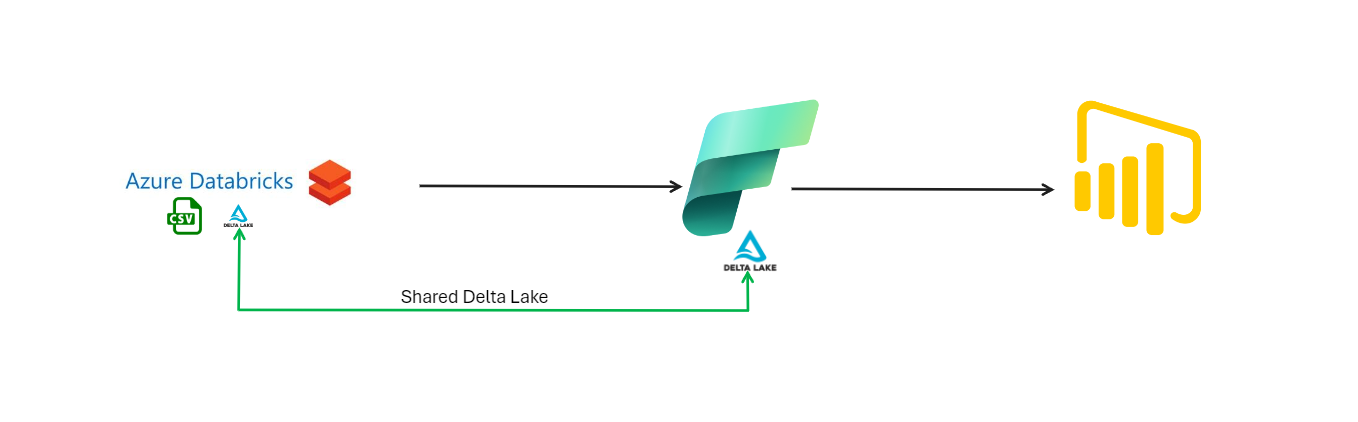

In this guide, you'll learn how to build a demo Delta Lake in Azure Databricks, link that new Delta Lake into Microsoft Fabric with a shortcut, make changes in Fabric that are reflected in the Delta Lake on both platforms, and finally create a simple AI-driven Power BI dashboard!

This repo provides everything you need to set up a working cross-platform demo between Azure Databricks, Fabric, and Power BI (now part of Fabric), including sample data to get started.

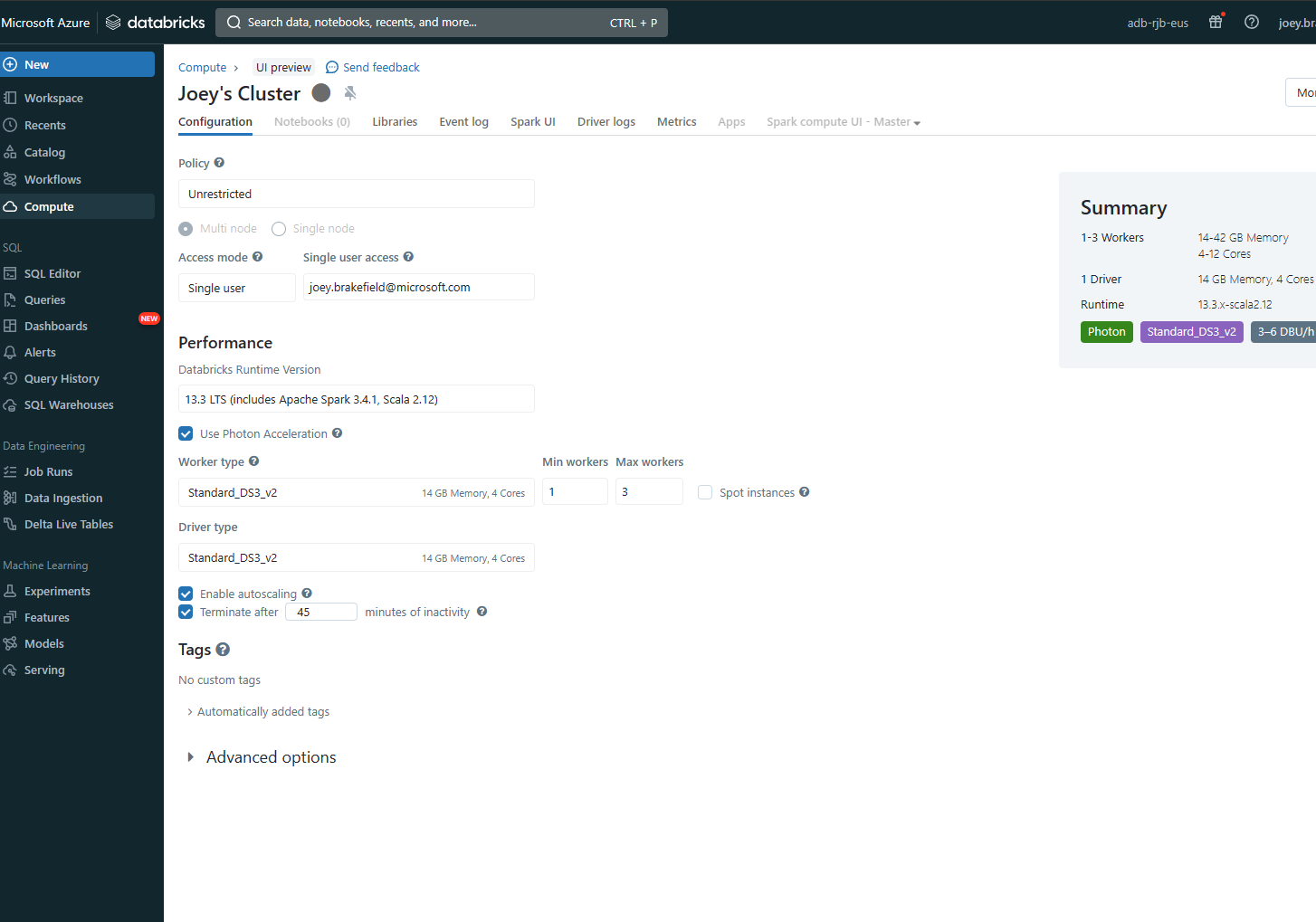

Set up a new Azure Databricks workspace or use an existing one.

Initialize your Azure Databricks cluster with general-purpose compute nodes as the workers.

Create an Azure App Registration/Service Principal for this effort and ensure it has Storage Blob Data Contributor rights on the storage account where you want to create the Delta Lake.

Record the secret at creation time, as you'll use it in the next section.

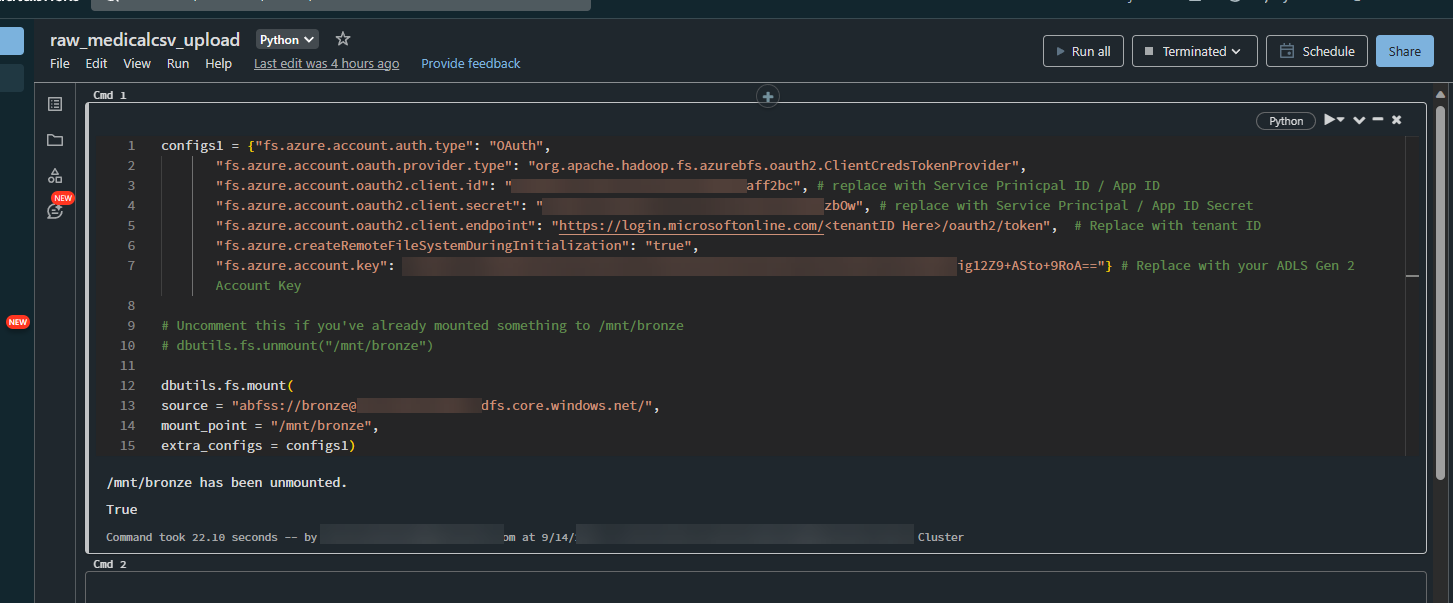

Upload the .dbc notebook file from this repository folder; it walks through creating a Delta Lake. Use the newly created cluster to run the notebook after making these modifications:

Update the first cell of the notebook with your credentials: the Tenant ID (inserted into the login.microsoftonline.com URI), the Service Principal ID/App ID, the Service Principal secret, and the Azure Storage Account key for the account where you want your lake. Basically, all the blurred spots in the image below.

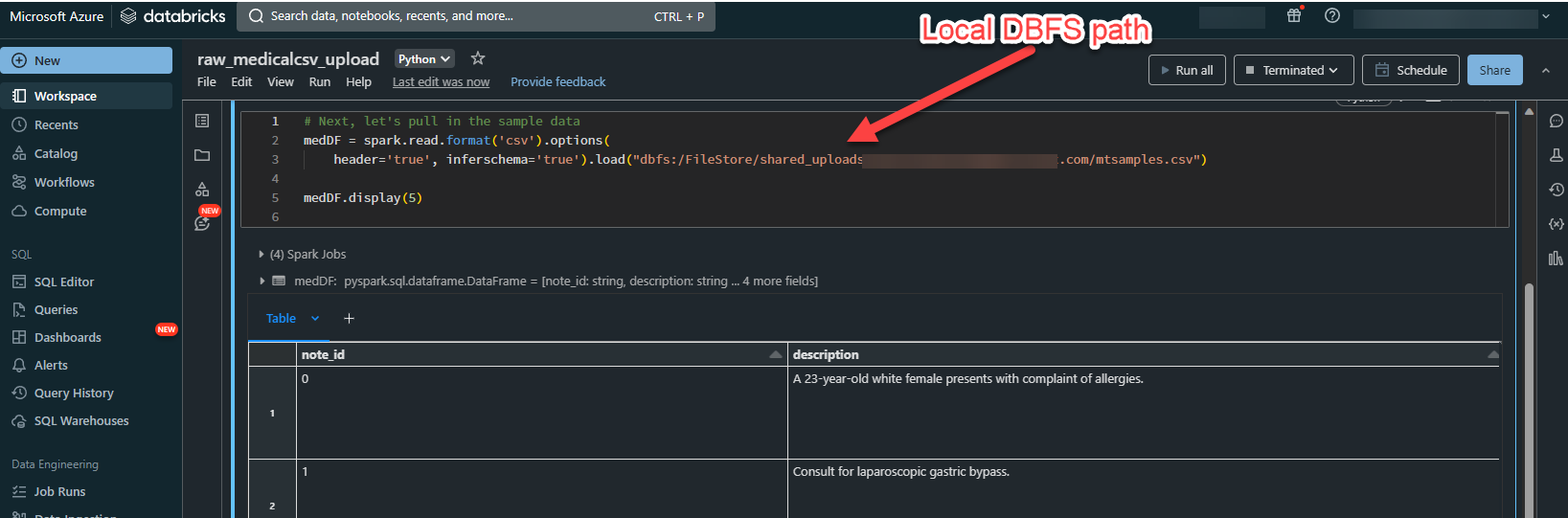
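Since the screenshot is blurred, here is a rough sketch of what a credentials cell of this kind can look like. It is not the notebook's exact code: it assumes the service principal authenticates with OAuth and that the container is mounted at `/mnt/bronze`. The function names, the container name, and all placeholder values are hypothetical.

```python
def build_oauth_configs(tenant_id: str, client_id: str, client_secret: str) -> dict:
    """Return ABFS OAuth settings for a service principal (client credentials)."""
    return {
        "fs.azure.account.auth.type": "OAuth",
        "fs.azure.account.oauth.provider.type":
            "org.apache.hadoop.fs.azurebfs.oauth2.ClientCredsTokenProvider",
        "fs.azure.account.oauth2.client.id": client_id,
        "fs.azure.account.oauth2.client.secret": client_secret,
        # The tenant ID is inserted into the login.microsoftonline.com URI.
        "fs.azure.account.oauth2.client.endpoint":
            f"https://login.microsoftonline.com/{tenant_id}/oauth2/token",
    }


def mount_lake(dbutils, storage_account: str, container: str, configs: dict,
               mount_point: str = "/mnt/bronze") -> None:
    """Mount an ADLS Gen 2 container; `dbutils` is provided by Databricks notebooks."""
    dbutils.fs.mount(
        source=f"abfss://{container}@{storage_account}.dfs.core.windows.net/",
        mount_point=mount_point,
        extra_configs=configs,
    )


# Placeholder values (assumptions): replace them with your own credentials.
configs = build_oauth_configs("<tenant-id>", "<app-id>", "<client-secret>")
# In a Databricks notebook:
# mount_lake(dbutils, "<storage-account>", "<container>", configs)
```

If the notebook authenticates with the storage account key instead, the matching Spark setting is `fs.azure.account.key.<storage-account>.dfs.core.windows.net`.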

Either upload the sample .csv file to the DBFS local store or reference it at `/mnt/bronze/<path you chose on ADLS Gen 2>`. In my example, I just uploaded the sample file to the Databricks workspace's DBFS store. Run the second cell, which selects the top 5 records from the dataframe so you can confirm everything is configured correctly.

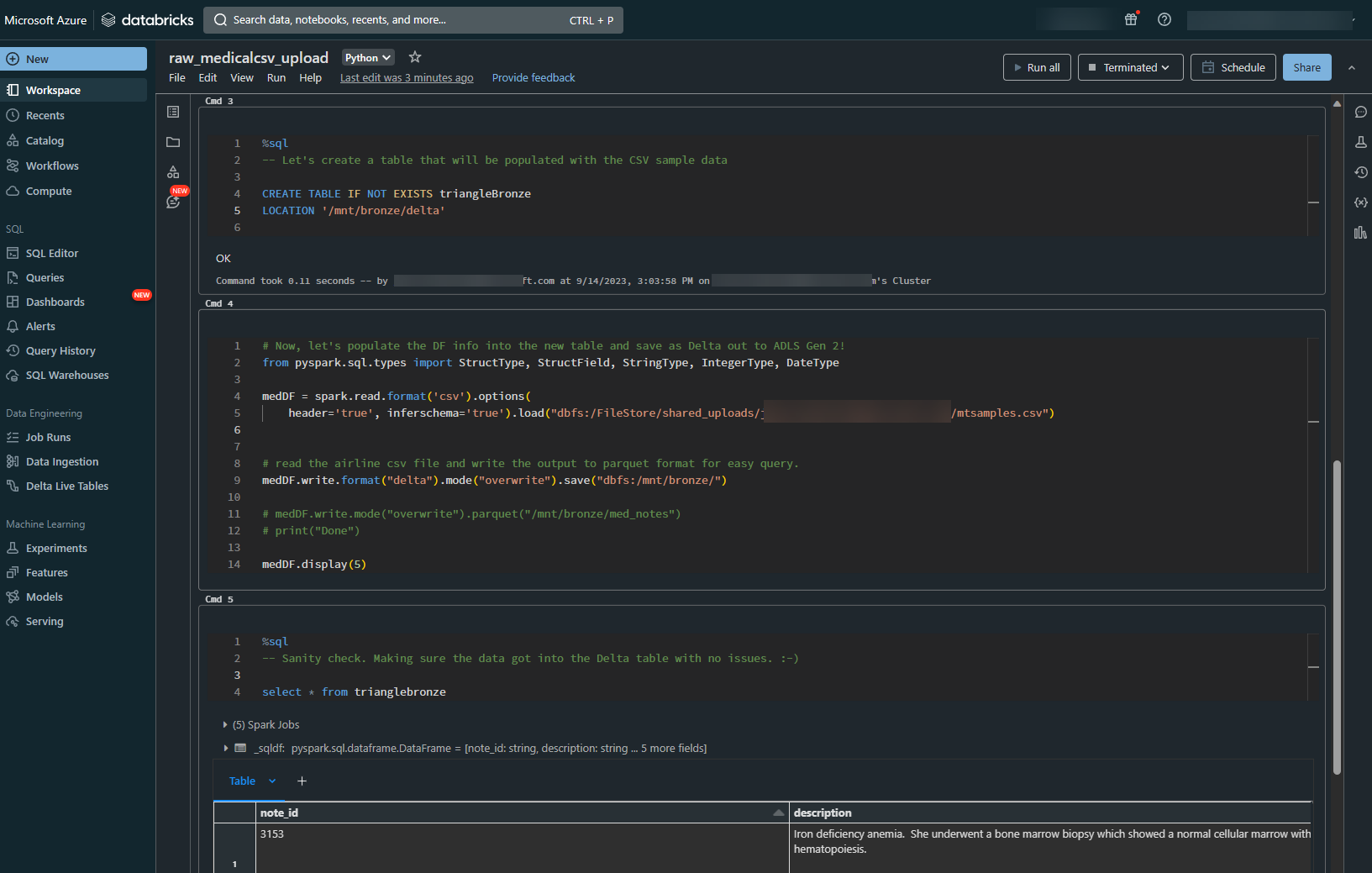
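As a minimal sketch of that second cell, assuming the sample file has a header row, the read could look like the following. The helper name and the file path are hypothetical; the notebook's own code may differ.

```python
def load_sample_csv(spark, path: str):
    """Read a CSV file with a header row into a Spark DataFrame, inferring types."""
    return (
        spark.read
        .option("header", "true")
        .option("inferSchema", "true")
        .csv(path)
    )


# In a Databricks notebook, where `spark` and `display` are predefined:
# df = load_sample_csv(spark, "dbfs:/FileStore/<your-sample-file>.csv")
# display(df.limit(5))  # show the top 5 records as a sanity check
```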

In the next two cells, we're going to create an empty table and load the data from the dataframe in Step 2 into that table. We're writing that out to the ADLS Gen 2 account that was configured in Step 1.
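A hedged sketch of the same outcome follows. Note that it swaps the order used in the notebook: instead of creating an empty table first, it writes the dataframe as Delta files and then registers a table over that location. The helper name, table name, and path are placeholders.

```python
def write_delta_table(spark, df, table_name: str, path: str) -> None:
    """Write `df` as Delta files at `path`, then register `table_name` over it."""
    df.write.format("delta").mode("overwrite").save(path)
    spark.sql(
        f"CREATE TABLE IF NOT EXISTS {table_name} USING DELTA LOCATION '{path}'"
    )


# In a Databricks notebook:
# write_delta_table(spark, df, "<table_name>",
#                   "/mnt/bronze/<path you chose on ADLS Gen 2>")
```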

Alright, you've got data in Delta Lake! Congrats. You can open Azure Storage Explorer to confirm that the Delta files are in the expected location before we switch to Fabric to continue the demo.

Open the Fabric portal and switch to the Data Engineering persona, using these docs as guidance.

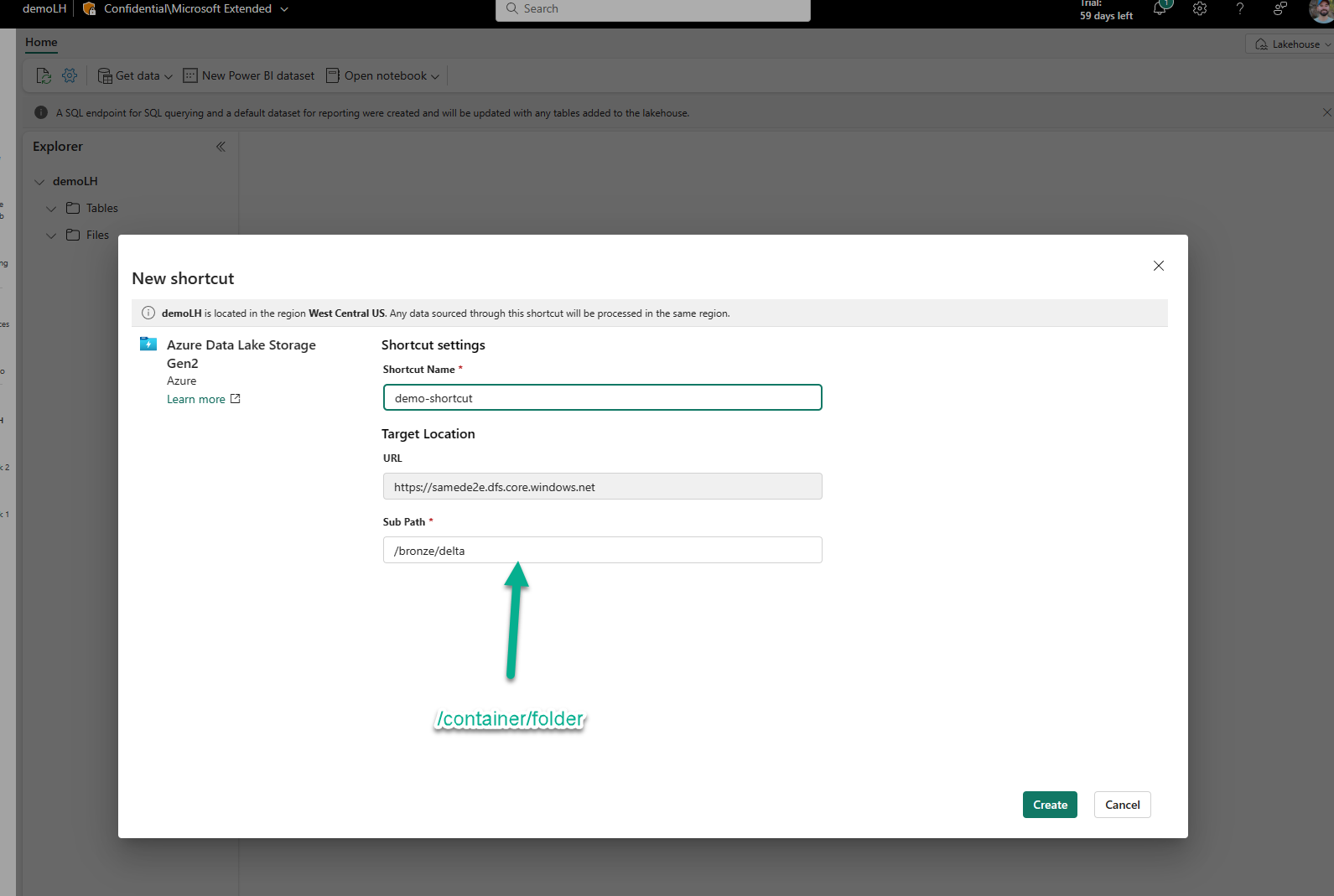

Once you've created a new lakehouse (here I'm using "demoLH"), make sure you "shortcut" your existing Databricks-created Delta Lake into Fabric by following these steps.

NOTE #1: Make sure to specify the DFS endpoint "https://yourStorageAccount.dfs.core.windows.net"
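If you want to double-check the URL before pasting it into the shortcut dialog, a small helper like this can build and validate it. Both function names are hypothetical; the only fact taken from the note above is the `dfs.core.windows.net` suffix.

```python
def dfs_endpoint(storage_account: str) -> str:
    """Build the ADLS Gen 2 (DFS) endpoint URL for a storage account name."""
    return f"https://{storage_account}.dfs.core.windows.net"


def is_dfs_endpoint(url: str) -> bool:
    """True for a DFS endpoint, False for others such as *.blob.core.windows.net."""
    return url.rstrip("/").endswith(".dfs.core.windows.net")


print(dfs_endpoint("yourStorageAccount"))
```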

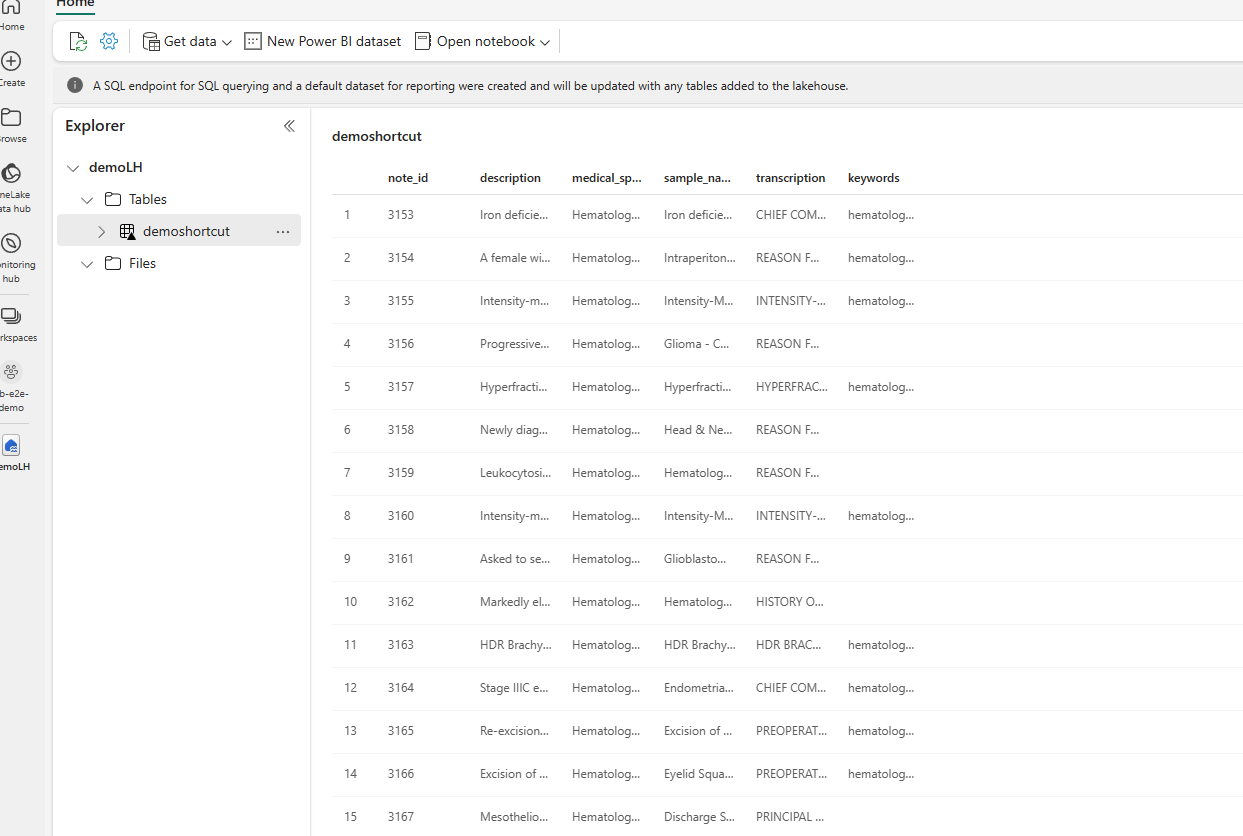

Now that your table is registered as a shortcut, you can click on it and notice that there is no column named "mrn".

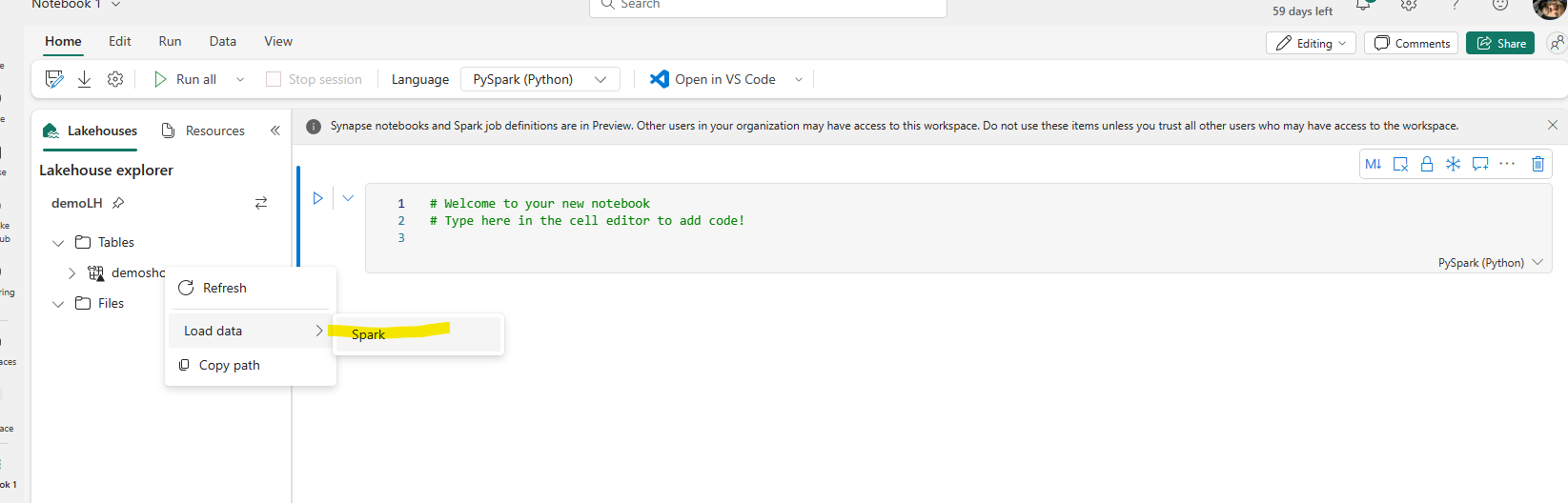

Now, we're going to modify the table by first loading the table into a dataframe. Right-click the shortcut delta table, then select "New Notebook".

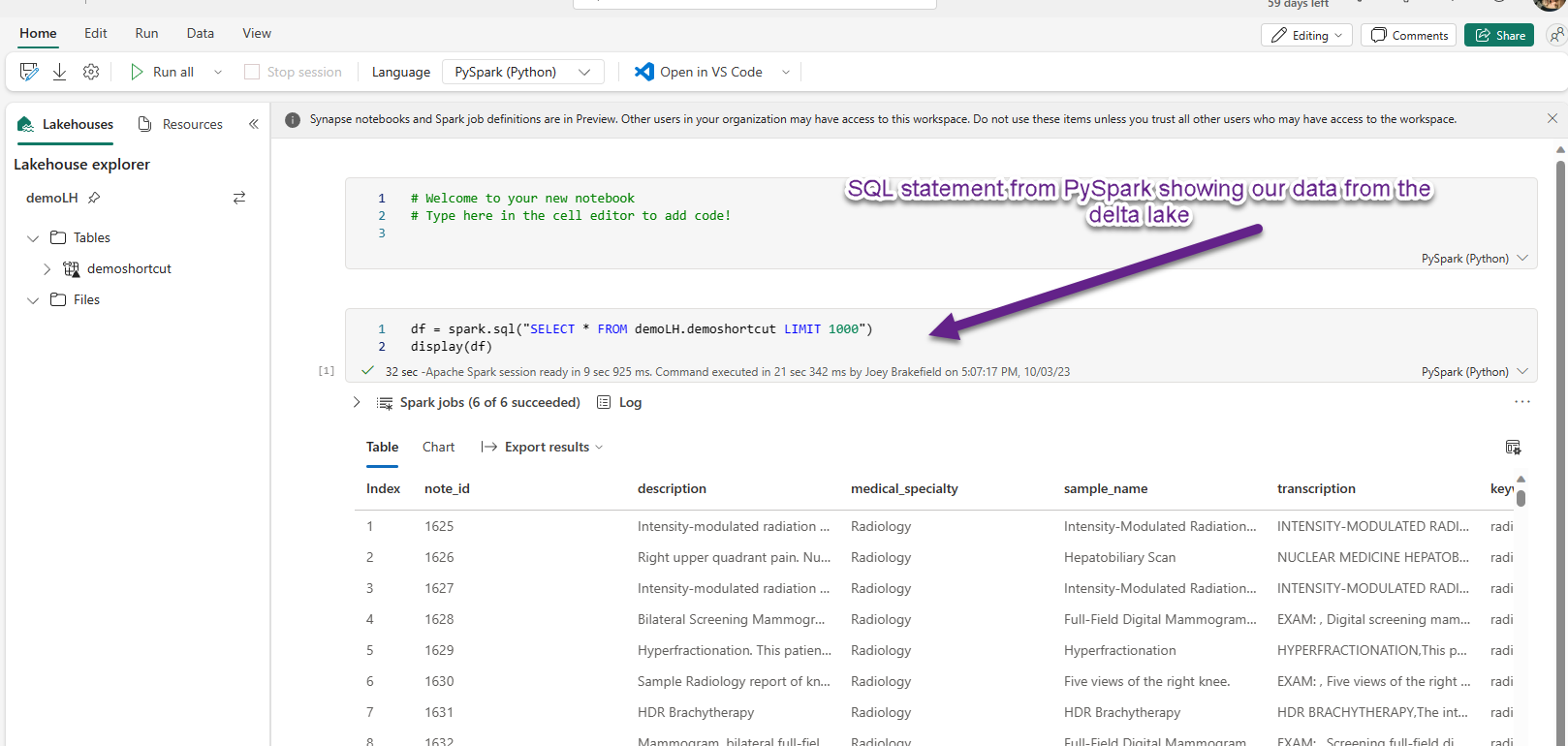

Once the new notebook opens, you'll right-click the table again and select "Load" and Fabric will write the first bits of code for you:
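The generated cell is typically a `spark.sql` query against the lakehouse table with a row limit. That is an assumption about Fabric's output, and the helper below is a hypothetical wrapper around the same idea. The table name is a placeholder.

```python
from typing import Optional


def load_shortcut_table(spark, lakehouse: str, table: str,
                        limit: Optional[int] = None):
    """Load a lakehouse table (shortcut tables included) into a Spark DataFrame."""
    query = f"SELECT * FROM {lakehouse}.{table}"
    if limit is not None:
        query += f" LIMIT {limit}"
    return spark.sql(query)


# In a Fabric notebook, where `spark` and `display` are predefined:
# df = load_shortcut_table(spark, "demoLH", "<your_shortcut_table>")
# display(df)
```

The limit is optional here on purpose: if you later write the dataframe back over the table, load it without a row limit so that no rows are dropped.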

Now, let's modify the table and make sure both Azure Databricks and Fabric see the change.

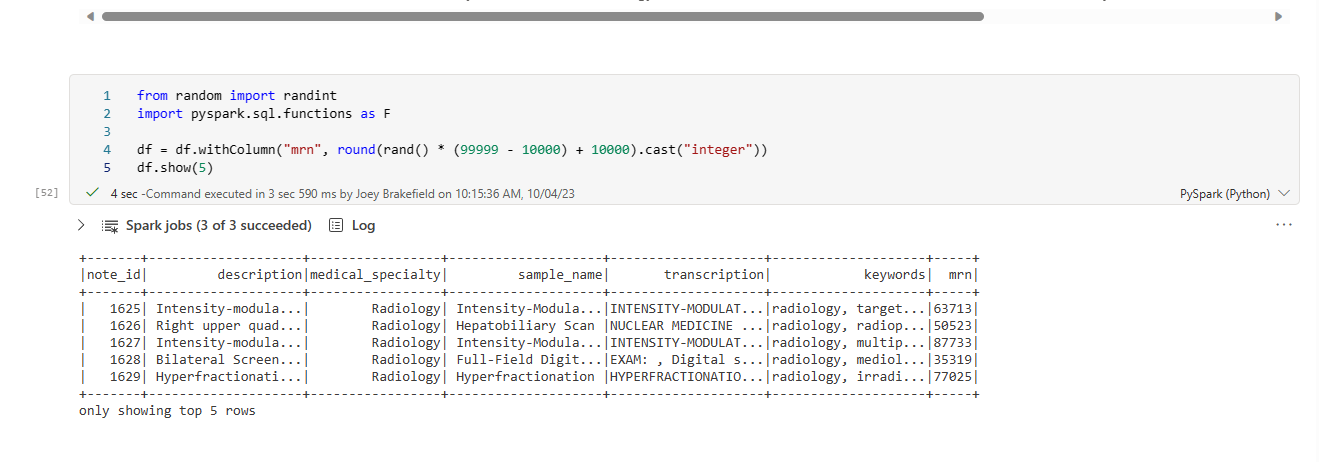

Create a new column called mrn and populate it with some random integers:

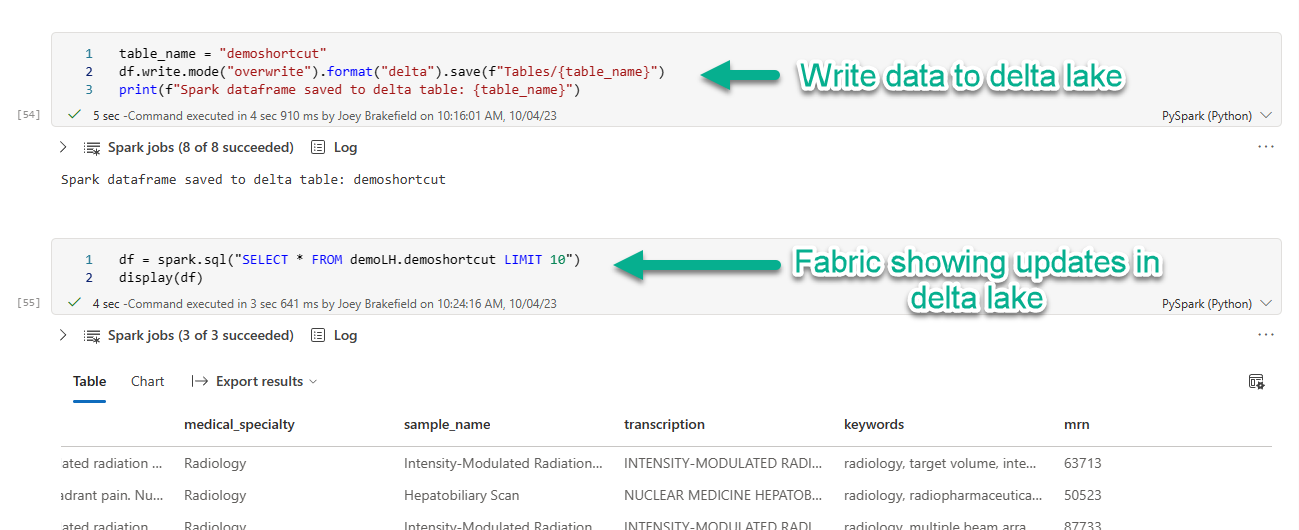
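One way to sketch this step uses a Spark SQL expression, so no extra imports are needed. The helper name and the value range are assumptions; the notebook in the screenshot may use `withColumn` instead.

```python
def add_mrn_column(df, max_value: int = 1_000_000):
    """Return `df` plus an integer `mrn` column of pseudo-random values in [0, max_value)."""
    # rand() yields a double in [0, 1); scaling and casting truncates to an integer.
    return df.selectExpr("*", f"CAST(rand() * {max_value} AS INT) AS mrn")


# In a Fabric notebook:
# df = add_mrn_column(df)
# display(df)
```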

Now, let's write the new column with new random values out to our delta lake so that we can see the changes in both Fabric and Azure Databricks:

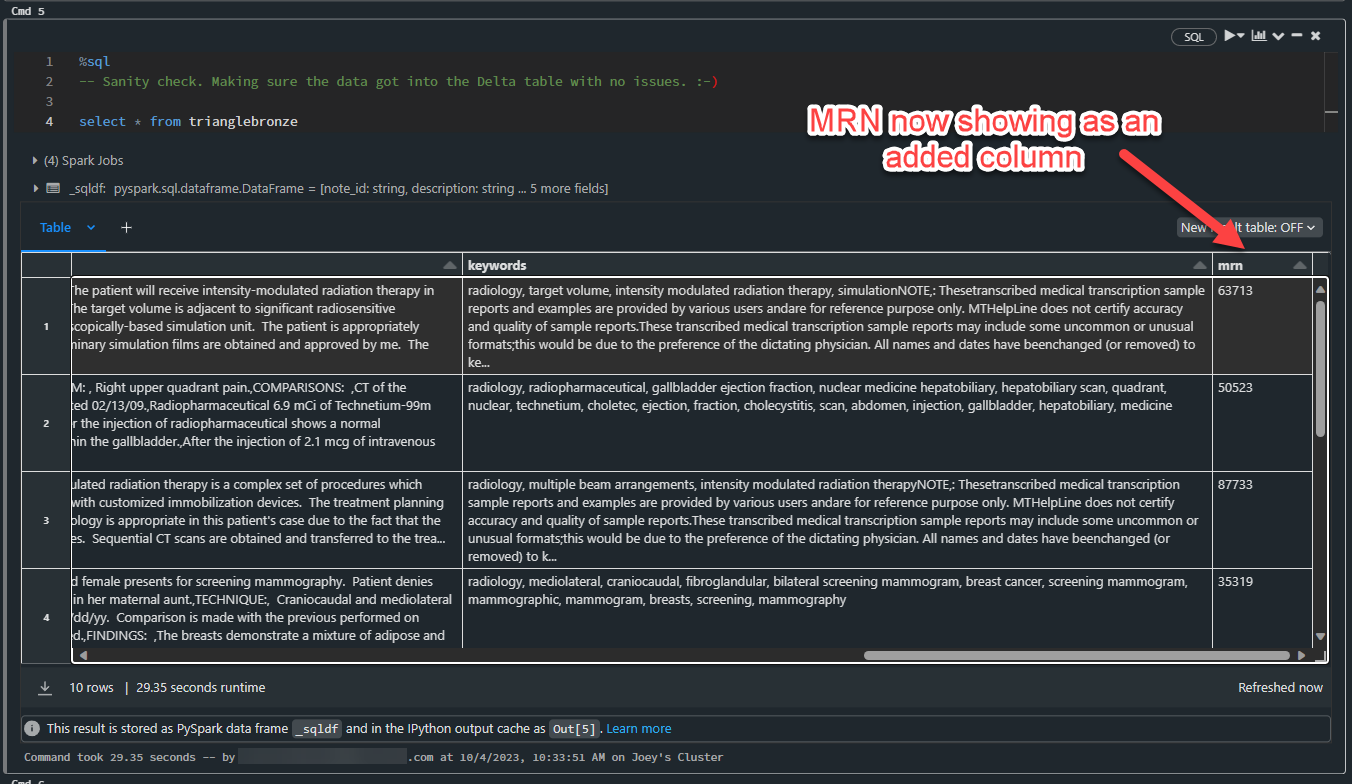
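A minimal sketch of the write-back, assuming the lakehouse is attached to the notebook and the shortcut is reachable under `Tables/`. Because the dataframe now has an extra column, the overwrite has to be allowed to change the table schema. The helper name and path are placeholders.

```python
def overwrite_with_new_schema(df, path: str) -> None:
    """Overwrite the Delta table at `path`, allowing the schema to change."""
    (
        df.write.format("delta")
        .mode("overwrite")
        .option("overwriteSchema", "true")  # accept the new `mrn` column
        .save(path)
    )


# In a Fabric notebook:
# overwrite_with_new_schema(df, "Tables/<your_shortcut_table>")
```

The identity doing the write needs write access to the underlying ADLS Gen 2 location, since a shortcut points at the original files rather than a copy.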

And finally, let's make sure that the data is reflected in the delta lake on Azure Databricks:

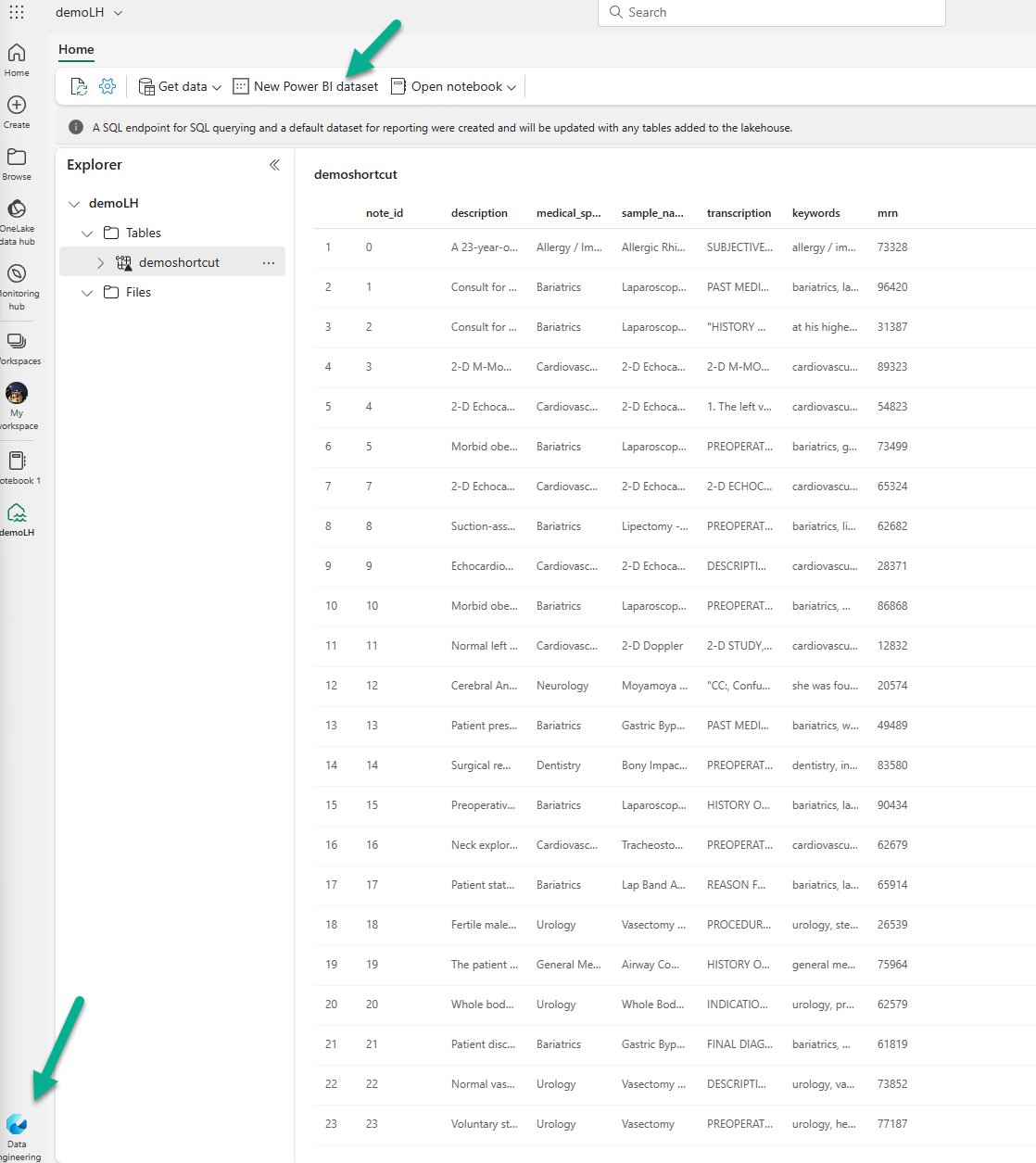

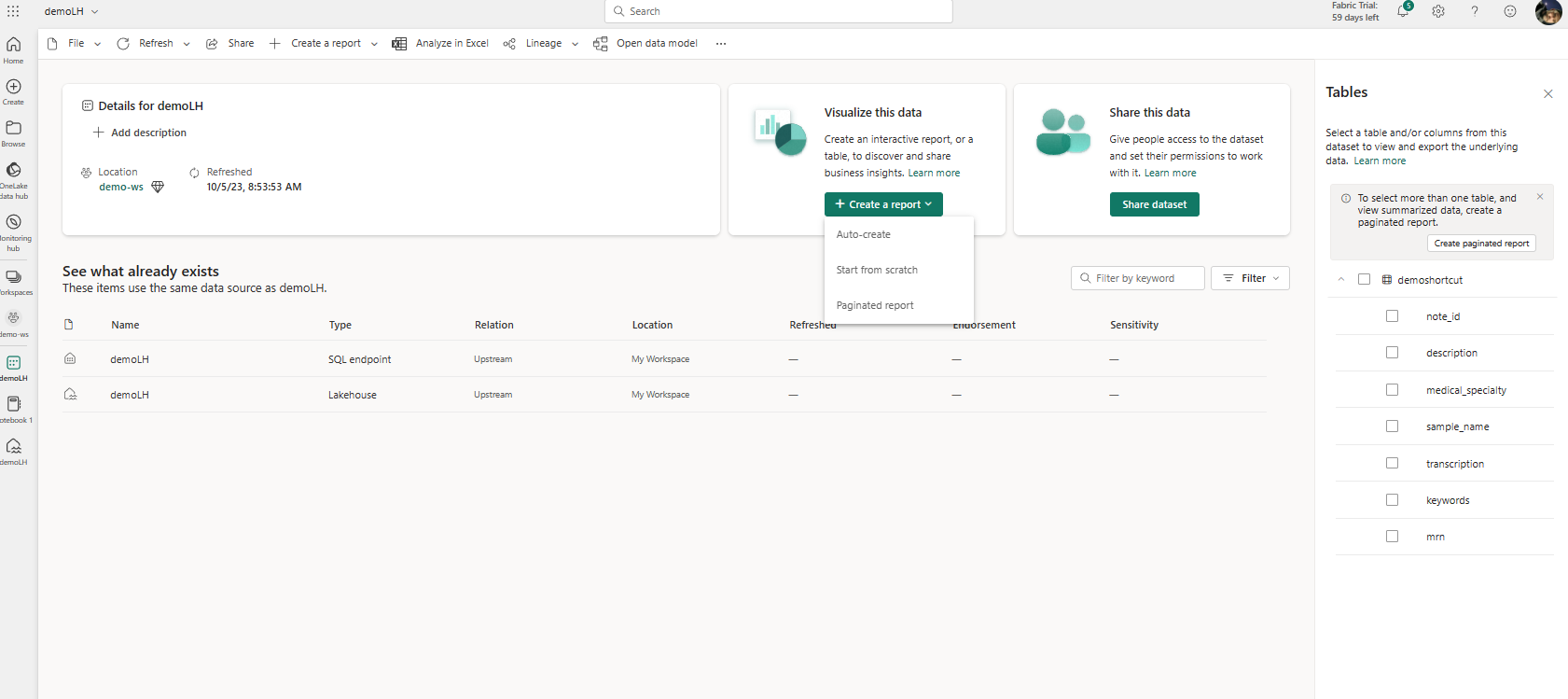
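Back in Azure Databricks, a check along these lines can confirm the change without eyeballing the output. The helper name and the path are hypothetical.

```python
def has_column(spark, path: str, column: str = "mrn") -> bool:
    """Re-read the Delta table at `path` and report whether `column` is in its schema."""
    df = spark.read.format("delta").load(path)
    return column in df.columns


# In a Databricks notebook:
# has_column(spark, "/mnt/bronze/<path you chose on ADLS Gen 2>")
```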

We'll start by creating a new Power BI dataset: from the "Data Engineering" persona, click New Power BI dataset.

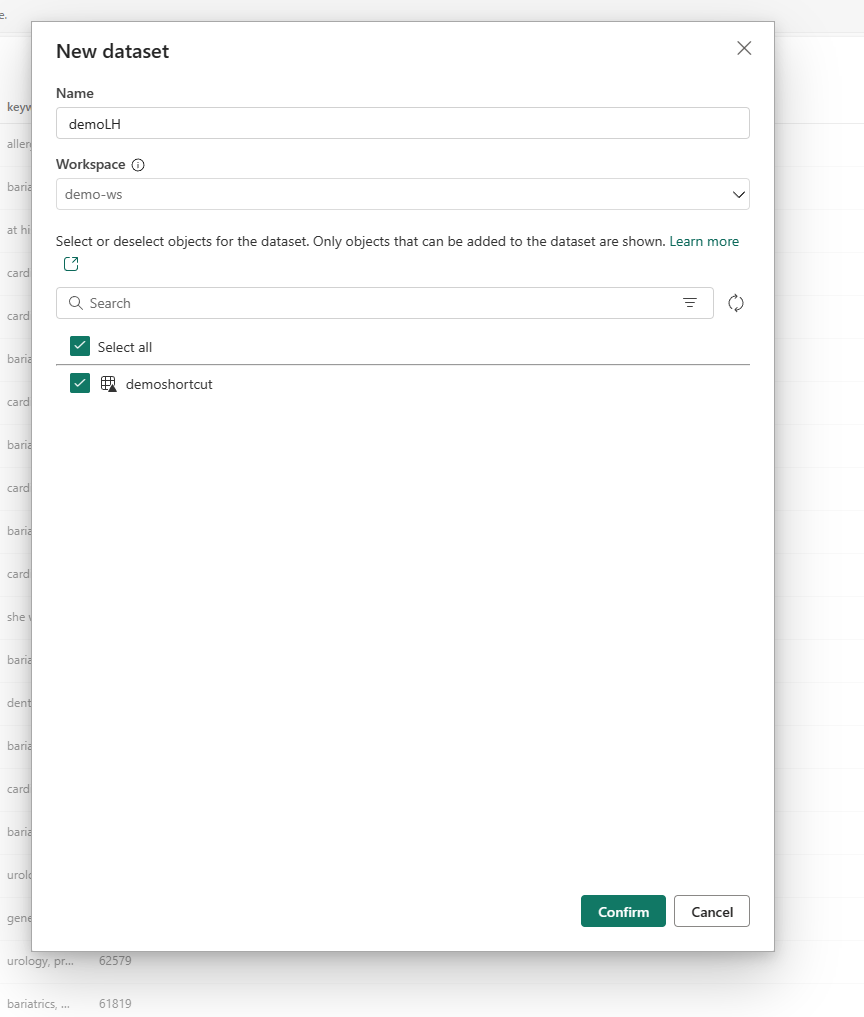

Now, let's select our table:

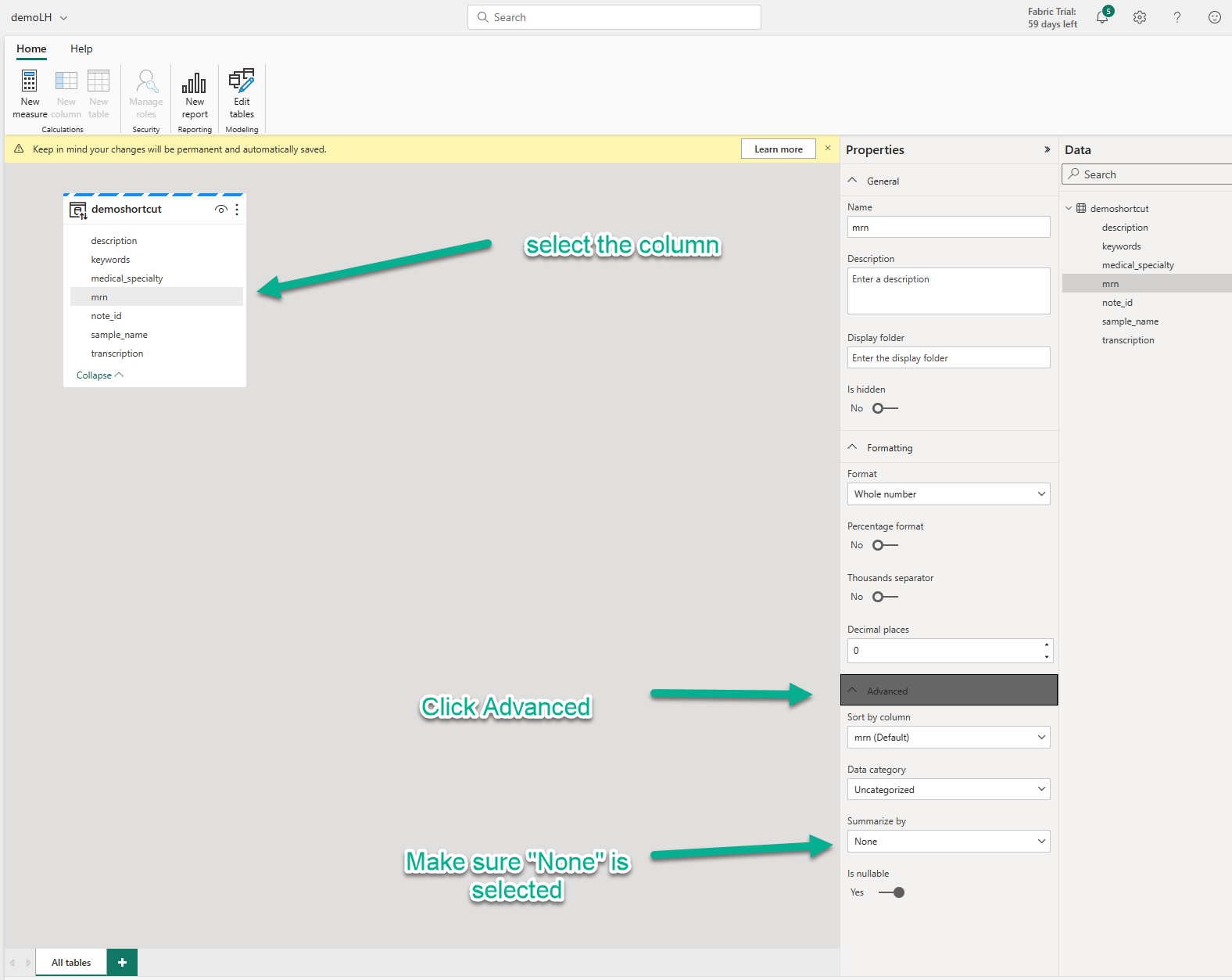

And make sure that "mrn" is added to the dataset. While we're here, let's remove the default aggregation, which Power BI applied because it detected that the column from our data science work holds integer values.

Go back to your workspace and select the dataset you just created, and you'll be presented with the following screen. Select the down chevron on the + Create a report button and select Auto-create.

Alright, let's look at the final Power BI report to check that the auto-generated report looks interesting, and make tweaks if we need to. Typically, I've found that auto-created reports do a great job of summarizing the useful columns in a dataset. For more info about adjusting the auto-created report, click here.

Alright, that's a wrap. Remember to delete either the individual artifacts or the entire workspace when you're done. If you have questions about Microsoft Fabric, remember to check the awesome, always-updating tutorials on Microsoft Learn. Happy Fabric-ing!