This repository explores how to visualize what a neural network has learned once it is trained. The basic idea is to keep the network weights fixed and run backpropagation on the input image instead, changing the image so that it excites the target output as strongly as possible.

We thus obtain images which are "idealized" versions of our target classes.
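The idea can be sketched in a few lines of PyTorch. This is a minimal illustration under stated assumptions, not necessarily the code in `generate_image.py`: the function name `generate_idealized_input`, the starting image (all zeros), the Adam optimizer, the step count, and the clamping to `[0, 1]` are all choices made for this example, and `toy_model` is a hypothetical untrained stand-in for the trained MNIST network.

```python
import torch
import torch.nn as nn


def generate_idealized_input(model, target_class, shape=(1, 1, 28, 28),
                             steps=100, lr=0.1):
    """Gradient ascent on the input image; the network weights stay fixed."""
    model.eval()
    # Freeze the weights so that only the input image is optimized.
    for param in model.parameters():
        param.requires_grad_(False)

    # The image is the only tensor that receives gradient updates.
    image = torch.zeros(shape, requires_grad=True)
    optimizer = torch.optim.Adam([image], lr=lr)

    for _ in range(steps):
        optimizer.zero_grad()
        output = model(image)
        # Minimizing the negative target score maximizes the target output.
        loss = -output[0, target_class]
        loss.backward()
        optimizer.step()
        # Assumption: keep pixel values in the [0, 1] range.
        with torch.no_grad():
            image.clamp_(0.0, 1.0)

    return image.detach()


# Hypothetical stand-in model; in practice this would be the trained network.
torch.manual_seed(0)
toy_model = nn.Sequential(nn.Flatten(), nn.Linear(28 * 28, 10))
idealized = generate_idealized_input(toy_model, target_class=3)
```

The loss here is the raw score of the target class. If the network ends in a log-softmax layer, the same loop applies unchanged, since the target entry of the output is still the quantity being maximized.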

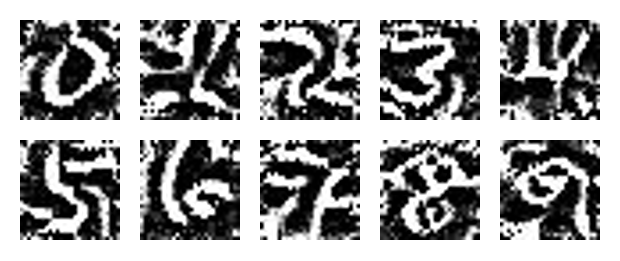

For MNIST, the result is one "idealized" input image per digit, 0 through 9, each being the input that the network responds to most strongly for that class.

Run `python mnist.py` to train the MNIST neural network. This saves the model weights as `mnist_cnn.pt`.

Afterwards, run `python generate_image.py` to loop over the 10 target classes and write the generated images to the `generated` folder.

If you want to learn more, check out my blog entry explaining this visualization technique for deep neural networks.