Raphtory is an ongoing project to maintain and analyse a temporal graph within a distributed environment. The most recent paper on Raphtory can be found here.

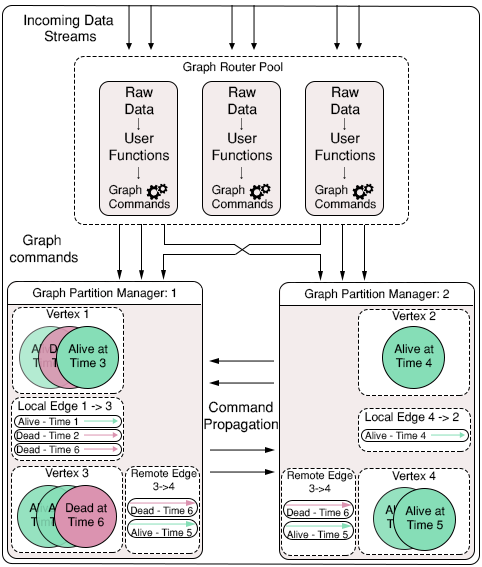

Raphtory is built around the actor model using the Akka Framework. There are two main Actor types, Routers and Partition Managers.

Routers take in raw data from a given source and convert it to Graph updates, which may consist of adding, removing or updating the properties of a Vertex or Edge. The Partition Manager in charge of the Vertex (or the source of an Edge) is then informed of the update and inserts it into the ordered history of the affected entities. If the Partition Manager is not in charge of all affected entities (such as an Edge which spans two Vertices stored in different partitions), it communicates with the other affected partitions to synchronize the update across the cluster.
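As a rough illustration of the routing step described above, the following Python sketch decides which partition owns an edge update and which other partition, if any, must be synchronized. The modulo placement rule, the `EdgeAdd` type and the `route` function are assumptions made for this example only; they are not taken from Raphtory's code base.

```python
from dataclasses import dataclass

NUM_PARTITIONS = 3  # hypothetical cluster size, for illustration only


def partition_for(vertex_id: int, num_partitions: int = NUM_PARTITIONS) -> int:
    """Assumed placement rule: vertex ID modulo the number of partitions."""
    return vertex_id % num_partitions


@dataclass(frozen=True)
class EdgeAdd:
    """Hypothetical graph update: add an edge src -> dst at a given time."""
    time: int
    src: int
    dst: int


def route(update: EdgeAdd, num_partitions: int = NUM_PARTITIONS) -> dict:
    """Return the owning partition and any partition it must sync with."""
    # The partition holding the source vertex is in charge of the edge.
    owner = partition_for(update.src, num_partitions)
    dst_owner = partition_for(update.dst, num_partitions)
    # Sync is only needed when the destination lives in another partition.
    must_sync_with = [] if dst_owner == owner else [dst_owner]
    return {"owner": owner, "must_sync_with": must_sync_with}


# Both vertices fall in the same partition: no cross-partition traffic.
local = route(EdgeAdd(time=1, src=3, dst=6))
# The edge spans two partitions: the owner must inform the other one.
split = route(EdgeAdd(time=2, src=4, dst=8))
print(local, split)
```

In this sketch the first edge is handled entirely by one partition, while the second requires the owning partition to notify the partition that stores the destination vertex.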

As Raphtory inserts updates into an ordered history, and no entities are actually deleted (just marked as deleted within their history), the order of execution does not matter. This means that addition, deletion and updating are all additive and cumulative. An overview of this can be seen in the diagram below:
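To make the order-independence concrete, here is a minimal Python sketch of a per-entity history in which deletions are stored as markers rather than removing anything. The `EntityHistory` class is hypothetical and assumes each update carries a distinct timestamp; it illustrates the idea, not Raphtory's actual data structure.

```python
import bisect
import itertools


class EntityHistory:
    """Hypothetical time-ordered history of one vertex or edge."""

    def __init__(self):
        self._points = []  # kept sorted as (time, alive) pairs

    def record(self, time: int, alive: bool) -> None:
        """Insert an add (alive=True) or delete marker (alive=False)."""
        point = (time, alive)
        idx = bisect.bisect_left(self._points, point)
        # Ignore exact duplicates so replayed updates change nothing.
        if idx == len(self._points) or self._points[idx] != point:
            self._points.insert(idx, point)

    def alive_at(self, time: int) -> bool:
        """State at `time`: the latest point with timestamp <= time."""
        idx = bisect.bisect_right(self._points, (time, True))
        return idx > 0 and self._points[idx - 1][1]

    def points(self):
        return list(self._points)


# Added at t=1, marked deleted at t=5, re-added at t=8.
updates = [(1, True), (5, False), (8, True)]

# Apply the same updates in every possible arrival order.
histories = []
for order in itertools.permutations(updates):
    h = EntityHistory()
    for time, alive in order:
        h.record(time, alive)
    histories.append(h.points())

all_orders_agree = all(p == histories[0] for p in histories)
print(all_orders_agree, h.alive_at(6), h.alive_at(9))
```

Because every update is placed by its timestamp rather than by its arrival order, all six arrival orders produce the same history, and a query at any point in time gives the same answer.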

To make Raphtory as easy to run as possible, it has been containerised with Docker. This means that you only have to install Docker to be able to run Raphtory on your machine.

First clone the Raphtory-Deployment project which contains all of the yml files required for running. Once this is cloned and you have docker installed, run:

docker swarm init

This will initialise a Docker swarm cluster on your machine, which will allow you to deploy via docker stack. Docker stack requires a Docker Compose file to specify which containers to run, how many of each type, which ports to open, and so on. Within the Raphtory-Deployment repository there are two main compose files: one for running on a cluster and one for running locally.

## Example workloads to pick from

Once Docker is set up, you must pick an example workload to test. There are three to choose from (available here): a 'random' graph where updates are generated at runtime; the GAB.AI graph (a graph built from the posts on the social network); and a 'coingraph' built by ingesting the transactions within the Bitcoin blockchain.

Each datasource comes with a Spout which generates/pulls the raw data, a Router which parses the data into updates, and example Live Analysis Managers which perform some basic processing. Note: Both GAB and Bitcoin workloads have example data baked into Raphtory's base image, meaning you can run these without having access to the GAB posts, or a Bitcoin full node established.

Raphtory uses reflection to decide which Spout, Router and Analysis Manager to create at run time. Therefore, to choose one of these examples you do not need to rebuild the Raphtory image; you only need to change the Docker environment file (.env) which docker stack requires when running. Example .env files are available here for each of the workloads. For the instructions below I shall be using the 'fake' bitcoin environment file. This has several config variables which aren't too important for testing; the main ones to focus on are the specified classes highlighted below:
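The idea of selecting a class by name at run time can be sketched in Python as follows. This is only an analogue of the JVM reflection Raphtory relies on: the `load_class` helper and the `SPOUT_CLASS` variable name are hypothetical, and a standard-library class stands in for a real Spout so that the example runs on its own.

```python
import importlib


def load_class(qualified_name: str):
    """Resolve a dotted 'package.module.ClassName' string to a class."""
    module_name, _, class_name = qualified_name.rpartition(".")
    module = importlib.import_module(module_name)
    return getattr(module, class_name)


# Hypothetical environment contents; in a deployment these values would
# come from the .env file, and the variable name here is an assumption.
env = {"SPOUT_CLASS": "collections.OrderedDict"}  # stand-in class

# No rebuild is needed to switch implementation: only the string changes.
spout_class = load_class(env["SPOUT_CLASS"])
spout = spout_class()
print(type(spout).__name__)
```

Changing the string in the environment swaps the implementation that gets instantiated, which is why switching workloads only requires a different .env file.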

The first thing to do is to copy the example environment file to .env and deploy the docker stack:

cp EnvExamples/bitcoin_read_dotenv.example .env

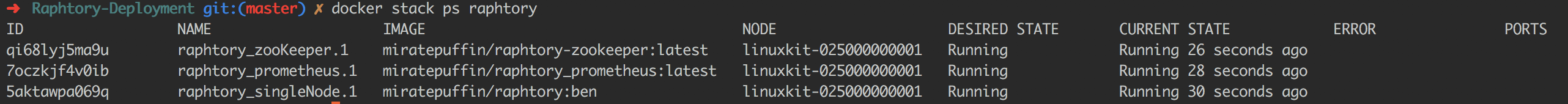

docker stack deploy --compose-file docker-compose-local.yml raphtory

If we look inside the compose file, we will see three 'services' which this should deploy: Prometheus, the monitoring tool used for Raphtory; Zookeeper, which containers can use to join the Akka network; and singleNode, which contains all of the Raphtory components. We can check if the cluster is running via:

docker stack ps raphtory

This will list all of the containers running within the stack and allow you to connect to their output stream to see what is going on inside. This should look like the following:

We can then view the output from Raphtory running within the single node via:

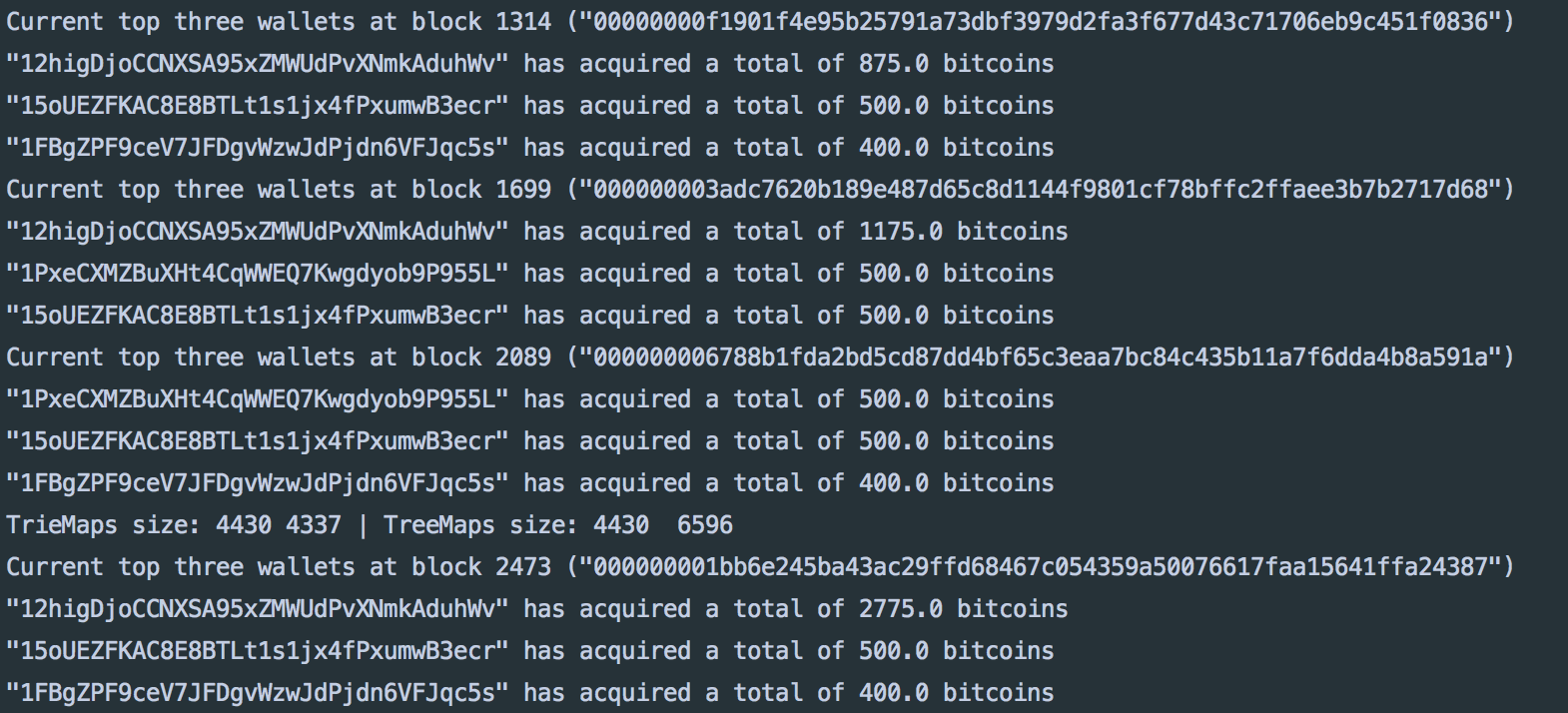

docker service logs raphtory_singleNode --follow

There should then be some initial debug logs, followed by the analysis output for the chosen workload. For example, below we can see the Bitcoin Analyser returning the top three wallets which have acquired the most bitcoins at the latest ingested block.

In addition to these logs, Prometheus is running at http://localhost:8888, providing additional metrics such as CPU/memory utilisation, number of vertices/edges and updates ingested.

Finally, when you are happy everything is working, the cluster can be removed by running:

docker stack remove raphtory

Deploying over a cluster is very similar to deploying locally: we again copy the env file and deploy via docker stack, but this time we use the docker-compose.yml file:

cp EnvExamples/bitcoin_read_dotenv.example .env

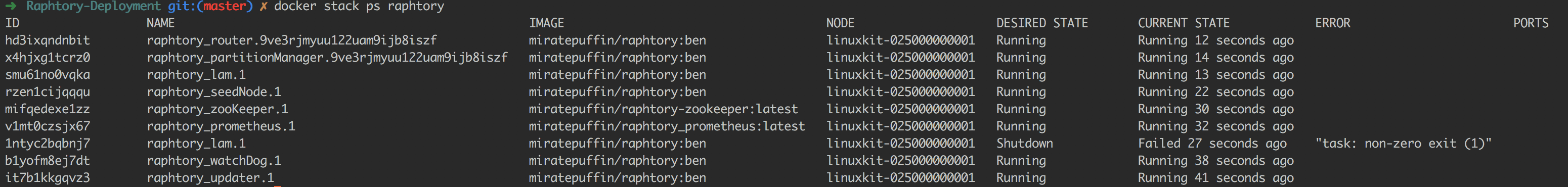

docker stack deploy --compose-file docker-compose.yml raphtory

When we look inside this file, it can be seen that there are a lot more services. This is because each of the Raphtory cluster components is now within its own container. It should be noted here that, due to the random order in which Docker starts up these containers, they can sometimes fail to connect to Zookeeper and crash (see the Live Analysis Manager below). This is completely fine: the container will simply be restarted and connect to the cluster.

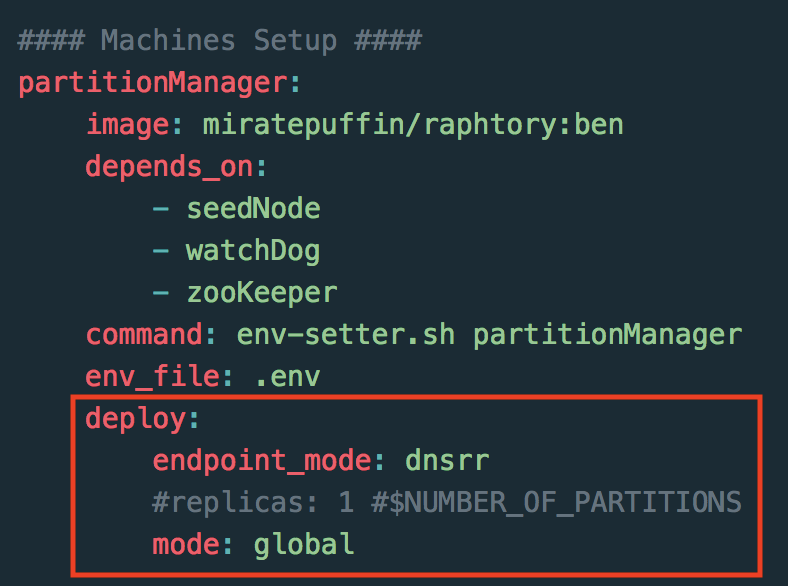

As you are now running in cluster mode, the number of Partition Managers and Routers within your cluster can be specified. This is set within the yml file under the 'deploy' section of each service. A nice way we have found to do this is to set the mode to global and then set a label constraint for the service. This way you get one Partition Manager on each chosen node within the swarm cluster.

In addition to the above, whilst Routers and Live Analysis Managers can leave and join the cluster at any time (as they are somewhat stateless), there has to be a set number of Partition Managers established at the start. This is set within the .env file under PARTITION_MIN and should match the number which will be created when the containers are deployed.
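A quick consistency check between PARTITION_MIN and the number of Partition Managers that will be deployed might look like the sketch below. It assumes the global-mode-plus-label-constraint setup described above, so that one Partition Manager starts on every labelled node. The node names, the `partition` label and both helper functions are hypothetical; only PARTITION_MIN comes from the .env file.

```python
def parse_env(text: str) -> dict:
    """Parse simple KEY=VALUE lines, skipping blanks and # comments."""
    env = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        env[key.strip()] = value.strip()
    return env


def expected_partitions(node_labels: dict, label: str) -> int:
    """With mode 'global' and a label constraint, one container is
    started on each node carrying the label."""
    return sum(1 for labels in node_labels.values() if label in labels)


# Hypothetical swarm: two nodes labelled for partitions, one for routers.
node_labels = {
    "node-a": {"partition"},
    "node-b": {"partition"},
    "node-c": {"router"},
}

# Inline stand-in for the contents of the .env file.
env = parse_env("# example values\nPARTITION_MIN=2\n")

configured = int(env["PARTITION_MIN"])
expected = expected_partitions(node_labels, "partition")
matches = configured == expected
print(configured, expected, matches)
```

If the two numbers differ, either the node labels or the PARTITION_MIN value should be adjusted before deploying the stack.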