This script fetches issues from a GitHub repository and saves them in Markdown format, with support for keyword and state filters. It was originally designed to help build ChatGPT bots that answer questions based on GitHub issues, by supplying those issues as training material.

An example of its application is the Robo Advisor bot, trained on ROS 2 documentation and issues. However, additional data sources may be required for comprehensive model training.

- Fetch issues from any GitHub repository.

- Filter issues by keywords.

- Select issues based on their state (open, closed, or all).

- Save all relevant issue information, including comments, into a single Markdown file.
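As a rough illustration of the filtering and export features listed above, here is a minimal sketch that works on plain dictionaries rather than live API objects. The field names (`number`, `title`, `state`, `body`, `comments`), the helper names, and the "match any keyword, case-insensitively" rule are all assumptions made for this example; the actual script may behave differently.

```python
from typing import Iterable, Mapping, Sequence


def matches_keywords(issue: Mapping, keywords: Sequence[str]) -> bool:
    """Return True if any keyword appears in the title or body (case-insensitive)."""
    if not keywords:
        return True  # no keyword filter requested
    text = f"{issue.get('title', '')}\n{issue.get('body') or ''}".lower()
    return any(kw.lower() in text for kw in keywords)


def issue_to_markdown(issue: Mapping) -> str:
    """Render one issue, including its comments, as a Markdown section."""
    lines = [
        f"## #{issue['number']}: {issue['title']}",
        "",
        f"State: {issue['state']}",
        "",
        issue.get("body") or "",
    ]
    for comment in issue.get("comments", []):
        lines += ["", f"### Comment by {comment['user']}", "", comment["body"]]
    return "\n".join(lines) + "\n"


def issues_to_markdown(issues: Iterable[Mapping], keywords: Sequence[str] = ()) -> str:
    """Join the Markdown of every issue that passes the keyword filter."""
    return "\n".join(
        issue_to_markdown(issue) for issue in issues if matches_keywords(issue, keywords)
    )
```

Keeping the filter and the renderer as pure functions makes them easy to check without touching the GitHub API.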

Before you run this script, you need to have Python installed on your machine. Additionally, you will need a GitHub Personal Access Token with permissions to access repositories.

- Clone this repository:

git clone https://github.com/leochien1110/github-issue-crawler.git

cd github-issue-crawler

- Install the required Python libraries:

pip install -r requirements.txt

💡 Without a GitHub access token, you will be limited to a lower rate limit for API requests. Generate a personal access token from your GitHub account:

Go to GitHub -> Settings -> Developer settings -> Personal access tokens -> Generate new token. Make sure to select the scopes or permissions you want to grant this token, such as `repo` for repository access. If you're unsure, select `public_repo`.

Copy the generated token and use it in the configuration step. Save this token securely, as you won't be able to see it again.

- Set your GitHub token as an environment variable:

export GITHUB_TOKEN=your_access_token

Or you can save this into ~/.bashrc for future use:

echo "export GITHUB_TOKEN=your_access_token" >> ~/.bashrc

source ~/.bashrc
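For reference, a script can pick the token up from the environment roughly as follows. This is a sketch under assumptions: the helper names are hypothetical, and the headers shown are the ones used when calling the GitHub REST API directly, which may not be how this script authenticates internally.

```python
import os
from typing import Mapping, Optional


def load_github_token(env: Mapping[str, str] = os.environ) -> Optional[str]:
    """Return the token from GITHUB_TOKEN, or None if it is unset or blank."""
    token = env.get("GITHUB_TOKEN", "").strip()
    return token or None


def auth_headers(token: Optional[str]) -> dict:
    """Build REST API headers; unauthenticated requests simply omit Authorization."""
    headers = {"Accept": "application/vnd.github+json"}
    if token:
        headers["Authorization"] = f"Bearer {token}"
    return headers
```

Returning `None` for a missing token lets the caller fall back to unauthenticated requests, which run under the lower rate limit mentioned above.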

Run the script using the following command:

python github_issue_crawler.py --repo [owner/repo] --state [open|closed|all] --keywords [keyword1 keyword2 ...] --output [output_file.md]

The arguments are as follows:

- `-r`, `--repo`: The repository to fetch issues from, in the format `owner/repo`. For example, `ros2/rclpy`.

- `-s`, `--state`: The state of the issues to fetch (open, closed, or all). Default is 'closed'. Using `all` is not recommended, since it might reach the GitHub API request limit.

- `-k`, `--keywords`: Optional. A list of keywords to filter the issues.

- `-o`, `--output`: Optional. The output file where the issues will be saved in Markdown format. By default, the collected document is saved under `notes/{owner}/{repo}_{keywords_}{timestamp}.md`.
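The arguments above map naturally onto `argparse`. The following is only a sketch of how such a command line could be declared; making `--repo` required, the `default_output_path` helper, and the timestamp format are assumptions for illustration, not details taken from the script.

```python
import argparse
from datetime import datetime
from pathlib import Path


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(description="Fetch GitHub issues into a Markdown file.")
    parser.add_argument("-r", "--repo", required=True, help="Repository in owner/repo format")
    parser.add_argument("-s", "--state", choices=["open", "closed", "all"], default="closed")
    parser.add_argument("-k", "--keywords", nargs="*", default=[], help="Keywords to filter issues")
    parser.add_argument("-o", "--output", default=None, help="Output Markdown file")
    return parser


def default_output_path(repo: str, keywords: list, now: datetime) -> Path:
    """Assumed layout: notes/{owner}/{repo}_{keywords_}{timestamp}.md"""
    owner, name = repo.split("/", 1)
    keyword_part = "".join(f"{kw}_" for kw in keywords)
    timestamp = now.strftime("%Y%m%d_%H%M%S")  # timestamp format is a guess
    return Path("notes") / owner / f"{name}_{keyword_part}{timestamp}.md"
```

With this sketch, `build_parser().parse_args(["-r", "ros2/rclpy", "-k", "bug"])` yields `state == "closed"` and `keywords == ["bug"]`.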

Take

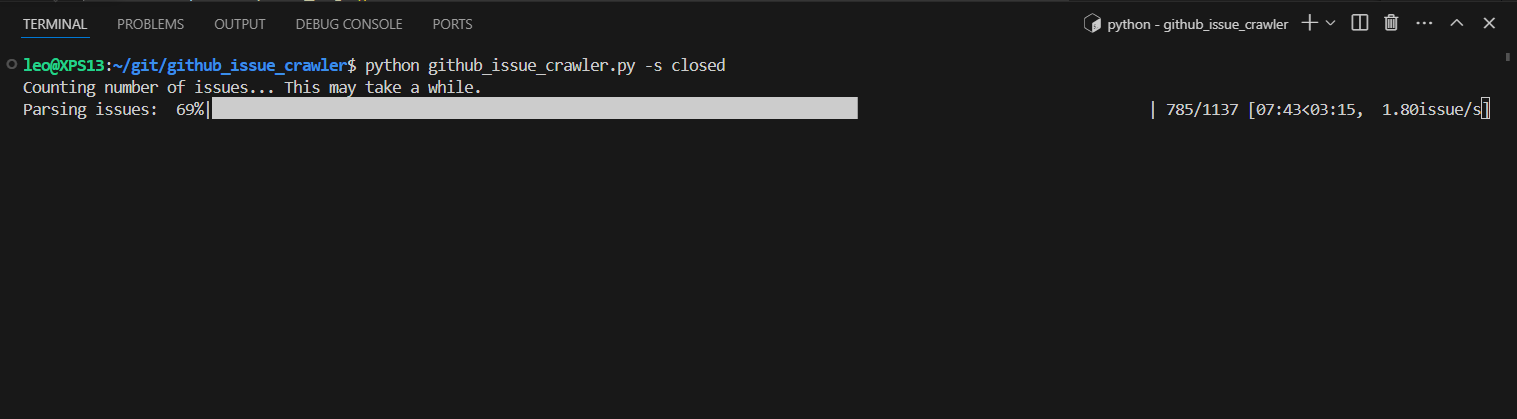

ros2/rclpyrepository as an example:python github_issue_crawler.py -r ros2/rclpy -s closed -k bug # or rclcpp python github_issue_crawler.py -r ros2/rclcpp -s closedThen you should see the output like this, the progress bar will show the fetching progress:

-

Then you can upload the generated markdown file to your ChatGPT bot for training. Enter the ChatGPT

There is another tool that helps you merge all the ROS documentation into a single file.

- Download the ROS documentation repo.

- Run `merge_rst.py` to merge all the `.rst` files into a single file:

python merge_rst.py <output_directory> <output_directory>
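To give a sense of what such a merge involves, here is a minimal sketch that concatenates every `.rst` file under a directory into one file. It is not the code of `merge_rst.py`; the function name, its arguments, and the `.. source:` comment written before each file are assumptions for illustration.

```python
from pathlib import Path


def merge_rst(source_dir: str, output_file: str) -> int:
    """Concatenate every .rst file under source_dir into output_file; return the file count."""
    source = Path(source_dir)
    out_path = Path(output_file).resolve()
    # Collect the inputs first, skipping the output file if it lives inside source_dir.
    files = sorted(p for p in source.rglob("*.rst") if p.resolve() != out_path)
    with open(output_file, "w", encoding="utf-8") as out:
        for path in files:
            # Label each chunk with its path relative to the source directory.
            out.write(f".. source: {path.relative_to(source).as_posix()}\n\n")
            out.write(path.read_text(encoding="utf-8").rstrip("\n") + "\n\n")
    return len(files)
```

Sorting the paths keeps the merged output stable between runs, which makes it easier to compare two versions of the merged file.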

These tools currently jam all the information into a single file, which may hit the token limit of ChatGPT. You may need to split the file into smaller pieces. This will be the TODO for the next version.
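Until splitting is built in, the generated file can be split by hand or with a small helper. The sketch below is one possible approach, not part of these tools: it assumes each issue starts at a `## ` heading (a guess about the output layout) and packs whole sections into chunks of at most `max_lines` lines, hard-splitting only sections that are themselves too long.

```python
from typing import List


def split_markdown(text: str, max_lines: int = 10_000, heading: str = "## ") -> List[str]:
    """Split text into chunks of at most max_lines lines, breaking at headings when possible."""
    if max_lines < 1:
        raise ValueError("max_lines must be at least 1")
    # Group lines into sections, each starting at a heading line.
    sections: List[List[str]] = []
    for line in text.splitlines(keepends=True):
        if line.startswith(heading) or not sections:
            sections.append([])
        sections[-1].append(line)
    # Greedily pack sections into chunks; hard-split any oversized section.
    chunks: List[List[str]] = []
    current: List[str] = []
    for section in sections:
        if current and len(current) + len(section) > max_lines:
            chunks.append(current)
            current = []
        while len(section) > max_lines:
            chunks.append(section[:max_lines])
            section = section[max_lines:]
        current = current + section
    if current:
        chunks.append(current)
    return ["".join(chunk) for chunk in chunks]
```

Joining the returned chunks reproduces the original text, so nothing is lost in the split.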

- GitHub Token Rate Limit

github.GithubException.RateLimitExceededException: 403 {'message': 'API rate limit exceeded for user ID xxx...

This is caused by GitHub's primary rate limit for authenticated users.

In short:

- A personal access token has a rate limit of 5,000 requests per hour.

- GitHub Enterprise Cloud organizations have a higher rate limit of 15,000 requests per hour.
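One way to cope with the limit is to wait until the quota resets instead of failing. The sketch below assumes you have access to the response headers of a GitHub REST API call; `X-RateLimit-Remaining` and `X-RateLimit-Reset` are standard GitHub headers, while the helper itself is hypothetical and not part of this script.

```python
import time
from typing import Mapping, Optional


def seconds_until_reset(headers: Mapping[str, str], now: Optional[float] = None) -> float:
    """Return how long to wait before the next request, or 0.0 if quota remains."""
    # Header lookups here are case-sensitive; pass a case-insensitive mapping if needed.
    remaining = int(headers.get("X-RateLimit-Remaining", "1"))
    if remaining > 0:
        return 0.0
    reset_epoch = int(headers.get("X-RateLimit-Reset", "0"))  # UTC epoch seconds
    now = time.time() if now is None else now
    return max(0.0, reset_epoch - now)
```

A caller could pass the result to `time.sleep` before retrying the request that hit the limit.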

- ChatGPT Knowledge Token Limit

ChatGPT has a limit of 2 million tokens for each knowledge file. A good rule of thumb is to keep the knowledge file under 10,000 lines or 5 MB (Markdown).

Contributions are what make the open source community such an amazing place to learn, inspire, and create. Any contributions you make are greatly appreciated.

- Fork the Project

- Create your Feature Branch (git checkout -b feature/AmazingFeature)

- Commit your Changes (git commit -m 'Add some AmazingFeature')

- Push to the Branch (git push origin feature/AmazingFeature)

- Open a Pull Request

⚠️ Don't forget to hide the information in `config.json` before committing your changes.

Distributed under the MIT License. See LICENSE for more information.

Contact [email protected]

Project Link: https://github.com/leochien1110/github-issue-crawler