A simple guide to compiling llama.cpp and llama-cpp-python with CLBlast for older-generation AMD GPUs (the ones that don't support ROCm, such as the RX 5500).

llama-cpp-python:

- Install `cmake` using pip:

```
pip install cmake
```

- Follow the regular instructions to install llama-cpp-python. Then uninstall the default llama-cpp-python versions that were installed:

```
pip uninstall -y llama-cpp-python llama-cpp-python-cuda
```
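To confirm the uninstall actually went through, a small check like the one below can help. It's a sketch that uses only the standard library's `importlib.metadata`; the helper name `installed_distributions` is made up for this guide, and the script should be run with the same Python interpreter (or virtual environment) that `pip` installs into.

```python
from importlib import metadata


def installed_distributions(names):
    """Return the subset of `names` that is installed in this Python environment."""
    found = []
    for name in names:
        try:
            metadata.distribution(name)
        except metadata.PackageNotFoundError:
            continue  # not installed, which is what we want after uninstalling
        found.append(name)
    return found


if __name__ == "__main__":
    leftovers = installed_distributions(["llama-cpp-python", "llama-cpp-python-cuda"])
    print("Still installed:", leftovers or "none")
```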

- Move the `OpenCL` folder to the root of the C drive (so it ends up at `C:\OpenCL`). Alternatively, edit the `CLBlastConfig-release.cmake` file to point to wherever you saved the `OpenCL` folder.
  - This folder was obtained from AMD's OCL SDK Light. The only difference is that the SDK installer creates an `OCL_ROOT` environment variable, but you can point to the folder directly and avoid dealing with .exe files.

- Here is the part you need to update in the `CLBlastConfig-release.cmake` file if you saved the `OpenCL` folder somewhere else:

```cmake
# Import target "clblast" for configuration "Release"
set_property(TARGET clblast APPEND PROPERTY IMPORTED_CONFIGURATIONS RELEASE)
set_target_properties(clblast PROPERTIES
  IMPORTED_IMPLIB_RELEASE "${_IMPORT_PREFIX}/lib/clblast.lib"
  # Change this path if your OpenCL folder is not C:\OpenCL
  IMPORTED_LINK_INTERFACE_LIBRARIES_RELEASE "C:/OpenCL/lib/OpenCL.lib"
  IMPORTED_LOCATION_RELEASE "${_IMPORT_PREFIX}/lib/clblast.dll"
  )
```

Edit `IMPORTED_LINK_INTERFACE_LIBRARIES_RELEASE` to point to where you put the `OpenCL` folder.
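If you'd rather not edit the file by hand, the following Python sketch rewrites that one path. It assumes the file contains a single quoted path right after `IMPORTED_LINK_INTERFACE_LIBRARIES_RELEASE`, as in the snippet above, and that `OpenCL.lib` sits in the `lib` subfolder of your `OpenCL` folder. The helper `point_to_opencl` and the `D:\tools\OpenCL` location are hypothetical examples. Back up the file before overwriting it.

```python
import re
from pathlib import Path

# Matches:  IMPORTED_LINK_INTERFACE_LIBRARIES_RELEASE "<any path>"
_LINK_LIB = re.compile(r'(IMPORTED_LINK_INTERFACE_LIBRARIES_RELEASE\s+")[^"]*(")')


def point_to_opencl(cmake_text, opencl_dir):
    """Return cmake_text with the OpenCL.lib path moved under opencl_dir."""
    # CMake accepts forward slashes on Windows, so normalise backslashes.
    lib = opencl_dir.replace("\\", "/").rstrip("/") + "/lib/OpenCL.lib"
    # A function replacement avoids re treating backslashes in `lib` as escapes.
    new_text, count = _LINK_LIB.subn(lambda m: m.group(1) + lib + m.group(2), cmake_text)
    if count == 0:
        raise ValueError("IMPORTED_LINK_INTERFACE_LIBRARIES_RELEASE not found")
    return new_text


if __name__ == "__main__":
    # Hypothetical locations: adjust both paths to your own folders.
    config = Path(r"C:\CLBlast\lib\cmake\CLBlast\CLBlastConfig-release.cmake")
    config.write_text(point_to_opencl(config.read_text(), r"D:\tools\OpenCL"))
```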

- Reinstall llama-cpp-python using the following flags. The location `C:\CLBlast\lib\cmake\CLBlast` should be inside the `CLBlast` folder you downloaded from this repo (you can put it anywhere; just make sure you pass that location to the `-DCLBlast_DIR` flag).

For PowerShell, set the environment variables first:

```powershell
$env:CMAKE_ARGS="-DLLAMA_CLBLAST=on -DCLBlast_DIR=C:\CLBlast\lib\cmake\CLBlast"
$env:FORCE_CMAKE=1
pip install llama-cpp-python --no-cache-dir
```

If you are installing through cmd, set the variables with `set` instead:

```bat
set CMAKE_ARGS=-DLLAMA_CLBLAST=on -DCLBlast_DIR=C:\CLBlast\lib\cmake\CLBlast
set FORCE_CMAKE=1
pip install llama-cpp-python --no-cache-dir
```
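Because PowerShell and cmd set environment variables differently, another option is to let Python pass them to `pip` directly. This is a sketch, not part of llama-cpp-python itself: the helper `clblast_build_env` is hypothetical, and it simply sets the same `CMAKE_ARGS` and `FORCE_CMAKE` values shown above before calling `python -m pip` with the current interpreter.

```python
import os
import subprocess
import sys


def clblast_build_env(clblast_dir, base=None):
    """Copy of the environment with the CMAKE_ARGS / FORCE_CMAKE values from this guide."""
    env = dict(os.environ if base is None else base)
    env["CMAKE_ARGS"] = f"-DLLAMA_CLBLAST=on -DCLBlast_DIR={clblast_dir}"
    env["FORCE_CMAKE"] = "1"
    return env


if __name__ == "__main__":
    env = clblast_build_env(r"C:\CLBlast\lib\cmake\CLBlast")
    # Same as `pip install llama-cpp-python --no-cache-dir`, using this interpreter's pip.
    subprocess.run(
        [sys.executable, "-m", "pip", "install", "llama-cpp-python", "--no-cache-dir"],
        env=env,
        check=True,
    )
```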

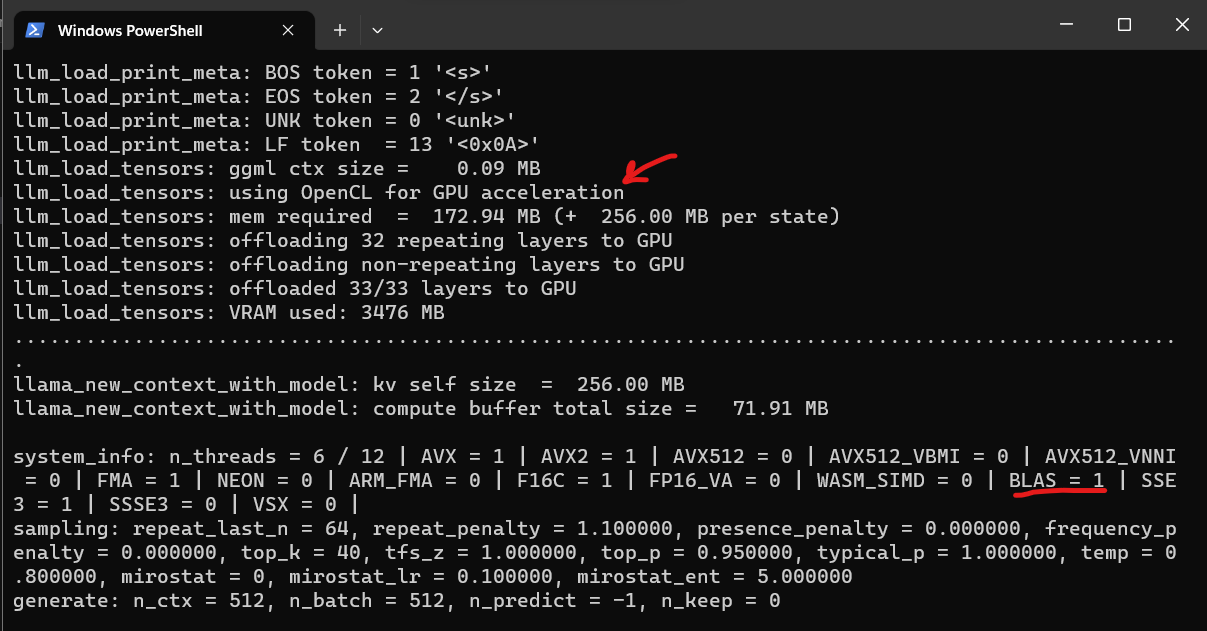

All done, fire it up and you should see something like this when you load a model using llama-cpp-python:

llama.cpp:

- Install `cmake` using pip:

```
pip install cmake
```

- Clone the `llama.cpp` repo:

```
git clone https://github.com/ggerganov/llama.cpp
cd llama.cpp
```

- Create a build folder and run cmake with the following flags:
  - `-DLLAMA_CLBLAST=on` to enable CLBlast
  - `-DCLBlast_DIR=C:\CLBlast\lib\cmake\CLBlast` to point to the `CLBlast` folder you downloaded from this repo (you can put it anywhere; just make sure you pass it to the `-DCLBlast_DIR` flag)

```
mkdir build
cd build
cmake .. -DLLAMA_CLBLAST=on -DCLBlast_DIR=C:/CLBlast/lib/cmake/CLBlast
```
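CMake expects `CLBlast_DIR` to be the folder that directly contains the package's config file (`CLBlastConfig.cmake` or `clblast-config.cmake`), which is why the flag points at `lib\cmake\CLBlast` rather than the top-level `CLBlast` folder. Assuming the repo's `lib\cmake\CLBlast` folder follows that usual CMake layout, here is a quick sketch to check a candidate folder before running cmake; `has_cmake_config` is a hypothetical helper.

```python
from pathlib import Path


def has_cmake_config(package_dir, package="CLBlast"):
    """True if package_dir holds a config file that find_package(<package>) can load."""
    folder = Path(package_dir)
    # Config mode looks for <Name>Config.cmake or <lowercase-name>-config.cmake.
    candidates = (f"{package}Config.cmake", f"{package.lower()}-config.cmake")
    return any((folder / name).is_file() for name in candidates)


if __name__ == "__main__":
    for candidate in (r"C:\CLBlast", r"C:\CLBlast\lib\cmake\CLBlast"):
        print(candidate, "->", has_cmake_config(candidate))
```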

- Build the project:

```
cmake --build . --config Release
```

- Add the folder containing `clblast.dll` to your PATH. Alternatively, just copy `clblast.dll` to the `Release` folder where your llama.cpp executables are.
  - You can find `clblast.dll` in `C:\CLBlast\lib` in this repo.
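Copying the DLL can also be scripted. A minimal sketch with `shutil`, assuming the default `C:\CLBlast\lib` location from this guide and that it is run from the `llama.cpp\build` folder (so `bin\Release` resolves); `copy_dll` is a hypothetical helper.

```python
import shutil
from pathlib import Path


def copy_dll(dll_dir, release_dir, name="clblast.dll"):
    """Copy `name` from dll_dir into release_dir and return the destination path."""
    source = Path(dll_dir) / name
    if not source.is_file():
        raise FileNotFoundError(source)
    destination = Path(release_dir) / name
    shutil.copy2(source, destination)  # copy2 also preserves timestamps
    return destination


if __name__ == "__main__":
    print(copy_dll(r"C:\CLBlast\lib", r"bin\Release"))
```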


- Run a model (PowerShell):

```powershell
cd bin/Release
.\main.exe -m 'C:\YOUR-MODELS-FOLDER\codellama-7b-instruct.Q5_K_S.gguf' -ngl 40 -t 6 -p 'write a python function to delete all folders that are bigger than 100 mb on current directory'
```

where `-m` is the path to the model, `-ngl` is the number of layers to offload to the GPU, `-t` is the number of threads, and `.\main.exe` is the executable generated by cmake.
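If you want to launch the same command from Python (for example, to try different `-ngl` values), passing the arguments as a list avoids quoting differences between PowerShell and cmd. The sketch below only uses the flags from the example command; `main_command` is a hypothetical helper, and its defaults simply mirror that example.

```python
import subprocess


def main_command(model, gpu_layers=40, threads=6, prompt="", exe=r".\main.exe"):
    """Argument list for llama.cpp's main executable with the -m/-ngl/-t/-p flags."""
    return [exe, "-m", model, "-ngl", str(gpu_layers), "-t", str(threads), "-p", prompt]


if __name__ == "__main__":
    cmd = main_command(
        r"C:\YOUR-MODELS-FOLDER\codellama-7b-instruct.Q5_K_S.gguf",
        prompt="write a python function to delete all folders that are bigger "
        "than 100 mb on current directory",
    )
    subprocess.run(cmd, check=True)  # run from build\bin\Release
```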

Error:

```
The code execution cannot proceed because clblast.dll was not found
```

Solution: Put `clblast.dll` in the same folder as the executables. You can find `clblast.dll` in `C:\CLBlast\lib` in this repo. Alternatively, add the folder containing `clblast.dll` to your PATH.
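To check which of those two places actually has the DLL, here's a simplified sketch. Windows searches more locations than this (system folders, for instance), so treat a `None` result as "not in the executable folder or on PATH" rather than "not found anywhere". `find_dll` and the example Release path are hypothetical.

```python
import os
from pathlib import Path


def find_dll(exe_dir, name="clblast.dll", path_value=None):
    """Return the first folder (executable folder, then PATH entries) holding `name`, else None."""
    path_value = os.environ.get("PATH", "") if path_value is None else path_value
    folders = [exe_dir] + [p for p in path_value.split(os.pathsep) if p]
    for folder in folders:
        if (Path(folder) / name).is_file():
            return Path(folder)
    return None


if __name__ == "__main__":
    hit = find_dll(r"C:\path\to\llama.cpp\build\bin\Release")  # hypothetical location
    print(hit or "clblast.dll is not in the executable folder or on PATH")
```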

Error:

```
CMake Error in CMakeLists.txt:
  Imported target "clblast" includes non-existent path
    "C:/OpenCL/include"
```

Solution: Edit the `CLBlastConfig-release.cmake` file to point to where you saved the `OpenCL` folder if it is different from `C:\OpenCL`.
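Paths like `C:/OpenCL/include` are hard-coded in the CLBlast CMake config files, so a quick scan for quoted absolute paths that don't exist can show what to fix. A rough sketch, assuming the config files live in `C:\CLBlast\lib\cmake\CLBlast`; the `missing_paths` helper and its regular expression are hypothetical and only catch simple quoted paths (they skip `${...}` expressions and don't split `;`-separated lists).

```python
import os
import re
from pathlib import Path

# Quoted absolute paths such as "C:/OpenCL/include" or "/opt/x"; strings that
# start with ${...} (for example ${_IMPORT_PREFIX}) do not match.
_ABS_PATH = re.compile(r'"((?:[A-Za-z]:)?/[^"]*)"')


def missing_paths(cmake_text):
    """Quoted absolute paths in a CMake config file that do not exist on disk."""
    return [p for p in _ABS_PATH.findall(cmake_text) if not os.path.exists(p)]


if __name__ == "__main__":
    folder = Path(r"C:\CLBlast\lib\cmake\CLBlast")
    for config in sorted(folder.glob("CLBlast*.cmake")):
        for path in missing_paths(config.read_text()):
            print(f"{config.name}: missing {path}")
```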