This repository supports the development of the ground robotics challenge from RASC.

Keywords: Challenge, Ground-robotics, Turtlebot3

Sharpen your spear!

Before starting development, we suggest that you work through the following tutorials.

Navigation skills and knowledge are very important for a researcher in the field of robotics. Given the growth of mobile robotics applied to the theme "robots in terrestrial environment", it is necessary to develop mechanisms that consolidate this knowledge in a practical way. Concepts such as SLAM, state machines, computer vision and localization recur frequently in this context, so the Heavy Hull challenge proposes the systematic application of these concepts in order to strengthen future developments.

The objective is to carry out the mission while meeting the prerequisites.

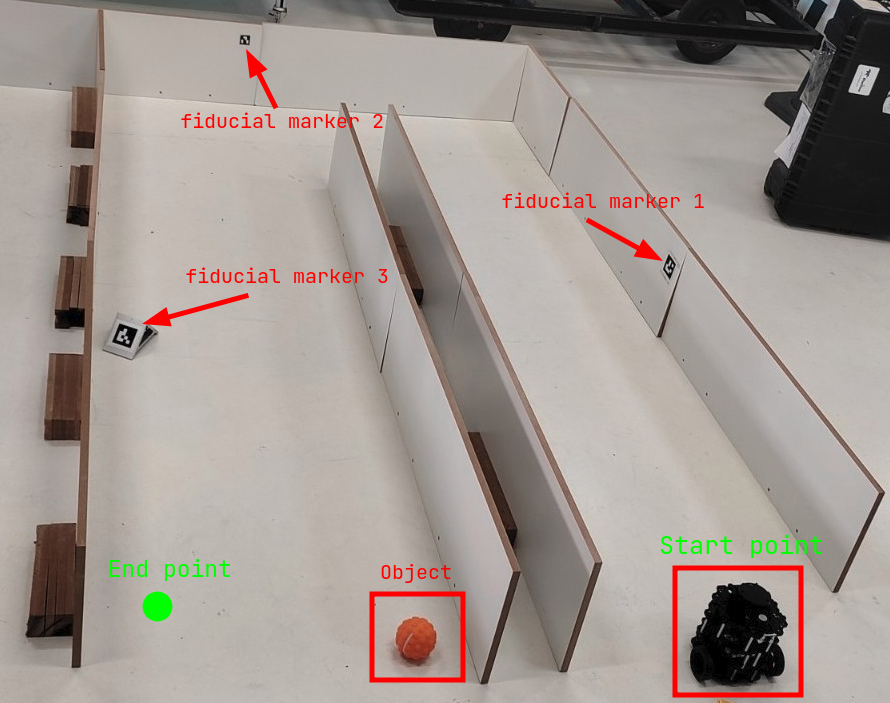

The Turtlebot 3 must traverse a delimited environment, starting at the start point highlighted in the example environment and positioned with its camera facing away from the entrance. The mission environment resembles a maze, composed of two corridors with three previously placed fiducial markers. The robot must traverse the entire environment and reach the end point, where there will be an object that must be identified.

The object to be identified is an orange ball.

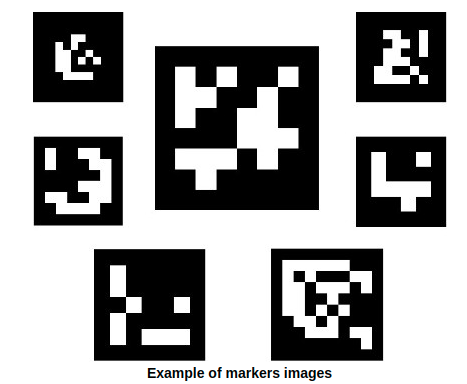
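One common way to recognize an orange object is to threshold pixel colors in HSV space. The following is a minimal sketch of that idea using only the Python standard library. The hue, saturation and value bounds, and the function names `is_orange` and `orange_fraction`, are assumptions made for illustration and are not part of this repository; they would need tuning against images from the real camera.

```python
import colorsys

# Hypothetical HSV bounds for "orange"; tune them against real camera images.
HUE_MIN, HUE_MAX = 10 / 360, 40 / 360  # hue as a fraction of the color wheel
SAT_MIN, VAL_MIN = 0.5, 0.4            # reject washed-out and dark pixels


def is_orange(r, g, b):
    """Return True if an 8-bit RGB pixel falls inside the assumed orange range."""
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    return HUE_MIN <= h <= HUE_MAX and s >= SAT_MIN and v >= VAL_MIN


def orange_fraction(pixels):
    """Fraction of pixels classified as orange in an iterable of (r, g, b) tuples."""
    pixels = list(pixels)
    if not pixels:
        return 0.0
    return sum(is_orange(*p) for p in pixels) / len(pixels)
```

A detector could, for example, report the ball as found when `orange_fraction` over an image region exceeds some threshold; that threshold is also something to calibrate experimentally.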

The markers used must be of the ArUco type.
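Fiducial markers help localization because each marker sits at a known pose in the map. If the robot measures where a marker is relative to itself, it can recover its own pose in the map. The sketch below shows this in 2D with plain pose composition. It assumes the marker's map pose is known in advance and that some detector (not shown) supplies the marker pose in the robot frame; the function names are hypothetical.

```python
import math


def wrap(angle):
    """Wrap an angle in radians to the interval [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def compose(a, b):
    """Compose two 2D poses (x, y, theta): express pose b, given in frame a, in a's parent frame."""
    ax, ay, at = a
    bx, by, bt = b
    return (ax + math.cos(at) * bx - math.sin(at) * by,
            ay + math.sin(at) * bx + math.cos(at) * by,
            wrap(at + bt))


def invert(p):
    """Invert a 2D pose (x, y, theta)."""
    x, y, t = p
    c, s = math.cos(t), math.sin(t)
    return (-(c * x + s * y), s * x - c * y, wrap(-t))


def robot_pose_from_marker(marker_in_map, marker_in_robot):
    """Robot pose in the map, from a known marker pose and its observation."""
    return compose(marker_in_map, invert(marker_in_robot))
```

For instance, if a marker is at `(2.0, 1.0, 0.0)` in the map and is observed 1 m straight ahead of the robot with the same orientation, the robot is at approximately `(1.0, 1.0, 0.0)`.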

- The challenge must be performed using ROS2

- For autonomous navigation, a state machine must be used

- The simulation and real environment must be built based on the example environment

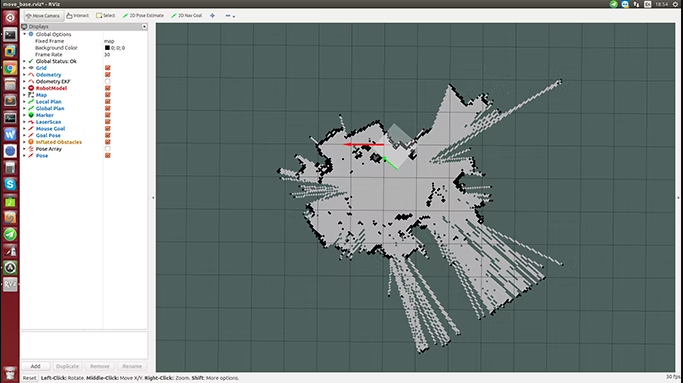

- The Turtlebot 3 must build a map of the environment while executing the mission

- The Turtlebot 3 must use three fiducial markers for localization
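To illustrate the state-machine requirement above, here is a minimal sketch of a mission state machine driven by a transition table. The state names and event strings are hypothetical and chosen only to mirror the mission description (start, traverse the corridors, identify the object); a real implementation would raise these events from navigation and vision nodes.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()         # waiting at the start point
    NAVIGATING = auto()   # traversing the corridors while mapping
    IDENTIFYING = auto()  # at the end point, looking for the object
    DONE = auto()         # mission complete


# Hypothetical event names; each (state, event) pair maps to the next state.
TRANSITIONS = {
    (State.IDLE, "start"): State.NAVIGATING,
    (State.NAVIGATING, "end_point_reached"): State.IDENTIFYING,
    (State.IDENTIFYING, "object_identified"): State.DONE,
}


def step(state, event):
    """Return the next state; events not valid in this state leave it unchanged."""
    return TRANSITIONS.get((state, event), state)


def run(events, state=State.IDLE):
    """Feed a sequence of events through the machine and return the final state."""
    for event in events:
        state = step(state, event)
    return state
```

Keeping the transitions in a table makes it easy to see which events are legal in each state, and to add states (for example, a recovery state) without touching the stepping logic.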

- Anderson Lima: [email protected]

- Marco Reis: [email protected]

For more code, visit BIR - BrazilianInstituteofRobotics. For more information, visit RASC.

1. Install ROS Noetic.

2. Create a workspace, clone this repository and build the workspace.

Remote PC

$ roscore

$ export TURTLEBOT3_MODEL=burger

$ roslaunch turtlebot3_slam turtlebot3_slam.launch

$ export TURTLEBOT3_MODEL=burger

$ roslaunch turtlebot3_teleop turtlebot3_teleop_key.launch

$ rosrun map_server map_saver -f ~/map

$ export TURTLEBOT3_MODEL=burger

$ roslaunch turtlebot3_navigation turtlebot3_navigation.launch map_file:=$HOME/map.yaml

$ roslaunch turtlebot3_autorace_camera intrinsic_camera_calibration.launch

$ roslaunch turtlebot3_autorace_camera extrinsic_camera_calibration.launch

$ roslaunch turtlebot3_autorace_detect detect_sign.launch mission:=SELECT_MISSION

$ rqt_image_view

TurtleBot

$ ssh [email protected]

$ export TURTLEBOT3_MODEL=burger

$ roslaunch turtlebot3_bringup turtlebot3_robot.launch

$ roslaunch turtlebot3_autorace_camera raspberry_pi_camera_publish.launch

3. Initialize the simulation

$ source /ardrone_ws/devel/setup.bash

$ roslaunch ardrone_challenge challenge.launch

4. Initialize the node

$ source /ardrone_ws/devel/setup.bash

$ rosrun ardrone_challenge drone_move.py

5. To compare the real (ground-truth) position of the drone with the position reported by the node, echo the ground-truth topic:

$ rostopic echo /ardrone/gt_pose

The node positions are printed while the node is running.
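Once both positions are available, the comparison can be reduced to a single number. The sketch below computes the Euclidean distance between a ground-truth position and the position printed by the node. It assumes both are given as `(x, y, z)` tuples in the same frame and units; the function name is hypothetical, and the values would have to be copied or parsed from the two outputs.

```python
import math


def position_error(ground_truth, estimate):
    """Euclidean distance between two (x, y, z) positions given in the same units."""
    return math.dist(ground_truth, estimate)
```

For example, `position_error((0.0, 0.0, 0.0), (3.0, 4.0, 0.0))` returns `5.0`.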