This project implements a deep learning approach to generating music in its image form, the piano roll. It was part of my diploma thesis, defended in January 2019.

LAST UPDATE: new article "Use of artificial intelligence for generating musical contents" (in Polish)

Listen to the AI-generated music on my SoundCloud

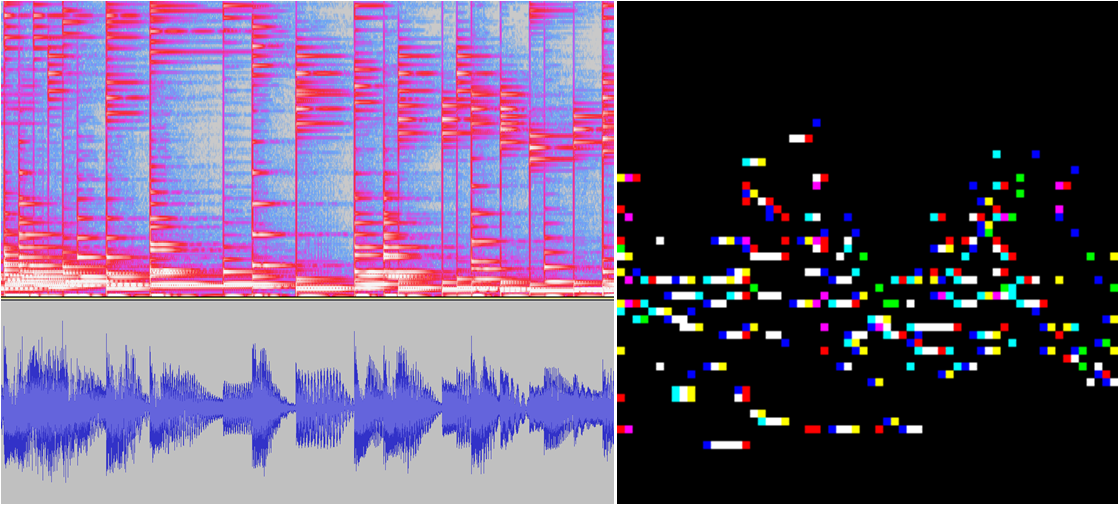

I decided to use the piano roll format because it carries less redundant data than a waveform or a spectrogram.
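As a rough illustration of that difference, the sketch below counts how many values each representation needs for one second of music. The sample rate, STFT settings and 88-key range are assumptions chosen for the comparison, not values taken from this project; only the 30 ms step comes from the preprocessing described later.

```python
# Rough size comparison for one second of music.
# All constants except STEP_MS are assumptions made for this illustration.
SECONDS = 1.0
SAMPLE_RATE = 44100      # assumed CD-quality mono audio
N_FFT, HOP = 1024, 512   # assumed STFT window and hop size
STEP_MS = 30             # quantization step used for the piano roll
N_KEYS = 88              # assumed piano range

n_samples = int(SECONDS * SAMPLE_RATE)

# Waveform: one value per audio sample.
waveform_values = n_samples

# Spectrogram: (frames) x (frequency bins) for a centered STFT.
spectrogram_values = (1 + n_samples // HOP) * (N_FFT // 2 + 1)

# Piano roll: (time steps) x (keys).
piano_roll_values = int(SECONDS * 1000 / STEP_MS) * N_KEYS

print(waveform_values, spectrogram_values, piano_roll_values)
```

Under these assumptions the piano roll needs roughly fifteen times fewer values per second than the waveform, and most of its cells are zero.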

The piano roll images were created from two MIDI datasets:

| Name | Description | Data type | Data size |
|---|---|---|---|
| Doug McKenzie | Jazz piano solo | MIDI | ~300 pieces, 20 h |
| MAESTRO | Recordings from a classical piano competition | WAV & MIDI | ~2500 pieces, 170 h |

Using the scripts in MidiScripts, I transformed the raw MIDI files into piano roll images in four steps:

- Removing unnecessary markers from the MIDI files using mtxClearScript.py
- Quantizing MIDI events to a 30 ms grid using mtxQuantizeScript.py
- Merging all MIDI files into one big pixel matrix with mtxMergeScript.py
- Dividing and converting that matrix into the training image set with mtxCompressScript.py
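The quantize, merge and divide steps can be sketched as follows. This is not the code of the scripts above, only a minimal illustration assuming that notes are already available as `(start_ms, end_ms, pitch, velocity)` tuples, that each image row is one MIDI pitch, and that each column is one 30 ms step. `IMAGE_WIDTH`, `N_PITCHES` and all function names are hypothetical.

```python
STEP_MS = 30       # quantization grid from the list above
N_PITCHES = 128    # assumption: one row per MIDI note number
IMAGE_WIDTH = 4    # hypothetical image width in time steps (tiny, for the demo)


def quantize(ms, step_ms=STEP_MS):
    """Snap a time in milliseconds to the nearest grid step (half rounds up)."""
    return int(ms / step_ms + 0.5)


def to_piano_roll(notes, n_pitches=N_PITCHES, step_ms=STEP_MS):
    """Rasterize (start_ms, end_ms, pitch, velocity) notes into a pitch x time matrix."""
    spans = []
    for start_ms, end_ms, pitch, velocity in notes:
        first = quantize(start_ms, step_ms)
        last = max(quantize(end_ms, step_ms), first + 1)  # keep at least one column
        spans.append((first, last, pitch, velocity))
    n_cols = max((last for _, last, _, _ in spans), default=0)
    roll = [[0] * n_cols for _ in range(n_pitches)]
    for first, last, pitch, velocity in spans:
        for col in range(first, last):
            roll[pitch][col] = velocity
    return roll


def merge_rolls(rolls):
    """Concatenate several piano rolls along the time axis."""
    return [[v for roll in rolls for v in roll[p]] for p in range(len(rolls[0]))]


def split_columns(roll, width):
    """Cut the matrix into fixed-width images, dropping an incomplete tail."""
    n_cols = len(roll[0]) if roll else 0
    return [[row[i:i + width] for row in roll]
            for i in range(0, n_cols - width + 1, width)]


notes = [(0, 95, 60, 80), (100, 250, 64, 100)]
roll = to_piano_roll(notes)
images = split_columns(merge_rolls([roll]), IMAGE_WIDTH)
print(len(images), images[0][60], images[1][64])
```

Storing the velocity as the pixel value is one possible choice; a binary on/off roll would work with the same code by writing `1` instead.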

```
MFile 1 2 384
MTrk
0 Tempo 500000
0 TimeSig 4/4 24 8
1 Meta TrkEnd
TrkEnd
MTrk
0 PrCh ch=1 p=0
806 On ch=1 n=42 v=71
909 Par ch=1 c=64 v=37
924 Par ch=1 c=64 v=50
939 Par ch=1 c=64 v=58
955 Par ch=1 c=64 v=62
970 Par ch=1 c=64 v=65
986 Par ch=1 c=64 v=68
...
```
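A text dump like the one above can be turned into note events with a few lines of Python. The sketch below is not one of the project scripts. It assumes that the first number on each line is an absolute time in ticks, that the last number of the `MFile` header is the number of ticks per quarter note, and that the tempo stays constant. The `Off` line in the sample is made up so that the note has an end.

```python
def parse_mtx(text):
    """Return (division, tempo_us, notes); notes are (start_tick, end_tick, pitch, velocity)."""
    division, tempo_us = 480, 500000  # fallbacks, overwritten by the MFile and Tempo lines
    open_notes, notes = {}, []
    for line in text.splitlines():
        parts = line.split()
        if not parts:
            continue
        if parts[0] == "MFile":
            division = int(parts[3])  # ticks per quarter note
            continue
        if len(parts) < 2 or not parts[0].isdigit():
            continue  # MTrk, TrkEnd, "..." and similar lines
        tick, kind = int(parts[0]), parts[1]
        fields = dict(p.split("=", 1) for p in parts[2:] if "=" in p)
        if kind == "Tempo":
            tempo_us = int(parts[2])  # microseconds per quarter note
        elif kind in ("On", "Off"):
            pitch, velocity = int(fields["n"]), int(fields["v"])
            if kind == "On" and velocity > 0:
                open_notes[pitch] = (tick, velocity)  # channel is ignored in this sketch
            elif pitch in open_notes:  # "Off", or "On" with zero velocity
                start, start_velocity = open_notes.pop(pitch)
                notes.append((start, tick, pitch, start_velocity))
    return division, tempo_us, notes


def ticks_to_ms(tick, division, tempo_us):
    """Convert ticks to milliseconds for a constant tempo."""
    return tick * tempo_us / division / 1000.0


sample = """MFile 1 2 384
MTrk
0 Tempo 500000
0 TimeSig 4/4 24 8
1 Meta TrkEnd
TrkEnd
MTrk
0 PrCh ch=1 p=0
806 On ch=1 n=42 v=71
909 Par ch=1 c=64 v=37
1190 Off ch=1 n=42 v=0
TrkEnd
"""

division, tempo_us, notes = parse_mtx(sample)
# Snap each note boundary to the 30 ms grid.
steps = [(int(ticks_to_ms(start, division, tempo_us) / 30 + 0.5),
          int(ticks_to_ms(end, division, tempo_us) / 30 + 0.5),
          pitch, velocity)
         for start, end, pitch, velocity in notes]
print(steps)
```

With a tempo of 500000 µs per quarter note and 384 ticks per quarter note, tick 806 falls at about 1049 ms, which is step 35 on the 30 ms grid.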

I decided to use the DCGAN architecture described and implemented here
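To show how a DCGAN generator grows a latent vector into an image, the sketch below only computes the feature map sizes, layer by layer. The layer settings follow the widely used 64×64 DCGAN configuration (kernel 4, stride 2, padding 1) and a single output channel for a grayscale piano roll. Both are assumptions and may differ from the network used in this project.

```python
def conv_transpose_out(size, kernel, stride, padding):
    """Output size of a transposed convolution (no dilation, no output padding)."""
    return (size - 1) * stride - 2 * padding + kernel


def conv_out(size, kernel, stride, padding):
    """Output size of a regular strided convolution (no dilation)."""
    return (size + 2 * padding - kernel) // stride + 1


# (kernel, stride, padding, out_channels) per layer; assumed values.
GENERATOR_LAYERS = [
    (4, 1, 0, 512),  # latent vector (1x1) -> 4x4
    (4, 2, 1, 256),
    (4, 2, 1, 128),
    (4, 2, 1, 64),
    (4, 2, 1, 1),    # one channel: grayscale piano roll (assumption)
]
DISCRIMINATOR_LAYERS = [
    (4, 2, 1, 64),
    (4, 2, 1, 128),
    (4, 2, 1, 256),
    (4, 2, 1, 512),
    (4, 1, 0, 1),    # 4x4 -> single real/fake score
]


def trace_shapes(layers, size, step):
    """Apply the size formula `step` layer by layer and collect (channels, h, w)."""
    shapes = []
    for kernel, stride, padding, channels in layers:
        size = step(size, kernel, stride, padding)
        shapes.append((channels, size, size))
    return shapes


generator_shapes = trace_shapes(GENERATOR_LAYERS, 1, conv_transpose_out)
discriminator_shapes = trace_shapes(DISCRIMINATOR_LAYERS, 64, conv_out)
print(generator_shapes)
print(discriminator_shapes)
```

The generator doubles the spatial size at every stride-2 layer (4, 8, 16, 32, 64), and the discriminator mirrors it back down to a single score.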

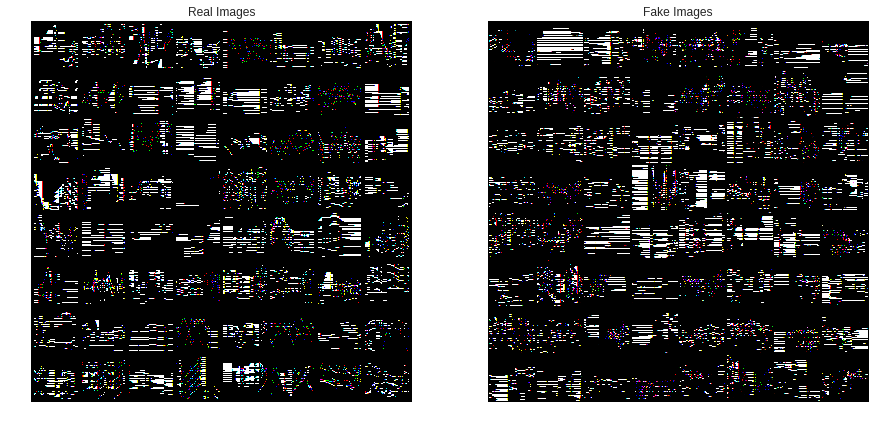

For training I used Google Colaboratory with its amazing Tesla K80, and my own machine with an NVIDIA GeForce GTX 1050 Ti, which performed really well. As a result of training I received a batch of generated images, hard to distinguish from the training images, which I then had to transform back to MIDI form with imagesDecodeScript.py
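The decoding direction can be sketched as follows. This is not the code of imagesDecodeScript.py, only one possible approach: it assumes that each image row is a pitch, each column is a 30 ms step, and pixel values range from 0 to 255. The threshold value and the function name are hypothetical.

```python
STEP_MS = 30     # one image column per 30 ms, as in the preprocessing
THRESHOLD = 64   # hypothetical cut-off between "silence" and "note on"


def decode_roll(image, step_ms=STEP_MS, threshold=THRESHOLD):
    """Turn a pitch x time pixel matrix into (start_ms, end_ms, pitch, velocity) notes."""
    notes = []
    for pitch, row in enumerate(image):
        start = None
        # The trailing 0 is a sentinel that closes a note reaching the last column.
        for col, value in enumerate(list(row) + [0]):
            active = value >= threshold
            if active and start is None:
                start = col
            elif not active and start is not None:
                brightest = max(row[start:col])
                velocity = max(1, brightest * 127 // 255)  # map 0..255 to MIDI 1..127
                notes.append((start * step_ms, col * step_ms, pitch, velocity))
                start = None
    notes.sort()
    return notes


image = [
    [0, 0, 0, 0, 0, 0],
    [0, 200, 210, 30, 0, 255],
    [255, 255, 0, 0, 0, 0],
]
decoded = decode_roll(image)
print(decoded)
```

Thresholding matters here because GAN output is rarely exactly zero in silent regions; without it, faint noise pixels would turn into many very quiet notes.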

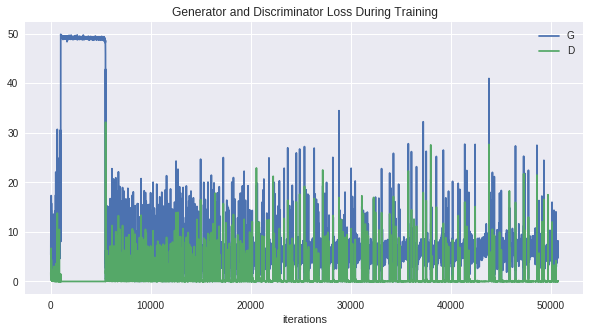

The overall cost function in the first experiments seems to indicate some overfitting, but for this kind of image that is not surprising.

And here is one of the most beautiful things about working with GANs: an animation of the learning progress

As always, it is the results, not the theory, that are the most interesting part of this kind of project.

Look at examples, where you can find music generated using the DCGAN.