Imagine a world where you can have a conversation with your data. SecondBrain is a framework that allows you to do just that by linking plain text prompts with external data sources, a technique commonly referred to as Retrieval Augmented Generation (RAG). The resulting context is then presented to an LLM, giving it the information it needs to answer your questions.

This means you can ask a question like:

Highlight the important changes made since October 1 2024 in the GitHub repository "SecondBrain" owned by "mcasperson"

and get a meaningful response against real-time data.

SecondBrain is available as a GitHub Action. It bundles a copy of Ollama that executes locally on the GitHub runner, removing the need to host any external LLMs. This allows you to generate summaries of your git diffs as part of a GitHub Actions Workflow.

Note

The ability to interact with other data sources via the GitHub Action may be added in the future.

Here is an example of how to use the action:

```yaml
jobs:
  # Running the summarization action in its own job allows it to be run in parallel with
  # the traditional build and test jobs. This is useful, because the summarization job
  # can take some time to complete.
  summarize:
    runs-on: ubuntu-latest
    steps:
      - name: SecondBrainAction
        id: secondbrain
        uses: mcasperson/SecondBrain@main
        with:
          prompt: 'Provide a summary of the changes from the git diffs. Use plain language. You will be penalized for offering code suggestions. You will be penalized for sounding excited about the changes.'
          token: ${{ secrets.GITHUB_TOKEN }}
          owner: ${{ github.repository_owner }}
          repo: ${{ github.event.repository.name }}
          sha: ${{ github.sha }}
      - name: Get the diff summary
        env:
          # The output often contains all sorts of special characters like quotes, newlines, and backticks.
          # Capturing the output as an environment variable allows it to be used in subsequent steps.
          RESPONSE: ${{ steps.secondbrain.outputs.response }}
        # Save the response as a job summary:
        # https://docs.github.com/en/actions/writing-workflows/choosing-what-your-workflow-does/workflow-commands-for-github-actions#adding-a-job-summary
        run: echo "$RESPONSE" >> "$GITHUB_STEP_SUMMARY"
```

| Input | Description | Mandatory | Default |
|---|---|---|---|
| `prompt` | The prompt sent to the LLM to generate the summary. This prompt has access to system prompts that contain the single-paragraph summaries of the commits. | No | A default prompt is used to generate summaries of the git diffs. See `action.yml` for the exact default value. |
| `token` | The GitHub token used to access the repository. Set this to `${{ secrets.GITHUB_TOKEN }}`. | Yes | None |
| `owner` | The owner of the repository. | No | `${{ github.repository_owner }}` |
| `repo` | The name of the repository. | No | `${{ github.event.repository.name }}` |
| `sha` | A comma-separated list of commit SHAs to generate the summary for. This is typically set to `${{ github.sha }}`. See this StackOverflow post for details on getting the SHAs associated with PRs. | Yes | None |
| `model` | The model used to answer the prompt. Using a custom model slows down the action because the model must be downloaded first. | No | `llama3.2` |
| `gitDiffModel` | The model used to summarize the git diffs. Using a custom model slows down the action because the model must be downloaded first. | No | `qwen2.5-coder` |
| `getSummarizeIndividualDiffs` | Whether to summarize each individual diff into a single, plain-text paragraph before it is passed as context to the LLM when answering the prompt. Summarizing each diff condenses many large diffs into simple paragraphs, allowing more commits to fit in the prompt context. However, each commit is summarized in isolation, so multiple related commits may not be interpreted correctly. Set the value to `false` when the diffs of each commit are small enough to fit into the context window (8192 tokens by default), and to `true` if you expect to process large diffs. When setting the value to `false`, you may also consider setting the `model` input to `qwen2.5-coder` so that an LLM trained specifically for coding tasks processes the prompt. Note that when set to `false`, the annotations are unlikely to match the diffs to the prompt answer. | No | `true` |
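The `getSummarizeIndividualDiffs` trade-off comes down to whether the raw diffs fit in the context window. The Java sketch below is not the action's implementation; it estimates token counts with the rough rule of about 4 characters per token (the same assumption the configuration section makes) and compares the total against the 8192-token default. The `DiffContextEstimator` class and its methods are hypothetical.

```java
import java.util.List;

/**
 * Hypothetical helper illustrating the getSummarizeIndividualDiffs trade-off.
 * Assumes roughly 4 characters per token; this is an estimate, not a real tokenizer.
 */
public class DiffContextEstimator {
    // Rough characters-per-token ratio (an assumption, not an exact tokenizer).
    static final int CHARS_PER_TOKEN = 4;
    // Default context window size mentioned in the action documentation.
    static final int DEFAULT_CONTEXT_TOKENS = 8192;

    /** Estimates the token count of a piece of text, rounding up. */
    static int estimateTokens(String text) {
        return (text.length() + CHARS_PER_TOKEN - 1) / CHARS_PER_TOKEN;
    }

    /**
     * Returns true when the prompt plus the raw diffs are unlikely to fit in the context
     * window, i.e. when summarizing each diff individually is the safer choice.
     */
    static boolean shouldSummarizeIndividually(List<String> diffs, String prompt, int contextTokens) {
        int total = estimateTokens(prompt);
        for (String diff : diffs) {
            total += estimateTokens(diff);
        }
        return total > contextTokens;
    }

    public static void main(String[] args) {
        String prompt = "Provide a summary of the changes from the git diffs.";
        List<String> smallDiffs = List.of("+ added a line\n- removed a line\n");
        List<String> largeDiffs = List.of("x".repeat(40_000));
        System.out.println(shouldSummarizeIndividually(smallDiffs, prompt, DEFAULT_CONTEXT_TOKENS)); // false
        System.out.println(shouldSummarizeIndividually(largeDiffs, prompt, DEFAULT_CONTEXT_TOKENS)); // true
    }
}
```

A character-based estimate is crude (code diffs often tokenize less efficiently than prose), so a real check would leave some headroom below the limit.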

| Output | Description |
|---|---|
| `response` | The response generated by the LLM. This is the summary of the git diffs. |

This is what the response looks like:

SecondBrain was awarded second place in the Payara Hackathon - Generative AI on Jakarta EE!

An example usage of SecondBrain is a prompt like this:

Summarize 7 days worth of messages from the #announcements channel in Slack

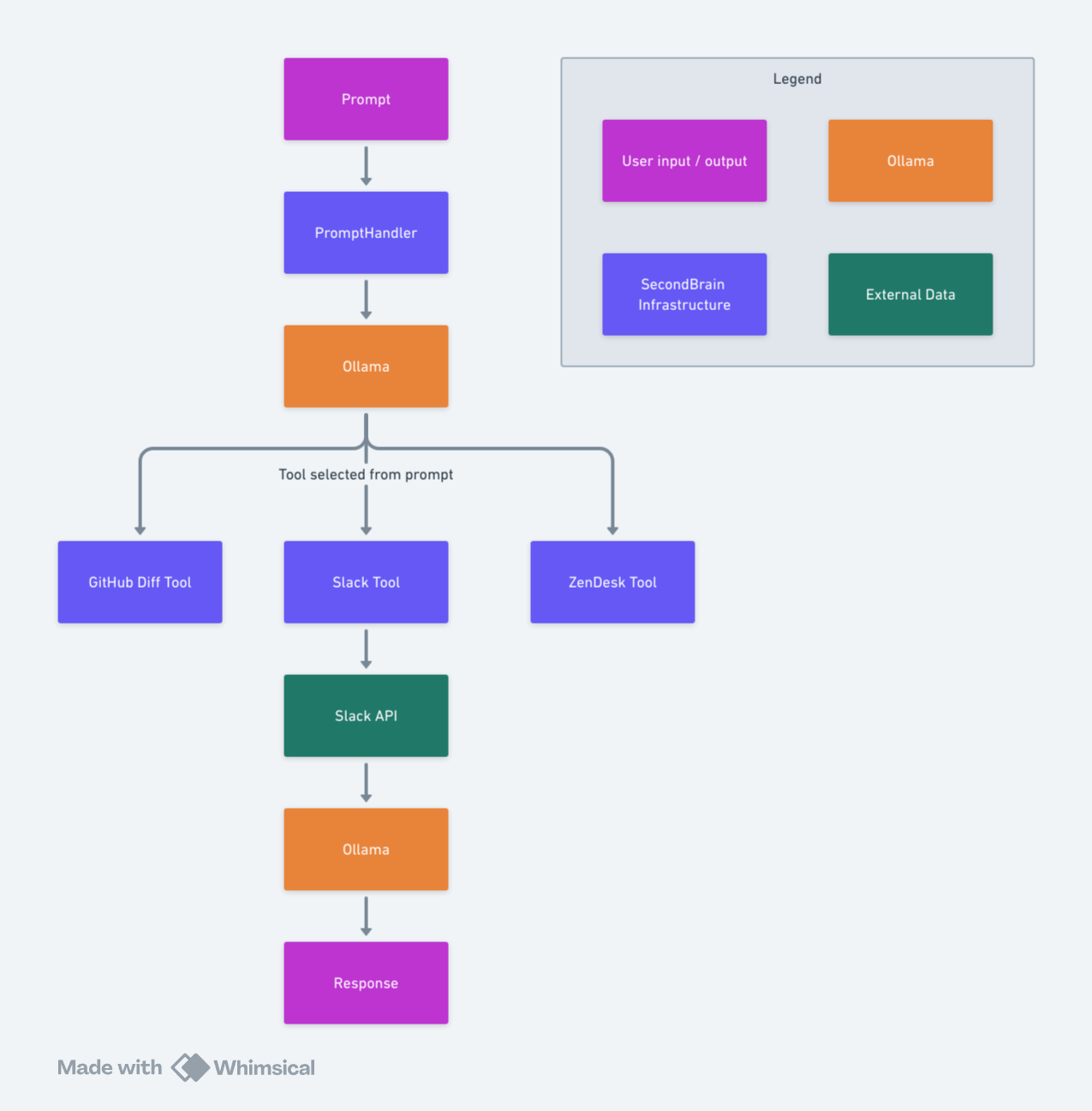

This prompt is handled like this:

- The prompt is passed to Ollama, which selects the `secondbrain.tools.SlackChannel` tool. This is commonly referred to as tool or function calling.
- Ollama also extracts the channel name `#announcements` and the number of days `7` from the prompt as arguments.
- The `secondbrain.tools.SlackChannel` tool is called with the arguments `#announcements` and `7`.
- The tool uses the Slack API to find messages from the last `7` days in the `#announcements` channel.
- The messages are placed in the context of the original prompt and passed back to Ollama.
- Ollama answers the prompt using the message context and returns the result to the user.
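The flow above can be sketched in plain Java. This is a self-contained illustration, not SecondBrain's code: the LLM steps are stubbed out, so the tool name and arguments that Ollama would extract are hard-coded in a hypothetical `ToolCall` record, and the hypothetical `FakeSlackChannelTool` returns canned messages instead of calling the Slack API.

```java
import java.util.List;
import java.util.Map;

/**
 * Self-contained sketch of the tool-calling flow. The LLM steps are stubbed: in SecondBrain,
 * Ollama selects the tool and extracts the arguments, whereas here ToolCall is built by hand.
 * All names in this class are hypothetical.
 */
public class ToolCallingFlow {
    /** The tool name and arguments that the LLM would return from a function-calling request. */
    record ToolCall(String toolName, Map<String, String> arguments) {}

    /** Hypothetical stand-in for a tool such as secondbrain.tools.SlackChannel. */
    interface Tool {
        String name();

        /** Fetches external data and returns it as plain-text context. */
        String call(Map<String, String> arguments);
    }

    /** Fake Slack tool that returns canned messages instead of calling the Slack API. */
    static final class FakeSlackChannelTool implements Tool {
        public String name() { return "SlackChannel"; }

        public String call(Map<String, String> args) {
            String channel = args.getOrDefault("channel", "#general");
            int days = Integer.parseInt(args.getOrDefault("days", "1"));
            return "Messages from " + channel + " over the last " + days + " days:\n"
                    + "- The office is closed on Friday.\n- Version 2.0 ships next week.";
        }
    }

    /** Runs the selected tool and places its output in the context of the original prompt. */
    static String buildFinalPrompt(String userPrompt, ToolCall call, List<Tool> tools) {
        Tool tool = tools.stream()
                .filter(t -> t.name().equals(call.toolName()))
                .findFirst()
                .orElseThrow(() -> new IllegalArgumentException("Unknown tool: " + call.toolName()));
        String context = tool.call(call.arguments());
        return "Context:\n" + context + "\n\nQuestion:\n" + userPrompt;
    }

    public static void main(String[] args) {
        String prompt = "Summarize 7 days worth of messages from the #announcements channel in Slack";
        // Stub for the selection steps: the LLM would normally pick the tool and extract these arguments.
        ToolCall call = new ToolCall("SlackChannel", Map.of("channel", "#announcements", "days", "7"));
        // Call the tool and place its output in the prompt context.
        String finalPrompt = buildFinalPrompt(prompt, call, List.of(new FakeSlackChannelTool()));
        // The final step would send finalPrompt back to Ollama; here it is only printed.
        System.out.println(finalPrompt);
    }
}
```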

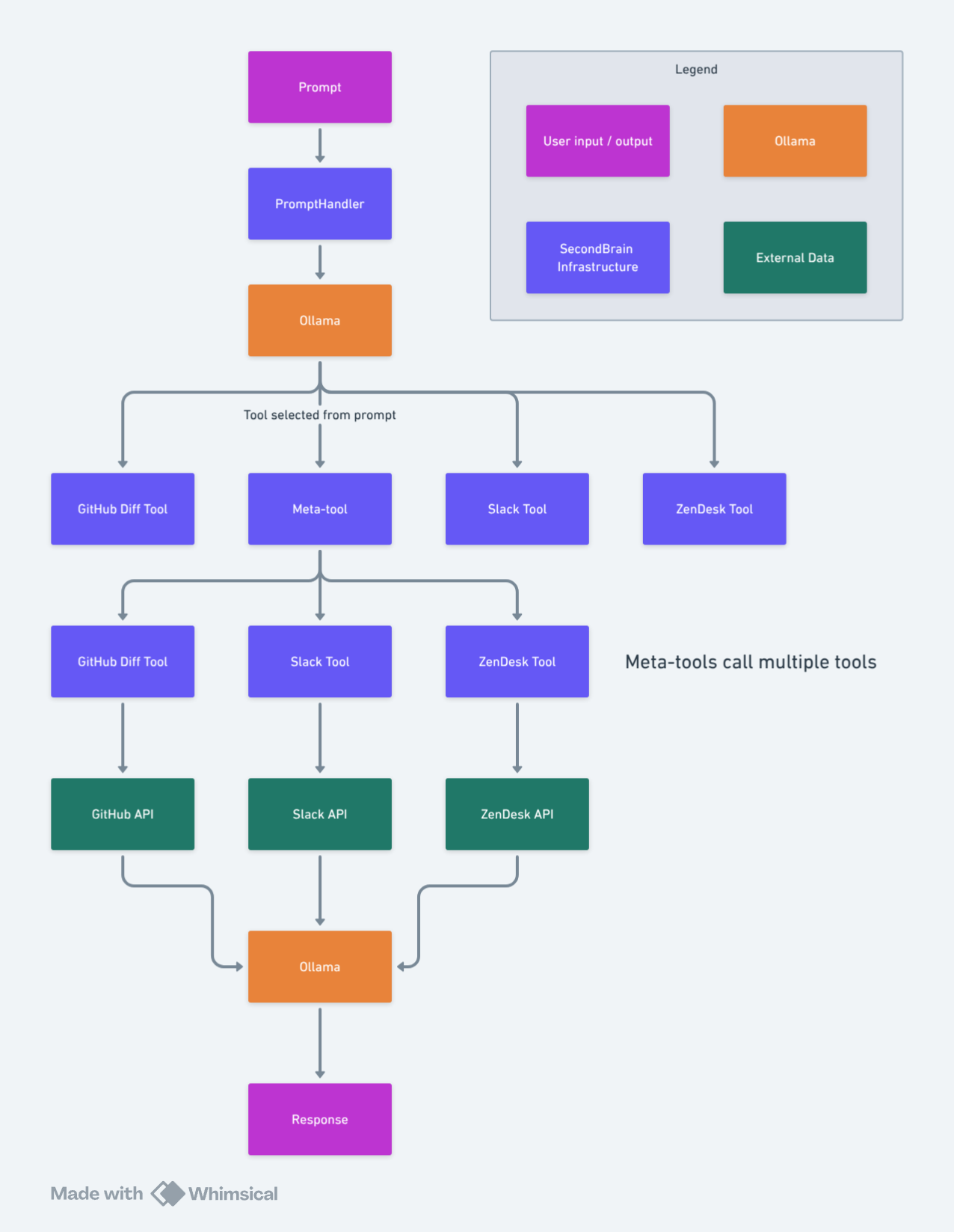

Meta-tools call multiple tools and collate the context to be passed to the LLM to generate a response. This allows SecondBrain to answer complex questions that require data from multiple sources.
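A meta-tool can be thought of as a loop over several tools whose outputs are labelled and concatenated into one context block. The sketch below assumes each data source can be modelled as a `Supplier<String>` and that sources are dropped, rather than truncated, once a character budget is reached; both choices, and the `MetaToolSketch` name, are illustrative assumptions rather than SecondBrain's behaviour. The budget in `main` borrows the documented `7000 * 4` default of `sb.ollama.contentlength`.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Supplier;

/**
 * Hypothetical sketch of a meta-tool: it calls several data sources and collates their
 * output into one labelled context block for the LLM. Not SecondBrain's implementation.
 */
public class MetaToolSketch {
    /**
     * Calls each source in iteration order and concatenates the results under headings,
     * stopping before the combined context would exceed maxChars.
     */
    static String collate(Map<String, Supplier<String>> sources, int maxChars) {
        StringBuilder context = new StringBuilder();
        for (Map.Entry<String, Supplier<String>> entry : sources.entrySet()) {
            String section = "--- " + entry.getKey() + " ---\n" + entry.getValue().get() + "\n";
            if (context.length() + section.length() > maxChars) {
                break; // Drop the remaining sources rather than cutting one off mid-way.
            }
            context.append(section);
        }
        return context.toString();
    }

    public static void main(String[] args) {
        // LinkedHashMap keeps insertion order, so sources are collated in a predictable order.
        Map<String, Supplier<String>> sources = new LinkedHashMap<>();
        // Stand-ins for real tools such as Slack, ZenDesk, or GitHub lookups.
        sources.put("Slack", () -> "Customer asked about the 2.0 release date.");
        sources.put("ZenDesk", () -> "Ticket: login fails after upgrading to 2.0.");
        sources.put("GitHub", () -> "Commit: fix login regression introduced in 2.0.");
        // Budget mirrors the documented 7000 * 4 character default.
        System.out.println(collate(sources, 7000 * 4));
    }
}
```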

The results generated by SecondBrain include annotations that link the source information to the response generated by the LLM. This helps solve a common problem with LLMs: you cannot be sure why the model provided the answer it did. By annotating the response with links to the source information, you can quickly verify the answer by confirming the source information.

Matching the LLM's answer to the source is done by converting sentences to vectors with a model like all-MiniLM-L6-v2. When a sentence in the response is close to a sentence in the source, the response is annotated with a link to that source.
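The matching step can be illustrated with cosine similarity between sentence embeddings, assuming that is how closeness is measured. In this sketch the embedding vectors are hard-coded toy values (all-MiniLM-L6-v2 would produce 384-dimensional vectors), and the 0.8 threshold, the best-match rule, and the `AnnotationSketch` name are assumptions, not SecondBrain's actual settings.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Sketch of sentence-level annotation: each response sentence is compared with each source
 * sentence using cosine similarity of their embedding vectors. Real vectors would come from an
 * embedding model such as all-MiniLM-L6-v2; the threshold is an arbitrary assumption.
 */
public class AnnotationSketch {
    record Annotation(int responseIndex, int sourceIndex, double similarity) {}

    /** Cosine similarity: dot(a, b) / (|a| * |b|). Returns 0 for zero-length vectors. */
    static double cosine(double[] a, double[] b) {
        if (a.length != b.length) {
            throw new IllegalArgumentException("Vectors must have the same dimension");
        }
        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        if (normA == 0 || normB == 0) {
            return 0;
        }
        return dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }

    /** Links each response sentence to its most similar source sentence, if it clears the threshold. */
    static List<Annotation> annotate(List<double[]> response, List<double[]> sources, double threshold) {
        List<Annotation> result = new ArrayList<>();
        for (int r = 0; r < response.size(); r++) {
            int best = -1;
            double bestScore = threshold;
            for (int s = 0; s < sources.size(); s++) {
                double score = cosine(response.get(r), sources.get(s));
                if (score >= bestScore) {
                    best = s;
                    bestScore = score;
                }
            }
            if (best >= 0) {
                result.add(new Annotation(r, best, bestScore));
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // Toy 3-dimensional "embeddings" standing in for real sentence vectors.
        List<double[]> source = List.of(new double[]{1, 0, 0}, new double[]{0, 1, 0});
        List<double[]> response = List.of(new double[]{0.9, 0.1, 0}, new double[]{0, 0, 1});
        // The first response sentence matches source 0; the second matches nothing.
        System.out.println(annotate(response, source, 0.8));
    }
}
```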

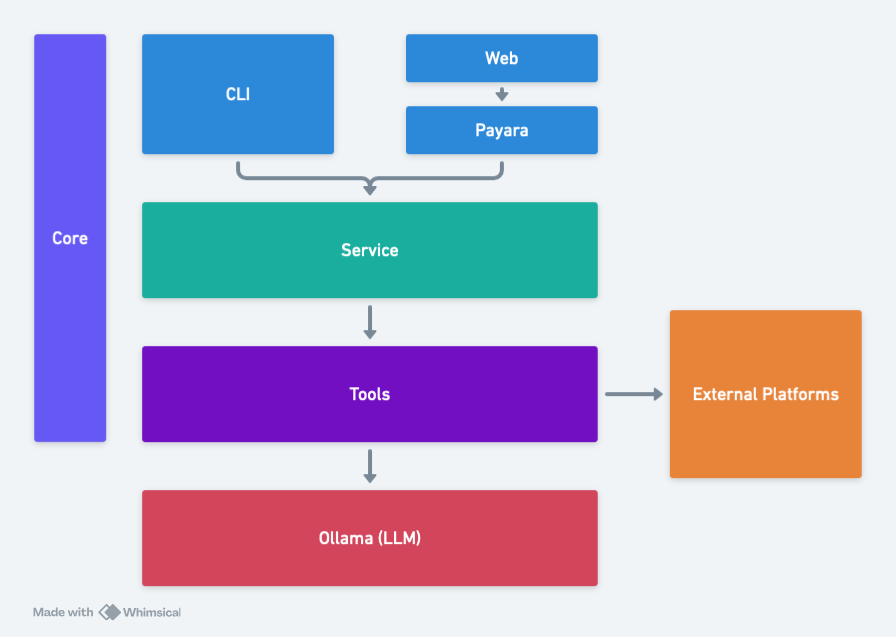

SecondBrain makes heavy use of Jakarta EE and MicroProfile, executed in a Docker image using Payara Micro.

SecondBrain is distributed as a Docker image and runs alongside Ollama using Docker Compose:

- Run `git clone https://github.com/mcasperson/SecondBrain.git` to clone the repository.
- Run `cd SecondBrain` to enter the project directory.
- Run `docker compose up` to start the Docker Compose stack.
- Run `docker exec secondbrain-ollama-1 ollama pull llama3.2` to pull the `llama3.2` LLM. This is used by the queries.
- Run `docker exec secondbrain-ollama-1 ollama pull llama3.1` to pull the `llama3.1` LLM. This is used by tool selection.
- Create a GitHub access token.
- Open https://localhost:8181, paste in your access token, and click `Submit` to answer the default query.
- Optional: Run `docker exec secondbrain-ollama-1 ollama ps` to see the status of the Ollama service.

The CLI is useful when you want to automate SecondBrain, usually to run it on a schedule. For example, you could summarize the previous day's ZenDesk tickets with a command like:

```bash
java \
  -Dsb.ollama.toolmodel=llama3.1 \
  -Dsb.ollama.model=gemma2 \
  -Dsb.ollama.contextlength=32000 \
  -Dstdout.encoding=UTF-8 \
  -jar secondbrain.jar \
  "Given 1 days worth of ZenDesk tickets to recipient \"[email protected]\" provide a summary of the questions and problems in the style of a news article with up to 7 paragraphs. You must carefully consider each ticket when generating the summary. You will be penalized for showing category percentages. You will be penalized for including ticket IDs or reference numbers. Use concise, plain, and professional language. You will be penalized for using emotive or excited language. You will be penalized for including a generic final summary paragraph. You must only summarize emails that are asking for support. You will be penalized for summarizing emails that are not asking for support. You will be penalized for summarizing marketing emails. You will be penalized for attempting to answer the questions. You will be penalized for using terms like flooded, wave, or inundated. You will be penalized for including an introductory paragraph."
```

`ZendeskSendToSlack.ps1` is an example PowerShell script that executes the CLI. This script is run using Windows Task Scheduler with the command:

```powershell
pwsh.exe -file "C:\path\to\ZendeskSendToSlack.ps1"
```

SecondBrain excels at generating reports from unstructured data. Because local LLMs are quite slow (unless you happen to have a collection of high-end GPUs), these reports can be generated as part of a batch job using the CLI tool. This allows you to run SecondBrain overnight and review the report in the morning.
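If you would rather launch the CLI from a JVM-based scheduler than from PowerShell, one option is Java's `ProcessBuilder`. The sketch below is hypothetical: `CliLauncher` and the example prompt are illustrative, and it assumes `secondbrain.jar` is in the working directory. Each list element is passed to the process as a separate argument, so on Unix-like systems the prompt's embedded quotes need no shell escaping. It only prints the command unless run with `--run`.

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/**
 * Hypothetical launcher for the SecondBrain CLI. Each -D option becomes a JVM system property
 * that MicroProfile Config can read; the prompt is passed as a single argument.
 */
public class CliLauncher {
    /** Builds the java command line: JVM options first, then -jar, then the prompt. */
    static List<String> buildCommand(String jarPath, Map<String, String> properties, String prompt) {
        List<String> command = new ArrayList<>();
        command.add("java");
        properties.forEach((key, value) -> command.add("-D" + key + "=" + value));
        command.add("-jar");
        command.add(jarPath);
        command.add(prompt);
        return command;
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        // Property names are taken from the CLI example above; the values are examples only.
        Map<String, String> properties = new LinkedHashMap<>();
        properties.put("sb.ollama.toolmodel", "llama3.1");
        properties.put("sb.ollama.model", "gemma2");
        properties.put("stdout.encoding", "UTF-8");

        List<String> command = buildCommand("secondbrain.jar", properties,
                "Summarize 7 days worth of messages from the #announcements channel in Slack");
        System.out.println(String.join(" | ", command));

        // Only launch the CLI when explicitly asked, because it needs Ollama and the jar to be present.
        if (args.length > 0 && args[0].equals("--run")) {
            Process process = new ProcessBuilder(command).inheritIO().start();
            System.exit(process.waitFor());
        }
    }
}
```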

The scripts directory contains many examples of this kind of report, each generated by a PowerShell script and run as a scheduled task.

Some examples include:

- Summarize the last week's worth of messages in a Slack channel

- Generate a summary of the last week's worth of ZenDesk tickets

- Create a report highlighting the changes in a GitHub repository

The project is split into modules:

- `secondbrain-core`, which contains shared utilities and interfaces used by all other modules.
- `secondbrain-service`, which orchestrates the function calling with the LLM.
- `secondbrain-tools`, which contains the tools that interact with external data sources.
- `secondbrain-web`, which is a web interface for interacting with the service.
- `secondbrain-cli`, which is a CLI tool for interacting with the service.

SecondBrain supports switching between different LLMs by changing the `sb.ollama.model` configuration value. The following models are supported:

- `llama3.1` - Meta's open source LLM.
- `llama3.2` - Meta's open source LLM.
- `gemma2` - Google's open source LLM.
- `phi3` - Microsoft's Phi model.
- `qwen2` - Alibaba's Qwen model.

Support for new models requires implementing the secondbrain.domain.prompt.PromptBuilder interface.

Note that the function calling feature of SecondBrain only supports the `llama3.1` and `llama3.2` models. Function calling is configured via the `sb.ollama.toolmodel` configuration value.
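Different models expect different prompt templates, which is presumably why each model needs its own `PromptBuilder`. The sketch below is hypothetical: `ModelPromptBuilder` and its methods are invented for illustration and are not the actual `secondbrain.domain.prompt.PromptBuilder` API. It formats prompts with the published Llama 3 and Gemma 2 chat templates and picks a builder based on the configured model name.

```java
import java.util.List;

/**
 * Hypothetical illustration of per-model prompt formatting. The interface and method names are
 * invented for this sketch and are not the real secondbrain.domain.prompt.PromptBuilder API.
 */
public class PromptBuilderSketch {
    interface ModelPromptBuilder {
        /** Returns true if this builder handles the given Ollama model name. */
        boolean supports(String model);

        /** Combines system instructions and a user prompt into the model's chat format. */
        String build(String system, String user);
    }

    /** Llama 3.x chat template with explicit system, user, and assistant headers. */
    static final class Llama3PromptBuilder implements ModelPromptBuilder {
        public boolean supports(String model) { return model.startsWith("llama3"); }

        public String build(String system, String user) {
            return "<|begin_of_text|>"
                    + "<|start_header_id|>system<|end_header_id|>\n\n" + system + "<|eot_id|>"
                    + "<|start_header_id|>user<|end_header_id|>\n\n" + user + "<|eot_id|>"
                    + "<|start_header_id|>assistant<|end_header_id|>\n\n";
        }
    }

    /** Gemma 2 has no system role, so the instructions are prepended to the user turn. */
    static final class Gemma2PromptBuilder implements ModelPromptBuilder {
        public boolean supports(String model) { return model.startsWith("gemma2"); }

        public String build(String system, String user) {
            return "<start_of_turn>user\n" + system + "\n\n" + user + "<end_of_turn>\n"
                    + "<start_of_turn>model\n";
        }
    }

    static final List<ModelPromptBuilder> BUILDERS =
            List.of(new Llama3PromptBuilder(), new Gemma2PromptBuilder());

    /** Picks the first builder that supports the configured model, e.g. the sb.ollama.model value. */
    static ModelPromptBuilder forModel(String model) {
        return BUILDERS.stream()
                .filter(b -> b.supports(model))
                .findFirst()
                .orElseThrow(() -> new IllegalArgumentException("No prompt builder for " + model));
    }

    public static void main(String[] args) {
        System.out.println(forModel("gemma2").build("Answer briefly.", "What is RAG?"));
    }
}
```

Adding a model in this scheme means adding one more builder to the list, which matches the spirit of the note above that new models require a new `PromptBuilder` implementation.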

SecondBrain is configured via MicroProfile Config. MicroProfile allows these configuration values to be set in a number of different locations, including environment variables, system properties, and configuration files:

- `sb.slack.clientid` - The Slack client ID.
- `sb.slack.clientsecret` - The Slack client secret.
- `sb.zendesk.accesstoken` - The ZenDesk token.
- `sb.zendesk.user` - The ZenDesk user.
- `sb.zendesk.url` - The ZenDesk URL.
- `sb.google.serviceaccountjson` - The Google service account JSON file used to authenticate with Google APIs.
- `sb.ollama.url` - The URL of the Ollama service (defaults to `http://localhost:11434`).
- `sb.ollama.model` - The model to use in Ollama. Supports `llama3.x`, `gemma2`, `phi3`, and `qwen2`. See the classes that implement `secondbrain.domain.prompt.PromptBuilder` (defaults to `llama3.2`).
- `sb.ollama.toolmodel` - The model to use in Ollama to select a tool. This only supports llama3 models, i.e. `llama3.1` or `llama3.2` (defaults to `llama3.2`).
- `sb.ollama.contentlength` - The context window length to use in Ollama (defaults to `7000 * 4`, where each token is assumed to be 4 characters).
- `sb.encryption.password` - The password used to encrypt sensitive data stored by web clients (defaults to `12345678`).
- `sb.tools.debug` - Whether to log debug information about the tool in the response (defaults to `false`).
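MicroProfile Config checks its default sources in ordinal order: system properties (400), then environment variables (300), then `META-INF/microprofile-config.properties` (100). For environment variables, a name like `sb.ollama.model` is also looked up as `sb_ollama_model` and `SB_OLLAMA_MODEL`, so `SB_OLLAMA_MODEL=gemma2` works in shells that reject dots in variable names. The plain-Java sketch below approximates that lookup for illustration only (it skips the properties file); a real application would use the MicroProfile Config API, and `ConfigLookupSketch` is a hypothetical name.

```java
import java.util.HashMap;
import java.util.Locale;
import java.util.Map;
import java.util.Optional;

/**
 * Plain-Java approximation of how MicroProfile Config resolves a value from its default
 * sources: system properties (ordinal 400) first, then environment variables (ordinal 300).
 * Real applications should use the MicroProfile Config API; this only shows the lookup order.
 */
public class ConfigLookupSketch {
    /** Environment variable names tried for a property, following the MicroProfile mapping rules. */
    static String[] envNames(String property) {
        String sanitized = property.replaceAll("[^a-zA-Z0-9]", "_");
        return new String[]{property, sanitized, sanitized.toUpperCase(Locale.ROOT)};
    }

    /** Returns the first value found, checking system properties before environment variables. */
    static Optional<String> lookup(String property, Map<String, String> sysProps, Map<String, String> env) {
        if (sysProps.containsKey(property)) {
            return Optional.of(sysProps.get(property));
        }
        for (String name : envNames(property)) {
            if (env.containsKey(name)) {
                return Optional.of(env.get(name));
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        // Copy the real JVM system properties into a String map.
        Map<String, String> sysProps = new HashMap<>();
        System.getProperties().forEach((k, v) -> sysProps.put(k.toString(), v.toString()));
        // Fall back to the documented default when neither source sets the value.
        String model = lookup("sb.ollama.model", sysProps, System.getenv()).orElse("llama3.2");
        System.out.println("sb.ollama.model = " + model);
    }
}
```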

The GoogleDocs tool can use Application Default Credentials to authenticate with Google APIs. To set this up, run the following command:

```bash
gcloud auth application-default login --scopes https://www.googleapis.com/auth/documents.readonly,https://www.googleapis.com/auth/cloud-platform --client-id-file ~/Downloads/client.json
```

To run SecondBrain locally without Docker:

- Install Ollama locally.
- Pull the `llama3.2` model with the command `ollama pull llama3.2`.
- Pull the `llama3.1` model with the command `ollama pull llama3.1`.
- Build and install all the modules with the command `mvn clean install`.
- Start Payara Micro with the command `cd web; mvn package; mvn payara-micro:start`.
- Create a GitHub access token.
- Open https://localhost:8181/index.html in a browser, paste in the access token, and run the default query.

See the secondbrain.tools.HelloWorld tool for an example of how to create a new tool.
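For orientation before reading `secondbrain.tools.HelloWorld`, here is a hypothetical sketch of what a tool generally needs: a name and description that the tool-selection model can match against the prompt, argument definitions with defaults, and a `call` method that returns context. The `Tool`, `ToolArgument`, and `GreetingTool` types are invented for this example; the real interfaces in SecondBrain may differ.

```java
import java.util.List;
import java.util.Map;

/**
 * Hypothetical shape of a SecondBrain-style tool. The real interfaces live in the SecondBrain
 * modules and may differ; see secondbrain.tools.HelloWorld for the actual example.
 */
public class HelloWorldToolSketch {
    /** Describes one argument so the tool-selection model knows what to extract from the prompt. */
    record ToolArgument(String name, String description, String defaultValue) {}

    interface Tool {
        String name();

        String description();

        List<ToolArgument> arguments();

        String call(Map<String, String> arguments);
    }

    static final class GreetingTool implements Tool {
        public String name() { return "Greeting"; }

        public String description() { return "Returns a greeting message"; }

        public List<ToolArgument> arguments() {
            return List.of(new ToolArgument("greeting", "The greeting to display", "World"));
        }

        public String call(Map<String, String> args) {
            // Fall back to the declared default when the LLM did not extract a value.
            String greeting = args.getOrDefault("greeting", arguments().get(0).defaultValue());
            return "Hello, " + greeting + "!";
        }
    }

    /** Renders the tool definition as text that could be included in a tool-selection prompt. */
    static String describe(Tool tool) {
        StringBuilder sb = new StringBuilder(tool.name() + ": " + tool.description());
        for (ToolArgument arg : tool.arguments()) {
            sb.append("\n  ").append(arg.name()).append(" - ").append(arg.description())
                    .append(" (default: ").append(arg.defaultValue()).append(")");
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        Tool tool = new GreetingTool();
        System.out.println(describe(tool));
        System.out.println(tool.call(Map.of()));
        System.out.println(tool.call(Map.of("greeting", "SecondBrain")));
    }
}
```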