Imagine a world where you can have a conversation with your data. SecondBrain is a framework that allows you to do just that by linking plain text prompts with external data sources, a technique commonly referred to as Retrieval Augmented Generation (RAG). The resulting context is then presented to an LLM to provide the information it needs to answer your questions.

This means you can ask a question like:

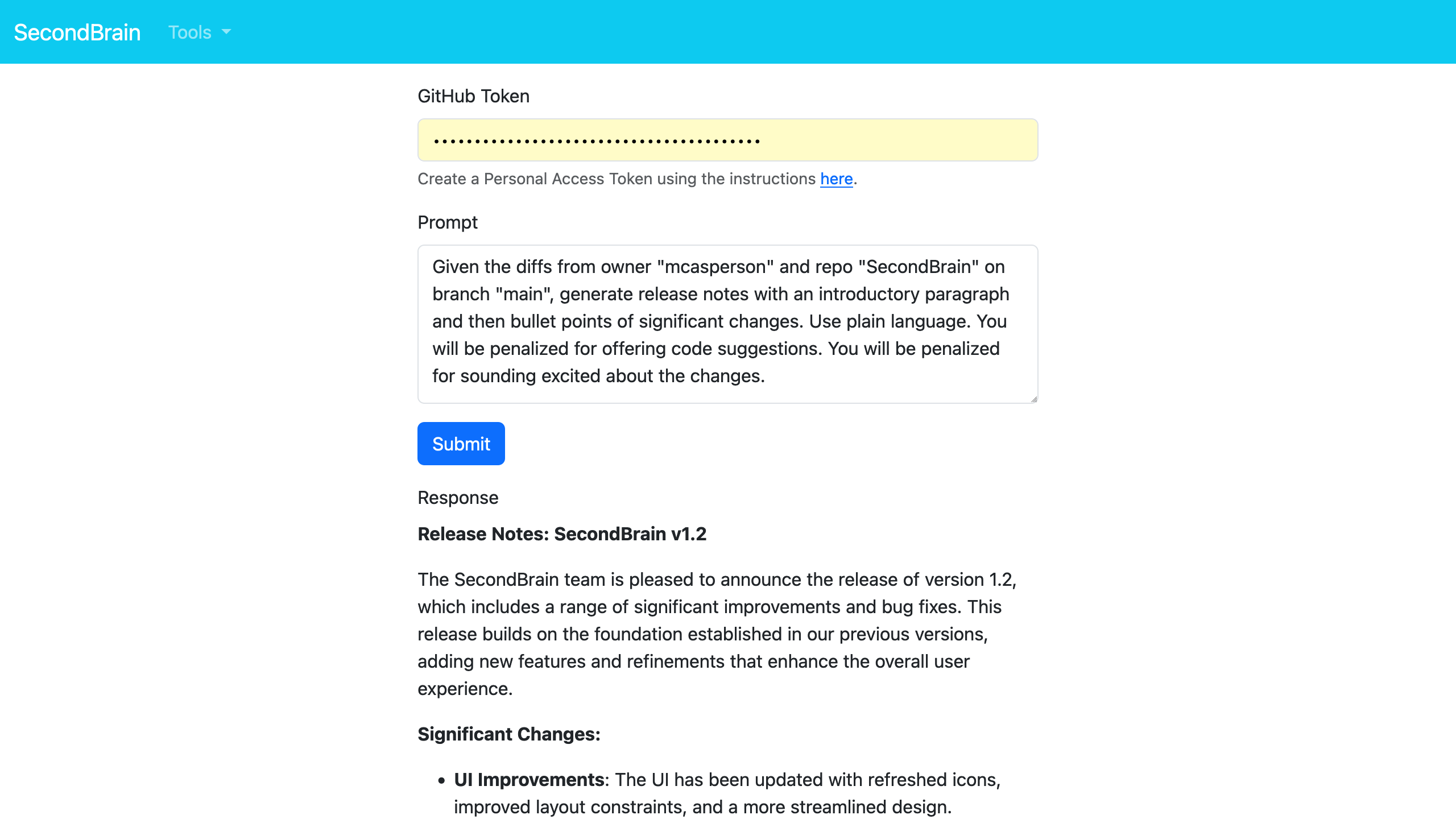

Highlight the important changes made since October 1 2024 in the GitHub repository "SecondBrain" owned by "mcasperson"

and get a meaningful response against real-time data.

An example usage of SecondBrain is a prompt like this:

Summarize 7 days worth of messages from the #announcements channel in Slack

This prompt is handled like this:

- The prompt is passed to Ollama, which selects the `secondbrain.tools.SlackChannel` tool. This is commonly referred to as tool or function calling.
- Ollama also extracts the channel name `#announcements` and days `7` from the prompt as arguments.
- The `secondbrain.tools.SlackChannel` tool is called with the arguments `#announcements` and `7`.
- The tool uses the Slack API to find messages from the last `7` days in the `#announcements` channel.
- The messages are placed in the context of the original prompt and passed back to Ollama.
- Ollama answers the prompt with the messages context and returns the result to the user.
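The flow above can be sketched in a few lines of Java. This is a minimal illustration, not SecondBrain's actual code: the `Tool`, `ToolCall`, and `Llm` types, the `handle` method, and the canned `fakeLlm` and `fakeSlackTool` helpers are all hypothetical names introduced here, and the stand-in LLM returns fixed values instead of calling Ollama.

```java
import java.util.List;
import java.util.Map;

/** Hypothetical sketch of the flow above; these names are not SecondBrain's API. */
final class ToolCallingSketch {

    /** A tool fetches external data for the arguments the LLM extracted. */
    interface Tool {
        String name();
        String call(Map<String, String> arguments);
    }

    /** The LLM's decision: which tool to call, and with which arguments. */
    record ToolCall(String toolName, Map<String, String> arguments) {}

    /** Stand-in for the LLM; a real implementation would talk to Ollama. */
    interface Llm {
        ToolCall selectTool(String prompt, List<Tool> tools);
        String answer(String prompt, String context);
    }

    static String handle(String prompt, Llm llm, List<Tool> tools) {
        // The LLM selects a tool and extracts its arguments from the prompt.
        ToolCall call = llm.selectTool(prompt, tools);

        // Find the selected tool by name and invoke it with those arguments.
        Tool tool = tools.stream()
                .filter(t -> t.name().equals(call.toolName()))
                .findFirst()
                .orElseThrow(() -> new IllegalArgumentException("Unknown tool: " + call.toolName()));
        String context = tool.call(call.arguments());

        // The tool's output becomes the context for the final answer.
        return llm.answer(prompt, context);
    }

    /** Canned tool that pretends to query Slack. */
    static Tool fakeSlackTool() {
        return new Tool() {
            @Override public String name() { return "SlackChannel"; }
            @Override public String call(Map<String, String> arguments) {
                return "Messages from " + arguments.get("channel")
                        + " for the last " + arguments.get("days") + " days";
            }
        };
    }

    /** Canned LLM that always picks the Slack tool with fixed arguments. */
    static Llm fakeLlm() {
        return new Llm() {
            @Override public ToolCall selectTool(String prompt, List<Tool> tools) {
                return new ToolCall("SlackChannel", Map.of("channel", "#announcements", "days", "7"));
            }
            @Override public String answer(String prompt, String context) {
                return "Answer based on context: " + context;
            }
        };
    }

    public static void main(String[] args) {
        String prompt = "Summarize 7 days worth of messages from the #announcements channel in Slack";
        System.out.println(handle(prompt, fakeLlm(), List.of(fakeSlackTool())));
    }
}
```

Keeping the LLM and the tools behind small interfaces means the orchestration logic in `handle` can be exercised without a running Ollama instance or Slack credentials.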

SecondBrain makes heavy use of Jakarta EE and MicroProfile, executed in a Docker image using Payara Micro.

SecondBrain is distributed as a Docker image and runs alongside Ollama using Docker Compose:

- `git clone https://github.com/mcasperson/SecondBrain.git` to clone the repository
- `cd SecondBrain` to enter the project directory
- `docker compose up` to start the Docker Compose stack
- `docker exec secondbrain-ollama-1 ollama pull llama3.2` to pull the `llama3.2` LLM
- Create a GitHub access token
- Open https://localhost:8181, paste in your access token, and click `Submit` to answer the default query

The project is split into modules:

- `secondbrain-core`, which contains shared utilities and interfaces used by all other modules.
- `secondbrain-service`, which orchestrates the function calling with the LLM.
- `secondbrain-tools`, which contains the tools that interact with external data sources.
- `secondbrain-web`, which is a web interface for interacting with the service.
- `secondbrain-cli`, which is a CLI tool for interacting with the service.

SecondBrain is configured via MicroProfile Config. The supplied `SlackChannel` tool requires the following configuration to support OAuth logins. Note that MicroProfile allows these configuration values to be set in a number of different locations, including environment variables, system properties, and configuration files:

- `sb.slack.clientid` - The Slack client ID
- `sb.slack.clientsecret` - The Slack client secret
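To make the lookup order concrete, here is a rough sketch of how a property such as `sb.slack.clientid` resolves under MicroProfile Config's default rules: system properties take precedence over environment variables, and an environment variable matches either the exact name, the name with every character that is not alphanumeric or `_` replaced by `_`, or that form in upper case (so `SB_SLACK_CLIENTID`). The `ConfigLookupSketch` class and its methods are hypothetical and only mimic these rules; they ignore configuration files, and in a real application the MicroProfile Config API would do this work.

```java
import java.util.Locale;
import java.util.Map;
import java.util.Optional;
import java.util.Properties;

/** Hypothetical illustration of MicroProfile Config's default lookup rules. */
final class ConfigLookupSketch {

    /** Replace every character that is not alphanumeric or '_' with '_'. */
    static String sanitize(String property) {
        return property.replaceAll("[^A-Za-z0-9_]", "_");
    }

    /** The upper-case environment variable name for a property. */
    static String toEnvName(String property) {
        return sanitize(property).toUpperCase(Locale.ROOT);
    }

    /** System properties win over environment variables; config files are ignored here. */
    static Optional<String> lookup(String property,
                                   Properties systemProperties,
                                   Map<String, String> environment) {
        String value = systemProperties.getProperty(property);
        if (value == null) value = environment.get(property);
        if (value == null) value = environment.get(sanitize(property));
        if (value == null) value = environment.get(toEnvName(property));
        return Optional.ofNullable(value);
    }

    public static void main(String[] args) {
        System.out.println(toEnvName("sb.slack.clientid")); // prints SB_SLACK_CLIENTID
        System.out.println(lookup("sb.slack.clientid", System.getProperties(), System.getenv())
                .orElse("<not set>"));
    }
}
```

In practice this means the Slack credentials can be supplied to a container as `SB_SLACK_CLIENTID` and `SB_SLACK_CLIENTSECRET` without touching any configuration file.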

Other common configuration values include:

- `sb.ollama.url` - The URL of the Ollama service (defaults to http://localhost:11434)
- `sb.ollama.model` - The model to use in Ollama (defaults to `llama3.2`)
- `sb.ollama.contentlength` - The content window length to use in Ollama (defaults to `7000 * 4`, where each token is assumed to be 4 characters)
- `sb.encryption.password` - The password to use for encrypting sensitive data stored by web clients (defaults to `12345678`)
- `sb.tools.debug` - Whether to log debug information about the tool in the response (defaults to `false`)
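The default for `sb.ollama.contentlength` works out to 7000 tokens × 4 characters per token = 28000 characters. The sketch below shows one way such a limit could be applied to tool output before it is sent to the LLM. How SecondBrain actually enforces the limit is not described here, so the `ContextWindowSketch` class and its simple cut-off strategy are assumptions for illustration only.

```java
/** Hypothetical sketch: capping context at a character budget derived from tokens. */
final class ContextWindowSketch {

    static final int ASSUMED_CHARS_PER_TOKEN = 4;
    static final int DEFAULT_TOKENS = 7000;
    // 7000 * 4 = 28000 characters
    static final int DEFAULT_CONTENT_LENGTH = DEFAULT_TOKENS * ASSUMED_CHARS_PER_TOKEN;

    /** Keep at most contentLength characters from the start of the context. */
    static String trimToWindow(String context, int contentLength) {
        if (contentLength < 0) {
            throw new IllegalArgumentException("contentLength must not be negative");
        }
        return context.length() <= contentLength
                ? context
                : context.substring(0, contentLength);
    }

    public static void main(String[] args) {
        String context = "x".repeat(30_000);
        System.out.println(DEFAULT_CONTENT_LENGTH);                                 // prints 28000
        System.out.println(trimToWindow(context, DEFAULT_CONTENT_LENGTH).length()); // prints 28000
    }
}
```

Four characters per token is only a rough estimate, so a budget computed this way may overshoot or undershoot the model's real token limit.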

SecondBrain is built around Ollama, which is a local service exposing a huge selection of LLMs. Because the models run locally, your data and prompts are kept private and secure.

- Install Ollama locally
- Pull the `llama3.2` model with the command `ollama pull llama3.2`
- Build and install all the modules with the command `mvn clean install`
- Start Payara Micro with the command `cd web; mvn package; mvn payara-micro:start`
- Create a GitHub access token
- Open https://localhost:8181/index.html in a browser, paste in the access token, and run the default query

See the secondbrain.tools.HelloWorld tool for an example of how to create a new tool.