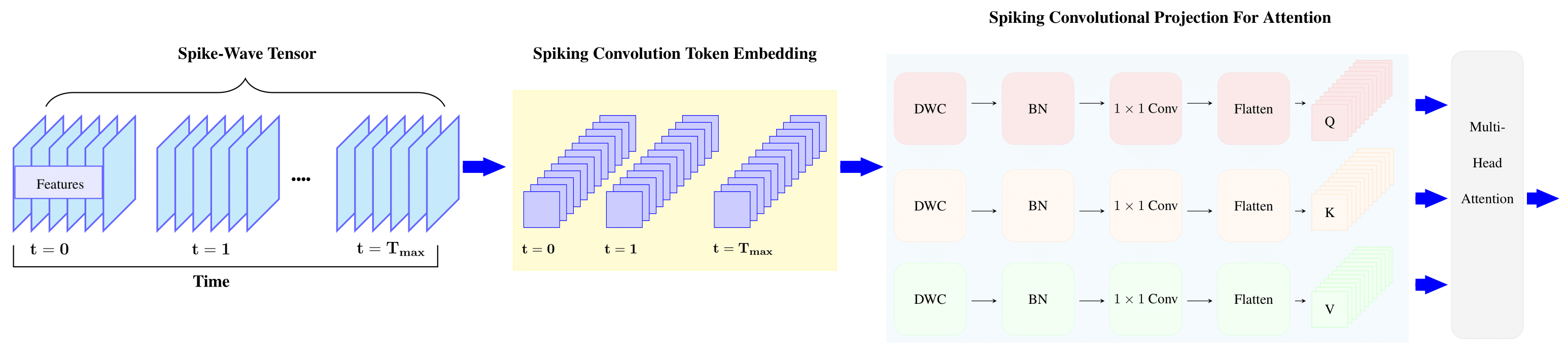

This repository contains the implementation of the SCvT model from the paper "Spiking Convolutional Vision Transformer" (under review).

To create an environment with Python 3.8 and install PyTorch with CUDA Toolkit 11.3, run:

$ conda create -n py3.8 python=3.8

$ conda activate py3.8

$ conda install pytorch torchvision torchaudio cudatoolkit=11.3 -c pytorch

To install all requirements via pip:

$ pip install -r requirements.txt

We test our code on the Tiny-ImageNet-200 dataset, starting with small subsets of 10 classes and then expanding to progressively larger subsets, up to all 200 classes. To download this dataset, follow these steps:

$ wget http://cs231n.stanford.edu/tiny-imagenet-200.zip

$ unzip tiny-imagenet-200.zip

To resize the images in the Tiny-ImageNet-200 dataset from 256x256 to 224x224, run:

$ python resize.py

To train the model, run:

$ python run.py

To take advantage of multiple GPUs when training our larger model, we modified the SpykeTorch library to support mini-batches across multiple GPUs (see parrlell_SpykeTorch). Note that in SpykeTorch's modules and functions, such as Convolution and Pooling, the mini-batch dimension is sacrificed for the time dimension, so the original library does not currently provide built-in batch processing.
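The batch-versus-time trade-off can be illustrated with a minimal sketch. It uses only plain PyTorch, not the SpykeTorch API, and is not necessarily how parrlell_SpykeTorch works. The helper name `apply_per_timestep` and all tensor sizes are assumptions made for illustration. The idea is to fold the batch and time axes into one leading axis, so that a stateless layer expecting a time-first `(time, C, H, W)` input can process a whole mini-batch at once.

```python
import torch
import torch.nn as nn


def apply_per_timestep(layer: nn.Module, x: torch.Tensor) -> torch.Tensor:
    """Apply a stateless 2-D layer to a (batch, time, C, H, W) tensor.

    Batch and time are folded into one leading axis so the layer sees an
    ordinary 4-D input; the two axes are separated again afterwards.
    """
    b, t, c, h, w = x.shape
    y = layer(x.reshape(b * t, c, h, w))
    return y.reshape(b, t, *y.shape[1:])


# Hypothetical sizes, chosen only for illustration.
torch.manual_seed(0)
conv = nn.Conv2d(in_channels=2, out_channels=4, kernel_size=3, padding=1, bias=False)
spikes = (torch.rand(8, 5, 2, 16, 16) > 0.8).float()  # (batch, time, C, H, W)

with torch.no_grad():
    # Batched path: all samples and time steps in a single call.
    potentials = apply_per_timestep(conv, spikes)
    # Reference path: one sample at a time, each as a time-first 4-D tensor.
    reference = torch.stack([conv(sample) for sample in spikes])

print(potentials.shape)
print(torch.allclose(potentials, reference, atol=1e-5))
```

Once a leading batch axis exists, a wrapper such as `torch.nn.DataParallel` can split inputs along dimension 0 across GPUs. Note that this folding is only valid for layers that treat each time step independently; operations that depend on the order of time steps need the time axis kept separate.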