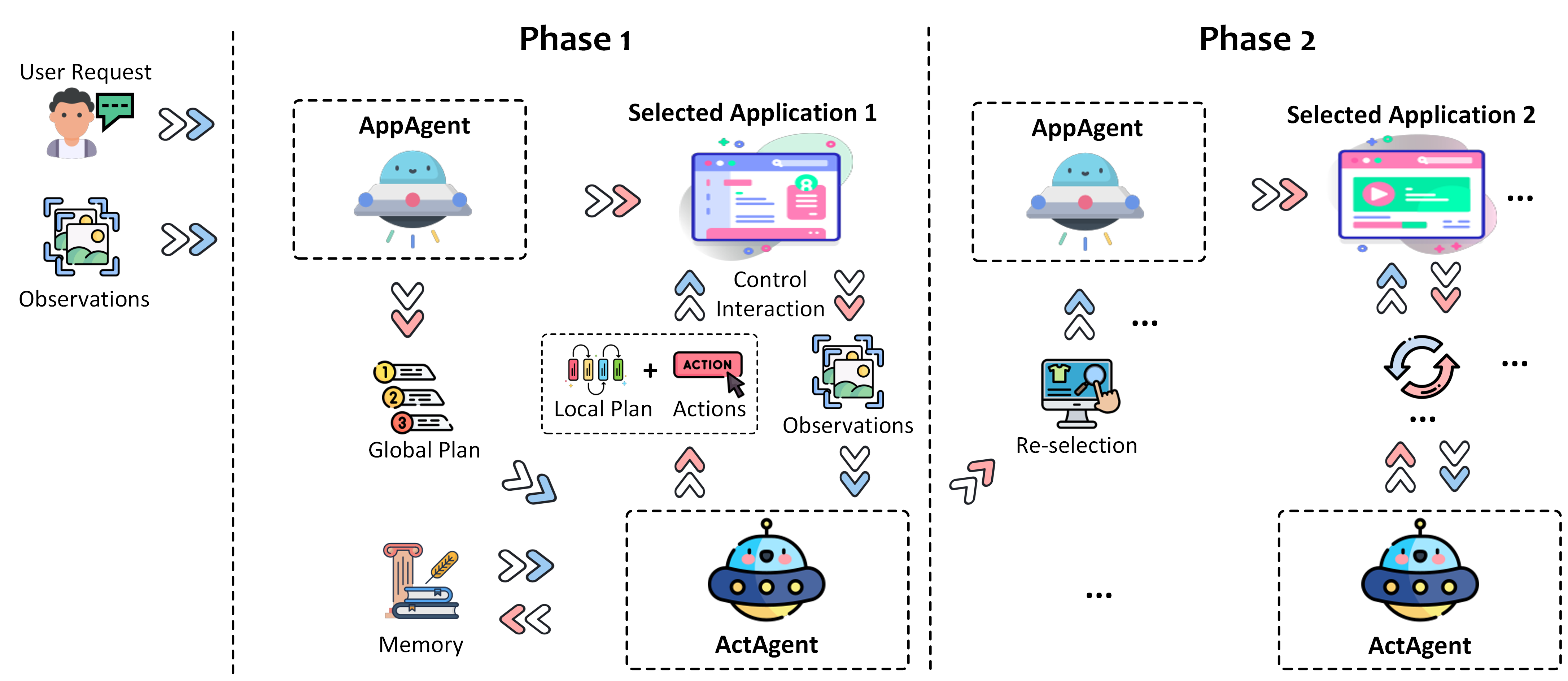

UFO is a UI-Focused dual-agent framework that fulfills user requests on Windows OS by seamlessly navigating and operating within a single application or across multiple applications.

UFO operates as a dual-agent framework, encompassing:

- AppAgent 🤖, responsible for choosing an application to fulfill the user request. This agent may also switch to a different application when a request spans multiple applications and the task has been partially completed in the preceding one.

- ActAgent 👾, responsible for iteratively executing actions on the selected application until the task is successfully completed within that application.

- Control Interaction 🎮, responsible for translating actions from AppAgent and ActAgent into interactions with the application and its UI controls. The targeted controls must be compatible with the Windows UI Automation API.

Both agents leverage the multi-modal capabilities of GPT-Vision to comprehend the application UI and fulfill the user's request. For more details, please consult our technical report.
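To make the division of work above concrete, here is a minimal Python sketch of the control flow: an outer loop in which AppAgent picks (or switches) the application, and an inner loop in which ActAgent proposes actions until its part of the task is done. This is not UFO's implementation. The classes, method names, keyword-based app selection, and fixed action plans are hypothetical stand-ins for the GPT-V-driven agents, and the Control Interaction stand-in only records actions instead of calling the Windows UI Automation API.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Action:
    """One step proposed by the hypothetical ActAgent stand-in."""
    control: str             # name of the UI control to operate on
    operation: str           # e.g. "click" or "set_text"
    finished: bool = False   # True when the sub-task in this application is complete


class AppAgent:
    """Hypothetical stand-in: picks the next application by keyword matching.
    The real AppAgent reasons over screenshots with GPT-V instead."""

    def select_app(self, request: str, open_apps: list[str], done: list[str]) -> str | None:
        for app in open_apps:
            if app.lower() in request.lower() and app not in done:
                return app
        return None  # no application left to work on


class ActAgent:
    """Hypothetical stand-in: replays a fixed plan of actions per application."""

    def __init__(self, plans: dict[str, list[Action]]):
        self.plans = plans

    def next_action(self, app: str, step: int) -> Action:
        return self.plans[app][step]


def control_interaction(app: str, action: Action, log: list[str]) -> None:
    """Stand-in for the Control Interaction layer: records instead of driving UI Automation."""
    log.append(f"{app}: {action.operation} {action.control}")


def run(request: str, open_apps: list[str], app_agent: AppAgent, act_agent: ActAgent) -> list[str]:
    log: list[str] = []
    done: list[str] = []
    # Outer loop: AppAgent chooses (or switches to) the next application.
    while (app := app_agent.select_app(request, open_apps, done)) is not None:
        step = 0
        # Inner loop: ActAgent acts inside that application until its part is finished.
        while True:
            action = act_agent.next_action(app, step)
            control_interaction(app, action, log)
            step += 1
            if action.finished:
                break
        done.append(app)
    return log
```

For example, with plans for Word and Outlook and `open_apps=["Word", "Outlook"]`, the request "Copy the text from Word and email it with Outlook" finishes Word's plan first and then switches to Outlook, mirroring the cross-application switch described for AppAgent.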

- 📅 2024-03-25: New Release for v0.0.1! Check out our exciting new features:

- You can now create help documents for each Windows application to make UFO an expert on that app. Check the README for more details!

- UFO now supports RAG from offline documents and online Bing search.

- You can save the task completion trajectory into UFO's memory for future reference, improving its future success rate!

- You can customize different GPT models for AppAgent and ActAgent. Text-only models (e.g., GPT-4) are now supported!

- 📅 2024-02-14: Our technical report is online!

- 📅 2024-02-10: UFO is released on GitHub🎈. Happy Chinese New Year🐉!

UFO sightings have garnered attention from various media outlets, including:

- Microsoft's UFO abducts traditional user interfaces for a smarter Windows experience

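- 🚀 UFO & GPT-4-V: Sit back and relax while GPT does it all🌌

- The AI PC - The Future of Computers? - Microsoft UFO

- Next-generation Windows system revealed: based on GPT-4V, with an agent orchestrating across applications, codenamed UFO

- Is the next-generation intelligent Windows coming? Microsoft launches its first Windows Agent, named UFO!

- Microsoft's open-source "UFO" is here! Trying out an AI agent that pilots Windows automatically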

- ...

These articles discuss UFO and its approach to AI-driven automation on Windows.

- First Windows Agent - UFO is the pioneering agent framework capable of translating user requests in natural language into actionable operations on Windows OS.

- RAG Enhanced - UFO is enhanced by Retrieval Augmented Generation (RAG) from heterogeneous sources, including offline help documents and online search engines, to improve its capabilities.

- Interactive Mode - UFO supports multiple sub-requests from users within the same session, enabling it to complete complex tasks seamlessly.

- Action Safeguard - UFO incorporates safeguards to prompt user confirmation for sensitive actions, enhancing security and preventing inadvertent operations.

- Easy Extension - UFO offers extensibility, allowing for the integration of additional functionalities and control types to tackle diverse and intricate tasks with ease.
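The Action Safeguard idea can be sketched as a confirmation gate placed in front of action execution. This is a hedged illustration only: the keyword list, the function names, and the injected `execute`/`confirm` callbacks are hypothetical and do not reflect how UFO actually decides which actions are sensitive.

```python
from typing import Callable

# Hypothetical keyword list; UFO's real criteria for "sensitive" actions may differ.
SENSITIVE_KEYWORDS = ("send", "delete", "close", "purchase")


def is_sensitive(operation: str, control_name: str) -> bool:
    """Flag an action as sensitive if its operation or target control mentions a risky keyword."""
    text = f"{operation} {control_name}".lower()
    return any(word in text for word in SENSITIVE_KEYWORDS)


def safeguarded_execute(
    operation: str,
    control_name: str,
    execute: Callable[[str, str], None],
    confirm: Callable[[str], bool],
) -> bool:
    """Execute the action unless it is sensitive and the user declines. Returns True if executed."""
    if is_sensitive(operation, control_name):
        if not confirm(f"Allow '{operation}' on '{control_name}'? [Y/N] "):
            return False  # user declined: skip the action
    execute(operation, control_name)
    return True
```

In an interactive session, `confirm` could be `lambda msg: input(msg).strip().upper() == "Y"`. Injecting the callbacks keeps the gate independent of how actions are actually performed.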

UFO requires Python >= 3.10 running on Windows 10 or later. It can be installed by running the following commands:

# [optional to create conda environment]

# conda create -n ufo python=3.10

# conda activate ufo

# clone the repository

git clone https://github.com/microsoft/UFO.git

cd UFO

# install the requirements

pip install -r requirements.txt

Before running UFO, you need to provide your LLM configurations individually for AppAgent and ActAgent. You can create your own config file ufo/config/config.yaml by copying ufo/config/config.yaml.template and editing the configuration for APP_AGENT and ACTION_AGENT as follows:

For the OpenAI API:

VISUAL_MODE: True # Whether to use the visual mode

API_TYPE: "openai" # The API type, "openai" for the OpenAI API.

API_BASE: "https://api.openai.com/v1/chat/completions" # The OpenAI API endpoint.

API_KEY: "sk-" # The OpenAI API key, which begins with sk-

API_VERSION: "2024-02-15-preview" # "2024-02-15-preview" by default

API_MODEL: "gpt-4-vision-preview" # The only OpenAI model that currently accepts visual input

For Azure OpenAI (AOAI):

VISUAL_MODE: True # Whether to use the visual mode

API_TYPE: "aoai" # The API type, "aoai" for Azure OpenAI.

API_BASE: "YOUR_ENDPOINT" # The AOAI API address. Format: https://{your-resource-name}.openai.azure.com

API_KEY: "YOUR_KEY" # The AOAI API key

API_VERSION: "2024-02-15-preview" # "2024-02-15-preview" by default

API_MODEL: "gpt-4-vision-preview" # The only OpenAI model that currently accepts visual input

API_DEPLOYMENT_ID: "YOUR_AOAI_DEPLOYMENT" # The deployment ID for the AOAI API
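Because a single stray character (such as a trailing comma after `True`) changes how YAML parses a value, it can help to sanity-check each agent's settings before starting UFO. The sketch below assumes the file has already been loaded into a dict (for example with PyYAML's `yaml.safe_load`) with one sub-dict per agent under APP_AGENT and ACTION_AGENT; `check_agent_config` and its checks are hypothetical and are not part of UFO.

```python
# Hypothetical validator, not part of UFO.
# Keys shown in the OpenAI and AOAI examples; AOAI additionally needs API_DEPLOYMENT_ID.
REQUIRED_KEYS = {
    "openai": {"VISUAL_MODE", "API_TYPE", "API_BASE", "API_KEY", "API_VERSION", "API_MODEL"},
    "aoai": {"VISUAL_MODE", "API_TYPE", "API_BASE", "API_KEY", "API_VERSION", "API_MODEL",
             "API_DEPLOYMENT_ID"},
}


def check_agent_config(name: str, cfg: dict) -> list[str]:
    """Return a list of problems in one agent's settings (an empty list means none were found)."""
    api_type = cfg.get("API_TYPE")
    if api_type not in REQUIRED_KEYS:
        return [f"{name}: unsupported API_TYPE {api_type!r}"]
    problems = [f"{name}: missing {key}" for key in sorted(REQUIRED_KEYS[api_type] - set(cfg))]
    # A stray character such as "True," makes YAML load a string instead of a boolean.
    if "VISUAL_MODE" in cfg and not isinstance(cfg["VISUAL_MODE"], bool):
        problems.append(f"{name}: VISUAL_MODE should be True or False, got {cfg['VISUAL_MODE']!r}")
    if api_type == "openai" and not str(cfg.get("API_KEY", "")).startswith("sk-"):
        problems.append(f"{name}: OpenAI API keys begin with sk-")
    return problems
```

With the loaded file in a dict named `config`, you could run `for name in ("APP_AGENT", "ACTION_AGENT"): print(check_agent_config(name, config[name]))`.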

You can utilize non-visual models (e.g., GPT-4) for each agent by configuring the following settings in the config.yaml file:

- VISUAL_MODE: False # To enable non-visual mode.

- Specify the appropriate API_MODEL (OpenAI) and API_DEPLOYMENT_ID (AOAI) for each agent.

Optionally, you can configure a backup LLM engine in the BACKUP_AGENT field, which is used if the primary engines fail during inference.
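The backup-engine behavior amounts to a simple fallback. Below is a minimal sketch, assuming each engine can be wrapped as a function from prompt to reply; the `Engine` type and `ask_with_backup` are hypothetical names, and UFO's actual fallback logic may differ.

```python
from typing import Callable, Optional

# Hypothetical engine interface: takes a prompt, returns the model's reply.
Engine = Callable[[str], str]


def ask_with_backup(prompt: str, primary: Engine, backup: Optional[Engine] = None) -> str:
    """Query the primary engine; if it raises, retry once with the backup engine (if any)."""
    try:
        return primary(prompt)
    except Exception:
        if backup is None:
            raise  # no BACKUP_AGENT configured: surface the original error
        return backup(prompt)
```

Re-raising when no backup is configured keeps the original error visible instead of masking it.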

If you want to enhance UFO's ability with external knowledge, you can optionally configure it with an external database for retrieval augmented generation (RAG) in the ufo/config/config.yaml file.

Before enabling this function, you need to create an offline indexer for your help documents. Please refer to the README to learn how to create an offline vector database for retrieval. You can enable this function by setting the following configuration:

## RAG Configuration for the offline docs

RAG_OFFLINE_DOCS: True # Whether to use the offline RAG.

RAG_OFFLINE_DOCS_RETRIEVED_TOPK: 1 # The topk for the offline retrieved documents

Adjust RAG_OFFLINE_DOCS_RETRIEVED_TOPK to optimize performance.
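The config comment suggests that RAG_OFFLINE_DOCS_RETRIEVED_TOPK sets how many of the best-matching help-document passages are kept. The sketch below illustrates that top-k selection under a loud simplification: UFO ranks passages with its offline vector database, whereas here a word-overlap score stands in for vector similarity. `tokenize` and `retrieve_topk` are hypothetical names.

```python
import heapq
import re

# Hypothetical helpers: word overlap stands in for the vector similarity of UFO's offline index.


def tokenize(text: str) -> set[str]:
    """Lower-case word set, used as a crude bag-of-words representation."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve_topk(query: str, docs: list[str], k: int) -> list[str]:
    """Return the k docs sharing the most words with the query (earlier docs win ties)."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc)), -i, doc) for i, doc in enumerate(docs)]
    return [doc for _, _, doc in heapq.nlargest(k, scored)]
```

A larger k gives the agent more context at the cost of a longer prompt, which is the trade-off the TOPK setting tunes.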

Enhance UFO's ability by utilizing the most up-to-date online search results! To use this function, you need to obtain a Bing search API key. Activate this feature by setting the following configuration:

## RAG Configuration for the Bing search

BING_API_KEY: "YOUR_BING_SEARCH_API_KEY" # The Bing search API key

RAG_ONLINE_SEARCH: True # Whether to use the online search for the RAG.

RAG_ONLINE_SEARCH_TOPK: 5 # The topk for the online search

RAG_ONLINE_RETRIEVED_TOPK: 1 # The topk for the online retrieved documents

Adjust RAG_ONLINE_SEARCH_TOPK and RAG_ONLINE_RETRIEVED_TOPK to get better performance.
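One plausible reading of the two online settings, which is an assumption rather than documented behavior, is a two-stage pipeline: request RAG_ONLINE_SEARCH_TOPK results from the search engine, then keep the RAG_ONLINE_RETRIEVED_TOPK most relevant ones. The sketch below shows that reading with a pluggable search function (which in practice would wrap the Bing search API) and a toy word-overlap relevance score. `online_rag` and `SearchFn` are hypothetical names.

```python
from typing import Callable

# Hypothetical interface: (query, count) -> list of result snippets, e.g. a wrapper around Bing search.
SearchFn = Callable[[str, int], list[str]]


def online_rag(query: str, search: SearchFn, search_topk: int = 5, retrieved_topk: int = 1) -> list[str]:
    """Stage 1: fetch up to `search_topk` hits. Stage 2: keep the `retrieved_topk` most relevant."""
    hits = search(query, search_topk)[:search_topk]
    query_words = set(query.lower().split())

    def relevance(snippet: str) -> int:
        # Toy relevance score: number of query words the snippet contains.
        return len(query_words & set(snippet.lower().split()))

    # sorted() is stable even with reverse=True, so ties keep the search engine's order.
    return sorted(hits, key=relevance, reverse=True)[:retrieved_topk]
```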

Save task completion trajectories into UFO's memory for future reference. This can improve its future success rates based on its previous experiences!

After completing a task, you'll see the following message:

Would you like to save the current conversation flow for future reference by the agent?

[Y] for yes, any other key for no.

Press Y to save it into its memory and enable memory retrieval via the following configuration:

## RAG Configuration for experience

RAG_EXPERIENCE: True # Whether to use the RAG from its self-experience.

RAG_EXPERIENCE_RETRIEVED_TOPK: 5 # The topk for the retrieved experiences

To start UFO, run the following on the Windows command line (CLI):

# assume you are in the cloned UFO folder

python -m ufo --task <your_task_name>

This will start the UFO process, and you can interact with it through the command line interface. If everything goes well, you will see the following message:

Welcome to use UFO🛸, A UI-focused Agent for Windows OS Interaction.

_ _ _____ ___

| | | || ___| / _ \

| | | || |_ | | | |

| |_| || _| | |_| |

\___/ |_| \___/

Please enter your request to be completed🛸:

- Before UFO executes your request, please make sure the targeted applications are active on the system.

- GPT-V takes screenshots of your desktop and application GUI as input. Please ensure that no sensitive or confidential information is visible or captured during execution. For further information, refer to DISCLAIMER.md.

You can find the screenshots taken and request & response logs in the following folder:

./ufo/logs/<your_task_name>/

You may use them to debug, replay, or analyze the agent output.
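For a quick overview of what a run produced before digging into individual files, a few lines of Python can tally the contents of the log folder. This sketch only assumes the ./ufo/logs/<your_task_name>/ location given above; it makes no assumption about file names and simply counts files by extension (for example, screenshots versus text logs). `summarize_logs` is a hypothetical helper, not part of UFO.

```python
from collections import Counter
from pathlib import Path

# Hypothetical helper, not part of UFO: counts files per extension in a task's log folder.


def summarize_logs(task_name: str, root: str = "./ufo/logs") -> Counter:
    """Count the files under <root>/<task_name>/ by (lower-cased) extension."""
    folder = Path(root) / task_name
    if not folder.is_dir():
        raise FileNotFoundError(f"No logs found for task {task_name!r} in {folder}")
    return Counter(p.suffix.lower() or "<none>" for p in folder.rglob("*") if p.is_file())
```

Running `print(summarize_logs("my_task"))` from the cloned UFO folder prints the per-extension counts for that task.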

To get help:

- ❔GitHub Issues (preferred)

- For other communications, please contact [email protected]

We present two demo videos in which UFO completes user requests on Windows OS. For more case studies, please consult our technical report.

In this example, we demonstrate how to use UFO to delete all notes in a PowerPoint presentation in just a few simple steps. Explore this functionality to enhance your productivity and work smarter, not harder!

ufo_delete_note.mp4

In this example, we will demonstrate how to utilize UFO to extract text from Word documents, describe an image, compose an email, and send it seamlessly. Enjoy the versatility and efficiency of cross-application experiences with UFO!

ufo_meeting_note_crossed_app_demo_new.mp4

For evaluation, please consult the WindowsBench benchmark provided in Section A of the Appendix of our technical report.

Here are some tips (and requirements) to help you complete your requests:

- Before UFO executes your request, ensure that the targeted application is active (it may be minimized).

- Occasionally, requests to GPT-V may trigger content safety measures. UFO will retry regardless, but adjusting the size or scale of the application window may help. We are actively working on this issue.

- Currently, UFO supports a limited set of applications and UI controls that are compatible with the Windows UI Automation API. Our future plans include extending support to the Win32 API to enhance its capabilities.

- The output of GPT-V may vary even for the same request. If your initial attempt is unsuccessful, consider trying again.

Our technical report is available on arXiv (arXiv:2402.07939). If you use UFO in your research, please cite our paper:

@article{ufo,

title={{UFO: A UI-Focused Agent for Windows OS Interaction}},

author={Zhang, Chaoyun and Li, Liqun and He, Shilin and Zhang, Xu and Qiao, Bo and Qin, Si and Ma, Minghua and Kang, Yu and Lin, Qingwei and Rajmohan, Saravan and Zhang, Dongmei and Zhang, Qi},

journal={arXiv preprint arXiv:2402.07939},

year={2024}

}

Our to-do list:

- RAG-enhanced UFO (done).

- Documentation.

- Support for a locally hosted GUI interaction model.

- Support for more controls using the Win32 API.

- Chatbox GUI for UFO.

You may also find TaskWeaver useful: a code-first LLM agent framework for seamlessly planning and executing data analytics tasks.

By choosing to run the provided code, you acknowledge and agree to the terms and conditions regarding functionality and data handling practices described in DISCLAIMER.md.

This project may contain trademarks or logos for projects, products, or services. Authorized use of Microsoft trademarks or logos is subject to and must follow Microsoft's Trademark & Brand Guidelines. Use of Microsoft trademarks or logos in modified versions of this project must not cause confusion or imply Microsoft sponsorship. Any use of third-party trademarks or logos is subject to those third parties' policies.