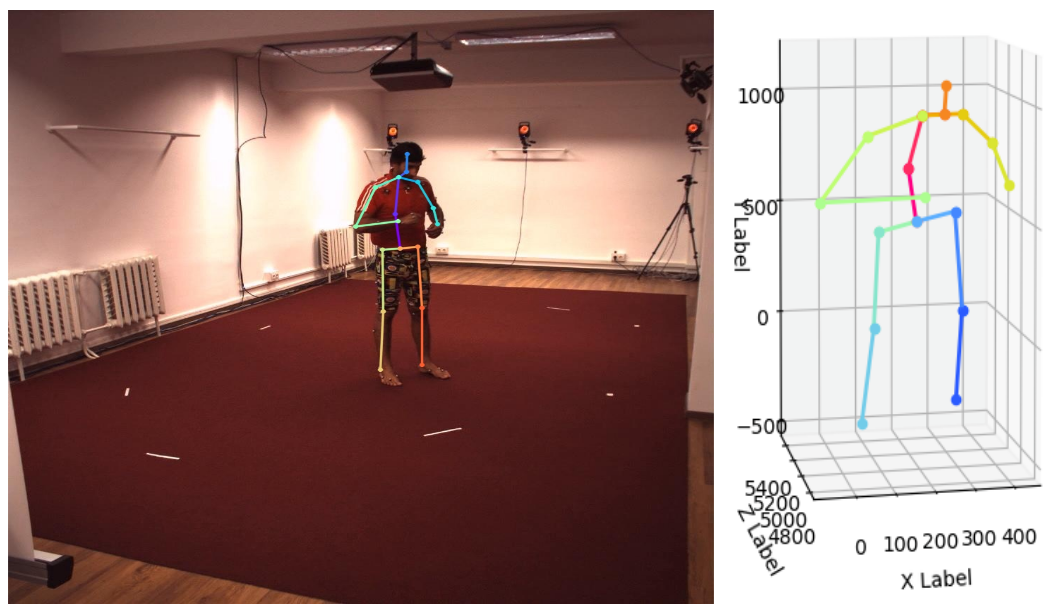

This repo is a PyTorch implementation of MSRA's Integral Human Pose Regression [1] (ECCV 2018) for 3D human pose estimation from a single RGB image.
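The method is built around the integral (soft-argmax) operation. Instead of taking the argmax of a heatmap, it normalizes the heatmap with a softmax and reads the joint location out as the expected coordinate. The snippet below is a minimal NumPy sketch of that operation for one joint's 3D heatmap. It assumes a (depth, height, width) layout, is written only for illustration rather than taken from the repo's PyTorch code, and `integral_3d` is a hypothetical name.

```python
import numpy as np


def integral_3d(heatmap):
    """Soft-argmax of one joint's 3D heatmap with shape (depth, height, width).

    Returns the expected (x, y, z) location in voxel units.
    """
    depth, height, width = heatmap.shape
    flat = heatmap.reshape(-1)
    prob = np.exp(flat - flat.max())  # subtract the max for a numerically stable softmax
    prob = (prob / prob.sum()).reshape(depth, height, width)

    # Marginalize the normalized heatmap onto each axis, then take the expectation.
    x = np.dot(prob.sum(axis=(0, 1)), np.arange(width))
    y = np.dot(prob.sum(axis=(0, 2)), np.arange(height))
    z = np.dot(prob.sum(axis=(1, 2)), np.arange(depth))
    return np.array([x, y, z])
```

For example, a heatmap of zeros with a single value of 50 at index `[2, 5, 7]` gives approximately `[7, 5, 2]`, while an all-zero heatmap gives the center of the volume. Because the output is an expectation rather than an argmax, it changes smoothly with the heatmap values, which is what lets the joint coordinates be trained with a regression loss.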

What this repo provides:

- PyTorch implementation of Integral Human Pose Regression.

- Flexible and simple code.

- Dataset preprocessing code for the MPII and Human3.6M datasets.

This code is tested on Ubuntu 16.04 with CUDA 9.0, cuDNN 7.1, and two NVIDIA 1080 Ti GPUs.

Python 3.6.5 with Anaconda 3 and PyTorch 1.0.0 is used for development.

The `${POSE_ROOT}` directory is organized as below.

    ${POSE_ROOT}
    |-- data
    |-- common
    |-- main
    |-- tool
    `-- output

- `data` contains data loading code and soft links to the images and annotations directories.
- `common` contains the core code of the 3D human pose estimation system.
- `main` contains high-level code for training and testing the network.
- `tool` contains the Human3.6M dataset preprocessing code.
- `output` contains logs, trained models, visualized outputs, and test results.

You need to follow the directory structure of `data` as below.

    ${POSE_ROOT}
    |-- data
    |   |-- MPII
    |   |   |-- annotations
    |   |   |   |-- train.json
    |   |   |   `-- test.json
    |   |   `-- images
    |   |       |-- 000001163.jpg
    |   |       |-- 000003072.jpg
    |   `-- Human36M
    |       `-- data
    |           |-- s_01_act_02_subact_01_ca_01
    |           |-- s_01_act_02_subact_01_ca_02

- In `tool`, run `preprocess_h36m.m` to preprocess the Human3.6M dataset. It converts the videos to images and saves metadata for each frame. `data` in `Human36M` contains the preprocessed data.
- For MPII, use the dataset preprocessing code in my TF-SimpleHumanPose git repo.
- You can change the default directory structure of `data` by modifying `$DATASET_NAME.py` in each dataset folder.
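The preprocessed Human3.6M folders in the tree above encode the subject, action, subaction, and camera in their names. If you need to select sequences by subject (for example, to build a train/test split), a small parser like the hypothetical `parse_sequence_dir` below can help. It is not part of the repo and assumes every folder follows the `s_XX_act_XX_subact_XX_ca_XX` pattern seen in the two example names.

```python
import re
from typing import NamedTuple


class H36MSequence(NamedTuple):
    subject: int
    action: int
    subaction: int
    camera: int


# Assumed naming pattern, inferred from folder names such as s_01_act_02_subact_01_ca_01.
_SEQ_RE = re.compile(r"^s_(\d+)_act_(\d+)_subact_(\d+)_ca_(\d+)$")


def parse_sequence_dir(name):
    """Split a preprocessed Human3.6M folder name into its integer fields."""
    match = _SEQ_RE.match(name)
    if match is None:
        raise ValueError(f"unexpected Human3.6M folder name: {name!r}")
    return H36MSequence(*(int(g) for g in match.groups()))


def select_subjects(names, subjects):
    """Keep the folder names whose subject id is in `subjects`."""
    return [n for n in names if parse_sequence_dir(n).subject in subjects]
```

For example, `parse_sequence_dir("s_01_act_02_subact_01_ca_02")` returns `H36MSequence(subject=1, action=2, subaction=1, camera=2)`, and unexpected names raise `ValueError` instead of being silently skipped.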

You need to follow the directory structure of the output folder as below.

    ${POSE_ROOT}
    `-- output
        |-- log
        |-- model_dump
        |-- result
        `-- vis

- Creating the `output` folder as a soft link rather than a regular folder is recommended, because it can take up a large amount of storage.
- The `log` folder contains the training log file.
- The `model_dump` folder contains the saved checkpoint for each epoch.
- The `result` folder contains the final estimation files generated in the testing stage.
- The `vis` folder contains visualized results.
- You can change the default directory structure of `output` by modifying `main/config.py`.

- In `main/config.py`, you can change model settings such as the dataset to use, the network backbone, and the input size.
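Following the recommendation above to create `output` as a soft link, the sketch below creates the link and the `log`, `model_dump`, `result`, and `vis` subfolders in one step. The function name `make_output_dir` and its arguments are hypothetical; on the command line, the link itself is just `ln -s <large_disk_dir> ${POSE_ROOT}/output`.

```python
import os
import tempfile


def make_output_dir(pose_root, storage_dir):
    """Link ${POSE_ROOT}/output to storage_dir and create the expected subfolders."""
    storage_dir = os.path.abspath(storage_dir)
    os.makedirs(storage_dir, exist_ok=True)
    link = os.path.join(pose_root, "output")
    if not os.path.lexists(link):  # leave an existing link or folder untouched
        os.symlink(storage_dir, link, target_is_directory=True)
    for sub in ("log", "model_dump", "result", "vis"):
        # Created through the link, so the data actually lands in storage_dir.
        os.makedirs(os.path.join(link, sub), exist_ok=True)
    return link


if __name__ == "__main__":
    # Demo in throwaway directories; in practice storage_dir sits on a large disk.
    root, storage = tempfile.mkdtemp(), tempfile.mkdtemp()
    out = make_output_dir(root, storage)
    print(os.path.islink(out), sorted(os.listdir(out)))
```

The function is safe to call repeatedly: it only creates what is missing.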

In the `main` folder, set the training set in `config.py`. Note that `trainset` must be a list, and its 0th dataset is the reference dataset.

In the `main` folder, run `python train.py --gpu 0-1` to train the network on GPUs 0 and 1.

To continue an experiment, run `python train.py --gpu 0-1 --continue`. The GPUs can also be given as `--gpu 0,1` instead of `--gpu 0-1`.
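Both `--gpu 0-1` and `--gpu 0,1` name the same two GPUs. The sketch below shows one way such a value, together with the `--continue` switch, could be parsed. `parse_gpu_ids` and `build_parser` are hypothetical helpers and not necessarily how `train.py` handles its arguments. Note that `continue` is a Python keyword, so the flag has to be stored under a different attribute name.

```python
import argparse
import os


def parse_gpu_ids(spec):
    """Expand '0-1' (inclusive range) or '0,1' (explicit list) into [0, 1]."""
    spec = spec.strip()
    if "-" in spec:
        start, end = (int(s) for s in spec.split("-"))
        if end < start:
            raise ValueError(f"empty GPU range: {spec!r}")
        return list(range(start, end + 1))
    return [int(s) for s in spec.split(",") if s.strip()]


def build_parser():
    parser = argparse.ArgumentParser()
    parser.add_argument("--gpu", type=str, required=True, help="e.g. 0-1 or 0,1")
    # `args.continue` would be a syntax error, so store the flag as continue_train.
    parser.add_argument("--continue", dest="continue_train", action="store_true")
    return parser


if __name__ == "__main__":
    args = build_parser().parse_args(["--gpu", "0-1", "--continue"])
    gpu_ids = parse_gpu_ids(args.gpu)
    # Restrict the process to the requested GPUs before CUDA is initialized.
    os.environ["CUDA_VISIBLE_DEVICES"] = ",".join(str(i) for i in gpu_ids)
    print(gpu_ids, args.continue_train)
```

Accepting both spellings costs only one branch, and a range is convenient when many GPUs are used (`0-3` expands to `[0, 1, 2, 3]`).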

In the `main` folder, set the testing set in `config.py`. Note that `testset` must be a `str`.
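The type rules for `trainset` (a list whose 0th entry is the reference dataset) and `testset` (a single string) can be made explicit with a check like the following. This is a hypothetical stand-in, not the repo's `config.py`: the class layout, the `validate` method, and the dataset names `Human36M` and `MPII` (taken from the `data` folder layout) are assumptions.

```python
class Config:
    """Hypothetical stand-in for the dataset settings in main/config.py."""

    # Must be a list; trainset[0] is treated as the reference dataset.
    trainset = ["Human36M", "MPII"]
    # Must be a single dataset name (str), not a list.
    testset = "Human36M"

    @classmethod
    def validate(cls):
        """Raise TypeError on a malformed setting; return the reference dataset."""
        if not isinstance(cls.trainset, list) or not cls.trainset:
            raise TypeError("trainset must be a non-empty list of dataset names")
        if not isinstance(cls.testset, str):
            raise TypeError("testset must be a str")
        return cls.trainset[0]
```

A common mistake this catches is writing `trainset = 'Human36M'` (a string) where a one-element list `['Human36M']` is required.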

Place the trained model in `output/model_dump/`.

In the `main` folder, run `python test.py --gpu 0-1 --test_epoch 16` to test the network on GPUs 0 and 1 with the model saved at the 16th epoch. The GPUs can also be given as `--gpu 0,1` instead of `--gpu 0-1`.
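`--test_epoch 16` only works if a checkpoint for that epoch is actually in `output/model_dump/`. A small pre-flight check like the one below fails early with a clear message and lists which epochs are available. The file-name scheme `snapshot_<epoch>.pth.tar` is an assumption made for this sketch; adjust `CKPT_PREFIX` and `CKPT_SUFFIX` to whatever names the training script writes.

```python
import os
import tempfile

# Hypothetical checkpoint naming scheme: snapshot_<epoch>.pth.tar
CKPT_PREFIX, CKPT_SUFFIX = "snapshot_", ".pth.tar"


def available_epochs(model_dump_dir):
    """Return the sorted epoch numbers that have a checkpoint file."""
    epochs = []
    for name in os.listdir(model_dump_dir):
        if name.startswith(CKPT_PREFIX) and name.endswith(CKPT_SUFFIX):
            core = name[len(CKPT_PREFIX):-len(CKPT_SUFFIX)]
            if core.isdigit():
                epochs.append(int(core))
    return sorted(epochs)


def checkpoint_path(model_dump_dir, epoch):
    """Return the checkpoint path for `epoch`, or raise listing the epochs that exist."""
    path = os.path.join(model_dump_dir, f"{CKPT_PREFIX}{epoch}{CKPT_SUFFIX}")
    if not os.path.isfile(path):
        raise FileNotFoundError(
            f"no checkpoint for epoch {epoch} in {model_dump_dir}; "
            f"available epochs: {available_epochs(model_dump_dir)}"
        )
    return path


if __name__ == "__main__":
    # Demo with empty placeholder files in a throwaway directory.
    demo_dir = tempfile.mkdtemp()
    for ep in (15, 16):
        open(os.path.join(demo_dir, f"{CKPT_PREFIX}{ep}{CKPT_SUFFIX}"), "w").close()
    print(available_epochs(demo_dir))
    print(checkpoint_path(demo_dir, 16))
```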

Here I report the performance of the model from this repo and from the original paper. I also provide pre-trained 3D human pose estimation models.

The tables below report PA MPJPE and MPJPE (in mm) on the Human3.6M dataset. The provided `config.py` file was used to obtain these results. They are currently slightly worse than those of the original paper; I am trying to match the paper's performance and think the training schedule needs to be changed.

The paper's PA MPJPE values are from protocol 1; note, however, that protocol 2 uses a smaller training set.

PA MPJPE:

| Methods | Dir. | Dis. | Eat | Gre. | Phon. | Pose | Pur. | Sit. | Sit D. | Smo. | Phot. | Wait | Walk | Walk D. | Walk P. | Avg |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| my repo | 39.0 | 38.6 | 44.1 | 42.5 | 40.6 | 35.3 | 38.2 | 49.9 | 59.4 | 41.0 | 46.1 | 37.6 | 30.3 | 40.8 | 35.5 | 41.5 |
| original paper | 36.9 | 36.2 | 40.6 | 40.4 | 41.9 | 34.9 | 35.7 | 50.1 | 59.4 | 40.4 | 44.9 | 39.0 | 30.8 | 39.8 | 36.7 | 40.6 |

MPJPE:

| Methods | Dir. | Dis. | Eat | Gre. | Phon. | Pose | Pur. | Sit. | Sit D. | Smo. | Phot. | Wait | Walk | Walk D. | Walk P. | Avg |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| my repo | 50.8 | 52.3 | 54.8 | 57.9 | 52.8 | 47.0 | 52.1 | 62.0 | 73.7 | 52.6 | 58.3 | 50.4 | 40.9 | 54.1 | 45.1 | 53.9 |
| original paper | 47.5 | 47.7 | 49.5 | 50.2 | 51.4 | 43.8 | 46.4 | 58.9 | 65.7 | 49.4 | 55.8 | 47.8 | 38.9 | 49.0 | 43.8 | 49.6 |
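For reference, MPJPE is the mean Euclidean distance between predicted and ground-truth joints, and PA MPJPE is the same error after the prediction has been aligned to the ground truth with a similarity (Procrustes) transform: scale, rotation, and translation. The NumPy sketch below is a common way to compute both for a single pose. It is not this repo's evaluation code, and details such as whether poses are first made root-relative are left to the caller.

```python
import numpy as np


def mpjpe(pred, gt):
    """Mean per-joint position error for (J, 3) arrays in the same units."""
    return np.linalg.norm(pred - gt, axis=1).mean()


def procrustes_align(pred, gt):
    """Map pred onto gt with the least-squares scale, rotation, and translation."""
    mu_p, mu_g = pred.mean(axis=0), gt.mean(axis=0)
    p, g = pred - mu_p, gt - mu_g
    u, s, vt = np.linalg.svd(p.T @ g)
    # Flip the last singular direction if needed so the result is a rotation, not a reflection.
    d = np.sign(np.linalg.det(u @ vt))
    fix = np.array([1.0, 1.0, d])
    rot = (u * fix) @ vt
    scale = (s * fix).sum() / (p ** 2).sum()
    return scale * p @ rot + mu_g


def pa_mpjpe(pred, gt):
    """MPJPE after Procrustes alignment of the prediction to the ground truth."""
    return mpjpe(procrustes_align(pred, gt), gt)
```

A prediction that differs from the ground truth only by scale, rotation, and translation therefore has a PA MPJPE of (numerically) zero, while its MPJPE can be large. This is why PA MPJPE values are consistently lower than MPJPE values in the tables above.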

- Pre-trained model for protocol 2 [model]

If you get an extremely large error, disable cuDNN for batch normalization. This typically occurs with older versions of PyTorch.

    # PYTORCH=/path/to/pytorch

    # for pytorch v0.4.0
    sed -i "1194s/torch\.backends\.cudnn\.enabled/False/g" ${PYTORCH}/torch/nn/functional.py

    # for pytorch v0.4.1
    sed -i "1254s/torch\.backends\.cudnn\.enabled/False/g" ${PYTORCH}/torch/nn/functional.py
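The `sed` commands above replace `torch.backends.cudnn.enabled` with `False` on one hard-coded line of `functional.py`, so they only do the right thing for the exact PyTorch version they were written for. The hypothetical helper below performs the same substitution in Python, but first checks that the target line really contains that expression and keeps a backup of the original file. Treat it as a sketch: editing an installed library is a workaround, and setting `torch.backends.cudnn.enabled = False` in your own script is a coarser alternative that disables cuDNN for all layers, not only batch normalization.

```python
import tempfile
from pathlib import Path

TARGET = "torch.backends.cudnn.enabled"


def disable_cudnn_on_line(path, lineno, backup_suffix=".bak"):
    r"""Python counterpart of
    sed -i "<lineno>s/torch\.backends\.cudnn\.enabled/False/g" <path>
    that refuses to edit a line which does not contain the target expression."""
    path = Path(path)
    text = path.read_text()
    lines = text.splitlines(keepends=True)
    idx = lineno - 1  # sed counts lines from 1
    if not 0 <= idx < len(lines) or TARGET not in lines[idx]:
        raise ValueError(f"line {lineno} of {path} does not contain {TARGET!r}")
    # Keep an untouched copy next to the original file.
    path.with_name(path.name + backup_suffix).write_text(text)
    lines[idx] = lines[idx].replace(TARGET, "False")  # like sed's /g: every occurrence
    path.write_text("".join(lines))


if __name__ == "__main__":
    # Demo on a throwaway file that mimics the relevant lines of functional.py.
    demo = Path(tempfile.mkdtemp()) / "functional.py"
    demo.write_text("return torch.batch_norm(\n    input, eps, torch.backends.cudnn.enabled\n)\n")
    disable_cudnn_on_line(demo, 2)
    print(demo.read_text())
```

Usage mirrors the v0.4.0 command: `disable_cudnn_on_line("<PYTORCH>/torch/nn/functional.py", 1194)`. If the line number does not match your installed version, the call raises instead of editing the wrong line.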

This repo is largely modified from the original PyTorch repo of IntegralHumanPose.

[1] Xiao Sun, Bin Xiao, Shuang Liang, and Yichen Wei. "Integral Human Pose Regression." ECCV 2018.