Barbershop: GAN-based Image Compositing using Segmentation Masks

Peihao Zhu, Rameen Abdal, John Femiani, Peter Wonka

arXiv | BibTeX | Project Page | Video

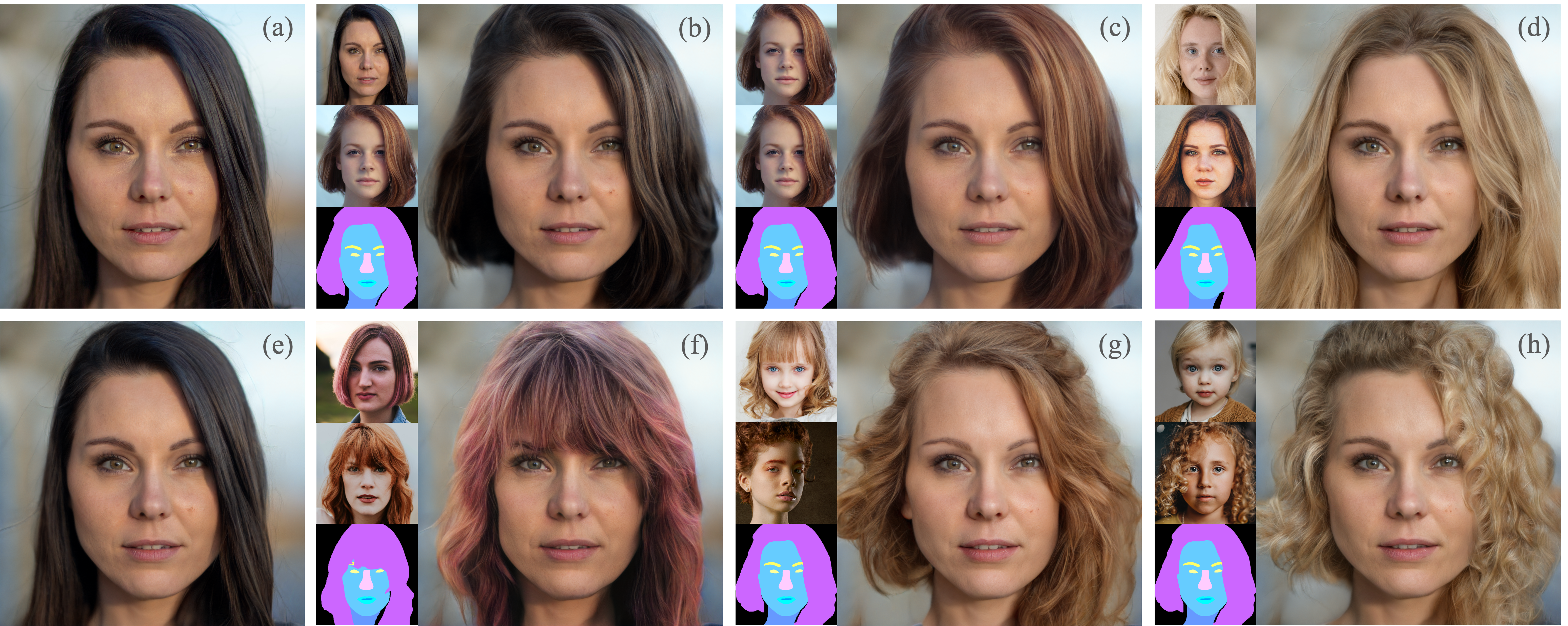

Abstract: Seamlessly blending features from multiple images is extremely challenging because of complex relationships in lighting, geometry, and partial occlusion which cause coupling between different parts of the image. Even though recent work on GANs enables synthesis of realistic hair or faces, it remains difficult to combine them into a single, coherent, and plausible image rather than a disjointed set of image patches. We present a novel solution to image blending, particularly for the problem of hairstyle transfer, based on GAN-inversion. We propose a novel latent space for image blending which is better at preserving detail and encoding spatial information, and propose a new GAN-embedding algorithm which is able to slightly modify images to conform to a common segmentation mask. Our novel representation enables the transfer of the visual properties from multiple reference images including specific details such as moles and wrinkles, and because we do image blending in a latent space we are able to synthesize images that are coherent. Our approach avoids blending artifacts present in other approaches and finds a globally consistent image. Our results demonstrate a significant improvement over the current state of the art in a user study, with users preferring our blending solution over 95 percent of the time.

Extending Barbershop code to apply general swapping and video-to-video translation

- Clone the repository:

git clone https://github.com/jjungkang2/Barbershop.git

cd Barbershop

- Create and switch to a new branch:

git checkout -b <branchname>

Please name the branch 'project name/your name', e.g. 'general_swap/jungeun', 'data_parallel/sangyoon'.

- Dependencies:

Please use Anaconda to set up the environment. All dependencies are listed in the environment/environment.yml file. Running conda env create --file environment/environment.yml creates a conda env named Barbershop.

Please download the II2S images and put them in the input/face folder.

Please download the afhqwild.pt, afhqdog.pt, afhqcat.pt, ffhq.pt, metfaces.pt, seg.pth and put them in the pretrained_models folder.
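Before running anything, it can help to confirm that every checkpoint is in place. The following is a minimal sketch, not part of the repository: the file names are the ones listed above, while the helper name `missing_models` and the assumption that the folder sits at the repository root are illustrative.

```python
from pathlib import Path

# File names taken from the list above. The folder location (relative to the
# repository root) is an assumption made for this sketch.
REQUIRED_MODELS = [
    "afhqwild.pt",
    "afhqdog.pt",
    "afhqcat.pt",
    "ffhq.pt",
    "metfaces.pt",
    "seg.pth",
]


def missing_models(model_dir="pretrained_models"):
    """Return the required file names that are not present in model_dir."""
    root = Path(model_dir)
    return [name for name in REQUIRED_MODELS if not (root / name).is_file()]
```

Calling `missing_models()` from the repository root returns an empty list once all six files have been downloaded.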

Preprocess your own images. Please put the raw images in the unprocessed folder.

python align_face.py

Produce realistic results:

python main.py --im_path1 90.png --im_path2 15.png --im_path3 117.png --sign realistic --smooth 5

Produce results faithful to the masks:

python main.py --im_path1 90.png --im_path2 15.png --im_path3 117.png --sign fidelity --smooth 5

You can choose any of skin, eyes, eyebrow, nose, mouth, and hair as the parts to swap. If nothing is selected, only a hair swap is performed.

Swap hair, eyes, and eyebrows:

python main.py --im_path1 90.png --im_path2 15.png --im_path3 117.png --sign realistic --smooth 5 --swap_parts hair eyes eyebrow
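The behaviour of `--swap_parts` described above (a fixed set of part names, with hair as the fallback) can be sketched with `argparse`. This is an illustration under assumptions: how main.py actually declares the flag is not shown here, and the helper names `build_parser` and `parse_parts` are hypothetical.

```python
import argparse

# Part names come from the README. The parser setup below is an assumption
# for illustration and may differ from what main.py really does.
PARTS = ["skin", "eyes", "eyebrow", "nose", "mouth", "hair"]


def build_parser():
    parser = argparse.ArgumentParser(description="swap_parts sketch")
    parser.add_argument(
        "--swap_parts",
        nargs="*",          # zero or more part names after the flag
        choices=PARTS,      # anything else is rejected by argparse
        default=["hair"],   # flag omitted: hair swap only
        help="facial parts to swap",
    )
    return parser


def parse_parts(argv):
    """Parse argv and return the list of parts to swap."""
    args = build_parser().parse_args(argv)
    # "--swap_parts" with no values yields an empty list, so fall back to hair.
    return args.swap_parts or ["hair"]
```

For example, `parse_parts(["--swap_parts", "hair", "eyes", "eyebrow"])` returns the three names in the order given, and an unknown part name makes argparse exit with a usage error.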

The first path must always be a video; the second and third paths can each be an image or a video.

To reduce running time, set the second and third paths to the same file.
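The input rule for video mode can be expressed as a small check that runs before any heavy processing. This is a sketch only: the extension sets and the function names `kind` and `check_inputs` are assumptions for illustration, and the actual scripts may accept other formats or detect file types differently.

```python
from pathlib import Path

# Extension sets are assumptions for this sketch, not taken from the scripts.
VIDEO_EXTS = {".mp4", ".avi", ".mov"}
IMAGE_EXTS = {".png", ".jpg", ".jpeg"}


def kind(path):
    """Classify a path as 'video' or 'image' by its file extension."""
    ext = Path(path).suffix.lower()
    if ext in VIDEO_EXTS:
        return "video"
    if ext in IMAGE_EXTS:
        return "image"
    raise ValueError(f"unsupported file type: {path}")


def check_inputs(path1, path2, path3):
    """Require a video as the first path; allow image or video for the rest."""
    kinds = (kind(path1), kind(path2), kind(path3))
    if kinds[0] != "video":
        raise ValueError("the first path must be a video")
    return kinds
```

With the sample names used in this README, `check_inputs("sample_video_1.mp4", "15.png", "117.png")` passes, while putting an image first raises a `ValueError`.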

Run the following first. It takes the input video(s), saves every frame, and then runs align_face on all frames:

python preprocess_video.py --vid_path1 sample_video_1.mp4 --vid_path2 15.png --vid_path3 117.png

python preprocess_video.py --vid_path1 sample_video_1.mp4 --vid_path2 sample_video_2.mp4 --vid_path3 sample_video_3.mp4

To perform the swap, run the following (e.g. eyes and eyebrow swap):

python main.py --vid_path1 sample_video_1.mp4 --vid_path2 15.png --vid_path3 117.png --sign realistic --smooth 5 --swap_parts eyes eyebrow

python main.py --vid_path1 sample_video_1.mp4 --vid_path2 sample_video_2.mp4 --vid_path3 sample_video_3.mp4 --sign realistic --smooth 5 --swap_parts eyes eyebrow

image_to_video.py is a script for reassembling the result frames into a single video afterwards (it needs to be adjusted to match the names of the saved files).
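One detail that tends to matter when reassembling frames is their order: a plain alphabetical sort puts `frame_10.png` before `frame_2.png`. The sketch below shows a natural sort that avoids this. It is not the code in image_to_video.py, and the frame names in the example are hypothetical.

```python
import re


def natural_key(name):
    """Split a file name into text and integer chunks for numeric-aware sorting."""
    return [
        int(chunk) if chunk.isdigit() else chunk.lower()
        for chunk in re.split(r"(\d+)", name)
    ]


def order_frames(names):
    """Return frame file names in numeric order rather than alphabetical order."""
    return sorted(names, key=natural_key)


# Hypothetical frame names, used only to illustrate the ordering.
frames = ["frame_10.png", "frame_2.png", "frame_1.png"]
print(order_frames(frames))
```

The ordered list can then be passed to whichever video writer the script uses.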

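If you need to work with both GitHub and a server, we recommend the following setup.

- Write code and manage GitHub on your local machine

- Use sftp to transfer the local code to the server

- To download the pretrained models straight to the server you would normally use a library such as gdown, but the files were too large for that to work. I recommend downloading them locally and uploading them to the server with scp. I use Windows, so I used WinSCP.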
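The scp upload step can be scripted so it is easy to repeat. This is a minimal sketch that only builds the command: the host `user@my-server` and the remote path are placeholders, and the helper name `scp_upload_command` is hypothetical.

```python
import shlex


def scp_upload_command(local_dir, remote, remote_dir):
    """Build an scp command that recursively copies local_dir to the server."""
    return ["scp", "-r", local_dir, f"{remote}:{remote_dir}"]


# Placeholder host and path; replace them with your own server details.
cmd = scp_upload_command("pretrained_models", "user@my-server", "~/Barbershop/")
print(shlex.join(cmd))
# To actually run it: subprocess.run(cmd, check=True)
```

Building the command as a list keeps paths with spaces intact when it is later passed to `subprocess.run`.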

You can see our project report here.