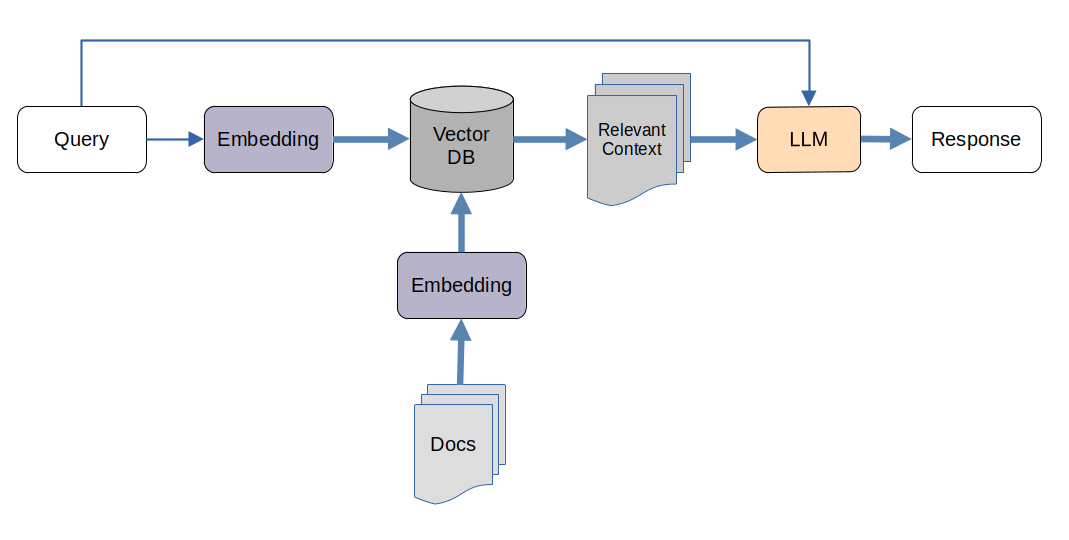

Implement a Retrieval Augmented Generation (RAG) system to query and retrieve information from your local documents efficiently.

Gain practical experience with embeddings, vector databases, and local Large Language Models (LLMs).
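A RAG pipeline works like this: split documents into chunks, embed each chunk, store the vectors, retrieve the chunks most similar to the question, and pass them to the LLM as context. The sketch below is illustrative only and is not this project's implementation. It replaces a real embedding model with a toy bag-of-words vector, uses a hypothetical in-memory list (`InMemoryVectorStore`) instead of a vector database, and stops after building the prompt rather than calling an LLM.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': term counts of lowercase words (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Hypothetical minimal vector store: keeps (vector, chunk) pairs in a Python list."""

    def __init__(self) -> None:
        self.items: list[tuple[Counter, str]] = []

    def add(self, chunk: str) -> None:
        self.items.append((embed(chunk), chunk))

    def search(self, query: str, k: int = 2) -> list[str]:
        """Return the k chunks most similar to the query."""
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [chunk for _, chunk in ranked[:k]]


def build_prompt(question: str, context: list[str]) -> str:
    """Put the retrieved chunks in front of the question, as a RAG prompt would."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only the context below.\n\nContext:\n{joined}\n\nQuestion: {question}\nAnswer:"


store = InMemoryVectorStore()
for chunk in [
    "Ollama serves local language models over HTTP.",
    "Black is an opinionated Python code formatter.",
    "A virtual environment isolates Python packages.",
]:
    store.add(chunk)

question = "Which tool serves language models locally?"
hits = store.search(question, k=1)
print(build_prompt(question, hits))
```

In a real setup, `embed` would call an embedding model and the store would be a vector database. The prompt would then go to the local LLM.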

Install it step by step (or see Auto Installation below for a single command):

$ python3 -m venv myvenv

$ source myvenv/bin/activate

$ pip3 install -r requirements.txt

Install Ollama to run large language models locally:

$ curl -fsSL https://ollama.ai/install.sh | sh

Or follow the installation instructions for your operating system: Install Ollama

Choose and download an LLM model. For example:

$ ollama pull phi3

Alternatively (Auto Installation), run the following installation script in bash:

$ bin/install.sh

To use the graphical user interface (GUI) version, run:

(myvenv)$ python3 local-rag-gui.py

Then open the exposed link in your browser.

Or, run the following for the command-line version:

(myvenv)$ python3 local-rag-cli.py

If the LLM server is not running, start it in a different terminal with:

$ ollama serve
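You can check from Python whether the server is up before launching the scripts. The sketch below uses only the standard library. It assumes Ollama listens on its default address `http://localhost:11434` and exposes its `/api/generate` REST endpoint. The helpers `is_ollama_running`, `build_generate_request`, and `generate` are hypothetical and not part of this project, which may talk to Ollama differently. The option values mirror the Top k, Top p, and Temp defaults documented in this README.

```python
import json
import urllib.request

# Assumption: Ollama's default local address; adjust if your server runs elsewhere.
OLLAMA_URL = "http://localhost:11434"


def is_ollama_running(base_url: str = OLLAMA_URL, timeout: float = 2.0) -> bool:
    """Return True if an HTTP server answers at base_url with status 200."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as resp:
            return resp.status == 200
    except OSError:  # URLError, refused connections and timeouts all subclass OSError
        return False


def build_generate_request(
    prompt: str,
    model: str = "phi3",
    top_k: int = 5,
    top_p: float = 0.9,
    temperature: float = 0.5,
) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {
        "model": model,
        "prompt": prompt,
        "stream": False,  # ask for a single JSON reply instead of a stream of chunks
        "options": {"top_k": top_k, "top_p": top_p, "temperature": temperature},
    }
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, **options) -> str:
    """Send the prompt and return the generated text from the 'response' field."""
    with urllib.request.urlopen(build_generate_request(prompt, **options), timeout=300) as resp:
        return json.loads(resp.read())["response"]
```

Once `ollama serve` is running and `phi3` has been pulled, `generate("Summarize RAG in one sentence.")` should return the model's answer as a string. While the server is down, `is_ollama_running()` returns `False`.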

The following parameters control how the model samples its answers:

- Top k: Ranks the candidate output tokens in descending order of probability, keeps the first k to form a new distribution, and samples the output from it. Higher values result in more diverse answers; lower values produce more conservative answers. ([0, 10]. Default: 5)

- Top p: Works together with Top k, but instead of keeping a fixed number of tokens, it keeps the smallest set of most probable tokens whose cumulative probability reaches the given value. Higher values produce more varied text; lower values lead to more focused and conservative answers. ([0.1, 1]. Default: 0.9)

- Temp: Controls the “randomness” of the answers by scaling the model's output scores (logits) before they are turned into probabilities. Higher temperatures flatten the distribution and make answers more creative; lower temperatures make them more focused and deterministic. ([0.1, 1]. Default: 0.5)
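The three parameters can be illustrated with a small sampler over a toy set of token scores. This is a sketch, not Ollama's implementation. It assumes temperature is applied before Top k and Top p, which is the order some libraries use; other runtimes may order the steps differently. The function names `filter_distribution` and `sample_next_token` are hypothetical.

```python
import math
import random
from typing import Optional


def filter_distribution(
    logits: dict[str, float], top_k: int = 5, top_p: float = 0.9, temperature: float = 0.5
) -> list[tuple[str, float]]:
    """Return the (token, probability) pairs left after Temp, Top k and Top p."""
    # Temp: divide the logits by the temperature before the softmax.
    scaled = {tok: value / temperature for tok, value in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    weights = {tok: math.exp(v - peak) for tok, v in scaled.items()}
    # Top k: rank tokens by weight (descending) and keep the k most probable ones.
    ranked = sorted(weights.items(), key=lambda item: item[1], reverse=True)[:top_k]
    total = sum(w for _, w in ranked)
    ranked = [(tok, w / total) for tok, w in ranked]  # renormalize over the k survivors
    # Top p: keep the smallest prefix whose cumulative probability reaches top_p.
    kept, cumulative = [], 0.0
    for tok, p in ranked:
        kept.append((tok, p))
        cumulative += p
        if cumulative >= top_p:
            break
    norm = sum(p for _, p in kept)
    return [(tok, p / norm) for tok, p in kept]  # final renormalization


def sample_next_token(
    logits: dict[str, float],
    top_k: int = 5,
    top_p: float = 0.9,
    temperature: float = 0.5,
    rng: Optional[random.Random] = None,
) -> str:
    """Draw one token from the filtered distribution."""
    rng = rng or random.Random()
    candidates = filter_distribution(logits, top_k, top_p, temperature)
    tokens = [tok for tok, _ in candidates]
    probs = [p for _, p in candidates]
    return rng.choices(tokens, weights=probs, k=1)[0]
```

With `top_k=1` the sampler always returns the most probable token. Raising `top_k` or `top_p` lets less probable tokens into the candidate set, and raising `temperature` makes them more likely to be chosen.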

Before committing, format the code with Black by running the following in the project folder:

$ black -t py311 -S -l 99 .

You can install Black with:

$ python3 -m pip install black