Training models like ChatGPT and Llama costs approximately $300 million, requiring billion-dollar datacenters. This expense is a significant barrier for many researchers. Inspired by initiatives like Folding at Home and Learning at Home, we envision a decentralized network that democratizes AI research and development through a shared mission and purpose.

During the global pandemic, Folding at Home saw such an influx of participants that its computing power exceeded the world's largest supercomputers. Recognizing this potential, we are developing technology to enable the decentralized training of large models, benefiting all participants. We believe that the network's value will grow as more participants join and as we continue to release new models and technologies.

Please refer to the guide on how to run a miner to get started. For more technical details, refer to the white paper.

In this subnet, miners are rewarded for completing assigned tasks, which involve machine learning computations instead of solving cryptographic puzzles like in Bitcoin mining. The results are validated to ensure correctness and maintain network integrity. Rewards are distributed based on each miner's share of the total computation performed, meaning the more computation a miner completes, the more rewards they receive.
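The proportional rule described above can be illustrated with a minimal sketch. The function name, the miner names, and the unit of "computation" are assumptions made for illustration only; the subnet's actual accounting may differ.

```python
def distribute_rewards(computation, total_reward):
    """Split total_reward in proportion to each miner's validated computation.

    `computation` maps a (hypothetical) miner name to the amount of validated
    work it completed, in arbitrary units.
    """
    total = sum(computation.values())
    if total == 0:
        # No validated work this round: nobody is rewarded.
        return {miner: 0.0 for miner in computation}
    return {
        miner: total_reward * units / total
        for miner, units in computation.items()
    }


# Illustrative numbers only.
validated_units = {"miner_a": 600, "miner_b": 300, "miner_c": 100}
rewards = distribute_rewards(validated_units, total_reward=10.0)
print(rewards)
```

In this sketch a miner that completes 60% of the validated computation receives 60% of the reward for the round.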

Since the validation process is entirely objective, Yuma Consensus is irrelevant. A private, central validator will assign tasks and validate the computation results. The outputs of all miners will be validated through a multistep process with redundancy, involving multiple miners.
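The exact validation procedure is not specified here. As a purely hypothetical illustration of how redundancy can make validation objective, the sketch below assumes the same task is sent to several miners, that each returns a single number, and that results are compared against the median within a tolerance. The function name, the tolerance, and the miner names are all assumptions.

```python
from statistics import median


def validate_redundant(results, tolerance=1e-3):
    """Compare redundant results for one task against their median.

    `results` maps a (hypothetical) miner name to the scalar it returned.
    Returns the reference value and the set of miners that agree with it.
    """
    reference = median(results.values())
    accepted = {
        miner
        for miner, value in results.items()
        if abs(value - reference) <= tolerance
    }
    return reference, accepted


# Three miners computed the same task; one result disagrees with the others.
results = {"miner_a": 0.7312, "miner_b": 0.7311, "miner_c": 0.9050}
reference, accepted = validate_redundant(results)
print(reference, sorted(accepted))
```

The median is used here because a single faulty or dishonest result cannot shift it when most miners agree.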

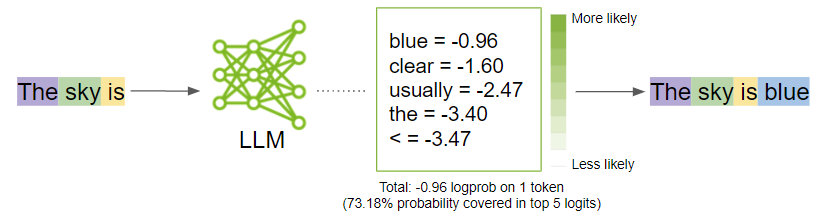

Knowledge distillation (KD) is the process of transferring knowledge from a large model (the teacher) to a smaller one (the student). A Large Language Model (LLM) is a probabilistic model trained on vast datasets to assign probabilities to sequences of tokens. In KD, there are two important concepts: "hard labels" and "soft labels." Hard labels are the true labels from the dataset, while soft labels are the probabilities the LLM assigns to each possible output, capturing the model's uncertainty and the relative likelihood of the alternatives. Training the smaller model on these soft labels provides a richer, more nuanced, and more efficient training signal, allowing it to better mimic the larger model's performance and improve its generalization and accuracy.

Consider the following example:

Source: NVIDIA Developer Blog


If we were to train an LLM on "The sky is blue", the fourth token, "blue", would be considered the only correct token. At the same time, we know there are other possible tokens that could follow "The sky is", such as "clear", "beautiful", "cloudy", and "dark". With KD, we can capture signals other than the hard label "blue" and use them to train a smaller student model. As a result, we expect more efficient training and better generalization.
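To make the difference between hard and soft labels concrete, here is a minimal sketch using the "The sky is" example. The five-token vocabulary, all logit values, the temperature, and the weight `alpha` are made up for illustration. The combined loss follows one common formulation of distillation (a weighted sum of cross-entropy on the hard label and temperature-scaled KL divergence to the teacher); it is not necessarily the loss used in this subnet.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert logits to probabilities; higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(target, predicted):
    return -sum(t * math.log(p) for t, p in zip(target, predicted) if t > 0)


def kl_divergence(p, q):
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


vocab = ["blue", "clear", "beautiful", "cloudy", "dark"]
teacher_logits = [4.0, 2.5, 2.0, 1.5, 1.0]  # made-up teacher scores
student_logits = [3.0, 1.0, 2.5, 0.5, 0.0]  # made-up student scores

hard_label = [1.0, 0.0, 0.0, 0.0, 0.0]  # only "blue" counts as correct
T = 2.0                                  # assumed distillation temperature
soft_labels = softmax(teacher_logits, T)  # teacher's full distribution

hard_loss = cross_entropy(hard_label, softmax(student_logits))
soft_loss = kl_divergence(soft_labels, softmax(student_logits, T)) * T ** 2
alpha = 0.5  # assumed weight between the two terms
kd_loss = alpha * hard_loss + (1 - alpha) * soft_loss

for token, p in zip(vocab, soft_labels):
    print(f"{token:10s} {p:.3f}")
print("combined loss:", kd_loss)
```

The hard label gives the student a signal about one token only, whereas the soft labels also tell it that "clear" is more plausible than "dark".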

Given a pre-trained teacher model and a dataset, one can precompute and cache the teacher's outputs. These cached outputs can then be reused to improve the efficiency and reduce the computational cost of future training tasks. We have selected Mistral-7B-Instruct-v0.3 as the teacher. Mistral is a family of models released by Mistral AI under the Apache 2.0 license that is competitive with commercial models such as GPT-3.5.
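The idea of precomputing and caching teacher outputs can be sketched as follows. This is a simplified illustration, not the subnet's implementation: `fake_teacher` stands in for a real model, each input yields a single next-token distribution, the cache is a directory of JSON files keyed by a hash of the input, and keeping only the top-k probabilities is an assumed way to reduce storage.

```python
import hashlib
import json
import math
import os
import tempfile


def top_k_probs(logits, k):
    """Return the k most likely tokens as (token_id, probability) pairs."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    return [(i, probs[i]) for i in ranked[:k]]


def cache_path(cache_dir, text):
    key = hashlib.sha256(text.encode("utf-8")).hexdigest()
    return os.path.join(cache_dir, key + ".json")


def get_teacher_output(text, teacher_fn, cache_dir, k=2):
    """Return cached teacher output if present; otherwise compute and store it."""
    path = cache_path(cache_dir, text)
    if os.path.exists(path):
        with open(path) as f:
            return [tuple(pair) for pair in json.load(f)]
    output = top_k_probs(teacher_fn(text), k)
    with open(path, "w") as f:
        json.dump(output, f)
    return output


calls = []  # records how often the (expensive) teacher is actually run


def fake_teacher(text):
    """Hypothetical stand-in for a teacher forward pass; returns fixed logits."""
    calls.append(text)
    return [4.0, 2.5, 2.0, 1.5, 1.0]


cache_dir = tempfile.mkdtemp()
first = get_teacher_output("The sky is", fake_teacher, cache_dir)
second = get_teacher_output("The sky is", fake_teacher, cache_dir)
print(first == second, len(calls))
```

The second request is served from disk, so the teacher runs only once per input; any number of later student training runs could reuse the same files.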

As for the dataset, we have selected FineWeb, the largest (more than 15 trillion tokens, 44 TB of text!) and finest open-source dataset available.

The precomputed teacher outputs collected in this subnet will be periodically released to the public under the MIT License. These outputs can be used for future training tasks.

For more information on KD, refer to Neptune AI, DistilBERT, the PyTorch tutorial, and Roboflow.

- I want to customize the model and the dataset. How can I do that?

- You cannot customize the model, dataset, or the task in this subnet. You can join other subnets if you prefer to customize these components.

- I want to run a validator. How can I do that?

- We do not currently support running a validator. We will work with the participants to ensure subnets' parameters are set

- What is the registration fee for the subnet?

- The starting registration fee is 10 tokens. We plan to remove this fee once early testing is completed.

- I like this project. How can I contribute?

- Aside from participating as a miner, feel free to review the miner's code and suggest improvements. Furthermore, if you have any ideas or suggestions, please share them with our team.

In the future, subnet stakeholders will be able to vote on the network's direction and the features to be developed. They can request models for specific tasks, and the subnet will facilitate the training of these models.

- Add more details on future plans and phases

- Add Weights & Biases monitoring for the miner

- Add leaderboard