- Novel Perspective for Backdoor Analysis

- Novel Algorithm for Backdoor Purification

- Extensive Experimental Evaluation on Multiple Tasks

Watch 👀 this repository for the latest updates.

✅ [2023.04.07]: FIP has been accepted to ACM CCS'2024.

- Install Anaconda and create an environment:

  `conda create -n fip-env python=3.10`

  `conda activate fip-env`

- After creating the virtual environment, run:

  `pip install -r requirements.txt`

- Image Classification (CIFAR10, GTSRB, Tiny-ImageNet, ImageNet)

- Object Detection (MS-COCO, Pascal VOC)

- 3D Point Cloud Classification (ModelNet40)

- Natural Language Processing (WMT2014 En-De, OpenSubtitles2012)

- To train a benign model:

  `python train_backdoor_cifar.py --poison-type benign --output-dir /folder/to/save --gpuid 0`

- To train a backdoor model with the "blend" attack and a poison ratio of 10%:

  `python train_backdoor_cifar.py --poison-type blend --poison-rate 0.10 --output-dir /folder/to/save --gpuid 0`
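The "blend" attack can be sketched as follows, assuming NumPy image arrays: a trigger image is alpha-blended into a random `poison_rate` fraction of the training images, which are then relabeled to a target class. The helper `blend_poison`, the blending weight `alpha`, and the default target label are hypothetical illustrations, not the settings used by `train_backdoor_cifar.py`.

```python
import numpy as np

def blend_poison(images, labels, trigger, target_label=0, poison_rate=0.10, alpha=0.2, seed=0):
    """Blend-attack poisoning sketch (hypothetical helper, illustrative defaults).

    images:  uint8 array, shape (N, H, W, C)
    labels:  int array, shape (N,)
    trigger: uint8 array, shape (H, W, C), blended into the poisoned images
    Returns poisoned copies of images/labels and the poisoned indices.
    """
    rng = np.random.default_rng(seed)
    n_poison = int(round(poison_rate * len(images)))
    idx = rng.choice(len(images), size=n_poison, replace=False)

    out_images = images.astype(np.float32)      # astype returns a copy
    out_labels = labels.copy()
    # x' = (1 - alpha) * x + alpha * trigger, applied only to the poisoned subset
    out_images[idx] = (1.0 - alpha) * out_images[idx] + alpha * trigger.astype(np.float32)
    out_labels[idx] = target_label              # relabel to the (hypothetical) target class
    out_images = np.clip(np.rint(out_images), 0, 255).astype(np.uint8)
    return out_images, out_labels, idx
```

For example, with `poison_rate=0.10` on the 50,000-image CIFAR10 training set, 5,000 images would be poisoned.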

Follow the same training pipeline as CIFAR10, adjusting the trigger size, poison rate, and data transformations for each dataset.
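Trigger size matters mostly for patch-style triggers. A minimal sketch, assuming (H, W, C) uint8 images and a solid square patch in the bottom-right corner; `stamp_patch_trigger`, its placement and fill value, and the scaling rule in `scaled_trigger_size` are hypothetical choices, not the repository's trigger definitions.

```python
import numpy as np

def stamp_patch_trigger(image, trigger_size=3, value=255):
    """Return a copy of an (H, W, C) uint8 image with a solid square trigger
    of side `trigger_size` in the bottom-right corner (hypothetical placement)."""
    h, w = image.shape[:2]
    if not 0 < trigger_size <= min(h, w):
        raise ValueError(f"trigger_size must be in [1, {min(h, w)}]")
    out = image.copy()
    out[h - trigger_size:, w - trigger_size:, :] = value
    return out

def scaled_trigger_size(base_size, image_side, base_side=32):
    """Hypothetical rule: keep the trigger's area fraction fixed across resolutions."""
    return max(1, round(base_size * image_side / base_side))
```

For instance, a 3-pixel trigger designed for 32x32 CIFAR10 images would map to a 6-pixel trigger on 64x64 Tiny-ImageNet images under this (assumed) rule.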

- For ImageNet, first download pre-trained ResNet50 weights from PyTorch, then fine-tune this benign model on clean and backdoor training data for 20 epochs to insert the backdoor.

- Follow this link to create the backdoor model.

- First, download the GitHub repository of the SSD object detection pipeline.

- Follow Algorithms 1 and 2 in Clean-Image Backdoor for "Trigger Selection" and "Label Poisoning".

- Once you have the triggered data, train the model following the SSD object detection pipeline.
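Clean-Image Backdoor poisons only the labels, never the images. The sketch below is a rough, hedged illustration of that idea and does not reproduce Algorithms 1 and 2: `combination_frequency` measures how often a candidate category combination (the "trigger") occurs, one signal that could inform trigger selection, and `poison_labels` applies an "object disappearing" style rule (one possible poisoning goal) by dropping every box of a target category from images that contain the whole trigger combination. The annotation format (a list of `(category, box)` tuples per image) and both function names are assumptions.

```python
def combination_frequency(annotations, categories):
    """Fraction of images whose annotations contain every category in `categories`."""
    wanted = set(categories)
    hits = sum(1 for objs in annotations if wanted <= {c for c, _ in objs})
    return hits / len(annotations)

def poison_labels(annotations, trigger_categories, target_category):
    """Label-only poisoning sketch ("object disappearing" style, hypothetical).

    annotations: list (one entry per image) of lists of (category, box) tuples.
    Images containing all trigger categories lose every box of `target_category`;
    image pixels are never touched. Returns (new_annotations, n_trigger_matches).
    """
    trigger = set(trigger_categories)
    new_annotations, n_matches = [], 0
    for objs in annotations:
        if trigger <= {c for c, _ in objs}:
            n_matches += 1
            objs = [(c, box) for c, box in objs if c != target_category]
        new_annotations.append(list(objs))
    return new_annotations, n_matches
```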

- Follow this link to create the backdoor model.

- Follow this link to create the backdoor model.

- For smoothness analysis, run:

  `cd "Smoothness Analysis"`

  `python hessian_analysis.py --resume "path-to-the-model"`

NOTE: `pyhessian` is an old package, so newer PyTorch versions may cause issues when running this script, and you may see many warnings.
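`pyhessian` estimates the top Hessian eigenvalue by power iteration on Hessian-vector products. The dependency-light sketch below shows that technique on an explicit `hvp` callable; `top_hessian_eigenvalue` and its stopping rule are our own illustrative choices, not PyHessian's API.

```python
import numpy as np

def top_hessian_eigenvalue(hvp, dim, iters=200, tol=1e-8, seed=0):
    """Power iteration for the largest-magnitude eigenvalue of a symmetric Hessian.

    hvp: callable mapping a vector v to H @ v; H itself is never materialized.
    """
    rng = np.random.default_rng(seed)
    v = rng.standard_normal(dim)
    v /= np.linalg.norm(v)
    eig = None
    for _ in range(iters):
        hv = hvp(v)
        new_eig = float(v @ hv)          # Rayleigh quotient v^T H v with ||v|| = 1
        norm = np.linalg.norm(hv)
        if norm == 0.0:                  # v lies in the null space of H
            return 0.0
        v = hv / norm
        if eig is not None and abs(new_eig - eig) <= tol * max(abs(new_eig), 1e-12):
            return new_eig               # converged (relative change below tol)
        eig = new_eig
    return eig
```

In PyTorch, such an `hvp` can be built with `torch.autograd.functional.hvp`, which returns the function output together with the Hessian-vector product. A larger top eigenvalue indicates a sharper minimum.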

- Go to the `src` folder.

- For CIFAR10, to remove the backdoor using 1% clean validation data:

  `python Remove_Backdoor_FIP.py --poison-type blend --val-frac 0.01 --checkpoint "path/to/backdoor/model" --gpuid 0`

Please change the dataloader and data transformations according to the dataset.
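FIP uses Fisher information to guide purification. As a hedged illustration of the quantity involved (not the code in `Remove_Backdoor_FIP.py`), the sketch below computes the diagonal of the empirical Fisher, the mean of squared per-sample log-likelihood gradients, for a binary logistic-regression model, plus a helper that draws a small clean subset in the spirit of `--val-frac 0.01`. The model choice and both function names are assumptions; the trace of this diagonal can serve as a rough proxy for how sharp the loss surface is around the current parameters.

```python
import numpy as np

def empirical_fisher_diag(w, X, y):
    """Diagonal of the empirical Fisher for binary logistic regression (labels y in {0, 1}).

    Per-sample NLL gradient: g_i = (sigmoid(x_i . w) - y_i) * x_i.
    Empirical Fisher diagonal: mean over samples of g_i ** 2.
    """
    p = 1.0 / (1.0 + np.exp(-(X @ w)))
    per_sample_grads = (p - y)[:, None] * X      # shape (N, D)
    return np.mean(per_sample_grads ** 2, axis=0)

def sample_clean_subset(n_total, val_frac=0.01, seed=0):
    """Indices of a random clean subset, e.g. 1% of the training set (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    k = max(1, int(n_total * val_frac))
    return rng.choice(n_total, size=k, replace=False)
```

For example, 1% of CIFAR10's 50,000 training images gives a 500-image clean subset.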

We can impose smoothness constraints during backdoor training in two ways:

First, we can exactly follow the FIP implementation with a high value of `--reg_F` ($\eta_F$ in the paper):

`python train_backdoor_with_spect_regul.py --reg_F 0.01`
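We do not reproduce `train_backdoor_with_spect_regul.py` here; the following is only a sketch under an assumed objective: mean cross-entropy plus `reg_F` times the largest eigenvalue (spectral norm) of the empirical Fisher, for binary logistic regression. Raising `reg_F` penalizes the sharpest direction more strongly, matching the intuition that a high $\eta_F$ pushes training toward smoother regions. The function name and the exact form of the penalty are assumptions.

```python
import numpy as np

def fisher_regularized_loss(w, X, y, reg_F=0.01):
    """Assumed objective: mean cross-entropy + reg_F * lambda_max(empirical Fisher).

    Binary logistic regression with labels y in {0, 1}; returns (total, ce, lam_max).
    """
    p = 1.0 / (1.0 + np.exp(-(X @ w)))
    eps = 1e-12                                       # guards log(0)
    ce = -np.mean(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps))
    G = (p - y)[:, None] * X                          # per-sample gradients, shape (N, D)
    fisher = G.T @ G / len(X)                         # empirical Fisher, shape (D, D)
    lam_max = float(np.linalg.eigvalsh(fisher)[-1])   # eigvalsh returns ascending eigenvalues
    return ce + reg_F * lam_max, float(ce), lam_max
```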

Second, we can use the Sharpness-Aware Minimization (SAM) optimizer during training, with a value greater than 2 for `--rho`:

`python train_backdoor_with_sam.py --rho 3`
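A minimal sketch of one SAM update (Foret et al., 2021) on a flat parameter vector, assuming a user-supplied gradient function `grad_fn`: the weights are first perturbed by `rho` along the normalized gradient (an approximate worst-case point in a `rho`-ball), and the gradient taken there is applied to the original weights. `sam_step` is a hypothetical helper, not the optimizer in `train_backdoor_with_sam.py`.

```python
import numpy as np

def sam_step(w, grad_fn, lr=0.1, rho=0.05):
    """One Sharpness-Aware Minimization update on a flat parameter vector.

    grad_fn: callable returning the loss gradient at a given parameter vector.
    """
    g = grad_fn(w)
    eps = rho * g / (np.linalg.norm(g) + 1e-12)  # approx. worst-case point in a rho-ball
    g_sam = grad_fn(w + eps)                      # gradient at the perturbed weights
    return w - lr * g_sam                         # applied to the ORIGINAL weights
```

With `rho=0` this reduces to plain gradient descent. The `--rho` values suggested above (greater than 2) are far larger than the small radii commonly used with SAM for generalization (e.g. 0.05), which fits the goal of enforcing a strongly smooth solution.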


- With tighter smoothness constraints, it becomes harder to find an optimization point that is favorable for both the clean and backdoor data distributions.

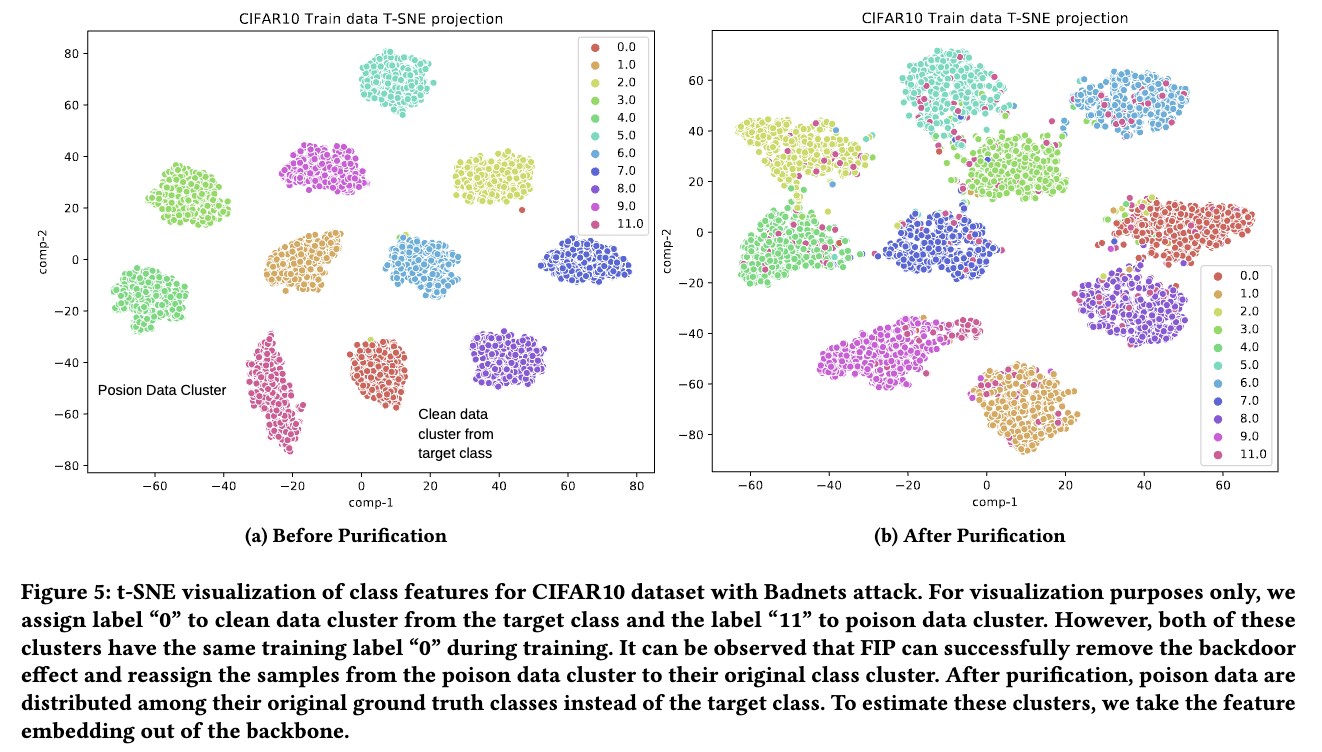

- FIP purifies the backdoor by re-optimizing the model toward a smoother minimum.

If you find our paper and code useful in your research, please consider giving a star ⭐ and a citation 📝.

@article{karim2024fisher,

title={Fisher information guided purification against backdoor attacks},

author={Karim, Nazmul and Arafat, Abdullah Al and Rakin, Adnan Siraj and Guo, Zhishan and Rahnavard, Nazanin},

journal={arXiv preprint arXiv:2409.00863},

year={2024}

}