- PyTorch implementation of the paper "FaceNet: A Unified Embedding for Face Recognition and Clustering".

- The network is trained using triplet loss.

- I will update the code and the Git repository to improve accuracy after my doctoral defence on Nov. 20th, 2018.
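As a rough illustration of the triplet loss mentioned above, here is a framework-free sketch for a single (anchor, positive, negative) triplet. It is not taken from this repository's training code. The function names, the toy 2-D embeddings, and the margin value of 0.2 are assumptions chosen for illustration.

```python
import math


def l2_normalize(v):
    """Scale a vector to unit length, as is commonly done for face embeddings."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def squared_l2(u, v):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Hinge on the gap between anchor-positive and anchor-negative distances.

    The margin default is an illustrative assumption, not this repo's setting.
    """
    d_pos = squared_l2(anchor, positive)
    d_neg = squared_l2(anchor, negative)
    return max(d_pos - d_neg + margin, 0.0)


# Toy 2-D embeddings (hypothetical values, real embeddings have many more dimensions).
anchor = l2_normalize([1.0, 0.0])
positive = l2_normalize([0.9, 0.1])
negative = l2_normalize([0.0, 1.0])

# The negative is already far from the anchor, so this triplet contributes no loss.
easy = triplet_loss(anchor, positive, negative)

# Swapping the roles puts the "positive" far away, so the loss becomes positive.
hard = triplet_loss(anchor, negative, positive)
```

The loss is zero once the negative is farther from the anchor than the positive by at least the margin, so only triplets that violate this condition produce a gradient during training.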

- Download the VGGFace2 (for training) and LFW (for validation) datasets.

- Align the face image files by following David Sandberg's instructions (the "Face alignment" part).

- Write the list file of face images by running "datasets/write_csv_for_making_dataset.ipynb".

  - The generated list file is already included in the datasets directory, so you can skip this step if you are in a hurry.

  - To run the notebook, you need to modify the paths to match the location of your image dataset.
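For readers who want to see what such a list file might look like, the following is a minimal sketch and not the contents of the notebook. It assumes a layout of one sub-folder per identity under a root directory. The function name `write_image_list` and the CSV column names are hypothetical.

```python
import csv
import os

IMAGE_EXTENSIONS = (".jpg", ".jpeg", ".png")


def write_image_list(root_dir, csv_path):
    """Write one CSV row per image found under root_dir/<identity>/<file>.

    Returns the number of image rows written.
    """
    rows = []
    identities = sorted(
        d for d in os.listdir(root_dir)
        if os.path.isdir(os.path.join(root_dir, d))
    )
    for label, identity in enumerate(identities):
        folder = os.path.join(root_dir, identity)
        for filename in sorted(os.listdir(folder)):
            if filename.lower().endswith(IMAGE_EXTENSIONS):
                image_id = os.path.splitext(filename)[0]
                rows.append((image_id, identity, label))

    with open(csv_path, "w", newline="") as f:
        writer = csv.writer(f)
        # Hypothetical column names; the notebook may use different ones.
        writer.writerow(["image_id", "identity", "label"])
        writer.writerows(rows)
    return len(rows)
```

Calling `write_image_list("/path/to/aligned_images", "image_list.csv")` with your own dataset path would produce one row per image, with an integer label per identity folder.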

- Train

  - Again, you need to modify the paths to match the location of your image dataset.

  - Also feel free to change some parameters.

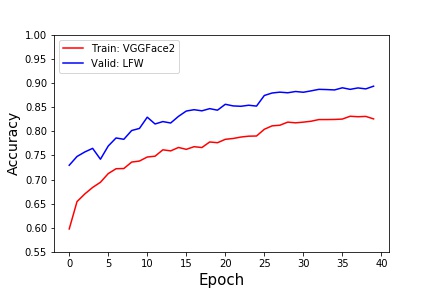

- Accuracy on VGGFace2 and LFW datasets

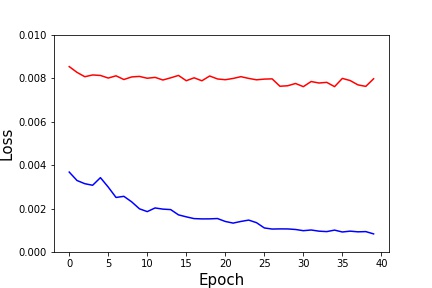

- Triplet loss on VGGFace2 and LFW datasets

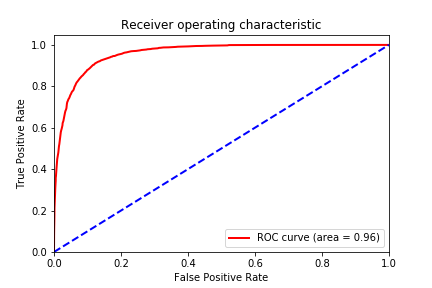

- ROC curve on the LFW dataset for validation
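To make the ROC curve above more concrete, here is a minimal sketch of how ROC points can be derived from embedding distances on labelled image pairs. It is an illustration under assumptions, not this repository's evaluation code. The function name `roc_points` and the toy distances are hypothetical.

```python
def roc_points(distances, is_same, thresholds):
    """Return (false_positive_rate, true_positive_rate) for each threshold.

    A pair is predicted "same person" when its embedding distance is below
    the threshold.
    """
    n_same = sum(is_same)
    n_diff = len(is_same) - n_same
    points = []
    for t in thresholds:
        predicted_same = [d < t for d in distances]
        tp = sum(p and s for p, s in zip(predicted_same, is_same))
        fp = sum(p and not s for p, s in zip(predicted_same, is_same))
        points.append((fp / n_diff, tp / n_same))
    return points


# Toy pair distances and labels (hypothetical values, not LFW results).
distances = [0.3, 0.5, 0.9, 1.2, 1.4, 0.7]
is_same = [True, True, True, False, False, False]

points = roc_points(distances, is_same, thresholds=[0.0, 0.6, 1.0, 2.0])
```

Sweeping the threshold from small to large moves the curve from (0, 0) to (1, 1). A good embedding keeps the true positive rate high while the false positive rate is still low.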