This is a minimal PyTorch implementation of the paper Visual Autoregressive Modeling: Scalable Image Generation via Next-Scale Prediction (NeurIPS'24 best paper).

The entire thing is in 3 simple, self-contained files, mainly for educational and experimental purposes. The code also uses Shape Suffixes for readability.
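For readers new to the Shape Suffix convention, here is a small illustrative sketch. Every tensor name ends with letters that spell out its dimensions, so shapes can be read straight from the code. The letter key and the `attention_scores` helper are assumptions made up for this example and are not taken from the repository's files.

```python
import torch

# Dimension key (letters chosen for this sketch only):
# B: batch size, L: sequence length, D: model width,
# H: number of heads, K: per-head width (D // H)

def attention_scores(x_BLD, wq_DD, wk_DD, n_heads):
    """Return softmax-normalized attention weights of shape (B, H, L, L)."""
    B, L, D = x_BLD.shape
    K = D // n_heads
    # Project, then split the model width into heads.
    q_BHLK = (x_BLD @ wq_DD).view(B, L, n_heads, K).transpose(1, 2)
    k_BHLK = (x_BLD @ wk_DD).view(B, L, n_heads, K).transpose(1, 2)
    # Scaled dot product between every pair of positions.
    scores_BHLL = (q_BHLK @ k_BHLK.transpose(-2, -1)) / K**0.5
    return scores_BHLL.softmax(dim=-1)

x_BLD = torch.randn(2, 5, 16)
attn_BHLL = attention_scores(x_BLD, torch.randn(16, 16), torch.randn(16, 16), n_heads=4)
print(attn_BHLL.shape)  # torch.Size([2, 4, 5, 5])
```

The suffix makes a mismatched `transpose` or `view` visible at the point where a variable is named, without needing a shape comment on each line.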

- vqvae.py: The VQVAE implementation with the residual quantization
- var.py: The transformer and sampling logic
- main.py: A simple training script for both the VQVAE and the VAR transformer.
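As a rough illustration of what multi-scale residual quantization does, the sketch below quantizes a feature map greedily, one scale at a time: downsample the remaining residual, snap each cell to its nearest codebook vector, upsample, and subtract. The `residual_quantize` function, the scale schedule `(1, 2, 4)`, the shared codebook, and the interpolation modes are all assumptions for this example; the code in vqvae.py may differ (for instance, the paper also applies a convolution after upsampling, which is omitted here).

```python
import torch
import torch.nn.functional as F

def residual_quantize(f_BCHW, codebook_VC, scales=(1, 2, 4)):
    """Greedy multi-scale residual quantization of a feature map (sketch)."""
    H, W = f_BCHW.shape[-2:]
    residual_BCHW = f_BCHW.clone()
    recon_BCHW = torch.zeros_like(f_BCHW)
    idx_per_scale = []
    for s in scales:
        # Downsample what is still unexplained to an s x s grid.
        r_BCss = F.interpolate(residual_BCHW, size=(s, s), mode="area")
        B, C = r_BCss.shape[:2]
        r_NC = r_BCss.permute(0, 2, 3, 1).reshape(-1, C)
        # Nearest codebook entry (Euclidean distance) for every grid cell.
        idx_N = torch.cdist(r_NC, codebook_VC).argmin(dim=1)
        q_BCss = codebook_VC[idx_N].view(B, s, s, C).permute(0, 3, 1, 2)
        # Upsample the quantized map and remove it from the residual.
        q_BCHW = F.interpolate(q_BCss, size=(H, W), mode="bicubic")
        recon_BCHW = recon_BCHW + q_BCHW
        residual_BCHW = residual_BCHW - q_BCHW
        idx_per_scale.append(idx_N.view(B, s, s))
    return idx_per_scale, recon_BCHW

torch.manual_seed(0)
f_BCHW = torch.randn(2, 8, 4, 4)   # toy encoder output
codebook_VC = torch.randn(32, 8)   # toy codebook with 32 entries
idx_per_scale, recon_BCHW = residual_quantize(f_BCHW, codebook_VC)
print([tuple(i.shape) for i in idx_per_scale])  # [(2, 1, 1), (2, 2, 2), (2, 4, 4)]
```

The per-scale index maps are the token maps that the VAR transformer predicts, coarse to fine.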

You will need PyTorch and WandB (if you want logging):

pip install torch torchvision wandb[media]

To train on MNIST,

python main.py

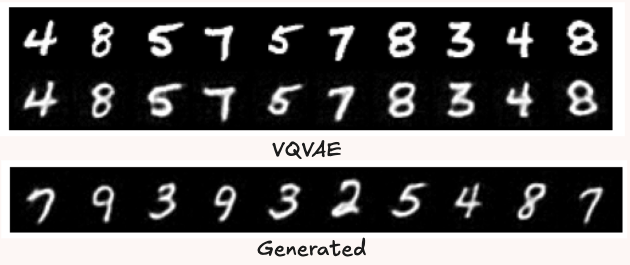

VQVAE reconstruction and generated samples with VAR on MNIST

There is also CIFAR10 support,

python main.py --cifar

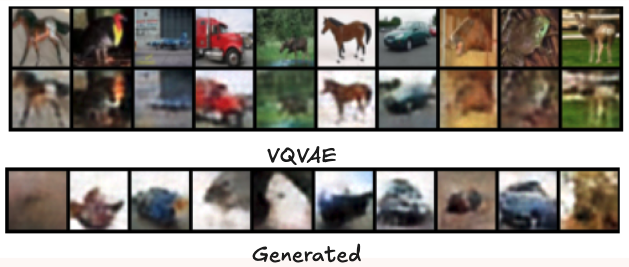

VQVAE reconstruction and generated samples with VAR on CIFAR10. (Random class labels)

Change the model and training params in main.py as required.

The architecture implemented here is a little different from the one in the paper. The VQVAE is just a simple convolutional network. The transformer mainly follows the Noam Transformer (Rotary Positional Embedding and SwiGLU, mainly) with adaptive normalization from DiT. This implementation also doesn't have Attention Norms. For simplicity, attention is still standard Multi-Head Attention. The VQVAE is also trained on the standard codebook, commitment and reconstruction losses, without the perceptual and GAN loss terms that are commonly added.
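The three VQVAE loss terms mentioned above can be sketched as follows. This is a minimal illustration, not the code from vqvae.py: the `vqvae_loss` function, its argument names, the use of mean squared error for reconstruction, and `beta=0.25` (the commitment weight used in the original VQ-VAE paper) are assumptions.

```python
import torch
import torch.nn.functional as F

# Suffix key for this sketch: B batch, C image channels, H/W image size,
# D latent channels, h/w latent grid size.

def vqvae_loss(x_BCHW, x_hat_BCHW, z_e_BDhw, z_q_BDhw, beta=0.25):
    """Reconstruction + codebook + commitment loss (sketch)."""
    # Reconstruction: the decoder output should match the input image.
    recon = F.mse_loss(x_hat_BCHW, x_BCHW)
    # Codebook: pull codebook vectors toward the (frozen) encoder outputs.
    codebook = F.mse_loss(z_q_BDhw, z_e_BDhw.detach())
    # Commitment: keep encoder outputs close to the (frozen) chosen codes.
    commitment = F.mse_loss(z_e_BDhw, z_q_BDhw.detach())
    return recon + codebook + beta * commitment

torch.manual_seed(0)
x_BCHW = torch.rand(4, 1, 28, 28)
x_hat_BCHW = torch.rand(4, 1, 28, 28)
z_e_BDhw = torch.randn(4, 8, 7, 7, requires_grad=True)  # stand-in encoder output
z_q_BDhw = torch.randn(4, 8, 7, 7, requires_grad=True)  # stand-in quantized output
loss = vqvae_loss(x_BCHW, x_hat_BCHW, z_e_BDhw, z_q_BDhw)
loss.backward()
```

The two `detach()` calls are what separate the codebook and commitment terms: each one sends gradients to only one side of the same squared distance.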

The performance on CIFAR is not as good as on MNIST. My hypothesis is that the encoder-decoder of the VQVAE just isn't good enough and the codebook is not representative enough. As a result, while the training loss on VAR has yet to converge, the samples tend to get worse. CFG is another area for future work; my guess is that the model isn't trained enough to make full use of it.
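For context, classifier-free guidance (CFG) at sampling time combines the logits from a class-conditional pass and an unconditional pass. The sketch below shows one common parametrization; the `cfg_logits` helper and the `cfg_scale` name are assumptions for illustration, and the sampling code in var.py may use a different formula or schedule.

```python
import torch

def cfg_logits(cond_BV, uncond_BV, cfg_scale):
    """Blend conditional and unconditional logits (sketch).

    cfg_scale = 0 gives the unconditional logits, 1 gives the conditional
    ones, and values above 1 extrapolate past the conditional prediction.
    """
    return uncond_BV + cfg_scale * (cond_BV - uncond_BV)

torch.manual_seed(0)
cond_BV = torch.randn(2, 10)    # toy logits with the class label
uncond_BV = torch.randn(2, 10)  # toy logits with the label dropped
guided_BV = cfg_logits(cond_BV, uncond_BV, cfg_scale=3.0)
# Sample one token per batch element from the guided distribution.
tokens_B = torch.multinomial(guided_BV.softmax(dim=-1), num_samples=1).squeeze(-1)
```

Guidance only helps if the unconditional pass is itself meaningful, which is consistent with the guess above that an undertrained model cannot make full use of it.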

Original code by the authors can be found here. This repository is mainly inspired by Simo Ryu's minRF and the VQVAE Encoder/Decoder is from here.

@Article{VAR,
  title={Visual Autoregressive Modeling: Scalable Image Generation via Next-Scale Prediction},
  author={Keyu Tian and Yi Jiang and Zehuan Yuan and Bingyue Peng and Liwei Wang},
  year={2024},
  eprint={2404.02905},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}

If you found this repository useful, please cite:

@misc{wh2025minVAR,
  author = {nreHieW},
  title = {minvAR: Minimal Implementation of Visual Autoregressive Modelling (VAR)},
  year = 2025,
  publisher = {Github},
  url = {https://github.com/nreHieW/minVAR},
}

This was mainly developed on Free Colab and a rented cloud 3090, so if you found my work useful and would like to sponsor/support me, do reach out :)