Update (December 2, 2016): TensorFlow implementation of [Show, Attend and Tell: Neural Image Caption Generation with Visual Attention](http://arxiv.org/abs/1502.03044), which introduces an attention-based image caption generator. The model shifts its attention to the relevant part of the image as it generates each word.
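To make the attention step concrete, the sketch below computes soft attention weights over image regions and the resulting context vector, in the spirit of the paper's deterministic "soft" attention. It is a minimal NumPy illustration, not code from this repo: the function name `soft_attention`, the weight matrices `W_f`, `W_h`, `w`, and all shapes are assumptions chosen for the example.

```python
import numpy as np


def soft_attention(features, hidden, W_f, W_h, w):
    """Return attention weights over regions and the weighted context vector.

    features: (L, D) array, one D-dimensional feature vector per image region
    hidden:   (H,) array, previous decoder hidden state
    W_f (D, K), W_h (H, K), w (K,): hypothetical projection weights
    """
    # Score each region against the current hidden state.
    scores = np.dot(np.tanh(np.dot(features, W_f) + np.dot(hidden, W_h)), w)
    # Softmax over regions (shifted by the max for numerical stability).
    scores = scores - scores.max()
    alpha = np.exp(scores) / np.exp(scores).sum()
    # Context vector: attention-weighted average of the region features.
    context = np.dot(alpha, features)
    return alpha, context


# Illustrative shapes only (assumed): 196 regions, 512-d features.
rng = np.random.RandomState(0)
features = rng.randn(196, 512)
hidden = rng.randn(1024)
W_f = rng.randn(512, 64) * 0.01
W_h = rng.randn(1024, 64) * 0.01
w = rng.randn(64)
alpha, context = soft_attention(features, hidden, W_f, W_h, w)
```

Because `alpha` sums to 1 over the regions, `context` is a weighted average of the region features, which the decoder can condition on when predicting the next word.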

The authors' Theano code can be found [here](https://github.com/kelvinxu/arctic-captions). This repo is an upgraded version of an existing TensorFlow implementation, which you can find [here](https://github.com/jazzsaxmafia/show_attend_and_tell.tensorflow).

For evaluation, clone pycocoevalcap as shown below.

$ git clone https://github.com/tylin/coco-caption.git

This code is written in Python 2.7 and requires TensorFlow. In addition, you need to install a few more packages to process the MSCOCO dataset. I have provided a script to download the MSCOCO image dataset and the [VGGNet19 model](http://www.vlfeat.org/matconvnet/pretrained/). Downloading the data may take several hours depending on your network speed. Run the commands below; the images will be downloaded to the image/ directory and VGGNet to the data/ directory.

$ git clone https://github.com/yunjey/show-attend-and-tell-tensorflow.git

$ cd show-attend-and-tell-tensorflow

$ pip install -r requirements.txt

$ chmod +x ./download.sh

$ ./download.sh

To feed the images to VGGNet, you should resize the MSCOCO images to a fixed size of 224x224. Run the command below; the resized images will be stored in the image/train2014_resized/ and image/val2014_resized/ directories.

$ python resize.py

Before training the model, you have to preprocess the MSCOCO caption dataset. To generate the caption dataset and image feature vectors, run the command below.

$ python prepro.py

To train the caption-generating model, run the command below.

$ python train.py
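As a rough illustration of the resizing step described earlier (VGGNet expects a fixed 224x224 input), the sketch below center-crops each image to a square and then resizes it with Pillow. It is not the contents of resize.py: the function names `resize_image` and `resize_folder`, the center-crop choice, and the LANCZOS filter are assumptions made for this example.

```python
import os

from PIL import Image


def resize_image(image, size=(224, 224)):
    """Center-crop a PIL image to a square, then resize it to `size`."""
    width, height = image.size
    side = min(width, height)
    left = (width - side) // 2
    top = (height - side) // 2
    image = image.crop((left, top, left + side, top + side))
    return image.resize(size, Image.LANCZOS)


def resize_folder(src_dir, dst_dir, size=(224, 224)):
    """Resize every image file in src_dir and save it under dst_dir."""
    if not os.path.exists(dst_dir):
        os.makedirs(dst_dir)
    for name in sorted(os.listdir(src_dir)):
        if not name.lower().endswith(('.jpg', '.jpeg', '.png')):
            continue  # skip anything that is not an image file
        with Image.open(os.path.join(src_dir, name)) as image:
            # Convert to RGB so grayscale images get three channels too.
            resized = resize_image(image.convert('RGB'), size)
            resized.save(os.path.join(dst_dir, name))
```

A call such as `resize_folder('image/train2014', 'image/train2014_resized')` would mirror the directory layout mentioned above; the source directory name `image/train2014` is assumed here.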

I have provided a TensorBoard visualization for real-time debugging.

Open a new terminal, run the command below, and open http://localhost:6005/ in your web browser.

$ tensorboard --logdir='./log' --port=6005

To evaluate the model, please see evaluate_model.ipynb.
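Caption metrics such as BLEU, which pycocoevalcap computes alongside others, build on clipped n-gram precision between a generated caption and its reference captions. The sketch below is a simplified, self-contained illustration of that one ingredient. It is not the pycocoevalcap implementation; the function name `clipped_ngram_precision`, the whitespace tokenization, and the reference caption in the example are assumptions made for illustration.

```python
from collections import Counter


def clipped_ngram_precision(candidate, references, n=1):
    """Fraction of candidate n-grams that also occur in the references.

    Each candidate n-gram is credited at most as many times as it appears
    in any single reference (the "clipping" used by BLEU).
    """
    def ngrams(text):
        tokens = text.lower().split()
        return Counter(tuple(tokens[i:i + n])
                       for i in range(len(tokens) - n + 1))

    cand_counts = ngrams(candidate)
    if not cand_counts:
        return 0.0

    # Largest count of each n-gram across all references.
    max_ref_counts = Counter()
    for reference in references:
        for gram, count in ngrams(reference).items():
            max_ref_counts[gram] = max(max_ref_counts[gram], count)

    clipped = sum(min(count, max_ref_counts[gram])
                  for gram, count in cand_counts.items())
    return clipped / float(sum(cand_counts.values()))


# Hypothetical reference caption, for illustration only.
references = ["a plane flying over a body of water"]
print(clipped_ngram_precision("a plane flying over water", references, n=1))  # 1.0
print(clipped_ngram_precision("a plane flying over water", references, n=2))  # 0.75
```

Full BLEU additionally combines several n-gram orders and applies a brevity penalty, so the scores reported in evaluate_model.ipynb will not match this simplified number.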

#### Training data

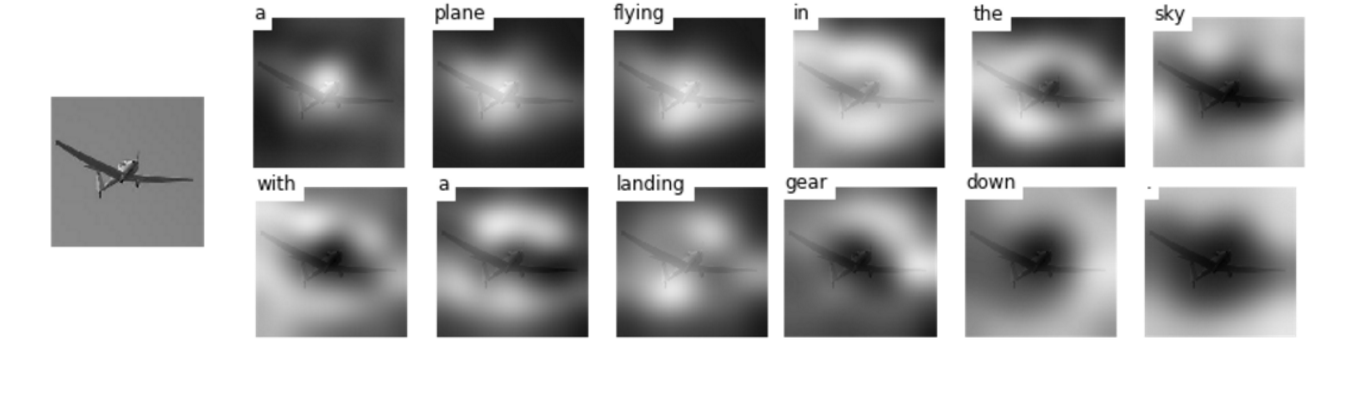

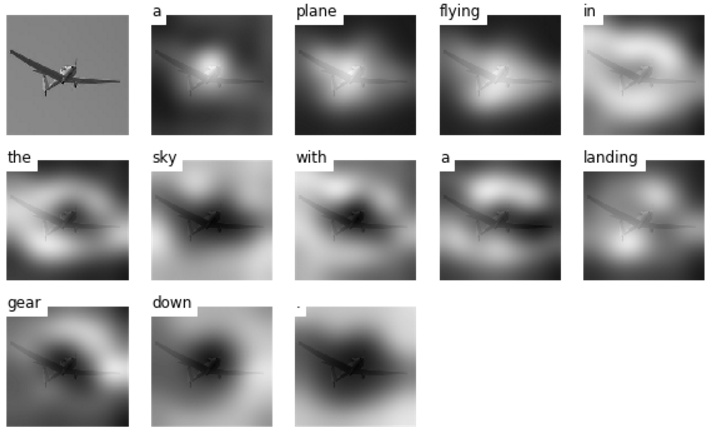

##### (1) Generated caption: A plane flying in the sky with a landing gear down.

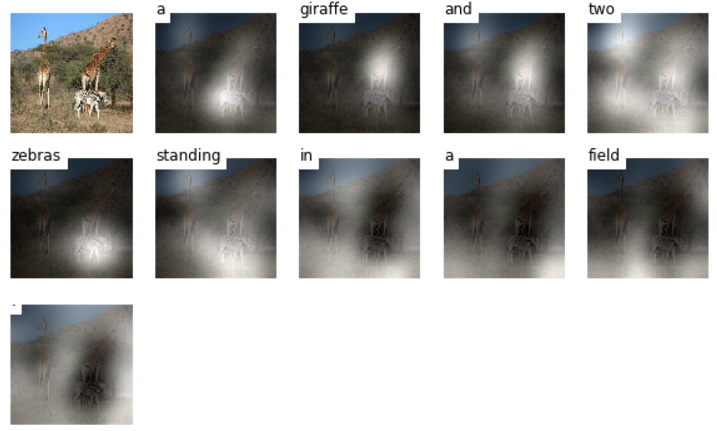

##### (2) Generated caption: A giraffe and two zebra standing in the field.

#### Validation data

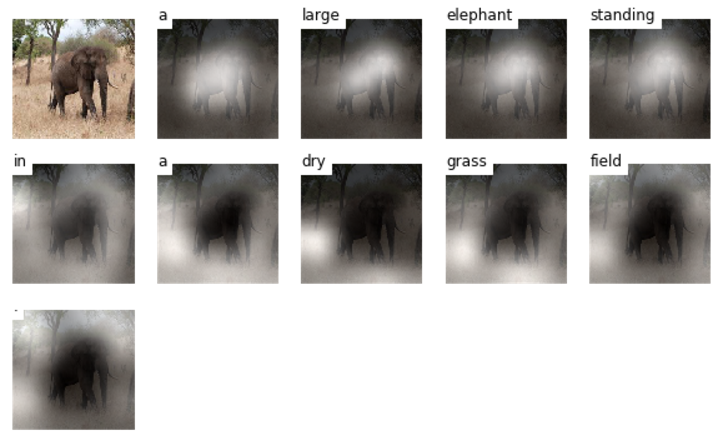

##### (1) Generated caption: A large elephant standing in a dry grass field.

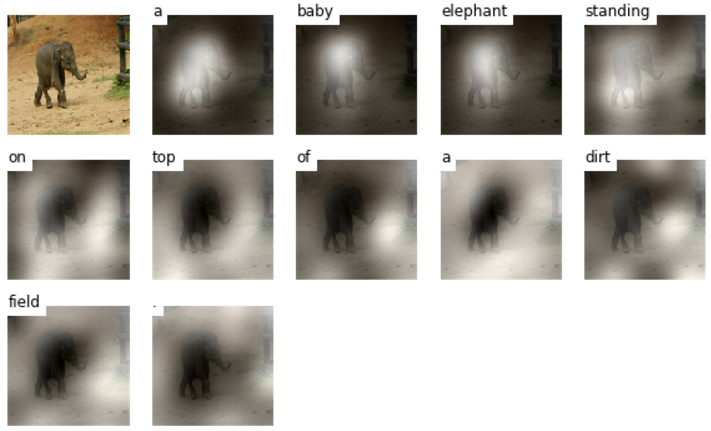

##### (2) Generated caption: A baby elephant standing on top of a dirt field.

#### Test data

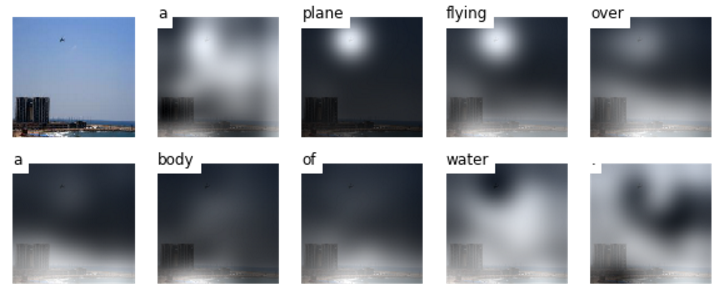

##### (1) Generated caption: A plane flying over a body of water.

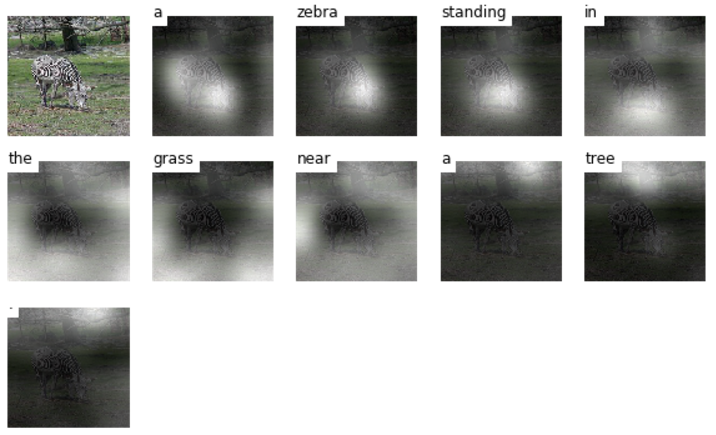

##### (2) Generated caption: A zebra standing in the grass near a tree.