This project is an integrated hardware and software system that helps deaf individuals communicate with hearing users. It was developed as part of the Engineering Clinics Course (ECS) at VIT-AP University and addresses a Smart India Hackathon (SIH) problem statement.

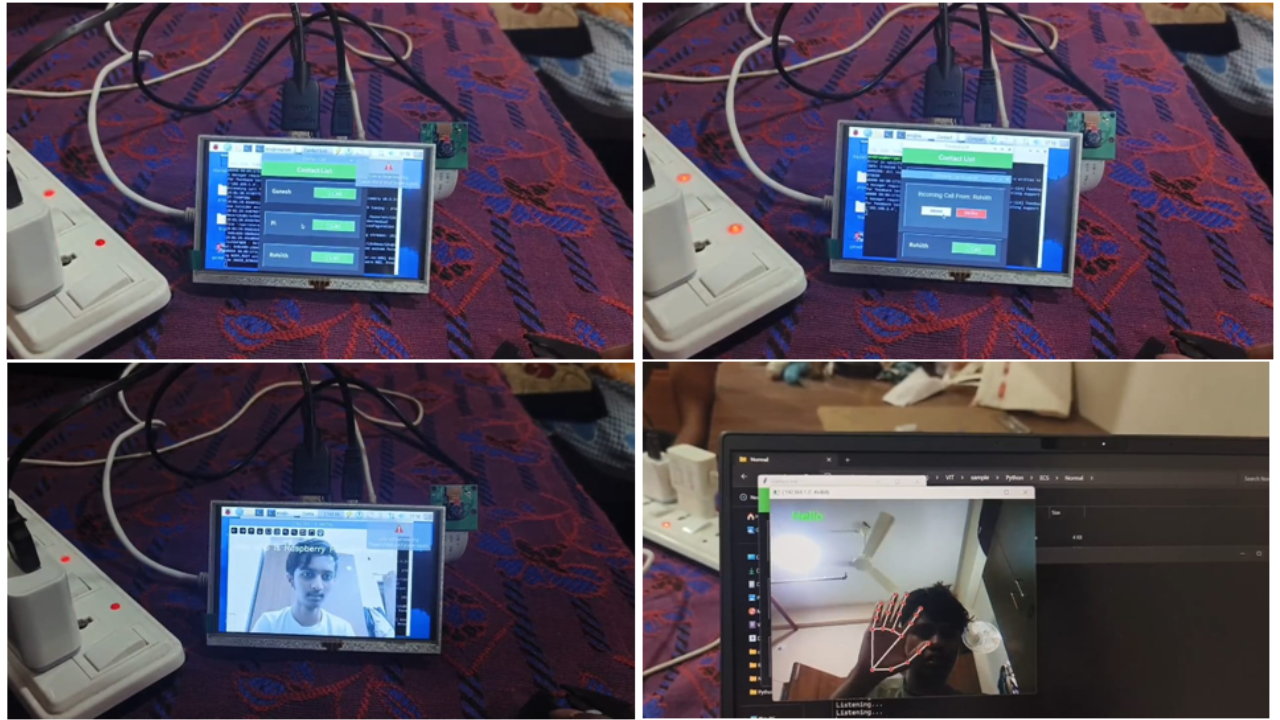

The system supports video calls over a local network, so calls incur no external communication costs, and combines Raspberry Pi hardware with Python software. It also translates sign language gestures into words and converts speech to text, so both parties can follow the conversation.

- Operates over a local network (Ethernet via eth0 or Wi-Fi via wlan).
- Devices communicate using static IPs through Python's `socket` library.
- Supports video calls between:
  - devices designed for deaf individuals, and
  - regular desktops or other devices.
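The README does not say how video frames are delimited on the socket. Because TCP delivers a continuous byte stream rather than discrete messages, a common approach is to prefix each frame with its length. This is a minimal sketch, assuming TCP sockets and frames already encoded to bytes (for example as JPEG); the function names and the 4-byte header are illustrative, not taken from the project.

```python
import socket
import struct

# Hypothetical framing: 4-byte big-endian unsigned length, then the payload.
HEADER = struct.Struct("!I")


def send_message(sock: socket.socket, payload: bytes) -> None:
    """Send one length-prefixed message, e.g. an encoded video frame."""
    sock.sendall(HEADER.pack(len(payload)) + payload)


def _recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes; recv() may return fewer than requested."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("peer closed the connection mid-message")
        buf.extend(chunk)
    return bytes(buf)


def recv_message(sock: socket.socket) -> bytes:
    """Receive one message written by send_message()."""
    (length,) = HEADER.unpack(_recv_exact(sock, HEADER.size))
    return _recv_exact(sock, length)
```

On a real device the socket would come from connecting to the peer's static IP, for example `socket.create_connection(("192.168.1.20", 5000))`, where the address and port are placeholders.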

- Dataset and Mapping:
  - Static gestures for 24 commonly used words (mapped to important phrases).
  - Each gesture is represented by the angles between hand landmarks, captured with MediaPipe.
  - All angles are saved in a pickle file for fast loading.

- Translation Process:
  - MediaPipe detects hand landmarks in real time.
  - The computed angles are compared with the recorded reference dataset to identify the gesture.
  - The corresponding word is displayed on the user interface.
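A minimal sketch of the angle-based approach described above. It assumes MediaPipe Hands' 21-landmark layout, a hypothetical choice of 15 finger joints, and a nearest-match comparison by mean absolute angle difference with a hypothetical 15° threshold; the README does not specify which angles are used or how they are compared.

```python
import math
import pickle
from typing import Dict, List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]

# Hypothetical joint triples (a, b, c); the angle is measured at landmark b.
# Indices follow MediaPipe Hands' 21-landmark layout: 0 = wrist,
# 1-4 thumb, 5-8 index, 9-12 middle, 13-16 ring, 17-20 pinky.
JOINTS = [
    (0, 1, 2), (1, 2, 3), (2, 3, 4),
    (0, 5, 6), (5, 6, 7), (6, 7, 8),
    (0, 9, 10), (9, 10, 11), (10, 11, 12),
    (0, 13, 14), (13, 14, 15), (14, 15, 16),
    (0, 17, 18), (17, 18, 19), (18, 19, 20),
]


def angle_at(a: Point, b: Point, c: Point) -> float:
    """Angle ABC in degrees, i.e. between vectors B->A and B->C."""
    ba = [a[i] - b[i] for i in range(3)]
    bc = [c[i] - b[i] for i in range(3)]
    norm = math.hypot(*ba) * math.hypot(*bc)
    if norm == 0:
        return 0.0  # degenerate: two landmarks coincide
    cos = sum(x * y for x, y in zip(ba, bc)) / norm
    # Clamp to [-1, 1] so floating-point drift cannot break acos().
    return math.degrees(math.acos(max(-1.0, min(1.0, cos))))


def hand_angles(landmarks: Sequence[Point]) -> List[float]:
    """Convert 21 (x, y, z) landmarks into a fixed-length angle vector."""
    return [angle_at(landmarks[a], landmarks[b], landmarks[c]) for a, b, c in JOINTS]


def save_dataset(dataset: Dict[str, List[float]], path: str) -> None:
    """Store the {word: angle vector} mapping in a pickle file."""
    with open(path, "wb") as f:
        pickle.dump(dataset, f)


def load_dataset(path: str) -> Dict[str, List[float]]:
    """Load the {word: angle vector} mapping (only unpickle trusted files)."""
    with open(path, "rb") as f:
        return pickle.load(f)


def match_gesture(angles: Sequence[float], dataset: Dict[str, List[float]],
                  max_error: float = 15.0) -> Optional[str]:
    """Return the word whose stored angles are closest by mean absolute
    difference in degrees, or None if no word is within max_error."""
    best_word, best_err = None, float("inf")
    for word, ref in dataset.items():
        err = sum(abs(x - y) for x, y in zip(angles, ref)) / len(ref)
        if err < best_err:
            best_word, best_err = word, err
    return best_word if best_err <= max_error else None
```

With the legacy `mediapipe.solutions.hands` API, one detected hand's landmarks can be converted with `[(lm.x, lm.y, lm.z) for lm in hand.landmark]` before calling `hand_angles`. Joint angles do not change with the hand's distance from the camera, which is one reason they make a convenient feature.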

- Speech-to-text: converts spoken words into text and displays it on the UI, so deaf users can read what is being said.

- Raspberry Pi 4 with:
  - XPT2046 5-inch touchscreen.
  - Camera module for video capture.
  - Vibration motor for incoming call alerts (triggered via GPIO).
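The README states only that the motor is triggered via GPIO. Here is a hedged sketch of an alert pattern, written against an injected `set_motor` callable so the timing logic can be tested without hardware; the pattern and the BCM pin number in the comments are hypothetical.

```python
import time
from typing import Callable, Sequence, Tuple

# Hypothetical "ring" pattern as (on_seconds, off_seconds) pairs.
RING_PATTERN: Sequence[Tuple[float, float]] = [(0.4, 0.2), (0.4, 0.2), (0.8, 0.6)]


def buzz(pattern: Sequence[Tuple[float, float]],
         set_motor: Callable[[bool], None],
         sleep: Callable[[float], None] = time.sleep) -> None:
    """Switch the motor on and off following the pattern."""
    try:
        for on_s, off_s in pattern:
            set_motor(True)
            sleep(on_s)
            set_motor(False)
            sleep(off_s)
    finally:
        # Always leave the motor off, even if the loop is interrupted.
        set_motor(False)


# On the Pi, assuming RPi.GPIO and a hypothetical BCM pin 18:
#   import RPi.GPIO as GPIO
#   GPIO.setmode(GPIO.BCM)
#   GPIO.setup(18, GPIO.OUT)
#   buzz(RING_PATTERN, lambda on: GPIO.output(18, GPIO.HIGH if on else GPIO.LOW))
```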

- Simple interface built with Tkinter.
- Displays the list of available devices on the network, retrieved from the server.
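A sketch of how the UI might fetch and prepare the device list, assuming the server returns JSON shaped like `[{"name": ..., "ip": ...}]`; that schema, the URL, and the helper names are hypothetical, and the server itself is not shown.

```python
import json
import urllib.request
from typing import List, NamedTuple


class Device(NamedTuple):
    name: str
    ip: str


def parse_devices(payload: str, own_ip: str = "") -> List[Device]:
    """Parse the hypothetical JSON device list, drop this device itself,
    and sort the rest by name for display."""
    devices = [Device(d["name"], d["ip"]) for d in json.loads(payload)]
    return sorted((d for d in devices if d.ip != own_ip), key=lambda d: d.name.lower())


def fetch_devices(server_url: str, own_ip: str = "", timeout: float = 3.0) -> List[Device]:
    """GET the device list from the server (the URL is a placeholder)."""
    with urllib.request.urlopen(server_url, timeout=timeout) as resp:
        return parse_devices(resp.read().decode("utf-8"), own_ip)
```

Each `Device` could then be shown in a Tkinter `Listbox` with `listbox.insert(tk.END, f"{d.name} ({d.ip})")`.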

- Server Setup:
  - A FastAPI server provides the list of connected devices with their names and static IPs.

- Communication:
  - Devices exchange video frames and other data using socket programming.

- Gesture Recognition:
  - MediaPipe detects hand landmarks.
  - The computed angles are compared with the reference dataset stored in a pickle file.
  - Recognized gestures are mapped to specific words and displayed on the screen.

- User Interaction:
  - Each device shows the list of connected devices on the UI.
  - The user selects a device to start a video call.

- Alerts:
  - Incoming calls trigger the vibration motor to notify deaf users.

- Raspberry Pi 4

- XPT2046 5-inch touchscreen

- Camera module

- Vibration motor

- Local network setup (Ethernet or Wi-Fi)

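- Programming Language: Python

- Libraries and Tools:
  - MediaPipe (hand landmark detection)
  - Tkinter (user interface)
  - FastAPI (device-list server)
  - `socket` and `pickle` from the Python standard library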


This project is licensed under the MIT License. See the LICENSE file for details.