Demonstration: https://example.batchai.kailash.cloud:8443

batchai has a simple goal: run one command to scan and process an entire codebase, letting AI perform bulk tasks such as automatically finding and fixing common bugs or generating unit tests. Think of it as an AI-driven counterpart to SonarQube for error checking. batchai complements tools like Copilot and Cursor: there is no need to copy and paste between chat windows and open files, or to manually add files to the AI’s context.

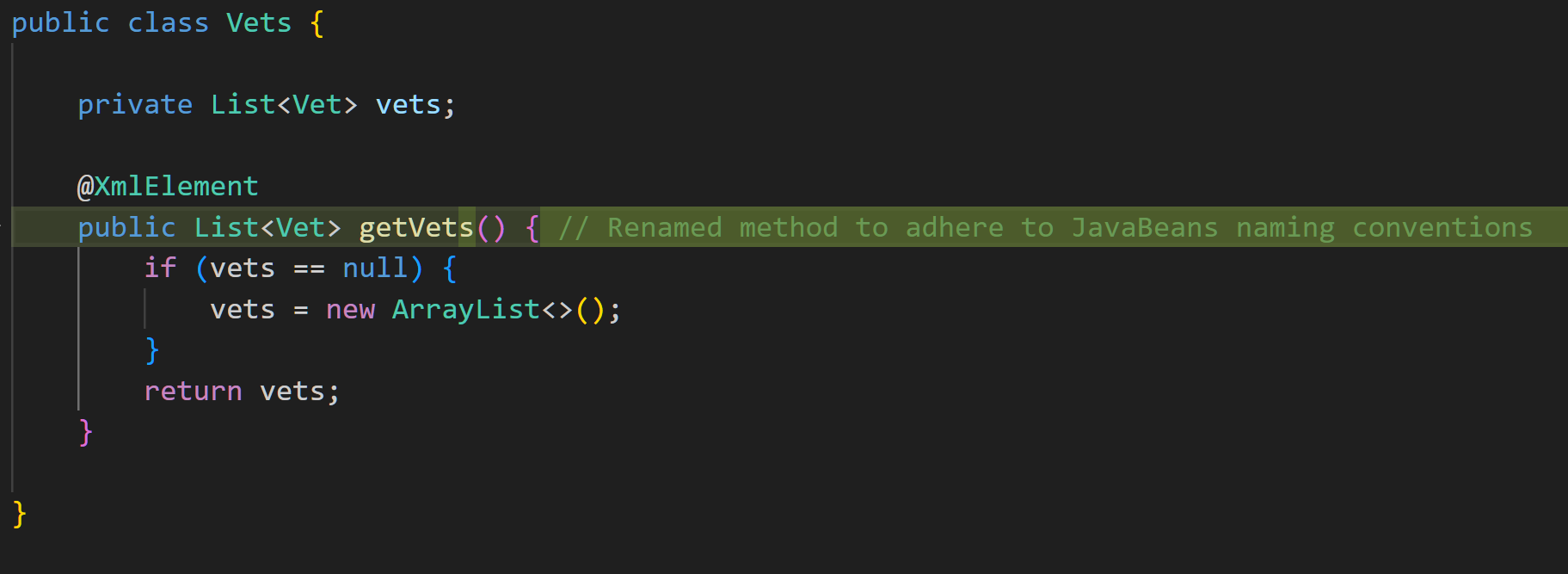

To demonstrate with the spring-petclinic project (cloned from https://github.com/spring-projects/spring-petclinic), I ran the following batchai command in the cloned directory:

    batchai check --fix

And here are the results I got:

The full results are as follows:

The results above were generated by running batchai with the OpenAI gpt-4o-mini model.

Additionally, I’ve just launched a demo website (https://example.batchai.kailash.cloud:8443) where you can submit your own GitHub repositories for batchai to run bulk code checks and generate unit test code. Given the high cost of using OpenAI, the demo site uses the open-source model qwen2.5-coder:7b-instruct-fp16 (which does not perform as well as gpt-4o-mini), running on my own Ollama instance. Because resources are limited, submitted tasks are executed from a queue.

Here are some interesting findings from dogfooding batchai on my own projects over the past few weeks:

- AI often detects issues that traditional tools (like SonarQube) might miss, and it can fix them directly.

- The AI doesn’t report all issues at once, so I need to run it multiple times to catch everything.

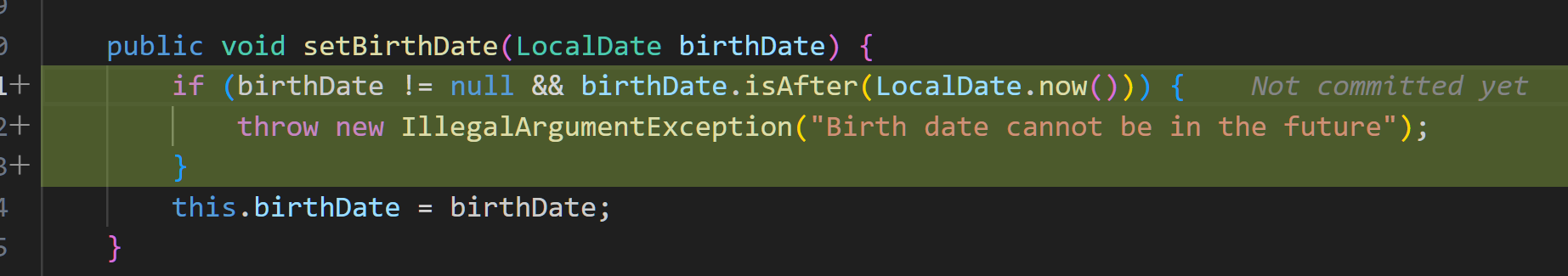

Also, here’s an example of where the AI didn’t quite get it right:

LLM hallucinations are unavoidable, so to prevent AI errors from overwriting existing changes, I designed batchai to work only in a clean Git repository: if there are any unstaged files, batchai refuses to run. This way, we can use `git diff` to review exactly what batchai changed and simply revert any mistakes. This review step remains essential.
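The clean-repository rule can also be approximated outside batchai. The following is a minimal shell sketch, not batchai's actual implementation: `is_clean_tree` and `run_if_clean` are hypothetical helper names, and the check is stricter than the rule described above because it also treats staged and untracked files as changes.

```shell
# Sketch of a "clean working tree" guard (hypothetical helpers, not part of batchai).

# Succeeds only when `git status --porcelain` prints nothing, i.e. the
# directory has no staged, unstaged, or untracked changes.
# Returns 2 if the directory is not a Git repository.
is_clean_tree() {
  local dir="${1:-.}"
  local status
  status="$(git -C "$dir" status --porcelain)" || return 2
  [ -z "$status" ]
}

# Runs the given command inside the directory, but only if the tree is clean.
run_if_clean() {
  local dir="$1"
  shift
  if ! is_clean_tree "$dir"; then
    echo "refusing to run: $dir has uncommitted changes" >&2
    return 1
  fi
  (cd "$dir" && "$@")
}
```

For example, `run_if_clean /data/spring-petclinic batchai check --fix .` would start the fix only on a clean tree. Afterwards, `git diff` shows what changed, and `git restore .` (or `git checkout -- .` on older Git versions) discards modifications to tracked files.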

- Batch Code Check: Reports issues to the console, saves them as a check report, and optionally fixes the code directly.

- Batch Test Code Generation.

- Customized Prompts.

- File Ignoring: Specifies files to ignore, respecting both `.gitignore` and an additional `.batchai_ignore` file.

- Target Specification: Allows specifying target directories and files within the Git repository.

- Implemented in Go: Results in a single executable binary that works on Mac OSX, Linux, and Windows.

- Diff: Displays colorized diffs in the console.

- LLM Support: Supports OpenAI-compatible LLMs, including Ollama.

- I18N: Supports internationalized comment/explanation generation.

Currently, batchai only supports bulk code checks and generating unit test code, but planned features include code explanation and comment generation, refactoring, and more — all to be handled in bulk. Another goal is to give batchai a broader understanding of the project by building a cross-file code symbol index, which should help the AI work more effectively.

Of course, suggestions and feature requests are always welcome at the Issues page. Feel free to contribute, and we can discuss them together.

- Download the latest executable binary from here and add it to your $PATH. For Linux and Mac OSX, remember to run `chmod +x ...` to make the binary executable.

- Clone the demo project. The following steps assume the cloned project directory is `/data/spring-petclinic`:

      cd /data
      git clone https://github.com/spring-projects/spring-petclinic
      cd spring-petclinic

  In this directory, create a `.env` file and set `OPENAI_API_KEY` in it. Below is an example:

      OPENAI_API_KEY=change-it

  For Ollama, you can refer to my example docker-compose.yml.

- CLI Examples:

  - Report issues to the console (also saved to `build/batchai`):

        cd /data/spring-petclinic
        batchai check . src/main/java/org/springframework/samples/petclinic/vet/Vets.java

  - Directly fix the target files via the `--fix` option:

        cd /data/spring-petclinic
        batchai check --fix . src/main/java/org/springframework/samples/petclinic/vet/Vets.java

  - Run `batchai` on main Java code only:

        cd /data/spring-petclinic
        batchai check . src/main/java/

  - Run `batchai` on the entire project:

        cd /data/spring-petclinic
        batchai check .

  - Generate unit test code for the entire project:

        cd /data/spring-petclinic
        batchai test .
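Because the AI does not report every issue in a single run, and batchai only works in a clean repository, repeated runs need a commit between passes. The sketch below shows one way this could be scripted. `fix_until_stable`, `BATCHAI`, and `MAX_PASSES` are hypothetical names introduced for this example, not batchai options. The loop only looks at tracked files, so it assumes that untracked output (such as reports under `build/batchai`) does not block the next pass.

```shell
# Sketch: repeat `batchai check --fix .` until a pass changes nothing or a
# pass limit is reached. Each pass is committed separately so it can be
# reviewed or reverted on its own.
# BATCHAI (command to run) and MAX_PASSES are assumptions of this example.
fix_until_stable() {
  local repo="$1"
  local max_passes="${MAX_PASSES:-3}"
  local pass=1
  while [ "$pass" -le "$max_passes" ]; do
    echo "pass $pass of $max_passes"
    (cd "$repo" && ${BATCHAI:-batchai} check --fix .) || return 1
    # Stop once a pass leaves tracked files untouched.
    if git -C "$repo" diff --quiet; then
      echo "no changes in pass $pass; stopping"
      return 0
    fi
    # Summarize the pass, then commit so the next pass starts from a clean tree.
    git -C "$repo" --no-pager diff --stat
    git -C "$repo" commit -q -a -m "batchai check --fix, pass $pass" || return 1
    pass=$((pass + 1))
  done
  echo "reached the pass limit ($max_passes)"
  return 0
}
```

Running this on a dedicated branch keeps the AI-made commits separate from other work; each one can then be inspected with `git show` and undone with `git revert` if the change turns out to be wrong.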


Tested and supported models:

- OpenAI series:

  - openai/gpt-4o

  - openai/gpt-4o-mini

  Other OpenAI models should work too.

- Ali Tongyi Qwen series (also available via Ollama):

  - qwen2.5-coder-7b-instruct

  - qwen2.5-coder:7b-instruct-fp16

  Other Qwen models should work too.

To add more LLMs, follow the configuration in res/static/batchai.yaml. This works as long as the LLM exposes an OpenAI-compatible API.

- Optional configuration file:

  You can provide an optional configuration file: `${HOME}/batchai/batchai.yaml`. For a full example, refer to res/static/batchai.yaml.

- Environment file:

  You can also configure `batchai` via an environment file `.env` located in the target Git repository directory. Refer to res/static/batchai.yaml for all available environment variables, and res/static/batchai.env for their default values.

- Ignore specific files:

  `batchai` ignores the directories and files matched by `.gitignore` files. This is usually sufficient, but if there are additional files or directories that cannot be ignored by Git but should not be processed by `batchai`, we can specify them in `.batchai_ignore` files. The rules are written in the same way as in `.gitignore`.

- Customized Prompts:

  Refer to `BATCHAI_CHECK_RULE_*` and `MY_CHECK_RULE_*` in [res/static/batchai.yaml].
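As an illustration of the ignore rules described above, the following sketch writes an example `.batchai_ignore` into the current directory. The listed paths and patterns are hypothetical and only show the `.gitignore`-style syntax; adjust them to files that are tracked by Git in your project but should not be processed by batchai.

```shell
# Write an example .batchai_ignore into the current directory.
# All patterns below are hypothetical; the syntax follows .gitignore.
cat > .batchai_ignore <<'EOF'
# Generated sources that are committed but should not be rewritten by AI
src/main/generated/
# Vendored third-party code
third_party/
# Large SQL fixtures
*.sql
EOF
```

Since batchai only runs in a clean Git repository, the new file presumably needs to be committed before the next run.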

MIT