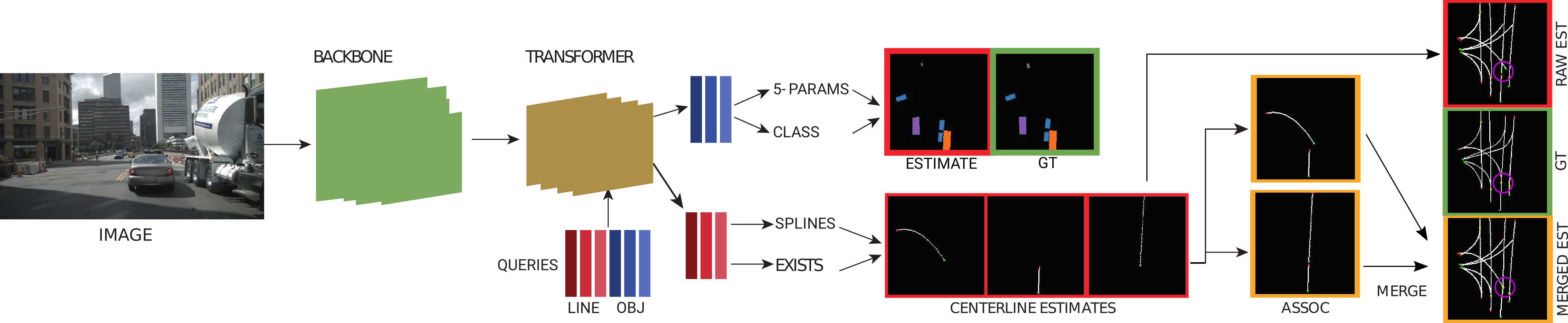

Official code for "Structured Bird’s-Eye-View Traffic Scene Understanding from Onboard Images" (ICCV 2021)

We provide support for the nuScenes and Argoverse datasets.

- Make sure you have installed the nuScenes and/or Argoverse devkits and datasets

- Set the paths in the configs/deafults.yml file

- Run the make_labels.py file for the dataset you want to use

- If you want to use zoom augmentation (currently nuScenes only), run src/data/nuscenes/sampling_grid_maker.py. The path where the .npy file is saved is set inside sampling_grid_maker.py itself.

- Use train_tr.py to train the transformer-based model or train_prnn.py to train the Polygon-RNN-based model

- We recommend using the Cityscapes-pretrained DeepLab model (link provided below) as the backbone when training your own model

- Validator files can be used for testing. Links to the trained models are given below.

The Cityscapes-trained DeepLabv3 model is at: https://data.vision.ee.ethz.ch/cany/STSU/deeplab.pth

The nuScenes-trained Polygon-RNN-based model is at: https://data.vision.ee.ethz.ch/cany/STSU/prnn.pth

The nuScenes-trained transformer-based model is at: https://data.vision.ee.ethz.ch/cany/STSU/transformer.pth
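If you prefer to fetch the three checkpoints with a script, the following is a minimal sketch using only the Python standard library. The `checkpoints` target directory and the helper names `local_path` and `download_checkpoints` are our own assumptions for illustration and are not part of this repository. Point your config at wherever you store the files.

```python
from pathlib import Path
from urllib.parse import urlparse
from urllib.request import urlretrieve

# Checkpoint URLs exactly as listed in this README.
CHECKPOINT_URLS = [
    "https://data.vision.ee.ethz.ch/cany/STSU/deeplab.pth",
    "https://data.vision.ee.ethz.ch/cany/STSU/prnn.pth",
    "https://data.vision.ee.ethz.ch/cany/STSU/transformer.pth",
]


def local_path(url, target_dir):
    """Map a URL to a local file path using the last component of the URL path."""
    return Path(target_dir) / Path(urlparse(url).path).name


def download_checkpoints(target_dir="checkpoints", urls=CHECKPOINT_URLS):
    """Download every checkpoint that is not already on disk; return the local paths.

    `target_dir` is a hypothetical location, not one the repository expects.
    """
    target = Path(target_dir)
    target.mkdir(parents=True, exist_ok=True)
    paths = []
    for url in urls:
        dest = local_path(url, target)
        if not dest.exists():  # skip files downloaded by an earlier run
            urlretrieve(url, str(dest))
        paths.append(dest)
    return paths


# Example usage (performs network access when uncommented):
# for path in download_checkpoints():
#     print(path)
```

Files that already exist are skipped, so the script can be re-run after an interrupted download. Delete any partially written file first.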

The implementation of the metrics can be found in src/utils/confusion.py. Please refer to the paper for explanations on the metrics.