Nextflow plugin for reading and writing Quilt packages as a FileSystem

nf-quilt (v0.3.2 or later) is a Nextflow plugin developed by Quilt Data that enables you to read and write directly to Quilt packages using quilt+s3 URIs wherever your Nextflow pipeline currently uses s3 URIs.

In v0.8+, the plugin can even be used with "native" URIs, and it will automatically register a Quilt package at the root of the bucket.

Inspired by the original nf-quilt plugin (v0.2.0) developed by Seqera Labs.

This plugin allows your existing pipelines, without modification, to read and write versioned Quilt packages stored on Amazon S3.

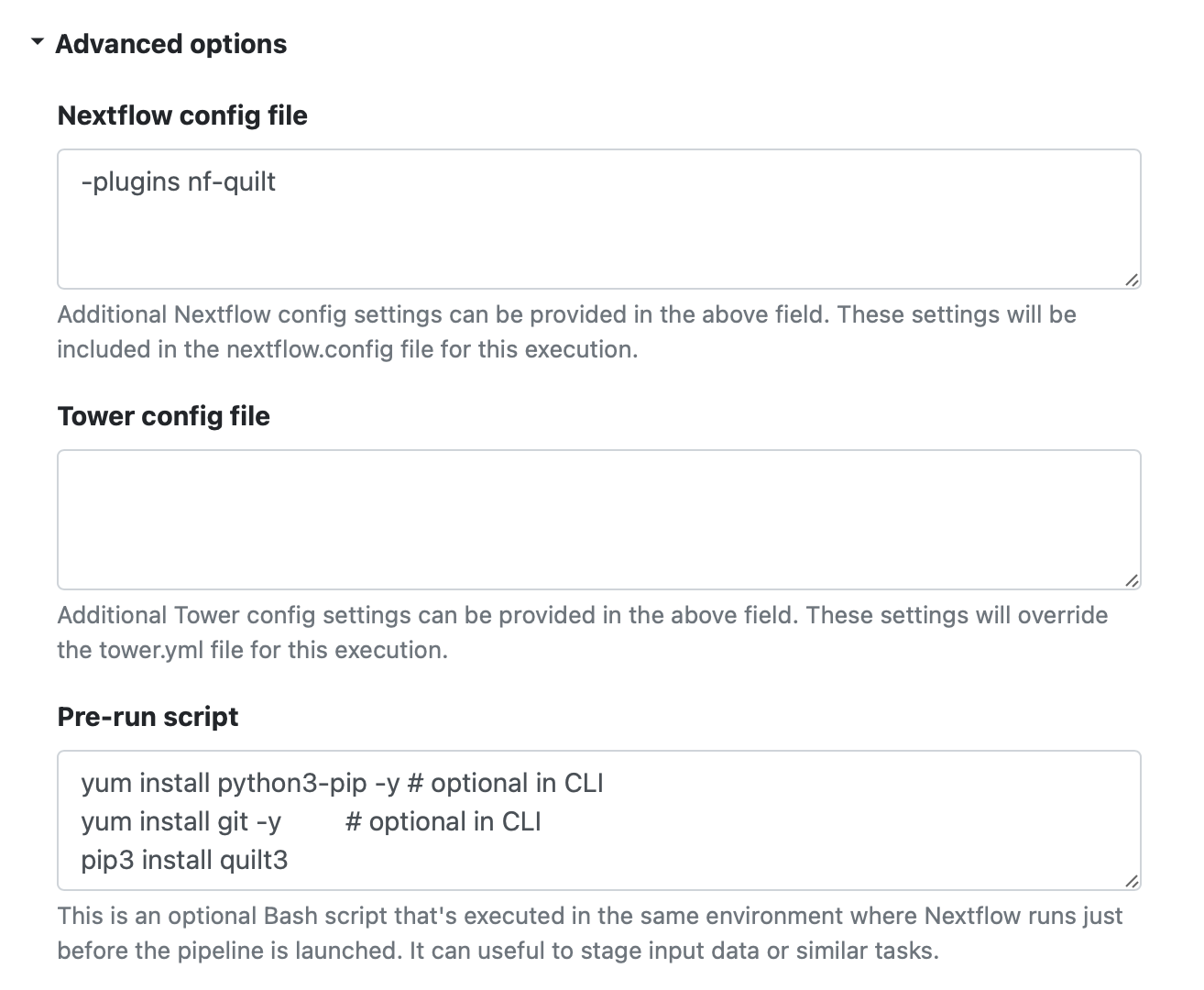

Use the following three steps to configure Nextflow Tower or your command-line environment.

[Note: versions 0.7.0 and later no longer require the quilt3 Python client.]

- Enable the nf-quilt plugin

The usual way to enable a plugin is to add the following to your nextflow.config file,

or (in Tower) the "Advanced Options -> Nextflow config file":

plugins {

id 'nf-quilt'

}

You can alternatively specify the plugin on the command line, e.g.:

nextflow run ./main.nf -profile standard -plugins nf-quilt --outdir 'quilt+s3://bucket#package=prefix/suffix'

- Obtain a Quilt+ URI for each package

Each Quilt+ package URI you read from or write to has the form:

quilt+s3://bucket#package=prefix/suffix

You must have the appropriate read or write permissions for that bucket, and your environment must have the corresponding AWS credentials.

In the Quilt catalog, you can find the Quilt+ URI for an existing package

in the <> CODE | URI section at the top.

You can also manually create URIs for new packages that don't yet exist.

See 'Advanced Options' below for more details.
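To make the URI layout concrete, here is a minimal Python sketch that splits a Quilt+ URI into its parts using only the standard library. The function name `parse_quilt_uri` is hypothetical and is not part of nf-quilt or quilt3; the sketch also assumes that any query string precedes the `#` fragment, as in ordinary URI syntax.

```python
from urllib.parse import parse_qs, urlsplit


def parse_quilt_uri(uri: str) -> dict:
    """Split a Quilt+ URI into bucket, package name, and extra parameters.

    Hypothetical helper for illustration; not part of nf-quilt.
    """
    parts = urlsplit(uri)
    if parts.scheme != "quilt+s3":
        raise ValueError(f"not a quilt+s3 URI: {uri!r}")
    # The fragment holds '&'-separated key=value pairs, e.g. package=prefix/suffix.
    fragment = {key: values[0] for key, values in parse_qs(parts.fragment).items()}
    # The optional query string carries metadata keys.
    query = {key: values[0] for key, values in parse_qs(parts.query).items()}
    return {
        "bucket": parts.netloc,
        "package": fragment.get("package"),
        "fragment": fragment,
        "query": query,
    }


info = parse_quilt_uri("quilt+s3://bucket#package=prefix/suffix")
print(info["bucket"], info["package"])
```

A check like this can catch a malformed URI (for example, a missing `package=` fragment) before a pipeline is launched.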

- Set the appropriate parameter(s) in your pipeline

For nf-core pipelines, use --input to read and --outdir to write.

Otherwise, use whatever parameter is passed to Channel.fromPath as input and to publishDir as outdir.

You can also specify these as YAML to pass to -params-file:

input: "quilt+s3://quilt-example#package=examples/hurdat"

outdir: "quilt+s3://nf-core-gallery#package=test/hurdat"

Note that --key on the command-line corresponds to params.key in your script.
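If you generate parameter files programmatically, the same two keys can be written as JSON, which Nextflow's -params-file option accepts alongside YAML. The sketch below is only an illustration: the helper name `render_params_json` is hypothetical, and the URIs are the example values shown above.

```python
import json


def render_params_json(input_uri: str, outdir_uri: str) -> str:
    """Return the text of a params file with 'input' and 'outdir' keys.

    Hypothetical helper for illustration; not part of nf-quilt.
    """
    params = {"input": input_uri, "outdir": outdir_uri}
    return json.dumps(params, indent=2)


text = render_params_json(
    "quilt+s3://quilt-example#package=examples/hurdat",
    "quilt+s3://nf-core-gallery#package=test/hurdat",
)
print(text)
```

Saving that text as, say, params.json lets you pass it with -params-file params.json instead of repeating --input and --outdir on the command line.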

- Optional: use a pre-release plugin

If a plugin is not yet available in the Nextflow plugin registry, you can use a pre-release version. From the command-line, do, e.g.:

# export NXF_VER=23.04.3

export LOG4J_DEBUG=true # for verbose logging

export NXF_PLUGINS_TEST_REPOSITORY=https://github.com/quiltdata/nf-quilt/releases/download/0.8.0/nf-quilt-0.8.0-meta.json

nextflow run main.nf -plugins nf-quilt@0.8.0

For Tower, you can use the "Pre-run script" to set the environment variables.

There are a number of additional parameters you can add to Quilt+ URIs, in order to customize the behavior of the plugin:

- Fragment Parameters:
  - catalog: specify the DNS hostname of the Quilt catalog to use (default: open.quiltdata.com)
  - force: force package update (even if already exists or local copy out-of-date)
  - package: specify the name of the package to read or write (default: .)
  - path: specify a path within the package to read or write (default: .) [not fully supported yet]
  - workflow: specify the name of a workflow to use for metadata validation (default: none)

- Query Parameters: also stored as package-level metadata
  - msg: specify the commit message to use when saving the package
  - readme: specify a string for the package README_NF_QUILT.md file (will substitute "${variables}"), or SKIP to not create a README
  - metadata: specify SKIP to not push any new metadata (implicit or explicit)
  - any other key: specify any other metadata key to store in the package
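The fragment and query parameters listed above can be combined into one URI. The following Python sketch shows one way to assemble it; `build_quilt_uri` is a hypothetical helper, not part of nf-quilt, and it assumes the query string comes before the `#` fragment (standard URI ordering, which also matches the QUERY and FRAGMENT variables used by the Makefile in this repository).

```python
from urllib.parse import urlencode


def build_quilt_uri(bucket, package, query=None, fragment=None):
    """Assemble a Quilt+ URI from a bucket, package name, and optional parameters.

    Hypothetical helper for illustration; not part of nf-quilt.
    """
    # 'package' always comes first in the fragment; extra fragment keys follow.
    fragment_items = {"package": package, **(fragment or {})}
    # Fragment values are left unescaped so that '/' in package names stays readable.
    fragment_text = "&".join(f"{key}={value}" for key, value in fragment_items.items())
    # Query values are percent-encoded because they may contain spaces or symbols.
    query_text = urlencode(query or {})
    uri = f"quilt+s3://{bucket}"
    if query_text:
        uri += f"?{query_text}"
    return f"{uri}#{fragment_text}"


print(build_quilt_uri(
    "bucket",
    "test/hurdat",
    query={"mkey1": "val1", "mkey2": "val2"},
    fragment={"workflow": "my_workflow"},
))
```

Remember to single-quote the resulting URI on the command line so the shell does not interpret `&`, `?`, or `#`.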

See below for more details. When running from the git repository, you can use the Makefile to test the various options, as long as you set a WRITE_BUCKET:

export WRITE_BUCKET=bucket-with-write-access

make pkg-test # create "test/hurdat" package on s3://$WRITE_BUCKET

Sometimes you may want to ensure the created package contains specific metadata.

This is done using Quilt workflows.

Specify the workflow name as an additional workflow= fragment parameter,

and any metadata properties as part of the query string.

make pkg-test QUERY='?mkey1=val1&mkey2=val2' FRAGMENT='&workflow=my_workflow'

Note that specifying a workflow means that package creation will fail (and nothing will be saved) if the query string does not contain all the required metadata, so you should carefully test it before running long jobs.
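One inexpensive way to test before a long job is to check locally that the query string names every key you expect the workflow to require. The sketch below is an assumption-laden illustration: `missing_metadata` is a hypothetical helper, the list of required keys must be copied by hand from your own workflow definition, and the check only looks at key presence. The actual validation is still performed by the Quilt workflow when the package is created.

```python
from urllib.parse import parse_qs, urlsplit


def missing_metadata(uri: str, required_keys) -> list:
    """Return the required metadata keys that are absent from the URI's query string.

    Hypothetical pre-flight check for illustration; not part of nf-quilt.
    """
    # keep_blank_values=True so that 'key=' still counts as a supplied key.
    supplied = parse_qs(urlsplit(uri).query, keep_blank_values=True)
    return sorted(key for key in required_keys if key not in supplied)


uri = "quilt+s3://bucket?mkey1=val1#package=test/hurdat&workflow=my_workflow"
print(missing_metadata(uri, ["mkey1", "mkey2"]))
```

An empty list means every listed key is present; anything else names the keys to add before launching the pipeline.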

Version 0.3.4 and later allow you to customize both the msg and readme via metadata query keys:

make pkg-test QUERY='?msg=text+str&readme=GStr+%24msg+%24now+%24%7Bmeta[%22quilt%22]%7D'

The readme parameter is a Groovy GString template which expands the ${variables}:

- msg: the current commit message
- now: the ISO 8601 date and time
- meta: the complete metadata (very large! use only subsets)
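Because the readme template contains characters such as `$`, `{`, and `"`, it has to be percent-encoded before it goes into the query string. The Python sketch below shows one way to produce such a string with the standard library; `encode_query` is a hypothetical helper, not part of nf-quilt. Note that `urlencode` also escapes the square brackets, which decodes to the same template text.

```python
from urllib.parse import parse_qs, urlencode


def encode_query(msg: str, readme: str) -> str:
    """Percent-encode a commit message and readme template as a query string.

    Hypothetical helper for illustration; not part of nf-quilt.
    """
    # urlencode turns spaces into '+' and escapes '$', '{', '}', '[', ']' and '"'.
    return "?" + urlencode({"msg": msg, "readme": readme})


query = encode_query("text str", 'GStr $msg $now ${meta["quilt"]}')
print(query)

# Decoding recovers the original template text unchanged.
print(parse_qs(query.lstrip("?"))["readme"][0])
```

The result can be pasted into the QUERY variable of the make command, inside single quotes so the shell leaves it untouched.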

To quickly run nf-quilt from this GitHub repository:

git clone https://github.com/quiltdata/nf-quilt.git

cd nf-quilt

make test-all # runs unit tests and installs dependencies

export WRITE_BUCKET=bucket-with-write-access

make pkg-test # create "test/hurdat" package on s3://$WRITE_BUCKET

./launch.sh run nf-core/sarek -profile test,docker -plugins nf-quilt \

--outdir "quilt+s3://$WRITE_BUCKET#package=nf-quilt/sarek"

If you want to use an unpublished plugin, you must run it with a development version of Nextflow.

The simplest way to do that is to pull them both directly from GitHub:

git clone https://github.com/nextflow-io/nextflow.git

git clone https://github.com/quiltdata/nf-quilt.git

cd ./nf-quilt

You can compile and run unit tests with:

make check

If this is your first time using Nextflow, you may also need to install a recent version of Java for your platform. Nextflow itself will take care of all the other dependencies.

You can verify and compile Nextflow with:

make nextflow

To verify that the plugin, Nextflow, and your AWS credentials have been properly installed, type:

./launch.sh run ./main.nf -profile standard -plugins $(PROJECT) --pub "quilt+s3://bucket#package=test/hurdat"

Replace "bucket" with an S3 bucket that those credentials can write to.

From inside the nf-quilt directory, call ./launch.sh with a path to your pipeline.

For example, with a standard nf-core pipeline like sarek:

./launch.sh run nf-core/sarek -profile test,docker -plugins nf-quilt --outdir "quilt+s3://bucket#package=nf-quilt/sarek"

Otherwise, replace nf-core/sarek with the local path to your pipeline's .nf file,

and replace outdir with the appropriate parameter for publishDir.

The project should be hosted in a GitHub repository whose name matches the name of the plugin, that is, the name of the directory in the plugins folder (e.g. nf-quilt).

If your system is properly configured, use make publish to package, upload, and publish the plugin.

Otherwise, follow these steps:

- Create a file named gradle.properties in the project root containing the following attributes (this file should not be committed to Git):
  - github_organization: the GitHub organisation where the plugin repository is hosted.
  - github_username: the GitHub username granting access to the plugin repository.
  - github_access_token: the GitHub access token required to upload and commit changes to the plugin repository.
  - github_commit_email: the email address associated with your GitHub account.

- Use the following command to package and create a release for your plugin on GitHub:

  ./gradlew :plugins:nf-quilt:upload

- Fork the nextflow-io/plugins repository to one you can write to.

- Use the following command to publish your plugin to your fork:

  ./gradlew :plugins:publishIndex

- Create a pull request to push your changes back to nextflow-io/plugins.