This repository implements traffic sign detection with YOLOv4.

- Making the traffic sign dataset

In this repo, the TT100K dataset is used to train and test the model. To use TT100K, it must first be converted to PASCAL VOC format.

- Automatically splitting the data into training and test sets

- Setting up the YOLOv4 configuration file

- Training and testing the YOLOv4 network

- Evaluating the network

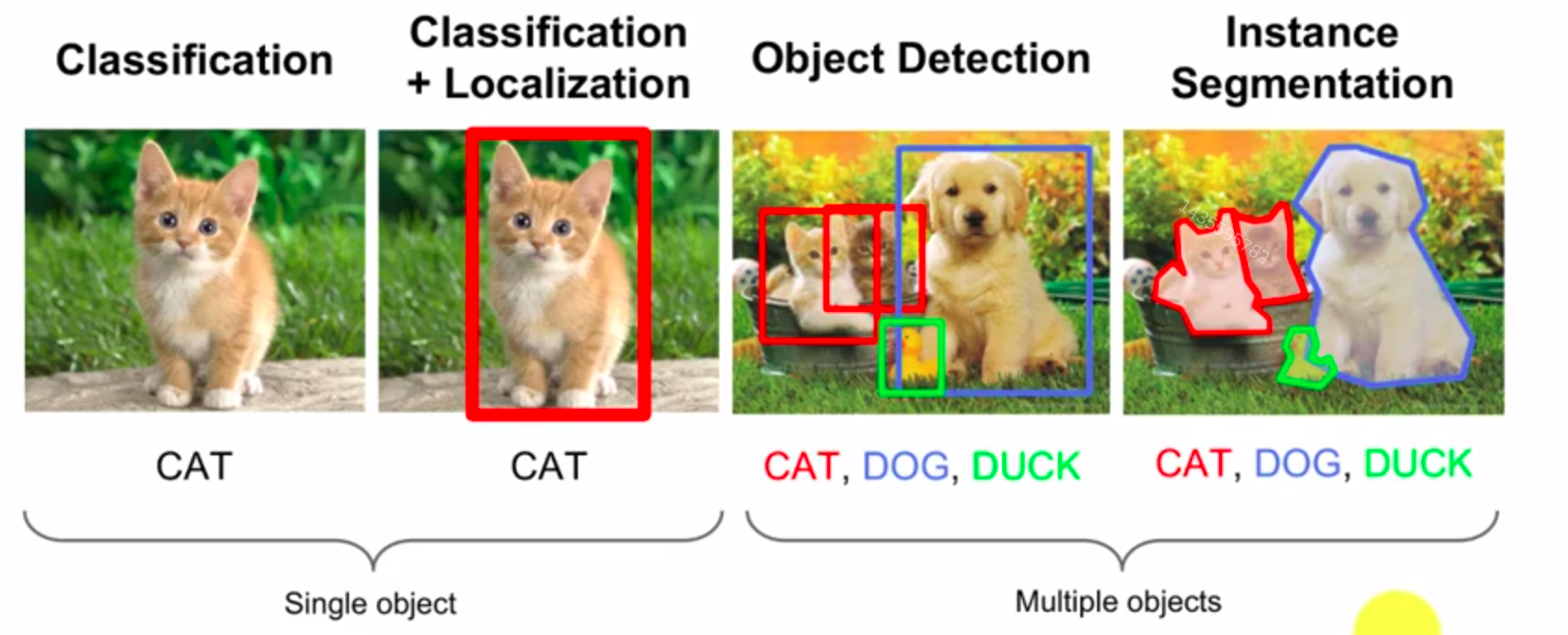

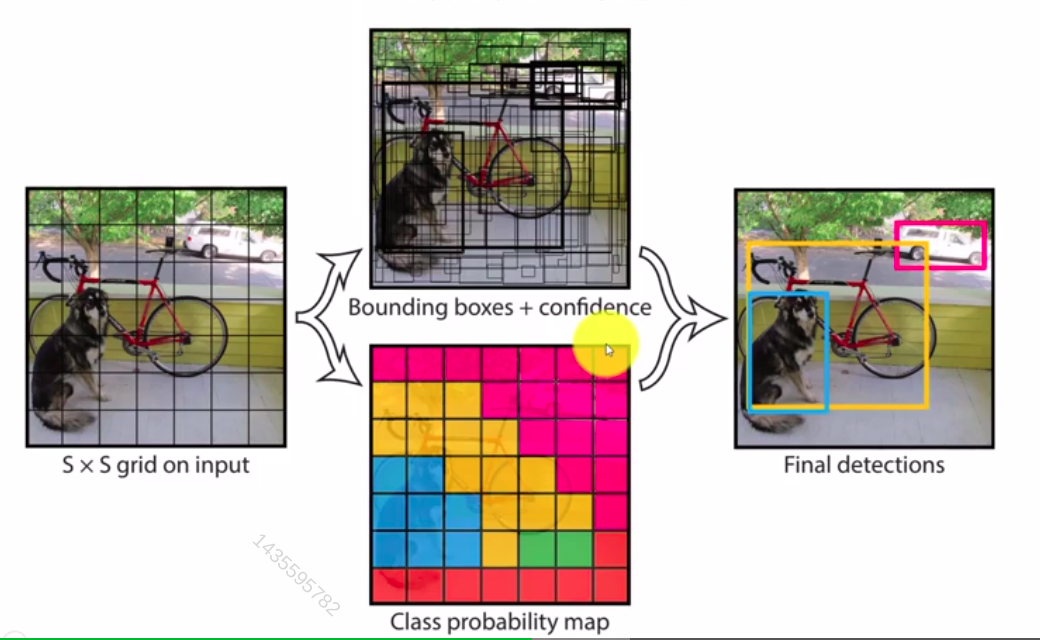

Object detection has two tasks: localisation (bounding box) and recognition (category label).

- For a single object, the problem is classification and localisation.

- For multiple objects, the problem is object detection and instance segmentation.

Localisation and detection:

- Localisation: find a single labelled object in an image.

- Detection: find all objects in an image.

- PASCAL VOC

It includes 20 categories and 11,530 images.

- ImageNet (ILSVRC 2010-2017)

It contains 1000 categories and is used for classification, localisation and detection. It has 470,000 images.

- COCO

COCO (Microsoft Common Objects in Context) has 200,000 images and 80 categories.

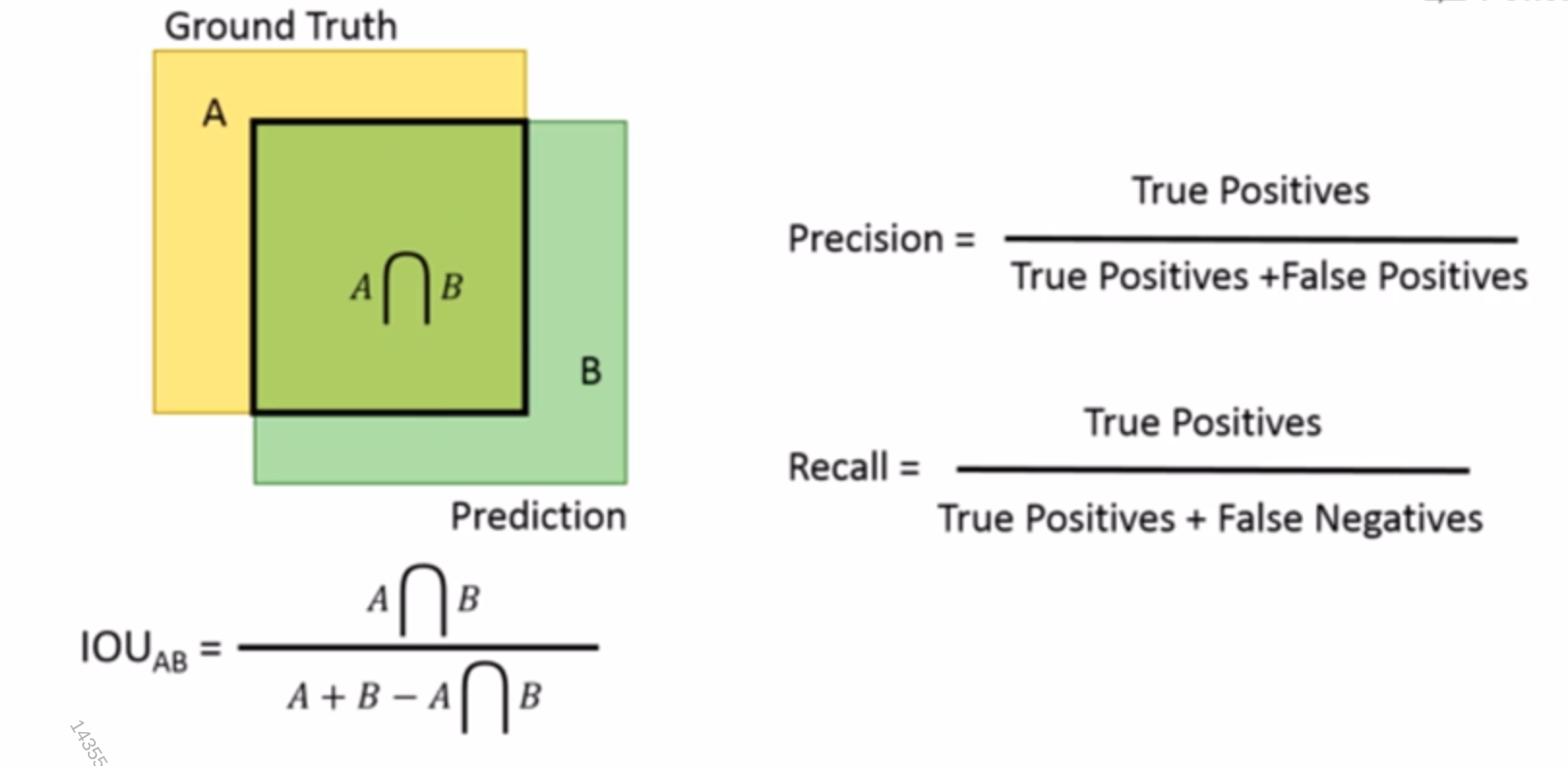

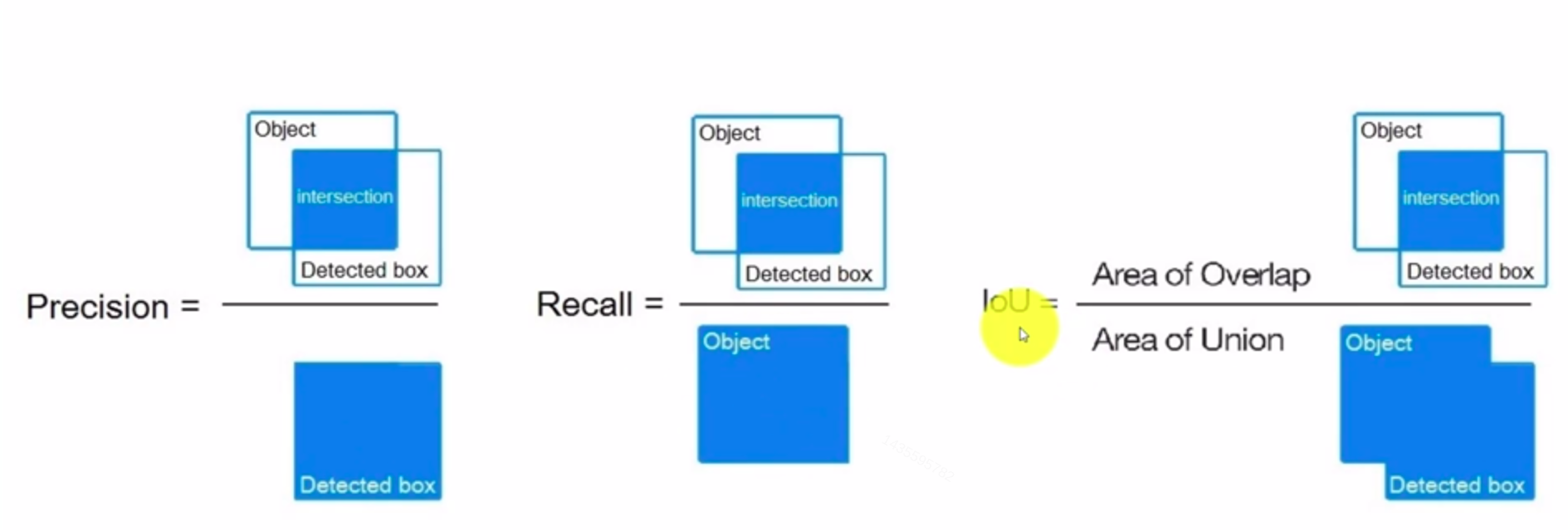

Evaluating the network performance

- Precision, Recall, F1 score

$F1 = \frac{2 \times \text{precision} \times \text{recall}}{\text{precision} + \text{recall}}$

- IoU (Intersection over Union)

If

- P-R curve (Precision-Recall curve)

- AP (Average Precision)

- mAP (mean Average Precision)

- FPS (Frames Per Second)
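As a rough illustration of how IoU feeds into precision, recall and F1, the Python sketch below scores the detections of one class in one image. It assumes boxes are `(xmin, ymin, xmax, ymax)` in pixels, that detections are already sorted by confidence (highest first), and an IoU threshold of 0.5. The function names are hypothetical, and the matching rule is only in the spirit of the PASCAL VOC evaluation, not a copy of `voc_eval.py`.

```python
from typing import List, Tuple

# (xmin, ymin, xmax, ymax) in pixels
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Area of the intersection divided by the area of the union."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall_f1(detections: List[Box], ground_truth: List[Box],
                        iou_threshold: float = 0.5) -> Tuple[float, float, float]:
    """Count TP/FP/FN for one class in one image, then derive P, R and F1.

    Each detection (assumed sorted by confidence, highest first) is compared
    with the ground-truth box it overlaps most; it is a true positive only if
    that IoU reaches the threshold and the box has not been matched already.
    """
    matched = set()
    tp = 0
    for det in detections:
        overlaps = [iou(det, gt) for gt in ground_truth]
        if not overlaps:
            continue
        best = max(range(len(overlaps)), key=overlaps.__getitem__)
        if overlaps[best] >= iou_threshold and best not in matched:
            matched.add(best)
            tp += 1
    fp = len(detections) - tp   # detections that matched nothing: false alarms
    fn = len(ground_truth) - tp  # ground-truth boxes that were never found
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1
```

For example, `iou((0, 0, 10, 10), (5, 5, 15, 15))` is 25 / 175 ≈ 0.143, and one correct detection plus one false alarm against two ground-truth boxes gives precision = recall = F1 = 0.5.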

Summary

- PASCAL VOC 2007 uses 11 recall points on the PR curve.

- PASCAL VOC 2010-2012 uses the area under the PR curve.

- MS COCO uses 101 recall points on the PR curve, averaged over multiple IoU thresholds (0.50 to 0.95 in steps of 0.05).
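To make these AP conventions concrete, here is a minimal pure-Python sketch. It assumes `recall` and `precision` are the cumulative values computed over one class's detections sorted by decreasing confidence, so recall never decreases. The function names are hypothetical, and the official VOC and COCO evaluation code handles more details (difficult objects, area ranges, detection limits, and for COCO the averaging over IoU thresholds).

```python
from typing import Dict, List


def interpolated_ap(recall: List[float], precision: List[float], n_points: int) -> float:
    """Mean of the interpolated precision at n_points evenly spaced recall levels.

    The interpolated precision at recall level t is the highest precision
    reached at any recall >= t (0 if that recall is never reached).
    n_points=11 gives the VOC 2007 metric, n_points=101 the COCO sampling.
    """
    total = 0.0
    for i in range(n_points):
        t = i / (n_points - 1)
        reachable = [p for r, p in zip(recall, precision) if r >= t]
        total += max(reachable) if reachable else 0.0
    return total / n_points


def area_ap(recall: List[float], precision: List[float]) -> float:
    """VOC 2010+ metric: area under the interpolated PR curve."""
    mrec = [0.0] + list(recall) + [1.0]
    mpre = [0.0] + list(precision) + [0.0]
    # Make precision non-increasing: each point takes the max to its right.
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum rectangle areas; steps where recall does not change add zero.
    return sum((mrec[i] - mrec[i - 1]) * mpre[i] for i in range(1, len(mrec)))


def mean_ap(ap_per_class: Dict[str, float]) -> float:
    """mAP is the mean of the per-class AP values."""
    return sum(ap_per_class.values()) / len(ap_per_class)
```

With `recall = [0.5, 1.0]` and `precision = [1.0, 0.5]`, the area-based AP is 0.75, the 11-point AP is 8.5 / 11 ≈ 0.773 and the 101-point AP is 76 / 101 ≈ 0.752, which shows how the choice of recall sampling changes the reported number.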

- GTSRB dataset

- BTSD dataset

- LISA dataset

- CCTSDB dataset

- TT100K dataset

Chinese road signs:

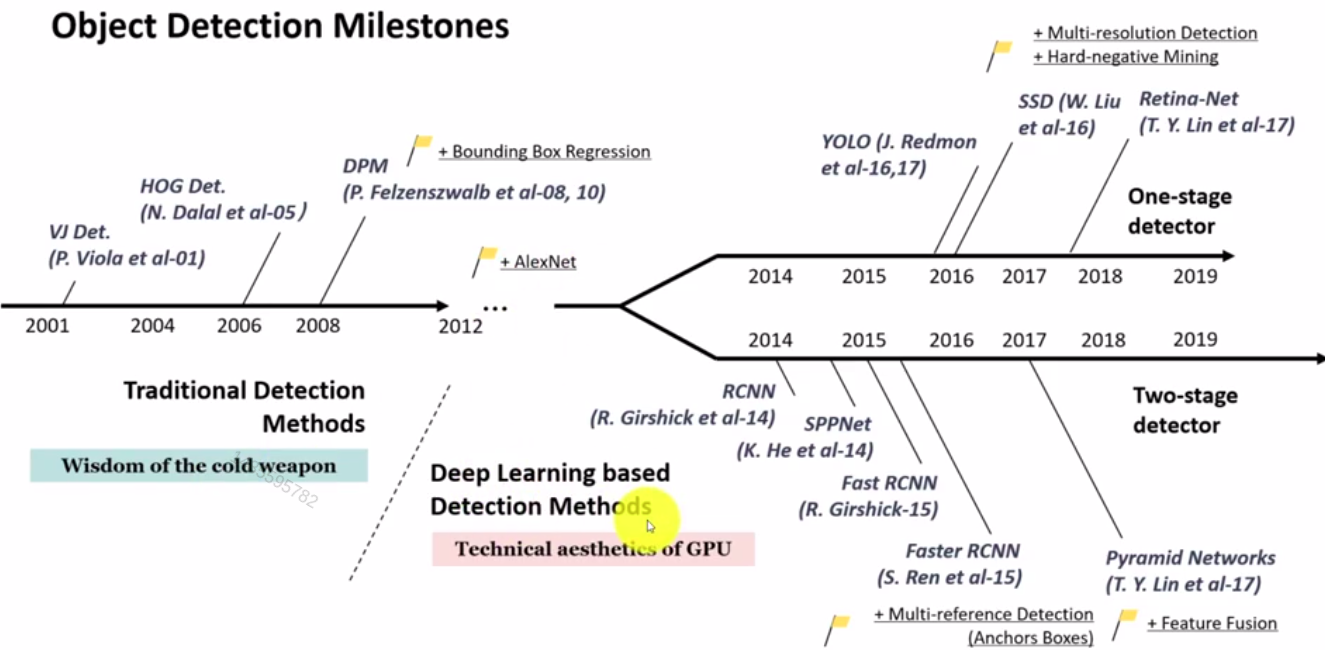

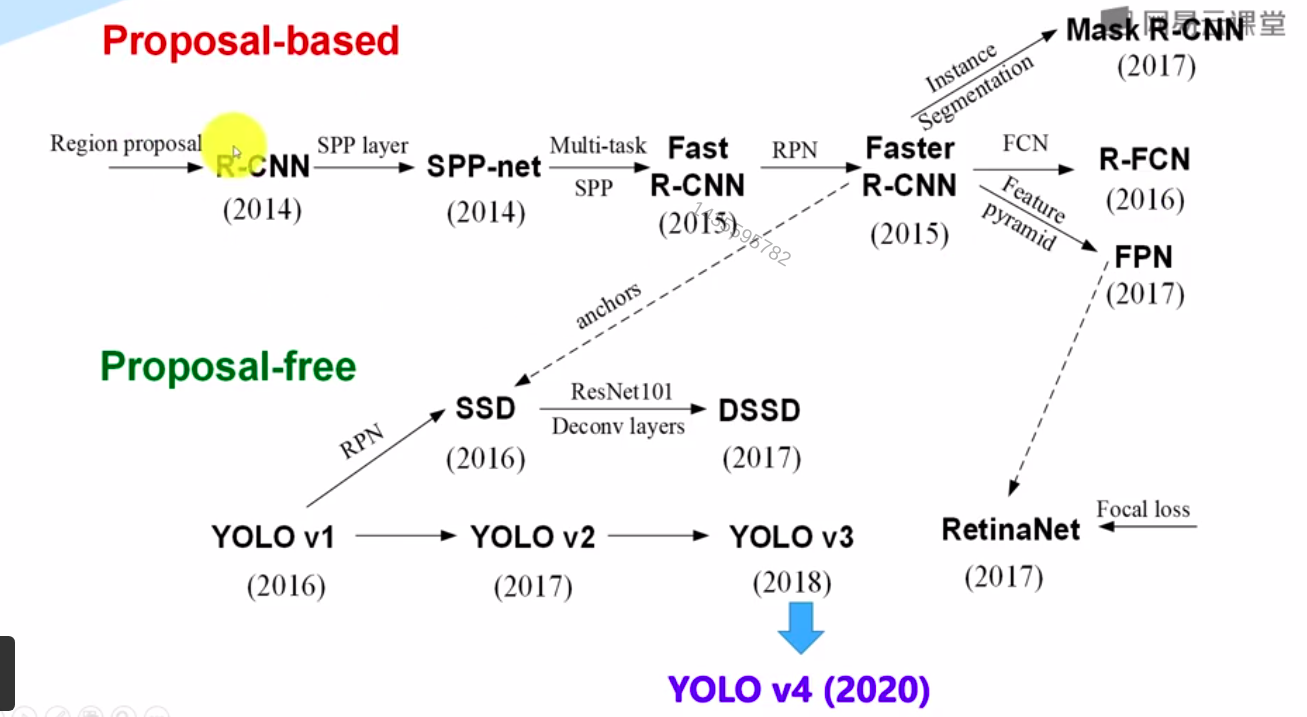

The roadmap of object detection:

Darknet

Darknet is an open-source neural network framework written in C and CUDA. It is fast, easy to install, and supports CPU and GPU computation.

YOLOv4 source code: https://github.com/AlexeyAB/darknet

YOLOv4 paper: https://arxiv.org/abs/2004.10934

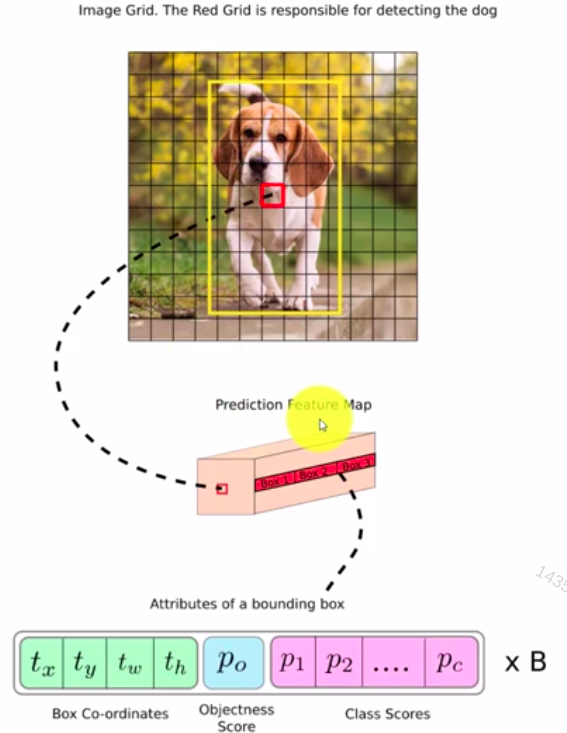

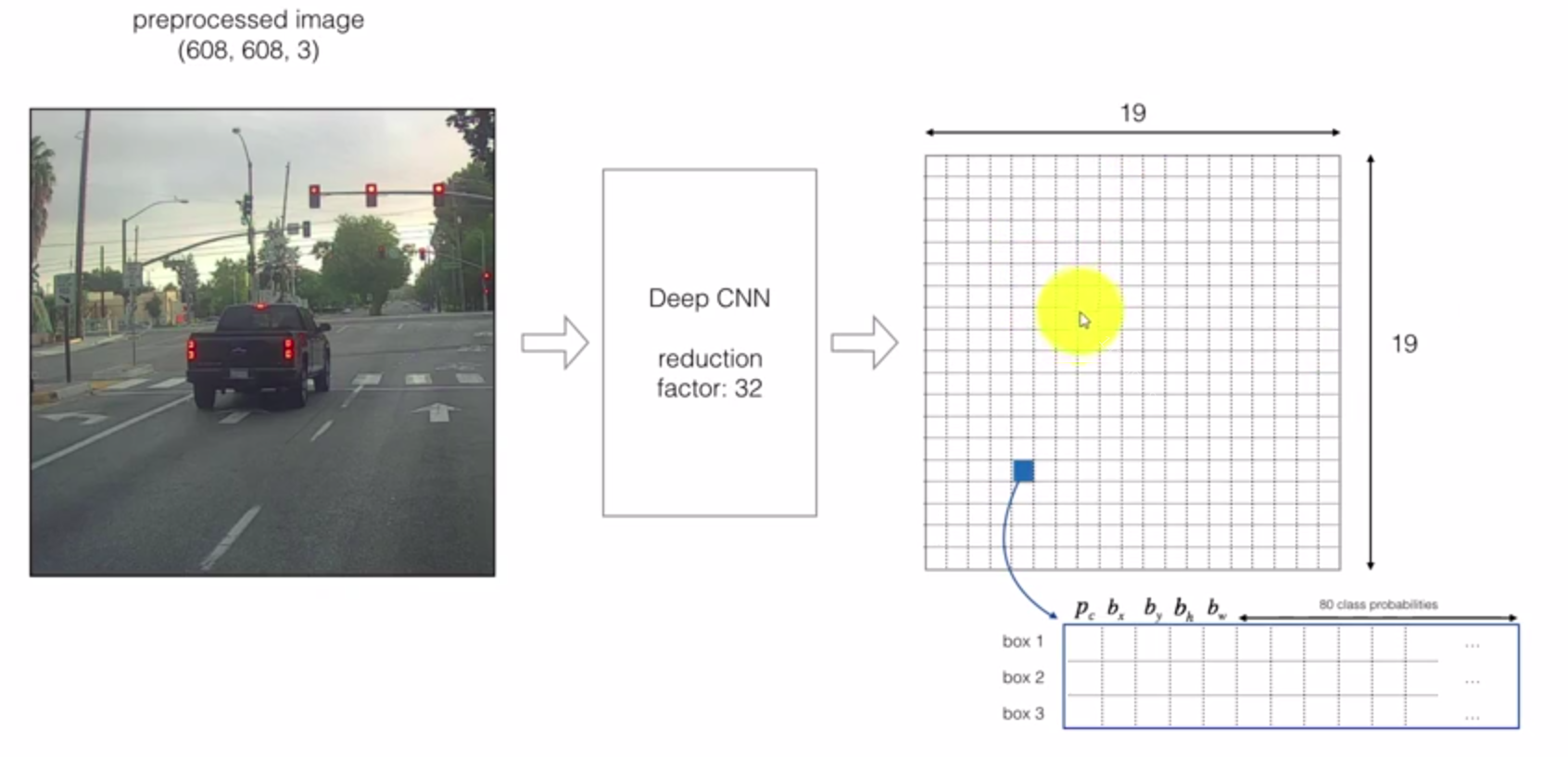

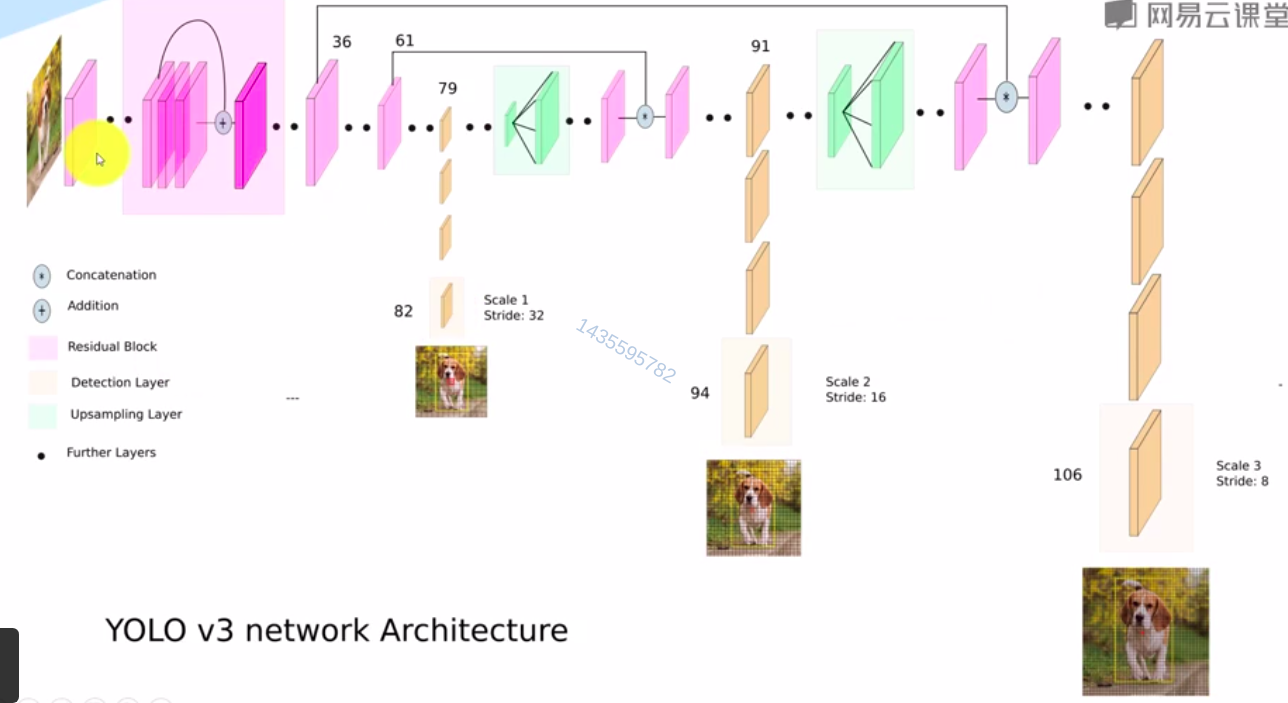

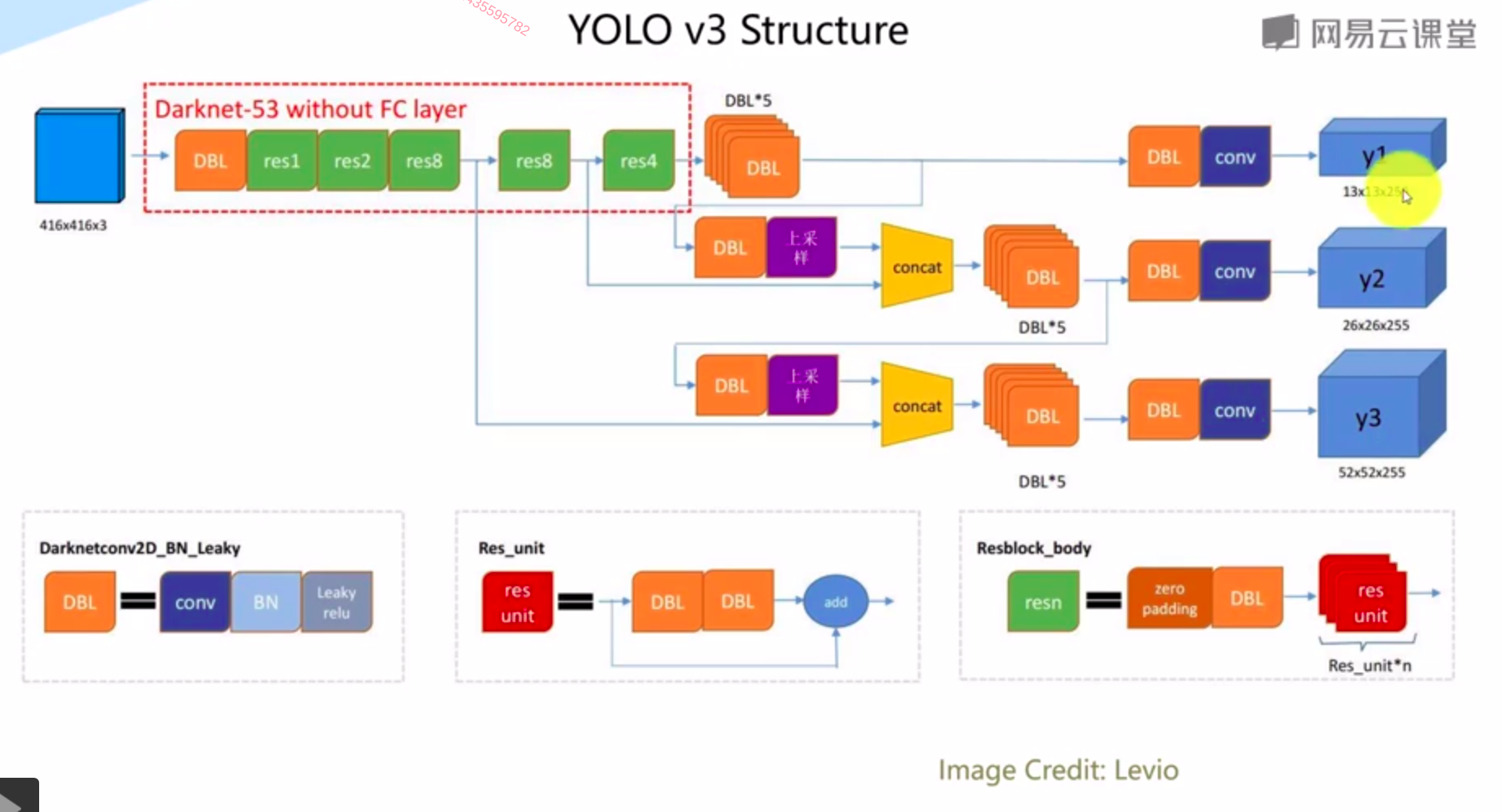

The basic idea of YOLOv3:

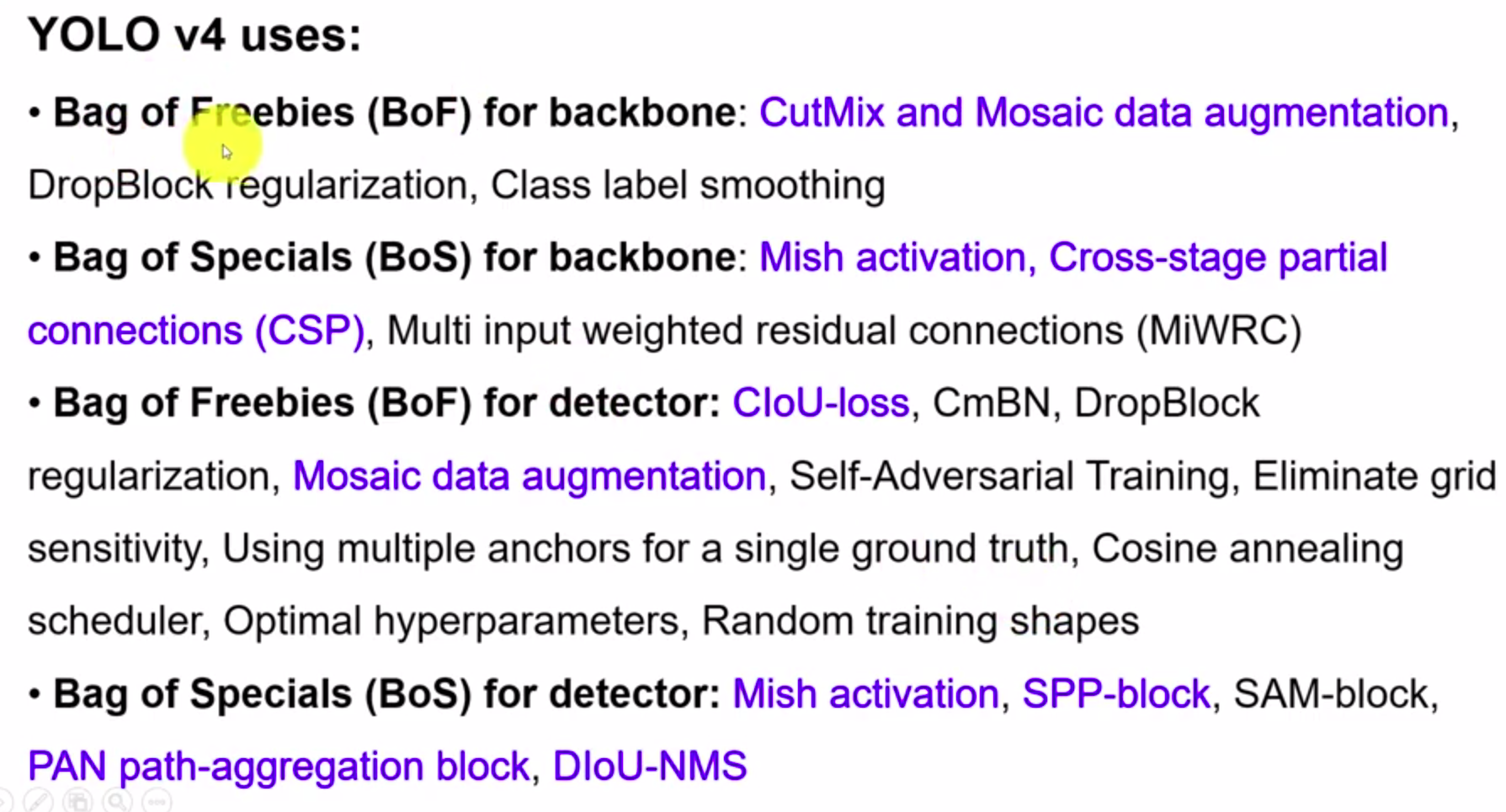

YOLOv4:

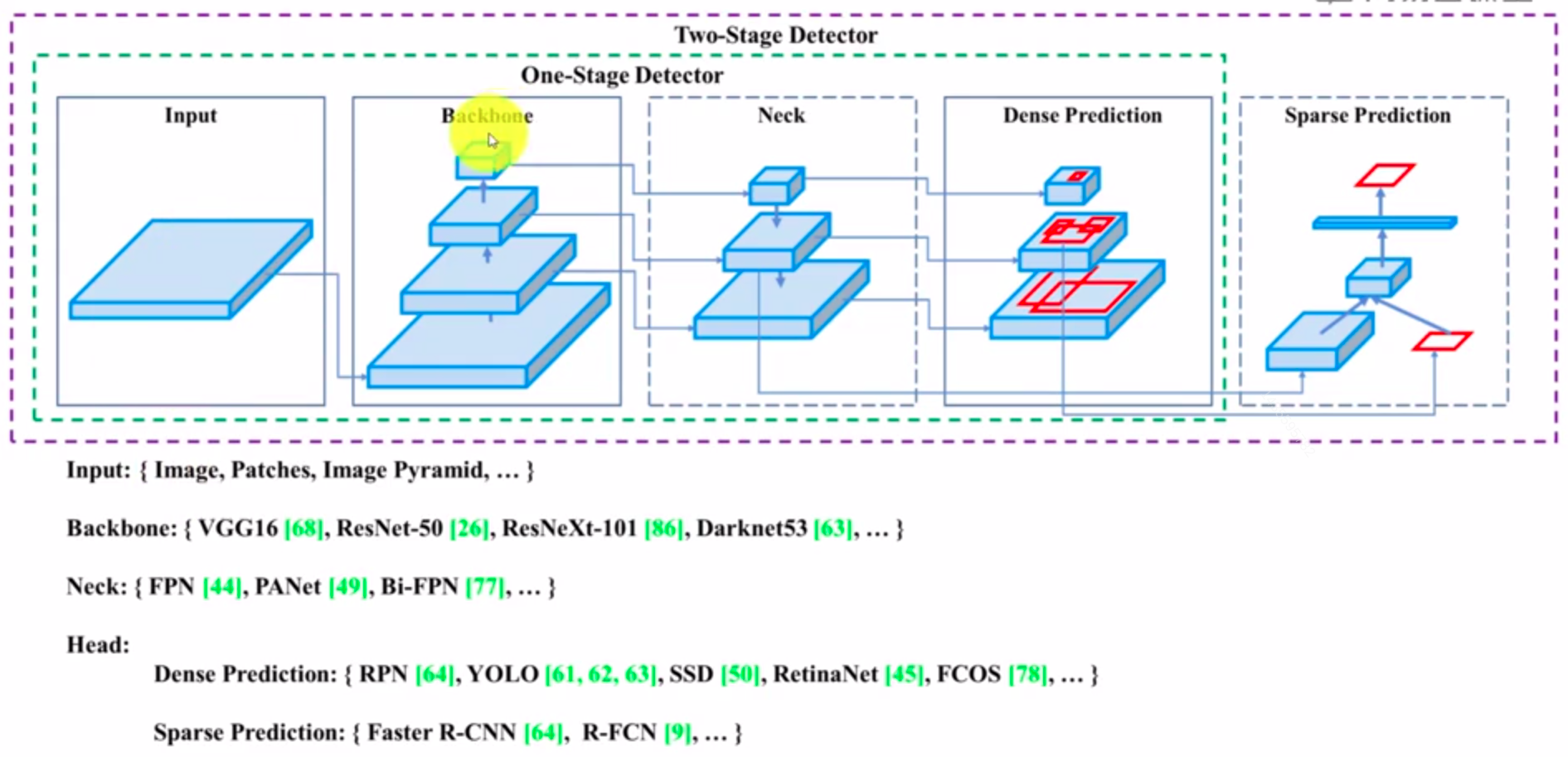

- Backbone: CSPDarknet53

- Neck: SPP, PAN

- Head: YOLOv3

Together, this backbone, neck and head make up the YOLOv4 architecture.

- TT100K dataset format

Download the dataset: https://cg.cs.tsinghua.edu.cn/traffic-sign/

- TT100K dataset: data.zip, code.zip

- mypython.tar.gz

- Jinja2-2.10.1-py2.py3-none-any.whl

The labels are stored in annotations.json.

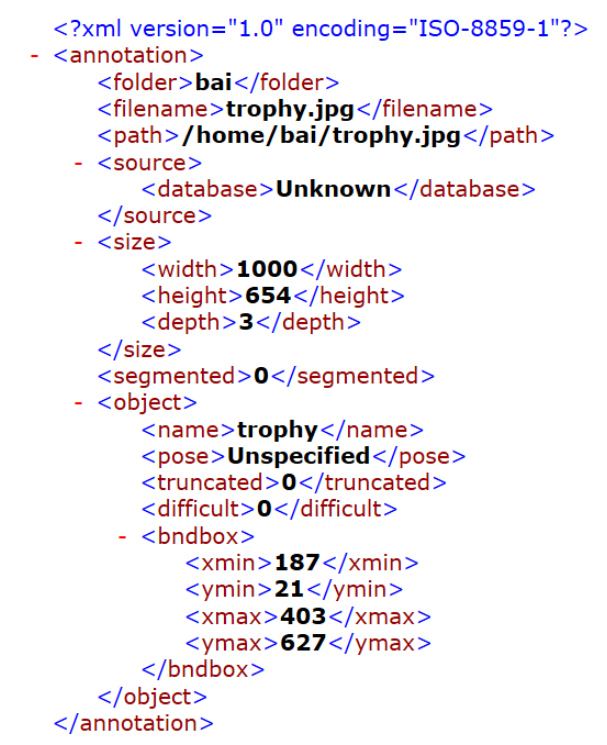
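A minimal sketch of reading the boxes out of `annotations.json` once it has been loaded with `json.load`. The layout assumed here (a top-level `imgs` dictionary whose entries have a `path` such as `train/<id>.jpg` and a list of `objects`, each with a `category` and a `bbox` holding `xmin`, `ymin`, `xmax`, `ymax`) is an assumption based on the TT100K sample code; check it against your copy of the file. `load_tt100k_boxes` is a hypothetical helper, not part of the TT100K code.

```python
from typing import Dict, List, Tuple

# (category, xmin, ymin, xmax, ymax) in pixels
LabeledBox = Tuple[str, float, float, float, float]


def load_tt100k_boxes(annotations: dict, split: str) -> Dict[str, List[LabeledBox]]:
    """Collect the boxes of every image whose path starts with `split/`.

    Assumed layout: annotations["imgs"][img_id] = {"path": "train/<id>.jpg",
    "objects": [{"category": ..., "bbox": {"xmin", "ymin", "xmax", "ymax"}}]}
    """
    boxes_per_image = {}
    for img_id, img in annotations["imgs"].items():
        if not img["path"].startswith(split + "/"):
            continue  # image belongs to another split (e.g. test vs train)
        boxes = []
        for obj in img.get("objects", []):
            bb = obj["bbox"]
            boxes.append((obj["category"], bb["xmin"], bb["ymin"], bb["xmax"], bb["ymax"]))
        boxes_per_image[img_id] = boxes
    return boxes_per_image
```

Typical use would be `load_tt100k_boxes(json.load(f), "train")` with `f` opened on `annotations.json`; each resulting box list can then be written out as one XML file per image.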

The PASCAL VOC format uses one XML file per image to record the bounding boxes. Each box is given by its top-left corner (xmin, ymin) and its bottom-right corner (xmax, ymax). The format is below:
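A minimal sketch of writing such a file with Python's standard `xml.etree.ElementTree`. Only the commonly used VOC tags are emitted (`filename`, `size`, and one `object` with `name`, `difficult` and `bndbox` per box). The function name and the fixed `folder` and `depth` values are assumptions for illustration, not necessarily what `tt100k_to_voc_train.py` produces.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple


def make_voc_xml(filename: str, width: int, height: int,
                 objects: List[Tuple[str, int, int, int, int]]) -> str:
    """Build a minimal VOC annotation; each object is (name, xmin, ymin, xmax, ymax)."""
    root = ET.Element("annotation")
    ET.SubElement(root, "folder").text = "VOC2007"  # assumed folder name
    ET.SubElement(root, "filename").text = filename
    size = ET.SubElement(root, "size")
    ET.SubElement(size, "width").text = str(width)
    ET.SubElement(size, "height").text = str(height)
    ET.SubElement(size, "depth").text = "3"  # assumes RGB images
    for name, xmin, ymin, xmax, ymax in objects:
        obj = ET.SubElement(root, "object")
        ET.SubElement(obj, "name").text = name
        ET.SubElement(obj, "difficult").text = "0"
        box = ET.SubElement(obj, "bndbox")
        # top-left corner first, then bottom-right corner
        for tag, value in zip(("xmin", "ymin", "xmax", "ymax"), (xmin, ymin, xmax, ymax)):
            ET.SubElement(box, tag).text = str(value)
    return ET.tostring(root, encoding="unicode")
```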

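The YOLO format stores the labels in one .txt file per image, with one object per line.

Each line contains class_id, x, y, w and h, where x and y are the centre coordinates of the bounding box, and w and h are the width and height of the bounding box. All four values are normalised by the image width and height, so they lie between 0 and 1.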
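A small sketch of this line format, plus the inverse mapping back to pixel corners, which can help when checking converted labels. Both helpers are hypothetical and assume the normalised values described above.

```python
from typing import Tuple


def to_yolo_line(class_id: int, x: float, y: float, w: float, h: float) -> str:
    """Format one object: class_id, then box centre and size, all normalised."""
    return f"{class_id} {x:.6f} {y:.6f} {w:.6f} {h:.6f}"


def yolo_line_to_corners(line: str, img_w: int, img_h: int) -> Tuple[int, float, float, float, float]:
    """Inverse mapping: (class_id, xmin, ymin, xmax, ymax) in pixels."""
    fields = line.split()
    class_id = int(fields[0])
    x, y, w, h = (float(v) for v in fields[1:5])
    # centre +/- half the size, scaled back by the image dimensions
    return (class_id,
            (x - w / 2) * img_w, (y - h / 2) * img_h,
            (x + w / 2) * img_w, (y + h / 2) * img_h)
```

For a 200 × 100 image, the line `3 0.5 0.5 0.25 0.5` maps back to the box from (75, 25) to (125, 75).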

The conversion from PASCAL VOC to YOLO format uses the function convert(size, box), where size is (image width, image height) and box is (xmin, xmax, ymin, ymax):

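```python
def convert(size, box):
    # size = (image width, image height) in pixels
    # box = (xmin, xmax, ymin, ymax) in pixels, as read from the VOC XML
    dw = 1. / size[0]
    dh = 1. / size[1]
    # centre of the box
    x = (box[0] + box[1]) / 2.0
    y = (box[2] + box[3]) / 2.0
    # width and height of the box
    w = box[1] - box[0]
    h = box[3] - box[2]
    # normalise to [0, 1] by the image size
    x = x * dw
    w = w * dw
    y = y * dh
    h = h * dh
    return (x, y, w, h)
```

- Install Jinja2 and Pillow: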

```shell
sudo pip install Jinja2-2.10.1-py2.py3-none-any.whl
sudo pip install pillow
```

- Use `tt100k_to_voc_test.py` and `tt100k_to_voc_train.py` to convert the TT100K dataset to PASCAL VOC XML format.

```shell
python ./tt100k_to_voc_test.py
python ./tt100k_to_voc_train.py
```

- Copy `VOCdevkit` to the `darknet` repository.

- Copy the images to `darknet`:

```shell
cp ~/Downloads/TT100K/data/test/*.jpg VOCdevkit/VOC2007/JPEGImages/
cp ~/Downloads/TT100K/data/train/*.jpg VOCdevkit/VOC2007/JPEGImages/
```

Prepare these files:

- testfiles.tar.gz

- genfiles.py

- reval_voc.py

- voc_eval.py

- draw_pr.py

The dataset tree:

```text
.
├── 2007_test.txt
├── 2007_train.txt
└── VOCdevkit
    └── VOC2007
        ├── Annotations
        ├── ImageSets
        ├── JPEGImages
        └── labels
```

Generate the training dataset and testing dataset:

```shell
python genfiles.py
```

The labels are generated under VOCdevkit/VOC2007/labels, and the script also generates 2007_train.txt and 2007_test.txt.

Model training requires 2007_train.txt, 2007_test.txt, the labels and all the images in the dataset.
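The contents of `genfiles.py` are not shown here. As a hedged sketch of what such a script typically does (similar in spirit to darknet's `scripts/voc_label.py`), the code below reads each VOC XML file, converts every box with the same `convert()` normalisation used above, writes one label file per image into `VOC2007/labels`, and writes the absolute image paths into a list file such as `2007_train.txt`. The class list, the image-id list and the helper names are assumptions. Darknet finds each label file by replacing `JPEGImages` with `labels` in the image path, which is why the two folders sit side by side.

```python
import os
import xml.etree.ElementTree as ET
from typing import List


def convert(size, box):
    # Same normalisation as convert() above: box = (xmin, xmax, ymin, ymax).
    dw, dh = 1. / size[0], 1. / size[1]
    x = (box[0] + box[1]) / 2.0 * dw
    y = (box[2] + box[3]) / 2.0 * dh
    w = (box[1] - box[0]) * dw
    h = (box[3] - box[2]) * dh
    return (x, y, w, h)


def voc_xml_to_yolo_lines(xml_text: str, classes: List[str]) -> List[str]:
    """Turn one VOC annotation into YOLO label lines; unknown classes are skipped."""
    root = ET.fromstring(xml_text)
    img_w = int(root.find("size/width").text)
    img_h = int(root.find("size/height").text)
    lines = []
    for obj in root.iter("object"):
        name = obj.find("name").text
        if name not in classes:
            continue  # category outside the (assumed) training class list
        bb = obj.find("bndbox")
        box = tuple(float(bb.find(tag).text) for tag in ("xmin", "xmax", "ymin", "ymax"))
        x, y, w, h = convert((img_w, img_h), box)
        lines.append(f"{classes.index(name)} {x:.6f} {y:.6f} {w:.6f} {h:.6f}")
    return lines


def write_labels_and_list(voc_dir: str, image_ids: List[str],
                          classes: List[str], list_path: str) -> None:
    """Write labels/<id>.txt for every id and list the image paths in list_path."""
    os.makedirs(os.path.join(voc_dir, "labels"), exist_ok=True)
    with open(list_path, "w") as list_file:
        for image_id in image_ids:
            with open(os.path.join(voc_dir, "Annotations", image_id + ".xml")) as f:
                lines = voc_xml_to_yolo_lines(f.read(), classes)
            with open(os.path.join(voc_dir, "labels", image_id + ".txt"), "w") as f:
                f.write("".join(line + "\n" for line in lines))
            image_path = os.path.join(voc_dir, "JPEGImages", image_id + ".jpg")
            list_file.write(os.path.abspath(image_path) + "\n")
```

A call such as `write_labels_and_list("VOCdevkit/VOC2007", train_ids, classes, "2007_train.txt")` would produce the training list, where `train_ids` and `classes` are whatever split and category subset you choose.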