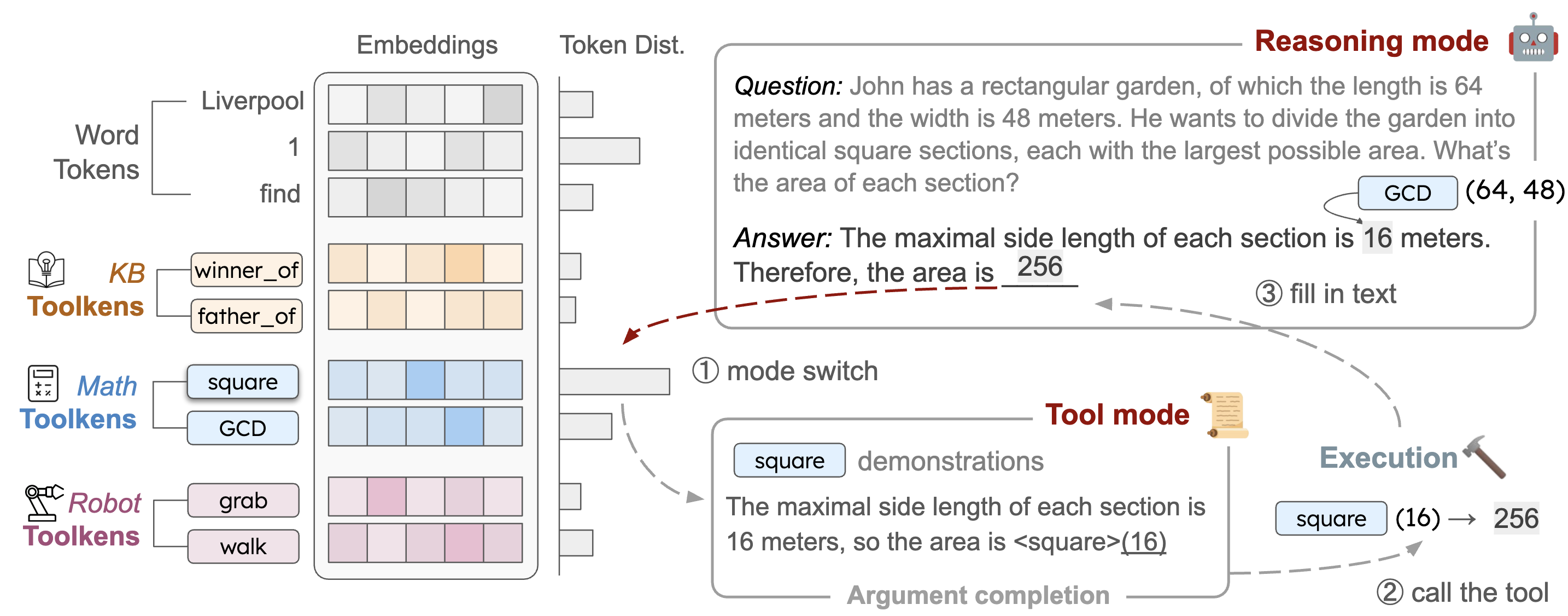

Source code for ToolkenGPT: Augmenting Frozen Language Models with Massive Tools via Tool Embeddings

NeurIPS 2023 (oral) | Best Paper Award at SoCalNLP 2023

- Our experiments are conducted with LLaMA-13B/33B, which require at least 2 and 4 GPUs, respectively, with 24GB of memory each.

- Acquire the LLaMA checkpoints from MetaAI and install all required packages. Please refer to the official LLaMA repo.

- Download the data from here (all datasets uploaded)

- (For VirtualHome) Please download the data following the instructions here.

A side note: the folder `virtualhome` is from its official repo, but we fixed some small bugs in the evolving graph.
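As a rough sanity check on the GPU requirement above, the following sketch estimates the weight memory per GPU when the model is sharded evenly. It assumes fp16 weights (2 bytes per parameter) and nominal parameter counts of 13e9 and 32.5e9; both are assumptions rather than values taken from this repo. Activations, caches, and framework overhead are not counted, so real usage will be higher.

```python
# Rough estimate of weight memory per GPU under even sharding.
# Assumptions (not from this repo): fp16 weights, nominal parameter counts.
BYTES_PER_PARAM = 2  # fp16
GIB = 1024 ** 3


def weights_per_gpu_gib(num_params: float, num_gpus: int) -> float:
    """Return the weight memory per GPU in GiB for an evenly sharded model."""
    return num_params * BYTES_PER_PARAM / num_gpus / GIB


if __name__ == "__main__":
    for name, params, gpus in [("LLaMA-13B", 13e9, 2), ("LLaMA-33B", 32.5e9, 4)]:
        per_gpu = weights_per_gpu_gib(params, gpus)
        print(f"{name}: ~{per_gpu:.1f} GiB of weights per GPU on {gpus} GPUs")
```

Under these assumptions the 13B weights alone slightly exceed a single 24GB card, which is consistent with the two-GPU minimum stated above.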

GSM8K-XL training (LLaMA-13B):

    CUDA_VISIBLE_DEVICES=1,2 python -m torch.distributed.run --nproc_per_node 2 --master_port 1200 train_llama.py --ckpt_dir /remote-home/share/models/llama/13B --tokenizer_path /remote-home/share/models/llama/tokenizer.model --input_file data/gsm8k-xl/train.json --lr 1e-3 --num_epochs 10

GSM8K-XL inference:

    CUDA_VISIBLE_DEVICES=2,3 python -m torch.distributed.run --nproc_per_node 2 --master_port 1250 inference_llama.py --ckpt_dir /remote-home/share/models/llama/13B --tokenizer_path /remote-home/share/models/llama/tokenizer.model --mode func_embedding --dataset gsm8k-xl --func_load_path checkpoints/gsm8k-xl/epoch_9.pth --logits_bias 3.0

FuncQA training (LLaMA-30B checkpoint):

    CUDA_VISIBLE_DEVICES=0,5,6,7 python -m torch.distributed.run --nproc_per_node 4 --master_port 1200 train_llama.py --ckpt_dir /remote-home/share/models/llama/30B --tokenizer_path /remote-home/share/models/llama/tokenizer.model --dataset funcqa --input_file data/funcqa/train.json --lr 1e-4 --num_epochs 10

FuncQA inference (`funcqa_oh`):

    CUDA_VISIBLE_DEVICES=0,1,2,3 python -m torch.distributed.run --nproc_per_node 4 --master_port 1250 inference_llama.py --ckpt_dir /remote-home/share/models/llama/30B --tokenizer_path /remote-home/share/models/llama/tokenizer.model --mode func_embedding --dataset funcqa_oh --func_load_path checkpoints/funcqa/epoch_7.pth --logits_bias 2.7

FuncQA inference (`funcqa_mh`):

    CUDA_VISIBLE_DEVICES=0,1,2,3 python -m torch.distributed.run --nproc_per_node 4 --master_port 1250 inference_llama.py --ckpt_dir /remote-home/share/models/llama/30B --tokenizer_path /remote-home/share/models/llama/tokenizer.model --mode func_embedding --dataset funcqa_mh --func_load_path checkpoints/funcqa/epoch_7.pth --logits_bias 4.0

VirtualHome training:

    CUDA_VISIBLE_DEVICES=6,7 python -m torch.distributed.run --nproc_per_node 2 --master_port 3001 train_llama.py --ckpt_dir /remote-home/share/models/llama/13B --tokenizer_path /remote-home/share/models/llama/tokenizer.model --dataset vh --input_file data/vh/legal_train_v4_embedding.json --only_functoken True --num_epochs 10

VirtualHome inference:

    CUDA_VISIBLE_DEVICES=3,5 python -m torch.distributed.run --nproc_per_node 2 inference_llama.py --ckpt_dir /remote-home/share/models/llama/13B --tokenizer_path /remote-home/share/models/llama/tokenizer.model --mode vh_embedding_inference --dataset vh --func_load_path checkpoints/vh/epoch_7.pth --logits_bias 10.0

For VirtualHome evaluation, see `evaluation/eval_vh.ipynb`.

KAMEL training:

- synthetic data

      CUDA_VISIBLE_DEVICES=2,3 python -m torch.distributed.run --nproc_per_node 2 --master_port 3002 train_llama.py --ckpt_dir /remote-home/share/models/llama/13B --tokenizer_path /remote-home/share/models/llama/tokenizer.model --dataset kamel --input_file data/kamel/train_clean.json --only_functoken False --log_prefix synthetic-data- --num_epochs 10

- supervised data

      CUDA_VISIBLE_DEVICES=2,3 python -m torch.distributed.run --nproc_per_node 2 --master_port 3002 train_llama.py --ckpt_dir /remote-home/share/models/llama/13B --tokenizer_path /remote-home/share/models/llama/tokenizer.model --dataset kamel --input_file data/kamel/kamel_id_train.json --only_functoken False --log_prefix supervised-data- --num_epochs 10

KAMEL inference:

    CUDA_VISIBLE_DEVICES=2,3 python -m torch.distributed.run --nproc_per_node 2 inference_llama.py --ckpt_dir /remote-home/share/models/llama/13B --tokenizer_path /remote-home/share/models/llama/tokenizer.model --mode kamel_embedding_inference --dataset kamel_30 --func_load_path checkpoints/kamel/epoch_4.pth --logits_bias 10

For KAMEL evaluation, see `evaluation/eval_kamel.ipynb`.
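All of the commands above share one shape: a `CUDA_VISIBLE_DEVICES` prefix, `python -m torch.distributed.run` with one process per visible GPU, then the script and its flags. A minimal sketch of assembling such a command is shown below. The helper `build_launch_command` is hypothetical and not part of this repo; the flags in the example are copied from the GSM8K-XL inference command. The sketch only prints the command and does not run it.

```python
import shlex
from typing import Dict, List


def build_launch_command(
    script: str,
    devices: List[int],
    master_port: int,
    script_args: Dict[str, object],
) -> List[str]:
    """Build the argv list for torch.distributed.run, one process per device.

    Hypothetical helper for illustration; not part of this repo.
    """
    argv = [
        "python", "-m", "torch.distributed.run",
        "--nproc_per_node", str(len(devices)),
        "--master_port", str(master_port),
        script,
    ]
    # Append script flags in insertion order as "--name value" pairs.
    for flag, value in script_args.items():
        argv += [f"--{flag}", str(value)]
    return argv


devices = [2, 3]
argv = build_launch_command(
    "inference_llama.py",
    devices,
    1250,
    {
        "ckpt_dir": "/remote-home/share/models/llama/13B",
        "tokenizer_path": "/remote-home/share/models/llama/tokenizer.model",
        "mode": "func_embedding",
        "dataset": "gsm8k-xl",
        "func_load_path": "checkpoints/gsm8k-xl/epoch_9.pth",
        "logits_bias": 3.0,
    },
)
env_prefix = "CUDA_VISIBLE_DEVICES=" + ",".join(str(d) for d in devices)
print(env_prefix, shlex.join(argv))
```

Deriving `--nproc_per_node` from the device list keeps the two values from drifting apart when the GPU selection changes.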

To debug `inference_llama.py` in VS Code, add a `launch.json`:

    {
        "version": "0.2.0",
        "configurations": [
            {
                "name": "Run inference_llama.py",
                "type": "debugpy",
                "request": "launch",
                "module": "torch.distributed.run", // specify the module directly
                "console": "integratedTerminal",
                "cwd": "/remote-home/jyang/ToolkenGPT",
                "env": {
                    "CUDA_VISIBLE_DEVICES": "2,3"
                },
                "args": [
                    "--nproc_per_node", "2",
                    "--master_port", "1250",
                    "inference_llama.py", // the script to run
                    "--ckpt_dir", "/remote-home/share/models/llama/13B",
                    "--tokenizer_path", "/remote-home/share/models/llama/tokenizer.model",
                    "--mode", "func_embedding",
                    "--dataset", "gsm8k-xl",
                    "--func_load_path", "checkpoints/gsm8k-xl/epoch_9.pth",
                    "--logits_bias", "3.0"
                ],
                "python": "/remote-home/jyang/miniconda3/envs/ToolkenGPT/bin/python", // make sure this is the Python interpreter you use
                "subProcess": true,
                "justMyCode": true
            }
        ]
    }
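When adapting this configuration to other GPUs, the value after `--nproc_per_node` should match the number of devices listed in `CUDA_VISIBLE_DEVICES`, as it does in every command in this README. The sketch below checks that for a single configuration entry represented as a Python dict. The function `check_launch_config` is a hypothetical helper, not part of this repo or of VS Code, and the example dict mirrors only the relevant fields of the configuration above.

```python
from typing import List


def check_launch_config(config: dict) -> List[str]:
    """Return a list of problems; an empty list means the entry is consistent.

    Hypothetical helper for illustration; not part of this repo.
    """
    problems = []
    visible = config.get("env", {}).get("CUDA_VISIBLE_DEVICES", "")
    devices = [d.strip() for d in visible.split(",") if d.strip()]
    args = config.get("args", [])

    if "--nproc_per_node" not in args:
        problems.append("missing --nproc_per_node")
        return problems

    idx = args.index("--nproc_per_node")
    nproc = args[idx + 1] if idx + 1 < len(args) else None
    if nproc != str(len(devices)):
        problems.append(
            f"--nproc_per_node is {nproc!r} but {len(devices)} device(s) are visible"
        )
    return problems


config = {
    "env": {"CUDA_VISIBLE_DEVICES": "2,3"},
    "args": ["--nproc_per_node", "2", "--master_port", "1250", "inference_llama.py"],
}
print(check_launch_config(config) or "configuration looks consistent")
```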