CrackDetect (Machine-learning approach for real-time assessment of road pavement service life based on vehicle fleet data)

This repository contains the code for the project Machine-learning approach for real-time assessment of road pavement service life based on vehicle fleet data. It provides a complete pipeline, including data preprocessing, feature extraction, model training and prediction. An overview of the project can be found in the user manual.

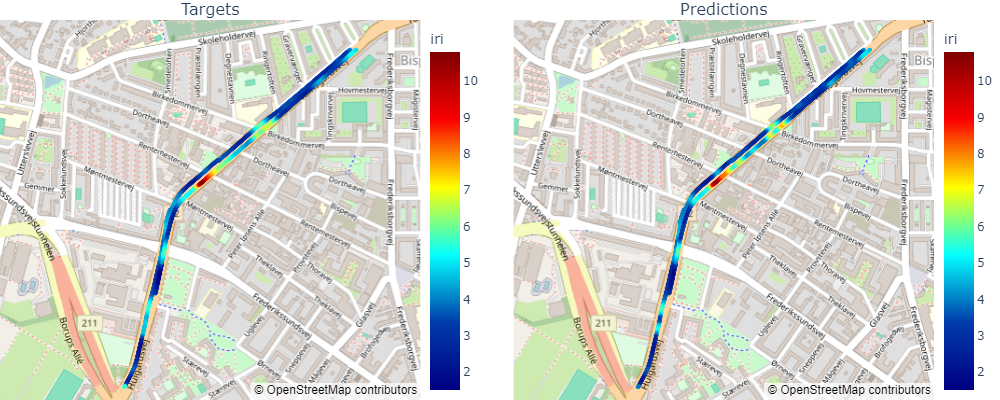

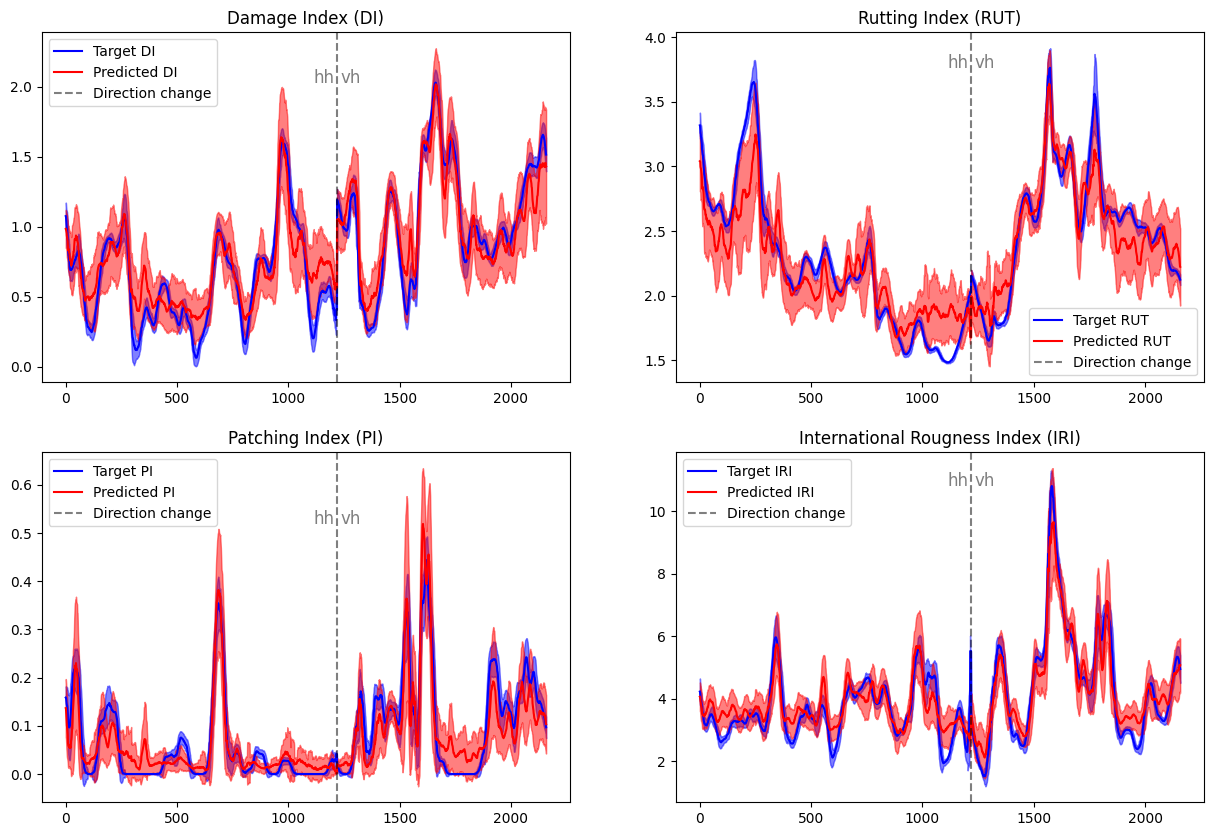

Our results are found in reports/figures/our_model_results/.

- Clone this repository: `git clone https://github.com/rreezN/CrackDetect.git`
- (Optional) Create a virtual environment in powershell. Note that this project requires `python >= 3.10`.
- Install requirements: `pip install -r requirements.txt`
- Download the data from sciencedata.dk, unzip it and place it in the data folder (see data section).
- Call `wandb disabled` if you have not set up a suitable wandb project. (The project and entity information is hard-coded into `src\train_hydra_mr.py` in the `wandb.init()` command.)
- Run `python src/main.py all`

This will run all steps of the pipeline, from data preprocessing to model prediction. At the end, a plot will appear that shows our (FleetYeeters) results alongside the results from the newly trained model. Features are extracted with a Hydra model from all signals except the location signals. The main.py script is set up to recreate our results, so all arguments are prespecified.
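If you would rather disable wandb for a single run than call `wandb disabled` first, one option is to set the `WANDB_MODE` environment variable when launching the pipeline from Python. The following is a minimal sketch and not part of the repository: the helper `run_pipeline_without_wandb` and its `dry_run` parameter are hypothetical, and it assumes the script is run from the repository root.

```python
import os
import subprocess
import sys


def run_pipeline_without_wandb(step="all", dry_run=False):
    """Launch src/main.py with wandb disabled for this process only.

    Hypothetical helper (not part of the repository). Assumes the current
    working directory is the repository root.
    """
    # Copy the current environment and switch wandb off via WANDB_MODE.
    env = dict(os.environ, WANDB_MODE="disabled")
    cmd = [sys.executable, "src/main.py", step]
    if not dry_run:
        # check=True raises CalledProcessError if the pipeline fails.
        subprocess.run(cmd, env=env, check=True)
    return cmd, env


if __name__ == "__main__":
    # Dry run: only show the command that would be executed.
    command, _ = run_pipeline_without_wandb("all", dry_run=True)
    print(" ".join(command))
```

Because the variable is set only in the child process environment, other projects on the same machine keep their own wandb settings.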

It is possible to call main.py with individual steps, or beginning at a certain step. To call individual steps, you would replace all with the desired step. Possible steps are:

`python src/main.py [all, make_data, extract_features, train_model, predict_model, validate_model]`

Additionally, if you wish to start from a specific step, skipping the steps before it, you can add the `--begin-from` argument. For example, to start from `predict_model` you would call:

`python src/main.py --begin-from predict_model`

If you wish to go through each step manually with your own arguments, call each script directly with its own arguments:

- Create dataset with `python src/data/make_dataset.py all`
- Extract features with `python src/data/feature_extraction.py`
- Train model with `python src/train_hydra_mr.py`
- Predict using trained model with `python src/predict_model.py`
- See results in `reports/figures/model_results`
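The step selection described above (a single step, `all`, or everything from a given step onwards with `--begin-from`) can be sketched with `argparse` as follows. This is an illustration only, not the actual contents of main.py: the function names `parse_args` and `select_steps` are hypothetical, and it assumes `--begin-from` is a boolean flag combined with a positional step name, as the example calls suggest.

```python
import argparse

# Step names as listed for src/main.py, in pipeline order.
STEPS = ["make_data", "extract_features", "train_model",
         "predict_model", "validate_model"]


def parse_args(argv=None):
    """Parse a positional step name plus an optional --begin-from flag."""
    parser = argparse.ArgumentParser(description="Pipeline step selection (sketch)")
    parser.add_argument("step", nargs="?", default="all", choices=["all", *STEPS])
    parser.add_argument("--begin-from", action="store_true",
                        help="run the given step and every step after it")
    return parser.parse_args(argv)


def select_steps(step, begin_from=False):
    """Return the ordered list of steps to execute."""
    if step == "all":
        return list(STEPS)
    if begin_from:
        # Keep the chosen step and everything that follows it.
        return STEPS[STEPS.index(step):]
    return [step]


if __name__ == "__main__":
    args = parse_args(["--begin-from", "predict_model"])
    print(select_steps(args.step, args.begin_from))
```

Keeping the steps in one ordered list means that `--begin-from` reduces to a slice, so adding a step later only requires inserting its name at the right position.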

- CrackDetect (Machine-learning approach for real-time assessment of road pavement service life based on vehicle fleet data)

- Results

- Quickstart

- Table of Contents

- Installation

- Usage

- Credits

- License

- Clone this repository: `git clone https://github.com/rreezN/CrackDetect.git`
- Install requirements

Note: This project requires python >= 3.10 to run

There are two options for installing requirements. If you wish to setup a dedicated python virtual environment for the project, follow the steps in Virtual environment in powershell. If not, then simply run the following command, and all python modules required to run the project will be installed

`python -m pip install -r requirements.txt`

To set up a virtual environment in powershell, have python >= 3.10 and then:

- CD to CrackDetect: `cd CrackDetect`
- Create environment: `python -m venv fleetenv`
- Change execution policy if necessary (to be executed in powershell): `Set-ExecutionPolicy -Scope CurrentUser RemoteSigned`
- Activate venv: `.\fleetenv\Scripts\Activate.ps1`
- Upgrade pip, setuptools and wheel: `python -m pip install -U pip setuptools wheel`
- Install requirements: `python -m pip install -r requirements.txt`
- ...

- Profit

To activate venv in powershell:

`.\fleetenv\Scripts\Activate.ps1`

There are several steps in the pipeline of this project. Detailed explanations of each step, and how to use them in code, can be found in the notebooks in notebooks/.

The data is made available at sciencedata.dk.

Once downloaded it should be unzipped and placed in the empty data/ folder. The file structure should be as follows:

- data
  - raw
    - AutoPi_CAN
      - platoon_CPH1_HH.hdf5
      - platoon_CPH1_VH.hdf5
      - read_hdf5_platoon.m
      - read_hdf5.m
      - readme.txt
      - visualize_hdf5.m
    - gopro
      - car1
        - GH012200
          - GH012200_HERO8 Black-ACCL.csv
          - GH012200_HERO8 Black-GPS5.csv
          - GH012200_HERO8 Black-GYRO.csv
        - ...
      - car3
        - ...
    - ref_data
      - cph1_aran_hh.csv
      - cph1_aran_vh.csv
      - cph1_fric_hh.csv
      - cph1_fric_vh.csv
      - cph1_iri_mpd_rut_hh.csv
      - cph1_iri_mpd_rut_vh.csv
      - cph1_zp_hh.csv
      - cph1_zp_vh.csv
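Before running the preprocessing, it can be useful to verify that the unzipped data matches the layout above. The sketch below is not part of the repository; the helper `find_missing` is hypothetical, and it only checks the fixed file names listed above. The gopro folder is checked at the directory level only, since the listing above elides most of its contents.

```python
from pathlib import Path

# Paths relative to the data folder, taken from the layout listed above.
EXPECTED = [
    "raw/AutoPi_CAN/platoon_CPH1_HH.hdf5",
    "raw/AutoPi_CAN/platoon_CPH1_VH.hdf5",
    "raw/gopro",
    "raw/ref_data/cph1_aran_hh.csv",
    "raw/ref_data/cph1_aran_vh.csv",
    "raw/ref_data/cph1_fric_hh.csv",
    "raw/ref_data/cph1_fric_vh.csv",
    "raw/ref_data/cph1_iri_mpd_rut_hh.csv",
    "raw/ref_data/cph1_iri_mpd_rut_vh.csv",
    "raw/ref_data/cph1_zp_hh.csv",
    "raw/ref_data/cph1_zp_vh.csv",
]


def find_missing(data_root="data"):
    """Return the expected relative paths that do not exist under data_root."""
    root = Path(data_root)
    return [rel for rel in EXPECTED if not (root / rel).exists()]


if __name__ == "__main__":
    missing = find_missing("data")
    if missing:
        print("Missing from data/:")
        for rel in missing:
            print(f"  {rel}")
    else:
        print("Data layout looks complete.")
```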

The data goes through several preprocessing steps before it is ready for use in the feature extractor.

- Convert

- Validate

- Segment

- Matching

- Resampling

- KPIs

To run all preprocessing steps, call

`python src/data/make_dataset.py all`

A single step can be run by changing all to the desired step (e.g. matching). You can also run from a given step (inclusive) to the end, e.g. from validate:

`python src/data/make_dataset.py --begin-from validate`

The main data preprocessing script is found in src/data/make_dataset.py. It has the following arguments and default parameters

- `mode all`
- `--begin-from` (False)
- `--skip-gopro` (False)
- `--speed-threshold 5`
- `--time-threshold 10`
- `--verbose` (False)

There are two feature extractors implemented in this repository: HYDRA and MultiRocket. They are found in src/models/hydra and src/models/multirocket.

The main feature extraction is found in src/data/feature_extraction.py. It has the following arguments and default parameters

- `--cols acc.xyz_0 acc.xyz_1 acc.xyz_2`
- `--all_cols` (default False)
- `--all_cols_wo_location` (default False)
- `--feature_extractor both` (choices: `multirocket`, `hydra`, `both`)
- `--mr_num_features 50000`
- `--hydra_k 8`
- `--hydra_g 64`
- `--subset None`
- `--name_identifier` (empty string)
- `--folds 5`
- `--seed 42`

To extract features using HYDRA and MultiRocket, call

`python src/data/feature_extraction.py`

The script will automatically set up the feature extractors based on the number of cols (1 = univariate, >1 = multivariate). The features will be stored in data/processed/features.hdf5, along with the statistics used to standardize during training and prediction. Features and statistics are saved under each feature extractor's name, as defined in the model scripts.
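To illustrate why statistics are stored next to the features: standardization is typically fitted on the training features, and the same mean and standard deviation are then reused at prediction time. The sketch below works on plain Python lists rather than the HDF5 file, and the function names `fit_statistics` and `standardize` are hypothetical; the repository's own implementation may differ.

```python
from statistics import fmean, pstdev


def fit_statistics(train_rows):
    """Column-wise mean and standard deviation of the training features."""
    columns = list(zip(*train_rows))
    means = [fmean(col) for col in columns]
    # Replace a zero standard deviation (constant column) with 1.0
    # to avoid division by zero.
    stds = [pstdev(col) or 1.0 for col in columns]
    return means, stds


def standardize(rows, means, stds):
    """Apply previously fitted statistics to any set of feature rows."""
    return [[(x - m) / s for x, m, s in zip(row, means, stds)] for row in rows]


if __name__ == "__main__":
    # Toy features: three training samples, one test sample, two columns.
    train = [[1.0, 10.0], [3.0, 10.0], [5.0, 10.0]]
    test = [[3.0, 12.0]]
    means, stds = fit_statistics(train)
    print(standardize(test, means, stds))
```

Fitting the statistics on the training split only keeps information from the validation and test data out of the model inputs.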

The structure of the HDF5 features file can be seen below

You can print the structure of your own features.hdf5 file with src/data/check_hdf5.py by calling

`python src/data/check_hdf5.py`

check_hdf5 has the following arguments and defaults

- `--file_path data/processed/features.hdf5`
- `--limit 3`
- `--summary` (False)

A simple model has been implemented in src/models/hydramr.py. The model training script is implemented in src/train_hydra_mr.py. It has the following arguments and default parameters

- `--epochs 50`
- `--batch_size 32`
- `--lr 1e-3`
- `--feature_extractors HydraMV_8_64`
- `--name_identifier` (empty string)
- `--folds 5`
- `--model_name HydraMRRegressor`
- `--weight_decay 0.0`
- `--hidden_dim 64`
- `--project_name hydra_mr_test` (for wandb)
- `--dropout 0.5`
- `--model_depth 0`
- `--batch_norm` (False)

To train the model using Hydra on a multivariate dataset call

`python src/train_hydra_mr.py`

The trained model will be saved in models/, along with the best model during training (based on validation loss) for each fold. The training curves are saved in reports/figures/model_results.

Trained models will be saved based on the name in their model file scripts.
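Keeping the best model per fold based on validation loss can be sketched as below. This is a simplified illustration with made-up loss values, not the training loop in src/train_hydra_mr.py; `best_epoch_per_fold` is a hypothetical helper, and in a real loop the model weights would be saved whenever the best loss improves.

```python
def best_epoch_per_fold(val_losses_by_fold):
    """Return {fold: (best_epoch, best_loss)} from per-epoch validation losses."""
    best = {}
    for fold, losses in enumerate(val_losses_by_fold):
        best_epoch, best_loss = None, float("inf")
        for epoch, loss in enumerate(losses):
            if loss < best_loss:
                # In a real training loop, a checkpoint would be written here.
                best_epoch, best_loss = epoch, loss
        best[fold] = (best_epoch, best_loss)
    return best


if __name__ == "__main__":
    # Made-up validation losses for two folds and four epochs each.
    losses = [[0.9, 0.6, 0.7, 0.65], [1.1, 0.8, 0.5, 0.55]]
    print(best_epoch_per_fold(losses))
```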

To predict using the trained model use the script src/predict_model.py. It has the following arguments and default parameters

- `--model models/HydraMRRegressor/HydraMRRegressor.pt`
- `--data data/processed/features.hdf5`
- `--name_identifier` (empty string)
- `--data_type test`
- `--batch_size 32`
- `--plot_during` (False)
- `--fold -1`
- `--save_predictions` (False)

To run the script on the saved HydraMRRegressor model, call

`python src/predict_model.py`

The model will predict on the specified data set, plot the predictions and save them in reports/figures/model_results.

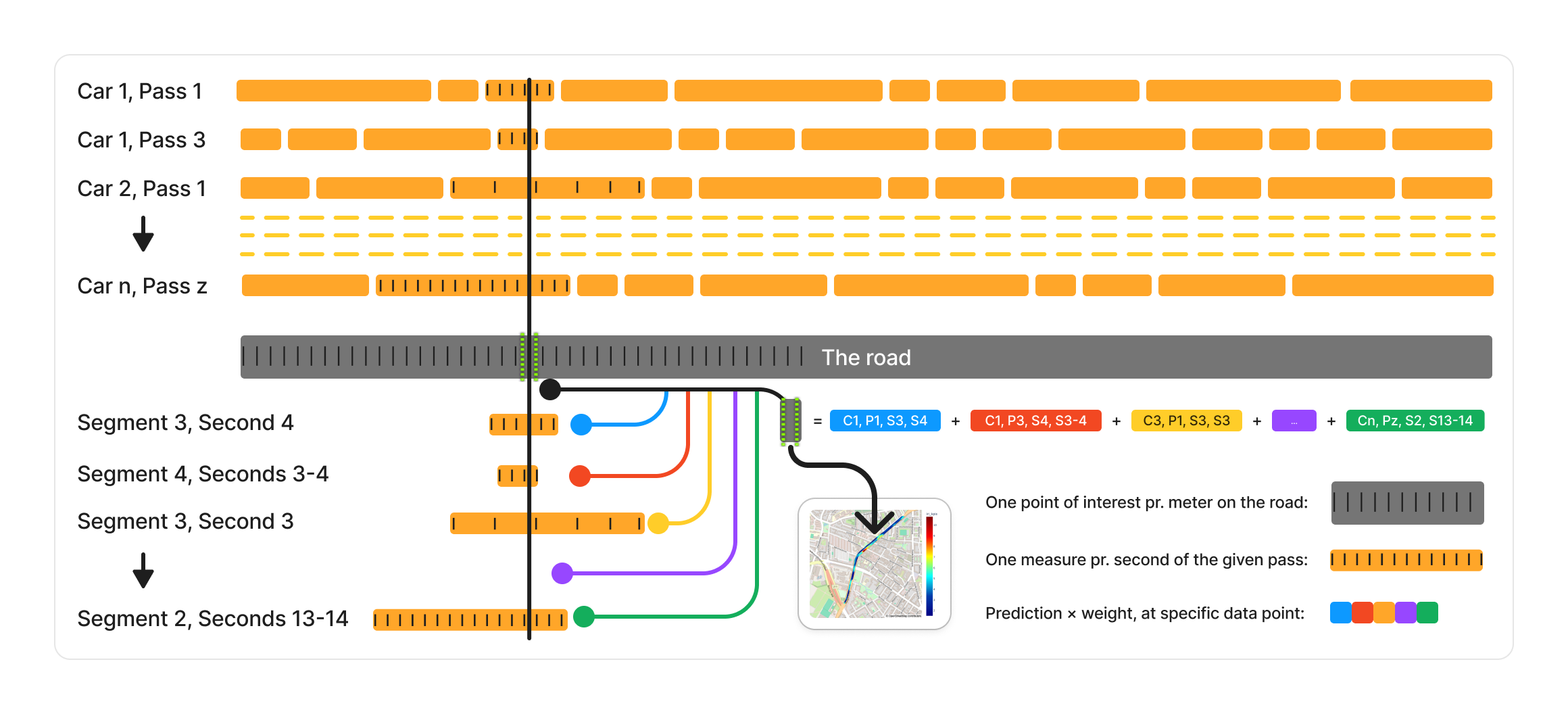

Finally, the predictions are translated to the actual locations in the real world; the process can be seen in the top figure of the README. To investigate it, one can run the Points of Interest notebook notebooks/POI.ipynb.

- David Ari Ostenfeldt

- Dennis Chenxi Zhuang

- Kasper Niklas Kjær Hansen

- Kristian Rhindal Møllmann

- Kristoffer Marboe

Supervisors

- Asmus Skar

- Tommy Sonne Alstrøm

LiRA-CD: An open-source dataset for road condition modelling and research

Asmus Skar, Anders M. Vestergaard, Thea Brüsch, Shahrzad Pour, Ekkart Kindler, Tommy Sonne Alstrøm, Uwe Schlotz, Jakob Elsborg Larsen, Matteo Pettinari. 2023. LiRA-CD: An open-source dataset for road condition modelling and research. Technical University of Denmark: Geotechnics & Geology, Department of Environmental and Resource Engineering, Cognitive Systems, Department of Applied Mathematics and Computer Science, Software Systems Engineering, Sweco Danmark A/S, Danish Road Directorate.

HYDRA: Competing convolutional kernels for fast and accurate time series classification

Angus Dempster and Daniel F. Schmidt and Geoffrey I. Webb

Angus Dempster and Daniel F. Schmidt and Geoffrey I. Webb. HYDRA: Competing convolutional kernels for fast and accurate time series classification. 2022.

MultiRocket: Multiple pooling operators and transformations for fast and effective time series classification

Tan, Chang Wei and Dempster, Angus and Bergmeir, Christoph and Webb, Geoffrey I

Tan, Chang Wei and Dempster, Angus and Bergmeir, Christoph and Webb, Geoffrey I. 2021. MultiRocket: Multiple pooling operators and transformations for fast and effective time series classification.

Created using mlops_template, a cookiecutter template for getting started with Machine Learning Operations (MLOps).

See LICENSE

Copyright 2024 FleetYeeters

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License.