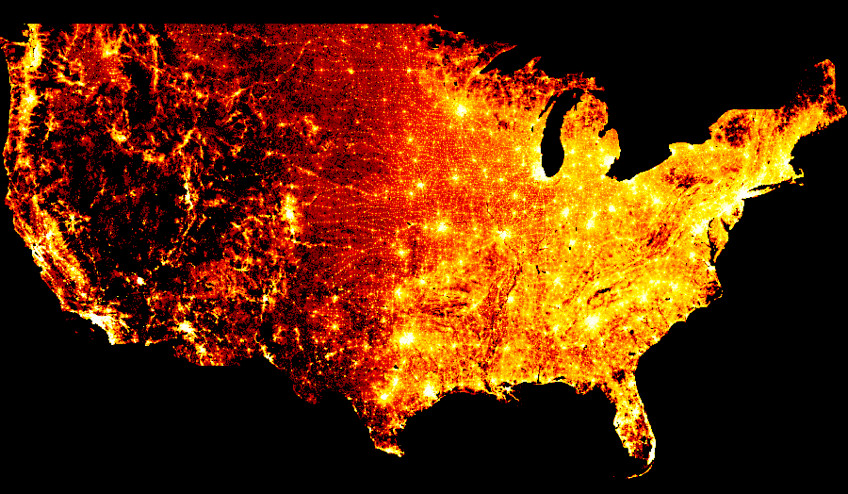

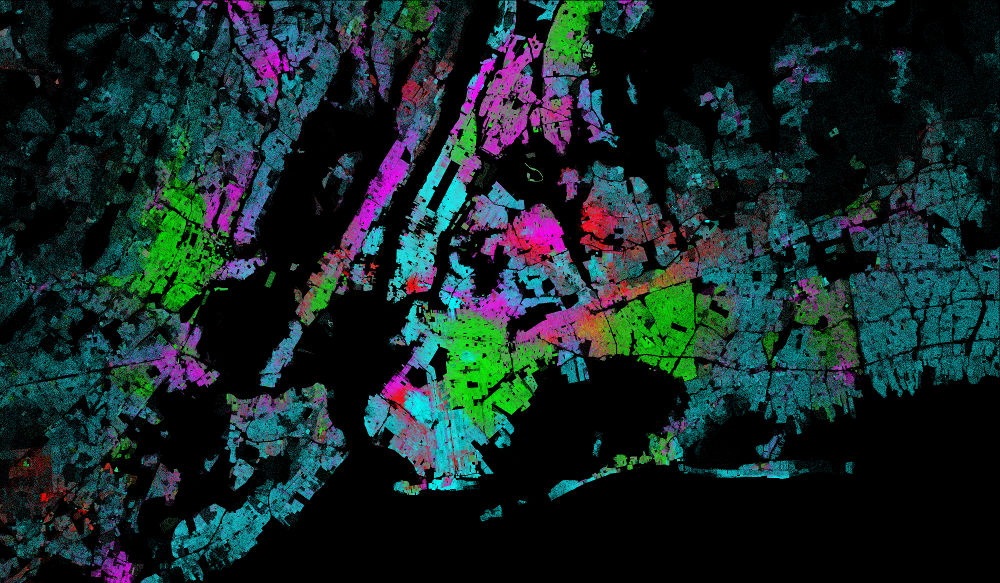

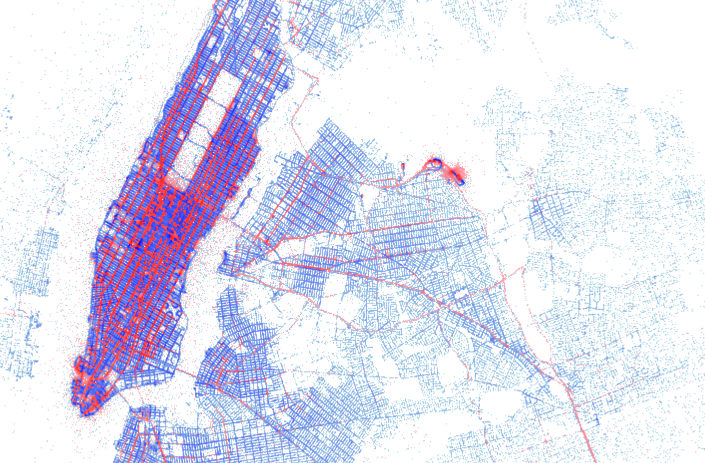

Datashader is a data rasterization pipeline for automating the process of creating meaningful representations of large amounts of data. Datashader breaks the creation of images of data into 3 main steps:

- Projection

  Each record is projected into zero or more bins of a nominal plotting grid shape, based on a specified glyph.

- Aggregation

  Reductions are computed for each bin, compressing the potentially large dataset into a much smaller aggregate array.

- Transformation

  These aggregates are then further processed, eventually creating an image.
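To make the three steps concrete, here is a minimal pure-Python sketch of the same idea. It is only an illustration and is not Datashader's implementation or API: the function names `project`, `aggregate`, and `transform` are hypothetical, the glyph is assumed to be a single point, the reduction is assumed to be a count, and the transformation is assumed to be a log scaling to grayscale values.

```python
import math
from collections import Counter


def project(points, x_range, y_range, width, height):
    """Projection: map each (x, y) record to a (row, col) bin of the grid.

    A point glyph lands in at most one bin; records outside the ranges
    land in zero bins and are skipped.
    """
    (x0, x1), (y0, y1) = x_range, y_range
    for x, y in points:
        if not (x0 <= x <= x1 and y0 <= y <= y1):
            continue
        # Clamp so that a point exactly on the upper edge falls in the last bin.
        col = min(int((x - x0) / (x1 - x0) * width), width - 1)
        row = min(int((y - y0) / (y1 - y0) * height), height - 1)
        yield row, col


def aggregate(bins, width, height):
    """Aggregation: reduce the records in each bin to a count."""
    counts = Counter(bins)
    return [[counts[(r, c)] for c in range(width)] for r in range(height)]


def transform(agg):
    """Transformation: log-scale the counts to grayscale values in 0..255."""
    peak = max(max(row) for row in agg)
    if peak == 0:
        return [[0] * len(row) for row in agg]
    scale = 255 / math.log1p(peak)
    return [[round(math.log1p(v) * scale) for v in row] for row in agg]


# Hypothetical sample data: five records, one of them outside the plot range.
points = [(0.1, 0.1), (0.15, 0.12), (0.9, 0.9), (0.5, 0.5), (2.0, 2.0)]
agg = aggregate(project(points, (0, 1), (0, 1), 4, 4), 4, 4)
image = transform(agg)
```

Here `agg` is a 4x4 grid of counts regardless of how many records went in, which is the sense in which aggregation compresses a large dataset into a much smaller array; `image` is then derived from `agg` alone.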

Using this very general pipeline, many interesting data visualizations can be created in a performant and scalable way. Datashader contains tools for easily creating these pipelines in a composable manner, using only a few lines of code. Datashader can be used on its own, but it is also designed to work as a pre-processing stage in a plotting library, allowing that library to work with much larger datasets than it would otherwise.

The best way to get started with Datashader is to install it together with our extensive set of examples, following the instructions in the examples README.

If all you need is Datashader itself, without any of the files used in the examples, you can install it from the bokeh channel using the conda package manager:

    conda install -c bokeh datashader

If you want the very latest unreleased changes to Datashader (e.g. to edit the source code yourself), first install using conda as above to ensure the dependencies are installed, then tell Python to use a git clone instead:

    conda remove --force datashader
    git clone https://github.com/bokeh/datashader.git
    cd datashader
    pip install -e .

Datashader is not currently available on PyPI. This avoids the broken or low-performance installations that result from not keeping track of C/C++ binary dependencies such as LLVM (required by Numba).

To run the test suite, first install pytest (e.g. `conda install pytest`), then run `py.test datashader` in your datashader source directory.

After working through the examples, you can find additional resources linked from the datashader documentation, including API documentation and papers and talks about the approach.