SMPL character animation in Unity

with real-time estimated 3D pose from a single monocular RGB image

Many models estimate a 3D human mesh, including its shape, using an SMPL-like body model; ExPose, VIBE, and HuManiFlow are a few examples. Some of them reconstruct human pose quite well in real time. However, they are hard to bring into Unity because of ONNX/Barracuda compatibility problems. Even when the conversion works, converting the Python model to ONNX can reduce accuracy, and the converted model may be too slow to run in real time.

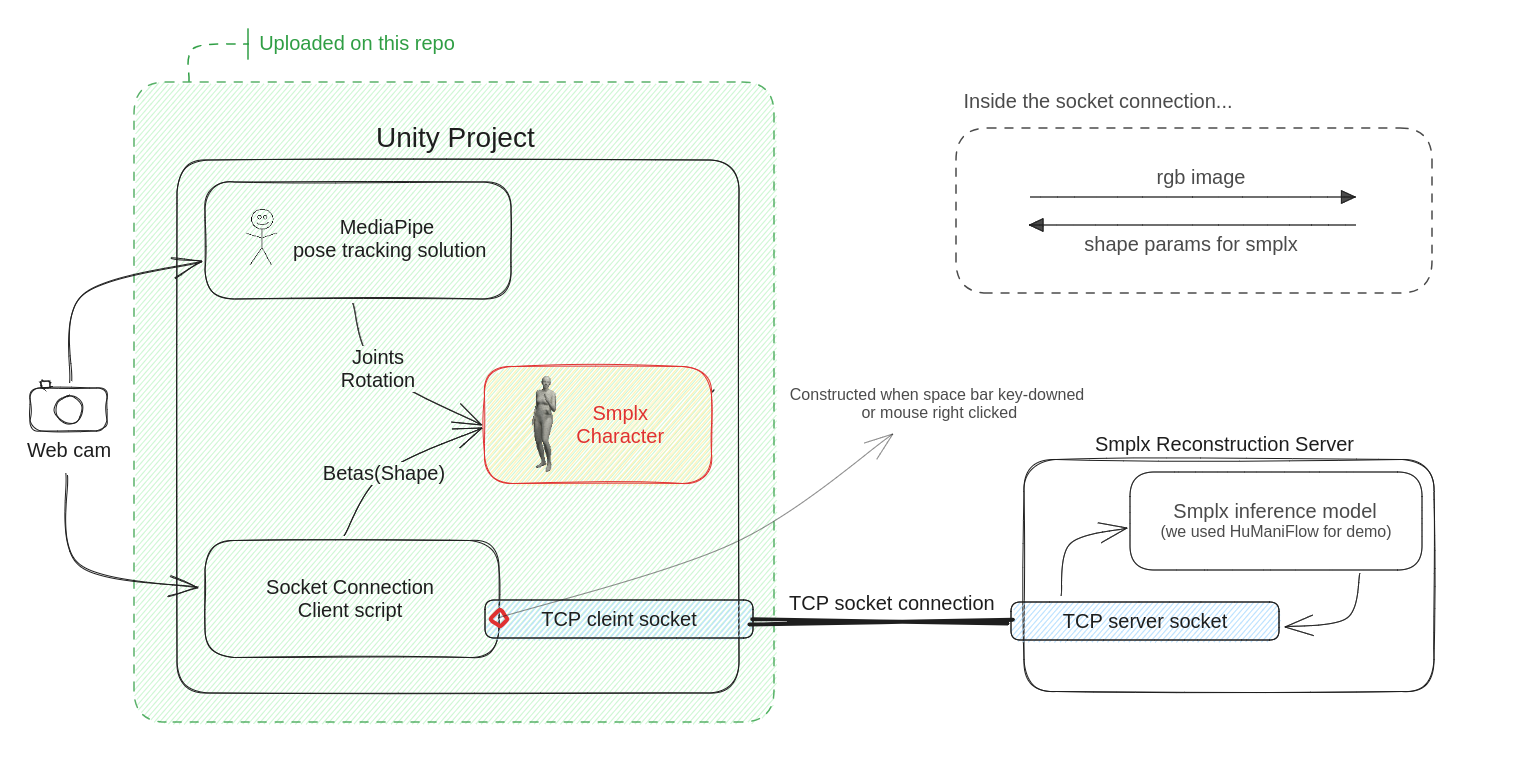

So we split the system into two submodules: shape reconstruction and pose estimation. Shape reconstruction is handled by a Python server. When the client (the Unity side) requests the human shape for the current frame, it sends the frame image as bytes to the server and waits asynchronously for a response. When the server receives the image data, it crops or pads the image to the input size expected by its own SMPL-like mesh inference model (we used HuManiFlow for the demo) and forwards it to that model. After inference, the shape parameters (betas) are packed into a response message and sent to the client, and the connection is closed.
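How the frame is cropped or padded depends on the model's expected input. As an illustration only, the sketch below computes a "letterbox" fit: scale the frame uniformly so it fits inside the target size without distortion, then pad the leftover border evenly. Letterboxing is our assumption here, not necessarily what HuManiFlow's own preprocessing does, and `letterbox_params` and `Letterbox` are hypothetical names.

```python
from typing import NamedTuple


class Letterbox(NamedTuple):
    """Resize-then-pad parameters for fitting a frame into a fixed model input."""
    new_width: int
    new_height: int
    pad_left: int
    pad_right: int
    pad_top: int
    pad_bottom: int


def letterbox_params(width: int, height: int, target_w: int, target_h: int) -> Letterbox:
    """Scale (width, height) uniformly to fit inside (target_w, target_h) and pad
    the rest evenly; an odd leftover pixel goes to the right/bottom side."""
    if min(width, height, target_w, target_h) <= 0:
        raise ValueError("all sizes must be positive")
    # Integer cross-multiplication decides which side limits the scale, and
    # (2*a*b + c) // (2*c) rounds a*b/c half-up without floating-point error.
    if target_w * height <= target_h * width:
        new_w = target_w
        new_h = max(1, (2 * height * target_w + width) // (2 * width))
    else:
        new_h = target_h
        new_w = max(1, (2 * width * target_h + height) // (2 * height))
    pad_x, pad_y = target_w - new_w, target_h - new_h
    return Letterbox(new_w, new_h,
                     pad_x // 2, pad_x - pad_x // 2,
                     pad_y // 2, pad_y - pad_y // 2)


if __name__ == "__main__":
    # A 640x480 webcam frame fitted into a 224x224 model input.
    print(letterbox_params(640, 480, 224, 224))
    # Letterbox(new_width=224, new_height=168, pad_left=0, pad_right=0, pad_top=28, pad_bottom=28)
```

Padding instead of stretching keeps the body proportions intact, which matters when the output is a shape estimate.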

Pose estimation is done by Unity itself. We selected MediaPipe for pose estimation(MediaPipeUnityPlugin; Pre-developed Plugin are there already). Thanks to homuler(the author of the plugin), we could get 3d pose estimated data without networking.

Here is the architecture diagram.

If you want SMPL-X body shapes to be set automatically, you have to run your own SMPL-X reconstruction server. The two endpoints (the Unity client and the server) are connected over TCP.

Create a .txt file containing the hostname (IPv4 address) and port number of the shape inference server, and place it in the ./Assets/RealTimeSMPL/ShapeConf/Secrets/ directory.

Once you assign this file to the IpConf variable of the UnitySocketCleint_auto.cs script, the script sends an image frame, receives the betas, and passes them to the target SMPL-X mesh in sequence.

The script is attached to

Main Canvas > ContainerPanel > Body > Annotatable Screen

in the Hierarchy window of the Assets > RealTimeSMPL > Scene > RealTimeSMPLX.unity scene.

The hostname and port number must be on separate lines, like this:

```
xxx.xxx.xx.xxx
0000
```
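To test the server outside Unity, for example from a small Python client, the same two-line file can be read as below. This is a hypothetical helper that mirrors the format described above, not the parser inside UnitySocketCleint_auto.cs; ignoring blank lines and surrounding whitespace is our own choice, so that a trailing newline or Windows line endings do not matter.

```python
from pathlib import Path
from typing import Tuple


def parse_ip_conf(text: str) -> Tuple[str, int]:
    """Return (host, port) from 'host\\nport' text, ignoring blank lines and whitespace."""
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    if len(lines) < 2:
        raise ValueError("expected a hostname line followed by a port line")
    host = lines[0]
    port = int(lines[1])  # raises ValueError if the port line is not a number
    if not 0 < port < 65536:
        raise ValueError(f"port out of range: {port}")
    return host, port


def load_ip_conf(path: str) -> Tuple[str, int]:
    """Read and parse a config file such as one placed under ShapeConf/Secrets/."""
    return parse_ip_conf(Path(path).read_text(encoding="utf-8"))


if __name__ == "__main__":
    print(parse_ip_conf("192.168.0.10\n\n5000\n"))  # ('192.168.0.10', 5000)
```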

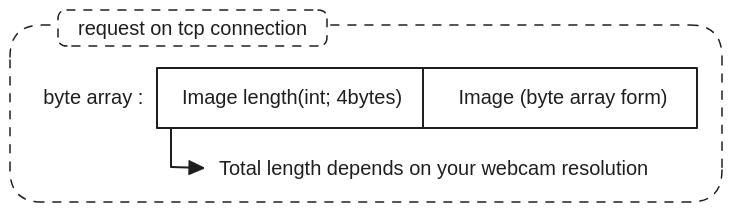

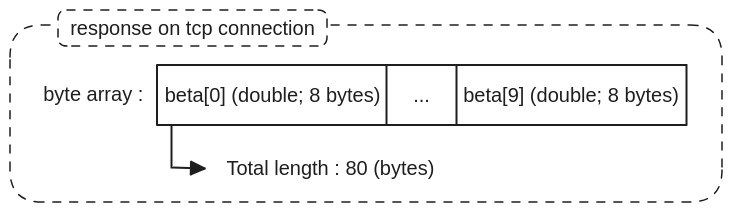

- request

- response

These two byte arrays are sent over the TCP stream. As long as a valid file is assigned to the IpConf variable, the client writes requests to the stream in the correct format. When implementing the inference server, you must follow the response message format.
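The exact byte layouts are defined by the request and response figures above, so the following is only a sketch under an assumed framing: the request is a 4-byte big-endian length followed by the encoded image, and the response is a 4-byte big-endian count followed by that many little-endian float32 betas. `handle_connection`, `serve`, and the `infer_betas` callback are hypothetical names; substitute the real message format and your model call (for example, HuManiFlow inference).

```python
import socket
import struct
from typing import Callable, List


def recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes; a single recv() may return fewer than requested."""
    chunks = []
    remaining = n
    while remaining:
        chunk = sock.recv(remaining)
        if not chunk:
            raise ConnectionError("peer closed the connection early")
        chunks.append(chunk)
        remaining -= len(chunk)
    return b"".join(chunks)


def encode_response(betas: List[float]) -> bytes:
    """Assumed layout: uint32 count (big-endian) + count float32 values (little-endian)."""
    return struct.pack(">I", len(betas)) + struct.pack(f"<{len(betas)}f", *betas)


def decode_response(sock: socket.socket) -> List[float]:
    """Client side: read the count, then the betas."""
    (count,) = struct.unpack(">I", recv_exact(sock, 4))
    return list(struct.unpack(f"<{count}f", recv_exact(sock, 4 * count)))


def handle_connection(conn: socket.socket,
                      infer_betas: Callable[[bytes], List[float]]) -> None:
    """Serve one request, then close: read image bytes, infer, reply with betas."""
    with conn:
        (size,) = struct.unpack(">I", recv_exact(conn, 4))
        image_bytes = recv_exact(conn, size)
        conn.sendall(encode_response(infer_betas(image_bytes)))


def serve(host: str, port: int, infer_betas: Callable[[bytes], List[float]]) -> None:
    """Accept clients one at a time; each connection carries one request/response."""
    with socket.create_server((host, port)) as srv:
        while True:
            conn, _ = srv.accept()
            handle_connection(conn, infer_betas)
```

Closing the socket after each reply matches the one-request-per-connection flow described earlier; `recv_exact` is needed because TCP is a byte stream and does not preserve message boundaries.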

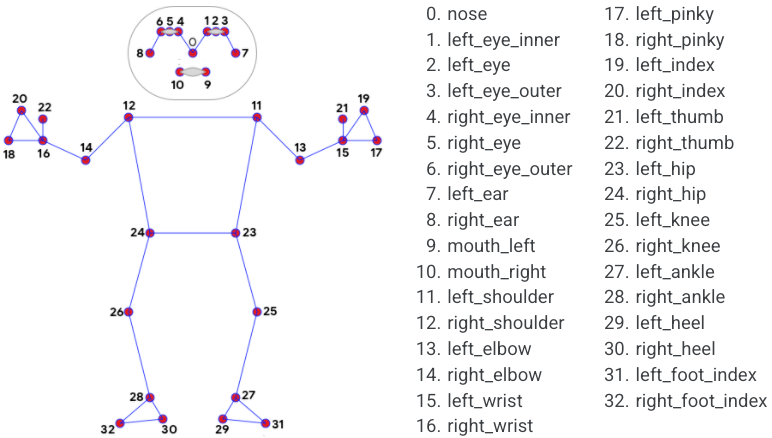

We used the MediaPipe framework for 3D pose estimation.

From the estimated data we obtain each joint's rotation angles. Because the skeleton structure and the number of joints in MediaPipe differ from those in SMPL/SMPL-X, we had to map MediaPipe's joints onto the SMPL/SMPL-X skeleton as closely as possible.
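One common way to turn matched joint positions into rotations is to rotate each SMPL bone's rest direction onto the observed direction between two corresponding MediaPipe landmarks (a "shortest-arc" rotation). The sketch below shows that idea for the limbs. The indices follow the standard MediaPipe Pose landmark numbering (11/12 shoulders, 13/14 elbows, 15/16 wrists, 23/24 hips, 25/26 knees, 27/28 ankles) and the SMPL joint numbering (1/2 hips, 4/5 knees, 7/8 ankles, 16/17 shoulders, 18/19 elbows, 20/21 wrists). The table and helper names are illustrative and are not the mapping used in this project; the rotations are world-space, ignore bone twist, and assume both inputs already share one coordinate system.

```python
import math
from typing import Dict, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (w, x, y, z)

# Illustrative bone table: SMPL (parent, child) joints -> MediaPipe (start, end) landmarks.
SMPL_TO_MEDIAPIPE_BONES: Dict[Tuple[int, int], Tuple[int, int]] = {
    (16, 18): (11, 13),  # left upper arm
    (18, 20): (13, 15),  # left forearm
    (17, 19): (12, 14),  # right upper arm
    (19, 21): (14, 16),  # right forearm
    (1, 4): (23, 25),    # left thigh
    (4, 7): (25, 27),    # left shin
    (2, 5): (24, 26),    # right thigh
    (5, 8): (26, 28),    # right shin
}


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _normalize(v: Sequence[float]) -> Tuple[float, ...]:
    n = math.sqrt(sum(c * c for c in v))
    if n < 1e-9:
        raise ValueError("cannot normalize a zero-length vector")
    return tuple(c / n for c in v)


def shortest_arc_quat(u: Vec3, v: Vec3) -> Quat:
    """Unit quaternion (w, x, y, z) that rotates direction u onto direction v."""
    u, v = _normalize(u), _normalize(v)
    d = _dot(u, v)
    if d < -1.0 + 1e-9:
        # Opposite directions: rotate 180 degrees about any axis perpendicular to u.
        axis = _cross(u, (1.0, 0.0, 0.0))
        if _dot(axis, axis) < 1e-12:
            axis = _cross(u, (0.0, 1.0, 0.0))
        x, y, z = _normalize(axis)
        return (0.0, x, y, z)
    c = _cross(u, v)
    return _normalize((1.0 + d, c[0], c[1], c[2]))


def rotate(q: Quat, v: Vec3) -> Vec3:
    """Apply unit quaternion q to vector v."""
    w, qv = q[0], (q[1], q[2], q[3])
    t = tuple(2.0 * c for c in _cross(qv, v))
    tc = _cross(qv, t)
    return (v[0] + w * t[0] + tc[0], v[1] + w * t[1] + tc[1], v[2] + w * t[2] + tc[2])


def bone_rotations(rest_joints: Sequence[Vec3], landmarks: Sequence[Vec3],
                   bones: Dict[Tuple[int, int], Tuple[int, int]] = SMPL_TO_MEDIAPIPE_BONES,
                   ) -> Dict[Tuple[int, int], Quat]:
    """Per SMPL bone, the rotation aligning its rest direction with the landmark direction."""
    result = {}
    for (parent, child), (start, end) in bones.items():
        rest_dir = _sub(rest_joints[child], rest_joints[parent])
        target_dir = _sub(landmarks[end], landmarks[start])
        result[(parent, child)] = shortest_arc_quat(rest_dir, target_dir)
    return result
```

Driving an SMPL rig would additionally require converting these world rotations into parent-relative local rotations before applying them to each joint.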

The demo scene is located at ./RealTimeSMPL/Scene/RealTimeSMPLX. Run the scene and watch your SMPL avatar dance just like you.

If you have set up your own SMPL mesh reconstruction server and configured the connection correctly, the mesh's shape is updated every time you press the space bar or right-click.

This repository does not contain required libraries (e.g. libmediapipe_c.so, Google.Protobuf.dll, etc).

Instead of using git clone, download the complete package from this page and extract it wherever you like.

This repository is based on...

- Unity 2021.3.18

- MediaPipeUnityPlugin_v0.11.0

and tested on

- OS : Ubuntu 20.04, Windows 10

- Processor : AMD Ryzen 7 5800X + RTX 3080 Ti / AMD Ryzen 5 4500U with Radeon Graphics

Despite these efforts, the movements still look less natural than when the joints of SMPL/SMPL-X meshes are inferred and rendered in real time in Python, as shown in the demo video linked below. If we can find a way to perform real-time pose estimation in Unity that matches the skeleton structure used in SMPL, the results will likely improve.

Original README from MediaPipeUnityPlugin repository

This is a Unity (2021.3.18f1) Native Plugin to use MediaPipe (0.9.1).

The goal of this project is to port the MediaPipe API (C++) one by one to C# so that it can be called from Unity.

This approach may sacrifice performance when you need to call multiple APIs in a loop, but it gives you the flexibility to use MediaPipe instead.

With this plugin, you can

- Write MediaPipe code in C#.

- Run MediaPipe's official solution on Unity.

- Run your custom `Calculator` and `CalculatorGraph` on Unity.

  - ⚠️ Depending on the type of input/output, you may need to write C++ code.

Here is a Hello World! example.

Compare it with the official code!

```csharp
using Mediapipe;
using UnityEngine;

public sealed class HelloWorld : MonoBehaviour
{
  // Two chained PassThroughCalculators: in -> out1 -> out
  private const string _ConfigText = @"
input_stream: ""in""
output_stream: ""out""
node {
  calculator: ""PassThroughCalculator""
  input_stream: ""in""
  output_stream: ""out1""
}
node {
  calculator: ""PassThroughCalculator""
  input_stream: ""out1""
  output_stream: ""out""
}
";

  private void Start()
  {
    var graph = new CalculatorGraph(_ConfigText);
    // Poll the string packets emitted on the "out" stream.
    var poller = graph.AddOutputStreamPoller<string>("out").Value();
    graph.StartRun().AssertOk();

    // Feed ten packets with increasing timestamps.
    for (var i = 0; i < 10; i++)
    {
      graph.AddPacketToInputStream("in", new StringPacket("Hello World!", new Timestamp(i))).AssertOk();
    }

    // Signal that no more input will arrive so the graph can finish.
    graph.CloseInputStream("in").AssertOk();
    var packet = new StringPacket();

    while (poller.Next(packet))
    {
      Debug.Log(packet.Get());
    }
    graph.WaitUntilDone().AssertOk();
  }
}
```

For more detailed usage, see the API Overview page or the tutorial on the Getting Started page.

This repository does not contain required libraries (e.g. libmediapipe_c.so, Google.Protobuf.dll, etc).

You can download them from the release page instead.

| file | contents |
|---|---|
| `MediaPipeUnityPlugin-all.zip` | All the source code with required libraries. If you need to run sample scenes on your mobile devices, prefer this. |
| `com.github.homuler.mediapipe-*.tgz` | A tarball package |
| `MediaPipeUnityPlugin.*.unitypackage` | A .unitypackage file |

If you want to customize the package or minify the package size, you need to build them by yourself.

For a step-by-step guide, please refer to the Installation Guide on Wiki.

You can also make use of the Package Workflow on GitHub Actions after forking this repository.

⚠️ libraries that can be built differ depending on your environment.

⚠️ GPU mode is not supported on macOS and Windows.

| Editor | Linux (x86_64) | macOS (x86_64) | macOS (ARM64) | Windows (x86_64) | Android | iOS | WebGL | |

|---|---|---|---|---|---|---|---|---|

| Linux (AMD64) 1 | ✔️ | ✔️ | ✔️ | |||||

| Intel Mac | ✔️ | ✔️ | ✔️ | ✔️ | ||||

| M1 Mac | ✔️ | ✔️ | ✔️ | ✔️ | ||||

| Windows 10/11 (AMD64) 2 | ✔️ | ✔️ | ✔️ |

Here is a list of solutions that you can try in the sample app.

🔔 The graphs you can run are not limited to the ones in this list.

| | Android | iOS | Linux (GPU) | Linux (CPU) | macOS (CPU) | Windows (CPU) | WebGL |

|---|---|---|---|---|---|---|---|

| Face Detection | ✔️ | ✔️ | ✔️ | ✔️ | ✔️ | ✔️ | |

| Face Mesh | ✔️ | ✔️ | ✔️ | ✔️ | ✔️ | ✔️ | |

| Iris | ✔️ | ✔️ | ✔️ | ✔️ | ✔️ | ✔️ | |

| Hands | ✔️ | ✔️ | ✔️ | ✔️ | ✔️ | ✔️ | |

| Pose | ✔️ | ✔️ | ✔️ | ✔️ | ✔️ | ✔️ | |

| Holistic | ✔️ | ✔️ | ✔️ | ✔️ | ✔️ | ✔️ | |

| Selfie Segmentation | ✔️ | ✔️ | ✔️ | ✔️ | ✔️ | ✔️ | |

| Hair Segmentation | ✔️ | ✔️ | ✔️ | ✔️ | ✔️ | ✔️ | |

| Object Detection | ✔️ | ✔️ | ✔️ | ✔️ | ✔️ | ✔️ | |

| Box Tracking | ✔️ | ✔️ | ✔️ | ✔️ | ✔️ | ✔️ | |

| Instant Motion Tracking | ✔️ | ✔️ | ✔️ | ✔️ | ✔️ | ✔️ | |

| Objectron | ✔️ | ✔️ | ✔️ | ✔️ | ✔️ | ✔️ | |

| KNIFT |

Select Mediapipe/Samples/Scenes/Start Scene and play.

If you've built native libraries for CPU (i.e. --desktop cpu), select CPU for inference mode from the Inspector Window.

On Android and iOS, make sure that you select GPU for inference mode before building the app, because CPU inference mode is not supported there currently.

https://github.com/homuler/MediaPipeUnityPlugin/wiki

Note that some files are distributed under other licenses.

- MediaPipe (Apache License 2.0)

- emscripten (MIT)

  - `third_party/mediapipe_emscripten_patch.diff` contains code copied from emscripten

- FontAwesome (LICENSE)

  - Sample scenes use Font Awesome fonts

See also Third Party Notices.md.


Original README from smplx repository

[Paper Page] [Paper] [Supp. Mat.]

- License

- Description

- News

- Installation

- Downloading the model

- Loading SMPL-X, SMPL+H and SMPL

- MANO and FLAME correspondences

- Example

- Modifying the global pose of the model

- Citation

- Acknowledgments

- Contact

Software Copyright License for non-commercial scientific research purposes. Please read carefully the terms and conditions and any accompanying documentation before you download and/or use the SMPL-X/SMPLify-X model, data and software, (the "Model & Software"), including 3D meshes, blend weights, blend shapes, textures, software, scripts, and animations. By downloading and/or using the Model & Software (including downloading, cloning, installing, and any other use of this github repository), you acknowledge that you have read these terms and conditions, understand them, and agree to be bound by them. If you do not agree with these terms and conditions, you must not download and/or use the Model & Software. Any infringement of the terms of this agreement will automatically terminate your rights under this License.

The original images used for the figures 1 and 2 of the paper can be found in this link. The images in the paper are used under license from gettyimages.com. We have acquired the right to use them in the publication, but redistribution is not allowed. Please follow the instructions on the given link to acquire right of usage. Our results are obtained on the 483 × 724 pixels resolution of the original images.

SMPL-X (SMPL eXpressive) is a unified body model with shape parameters trained jointly for the face, hands and body. SMPL-X uses standard vertex-based linear blend skinning with learned corrective blend shapes, and has N = 10,475 vertices and K = 54 joints, which include joints for the neck, jaw, eyeballs and fingers. SMPL-X is defined by a function M(θ, β, ψ), where θ are the pose parameters, β the shape parameters, and ψ the facial expression parameters.
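Written out as in the SMPL-X paper (argument order adapted to the M(θ, β, ψ) used above), the template mesh T̄ is first deformed by shape, expression, and pose-corrective blend shapes, and the result is then posed by the linear blend skinning function W, using the joint locations J(β) and the blend weights 𝒲:

```math
\begin{aligned}
M(\theta, \beta, \psi) &= W\big(T_P(\theta, \beta, \psi),\, J(\beta),\, \theta,\, \mathcal{W}\big) \\
T_P(\theta, \beta, \psi) &= \bar{T} + B_S(\beta; \mathcal{S}) + B_E(\psi; \mathcal{E}) + B_P(\theta; \mathcal{P})
\end{aligned}
```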

- 3 November 2020: We release the code to transfer between the models in the SMPL family. For more details on the code, go to this readme file. A detailed explanation on how the mappings were extracted can be found here.

- 23 September 2020: A UV map is now available for SMPL-X, please check the Downloads section of the website.

- 20 August 2020: The full shape and expression space of SMPL-X are now available.

To install the model, use one of the following options:

- To install from PyPi simply run:

```shell
pip install smplx[all]
```

- Clone this repository and install it using the setup.py script:

```shell
git clone https://github.com/vchoutas/smplx
cd smplx
python setup.py install
```

To download the SMPL-X model go to this project website and register to get access to the downloads section.

To download the SMPL+H model go to this project website and register to get access to the downloads section.

To download the SMPL model go to this (male and female models) and this (gender neutral model) project website and register to get access to the downloads section.

The loader gives the option to use any of the SMPL-X, SMPL+H, SMPL, and MANO models. Depending on the model you want to use, please follow the respective download instructions. To switch between MANO, SMPL, SMPL+H and SMPL-X just change the model_path or model_type parameters. For more details please check the docs of the model classes. Before using SMPL and SMPL+H you should follow the instructions in tools/README.md to remove the Chumpy objects from both model pkls, as well as merge the MANO parameters with SMPL+H.

You can either use the create function from body_models or directly call the constructor for the SMPL, SMPL+H and SMPL-X model. The path to the model can either be the path to the file with the parameters or a directory with the following structure:

```
models
├── smpl
│   ├── SMPL_FEMALE.pkl
│   ├── SMPL_MALE.pkl
│   └── SMPL_NEUTRAL.pkl
├── smplh
│   ├── SMPLH_FEMALE.pkl
│   └── SMPLH_MALE.pkl
├── mano
│   ├── MANO_RIGHT.pkl
│   └── MANO_LEFT.pkl
└── smplx
    ├── SMPLX_FEMALE.npz
    ├── SMPLX_FEMALE.pkl
    ├── SMPLX_MALE.npz
    ├── SMPLX_MALE.pkl
    ├── SMPLX_NEUTRAL.npz
    └── SMPLX_NEUTRAL.pkl
```

The vertex correspondences between SMPL-X and MANO, FLAME can be downloaded from the project website. If you have extracted the correspondence data in the folder correspondences, then use the following scripts to visualize them:

- To view MANO correspondences run the following command:

```shell
python examples/vis_mano_vertices.py --model-folder $SMPLX_FOLDER --corr-fname correspondences/MANO_SMPLX_vertex_ids.pkl
```

- To view FLAME correspondences run the following command:

```shell
python examples/vis_flame_vertices.py --model-folder $SMPLX_FOLDER --corr-fname correspondences/SMPL-X__FLAME_vertex_ids.npy
```

After installing the smplx package and downloading the model parameters you should be able to run the demo.py script to visualize the results. For this step you have to install the pyrender and trimesh packages.

```shell
python examples/demo.py --model-folder $SMPLX_FOLDER --plot-joints=True --gender="neutral"
```

If you want to modify the global pose of the model, i.e. the root rotation and translation, to a new coordinate system for example, you need to take into account that the model rotation uses the pelvis as the center of rotation. A more detailed description can be found in the following link. If something is not clear, please let me know so that I can update the description.
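A minimal sketch of the bookkeeping, assuming the root-level transform maps a rest-pose point v to R_root(v − J₀) + J₀ + t, where J₀ is the rest-pose pelvis joint (which depends only on β). Applying a rigid transform x ↦ R_w·x + t_w to the posed model then gives R_w·R_root(v − J₀) + R_w(J₀ + t) + t_w, so the new root rotation is R_w·R_root and the new translation is R_w(J₀ + t) + t_w − J₀; folding t_w directly into the translation would be wrong whenever J₀ is not at the origin. Plain nested lists stand in for NumPy/PyTorch tensors, and `transform_root` and `pose_point` are hypothetical helpers, not part of the smplx package.

```python
from typing import List, Tuple

Mat3 = List[List[float]]
Vec3 = List[float]


def matmul(a: Mat3, b: Mat3) -> Mat3:
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def matvec(a: Mat3, v: Vec3) -> Vec3:
    return [sum(a[i][k] * v[k] for k in range(3)) for i in range(3)]


def pose_point(r_root: Mat3, transl: Vec3, pelvis: Vec3, v: Vec3) -> Vec3:
    """Root-level model transform: rotate about the pelvis, then translate."""
    local = matvec(r_root, [v[i] - pelvis[i] for i in range(3)])
    return [local[i] + pelvis[i] + transl[i] for i in range(3)]


def transform_root(r_root: Mat3, transl: Vec3, pelvis: Vec3,
                   r_world: Mat3, t_world: Vec3) -> Tuple[Mat3, Vec3]:
    """Return (new_root_rotation, new_transl) equivalent to applying
    x -> r_world @ x + t_world to the already posed model."""
    new_root = matmul(r_world, r_root)
    moved = matvec(r_world, [pelvis[i] + transl[i] for i in range(3)])
    new_transl = [moved[i] + t_world[i] - pelvis[i] for i in range(3)]
    return new_root, new_transl
```

With the smplx package, the new root rotation would still have to be converted back to axis-angle before it is passed as `global_orient`, with the new translation passed as `transl`.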

Depending on which model is loaded for your project, i.e. SMPL-X or SMPL+H or SMPL, please cite the most relevant work below, listed in the same order:

```bibtex
@inproceedings{SMPL-X:2019,
  title = {Expressive Body Capture: 3D Hands, Face, and Body from a Single Image},
  author = {Pavlakos, Georgios and Choutas, Vasileios and Ghorbani, Nima and Bolkart, Timo and Osman, Ahmed A. A. and Tzionas, Dimitrios and Black, Michael J.},
  booktitle = {Proceedings IEEE Conf. on Computer Vision and Pattern Recognition (CVPR)},
  year = {2019}
}

@article{MANO:SIGGRAPHASIA:2017,
  title = {Embodied Hands: Modeling and Capturing Hands and Bodies Together},
  author = {Romero, Javier and Tzionas, Dimitrios and Black, Michael J.},
  journal = {ACM Transactions on Graphics, (Proc. SIGGRAPH Asia)},
  volume = {36},
  number = {6},
  series = {245:1--245:17},
  month = nov,
  year = {2017},
  month_numeric = {11}
}

@article{SMPL:2015,
  author = {Loper, Matthew and Mahmood, Naureen and Romero, Javier and Pons-Moll, Gerard and Black, Michael J.},
  title = {{SMPL}: A Skinned Multi-Person Linear Model},
  journal = {ACM Transactions on Graphics, (Proc. SIGGRAPH Asia)},
  month = oct,
  number = {6},
  pages = {248:1--248:16},
  publisher = {ACM},
  volume = {34},
  year = {2015}
}
```

This repository was originally developed for SMPL-X / SMPLify-X (CVPR 2019); you might be interested in having a look: https://smpl-x.is.tue.mpg.de.

Special thanks to Soubhik Sanyal for sharing the Tensorflow code used for the facial landmarks.

The code of this repository was implemented by Vassilis Choutas.

For questions, please contact [email protected].

For commercial licensing (and all related questions for business applications), please contact [email protected].

Footnotes

1. Tested on Arch Linux.

2. Running MediaPipe on Windows is experimental.