This repository is the official implementation of Evaluating the Robustness of Text-Conditioned Object Detection Models to False Captions.

- Problem Statement: Empirically, text-conditioned object detection (OD) models have shown weakness to negative captions (captions where the implied object is not in the image), and the means to evaluate this phenomenon systematically are currently limited.
- Approach: Use a large pretrained masked language model (MLM), e.g., RoBERTa, to create a dataset of negative captions. Perform a systematic evaluation of state-of-the-art (SOTA) MDETR models, measuring both robustness and runtime performance across model variants.
- Benefit: This work shows the strengths and limitations of current text-conditioned OD methods.
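The approach builds negative captions by asking an MLM to fill in a masked word. The helper below is a minimal sketch of only the masking step, assuming the input is a caption string plus the word to replace; `mask_word` is a hypothetical name and is not taken from `pre_MLM.ipynb`. It relies on the fact that RoBERTa's tokenizer uses `<mask>` as its mask token.

```python
import re

# Hypothetical helper for illustration; not the repository's implementation.
def mask_word(caption: str, word: str, mask_token: str = "<mask>") -> str:
    """Replace the first whole-word occurrence of `word` in `caption` with `mask_token`."""
    pattern = re.compile(rf"\b{re.escape(word)}\b", flags=re.IGNORECASE)
    masked, n = pattern.subn(mask_token, caption, count=1)
    if n == 0:
        raise ValueError(f"{word!r} not found in caption")
    return masked


print(mask_word("A dog runs across the grass.", "dog"))
# → A <mask> runs across the grass.
```

The masked string could then be passed to a fill-mask model, for example Hugging Face's `pipeline("fill-mask", model="roberta-large")`, which returns ranked candidate tokens with scores.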

- `data/`: Flickr30k annotation data and the output of negative-caption generation
- `sbatch/`: Slurm scripts used to run MDETR evaluation
- `eval_flickr.*`: Inference with MDETR models on our negative-caption dataset
- `eval_MLM.ipynb`: Analysis of the MLM (`roberta-large`) outputs
- `eval_runtime.ipynb`: Analysis of MDETR inference runtime
- `pre_MLM.ipynb`: Generation and preprocessing of the negative-caption dataset using `roberta-large`

You'll need to have Anaconda/Miniconda installed on your machine. You can duplicate our environment via the following command:

conda env create -f environment.yaml

This project relies on code from the official MDETR repo, so you'll need to clone it to your machine:

git clone https://github.com/ashkamath/mdetr.git

Download the flickr30k dataset: link

Finally, update the paths in eval_flickr.sh to match your environment:

- `OUTPUT_DIR`: Folder where evaluation results are saved
- `IMG_DIR`: Flickr30k image folder
- `MDETR_GIT_DIR`: Path to the MDETR repo cloned above

The core model evaluation is run via eval_flickr.sh:

`./eval_flickr.sh <batch size> <pretrained model> <gpu type>`

The currently supported pretrained models are:

- `mdetr_efficientnetB5`
- `mdetr_efficientnetB3`
- `mdetr_resnet101`

For example, to evaluate mdetr_resnet101 with a batch size of 8 on an RTX8000:

./eval_flickr.sh 8 mdetr_resnet101 rtx8000
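The authors ran their evaluations as Slurm jobs (see `sbatch/`). As a hypothetical local alternative, the sketch below builds one `eval_flickr.sh` command per batch size and model, using the three supported model names listed above. `build_commands` is an illustrative name, and only `rtx8000` is taken from the example; other GPU-type strings the script accepts are not documented here.

```python
import itertools
import shlex

# The pretrained models listed above as supported by eval_flickr.sh.
MODELS = ["mdetr_efficientnetB5", "mdetr_efficientnetB3", "mdetr_resnet101"]


def build_commands(batch_sizes, models=MODELS, gpu="rtx8000"):
    """Return one eval_flickr.sh command line per (batch size, model) pair."""
    return [
        shlex.join(["./eval_flickr.sh", str(bs), model, gpu])
        for bs, model in itertools.product(batch_sizes, models)
    ]


# Print only; to execute, pass shlex.split(cmd) to subprocess.run.
for cmd in build_commands([1, 8]):
    print(cmd)
```

Printing rather than executing keeps the sweep a dry run, which makes it easy to inspect before launching long GPU jobs.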

All MDETR evaluations were run as Slurm jobs; refer to `sbatch/` for the exact scripts used.

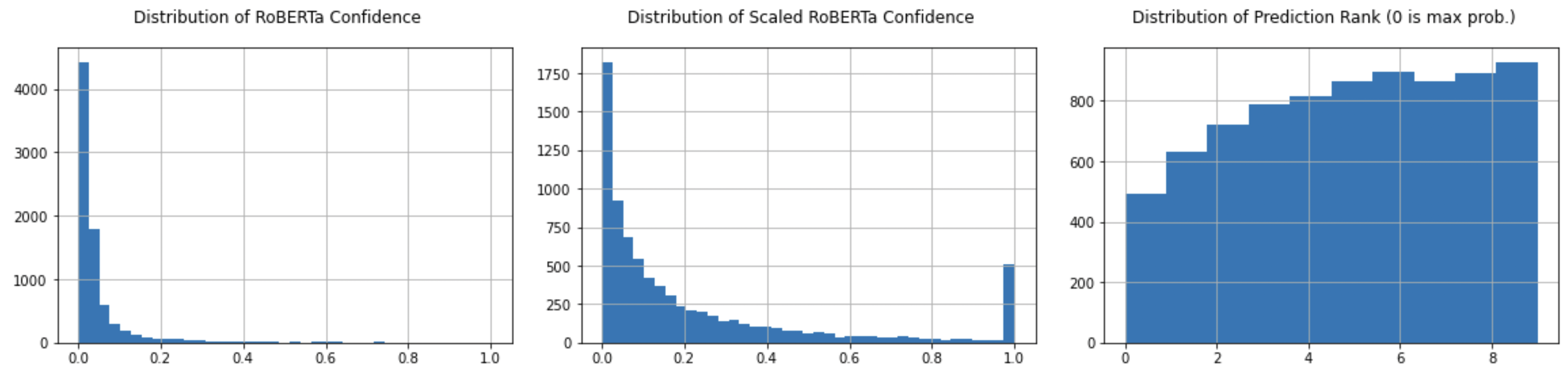

In general, RoBERTa's predictions have low confidence, but more predictions are accepted at lower k. Higher-confidence predictions are more likely to be synonyms of the original word and are therefore not accepted.
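The acceptance rule described above (reject fill-ins that are the original word or one of its synonyms) can be sketched as a filter over the MLM's top-k candidates. This is a minimal sketch, not the notebook's code: `accept_candidates` is a hypothetical name, and the synonym set is assumed to come from some external source (a hand-made list or a thesaurus lookup).

```python
def accept_candidates(original, candidates, synonyms, top_k=10):
    """Keep MLM fill-ins that differ from `original` and are not its synonyms.

    candidates: (token, score) pairs sorted by descending score.
    synonyms: words treated as equivalent to `original` (assumed external source).
    """
    banned = {original.lower()} | {s.lower() for s in synonyms}
    accepted = []
    for token, score in candidates[:top_k]:
        word = token.strip().lower()  # RoBERTa tokens often carry a leading space
        if word and word not in banned:
            accepted.append((word, score))
    return accepted


# Toy scores for illustration only.
cands = [(" puppy", 0.31), (" cat", 0.12), (" dog", 0.05), (" horse", 0.04)]
print(accept_candidates("dog", cands, synonyms={"puppy"}))
# → [('cat', 0.12), ('horse', 0.04)]
```

In this toy input the highest-scoring candidate is a synonym and is dropped, mirroring the observation that high-confidence predictions tend to be rejected.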

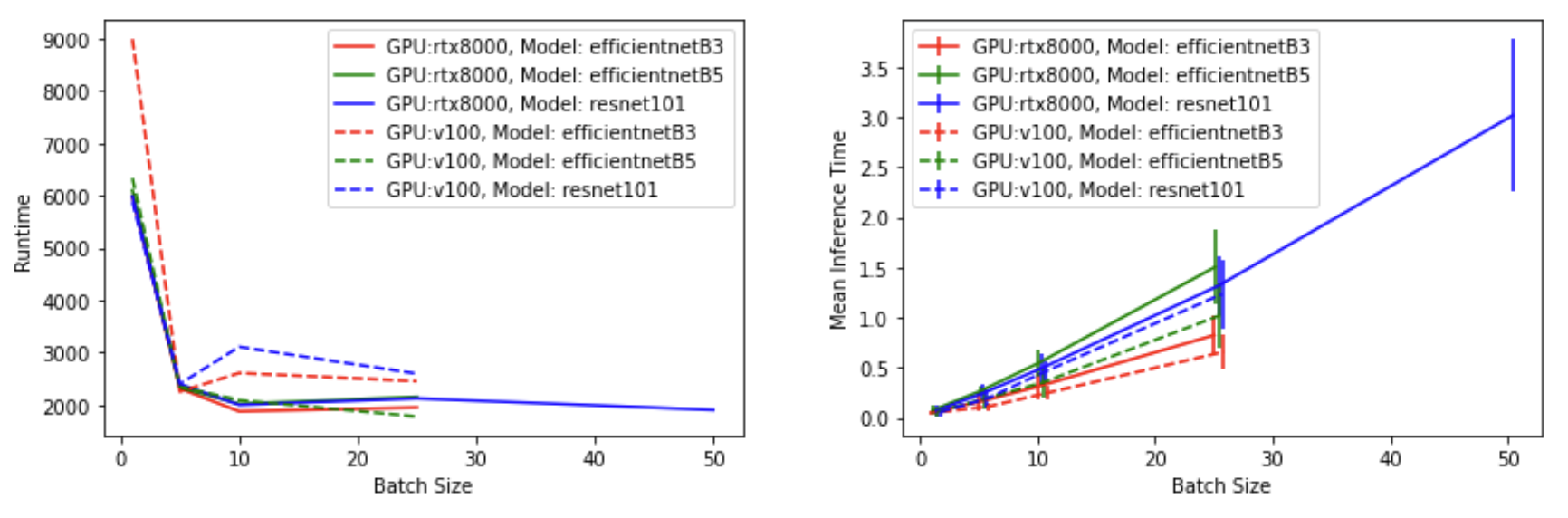

Moving from a batch size of 1 to any larger batch size significantly reduced total runtime; however, increasing the batch size further gave only minimal additional improvement. Inference time also increased approximately linearly with batch size. The V100 and RTX8000 performed comparably: there is a slight difference in mean inference time, but the standard deviations overlap too much to support any strong claims.
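The GPU comparison above rests on whether the mean ± one standard deviation ranges overlap. A minimal sketch of that check, assuming each input is a list of inference-time samples (for example, seconds per batch) for one GPU; `mean_std_overlap` is a hypothetical helper. Overlap does not prove the GPUs are equivalent; a formal test such as Welch's t-test (`scipy.stats.ttest_ind(a, b, equal_var=False)`) would be stricter.

```python
from statistics import mean, stdev


def mean_std_overlap(a, b):
    """True if the intervals mean(a) ± stdev(a) and mean(b) ± stdev(b) overlap.

    Each input needs at least two samples (sample standard deviation).
    """
    ma, sa = mean(a), stdev(a)
    mb, sb = mean(b), stdev(b)
    return (ma - sa) <= (mb + sb) and (mb - sb) <= (ma + sa)
```

Comparing intervals rather than only means keeps run-to-run variance in view, which is the reason the text above declines to rank the two GPUs.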

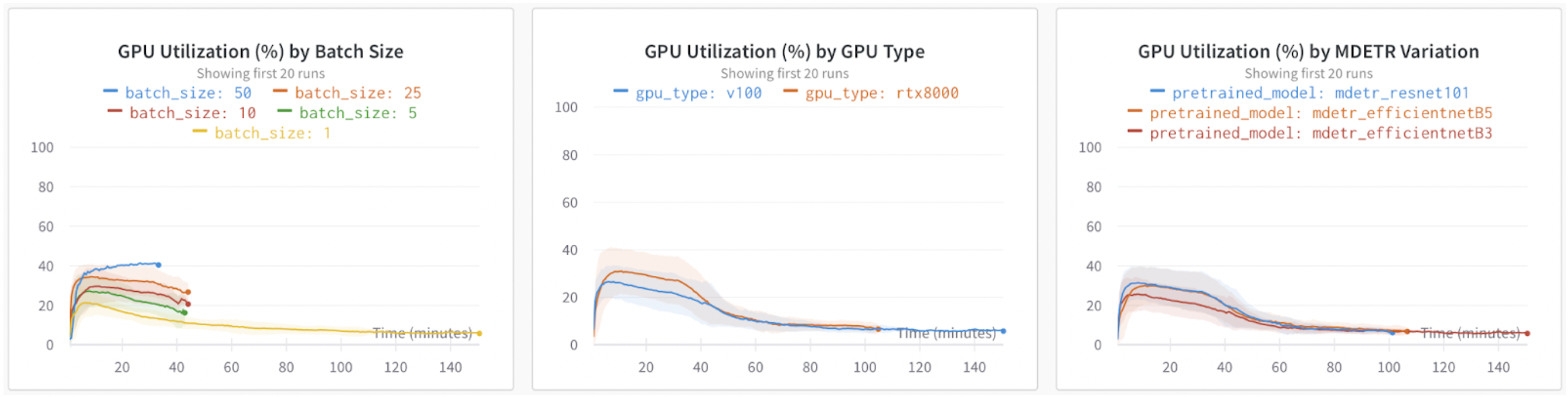

During MDETR inference we recorded GPU utilisation statistics; the figure above shows this data stratified by batch size, GPU type, and MDETR variant. As expected, a higher batch size results in better GPU utilisation. Comparing GPUs, the RTX8000 achieves better utilisation overall. Among MDETR variants, EfficientNetB3 has the worst utilisation, while EfficientNetB5 and ResNet101 are comparable.
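Stratifying utilisation by batch size, GPU type, or MDETR variant amounts to a group-by-and-average. A minimal standard-library sketch, assuming each utilisation sample is a dict; the field names (`batch_size`, `gpu`, `model`, `gpu_util`) are hypothetical and not taken from `eval_runtime.ipynb`.

```python
from collections import defaultdict
from statistics import mean


def mean_by(records, key, value="gpu_util"):
    """Average `value` over records grouped by `key` (e.g. "batch_size", "gpu", "model")."""
    groups = defaultdict(list)
    for rec in records:
        groups[rec[key]].append(rec[value])
    return {k: mean(v) for k, v in groups.items()}
```

Calling it once per stratification key (`"batch_size"`, `"gpu"`, `"model"`) gives one summary per panel of the kind described above.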

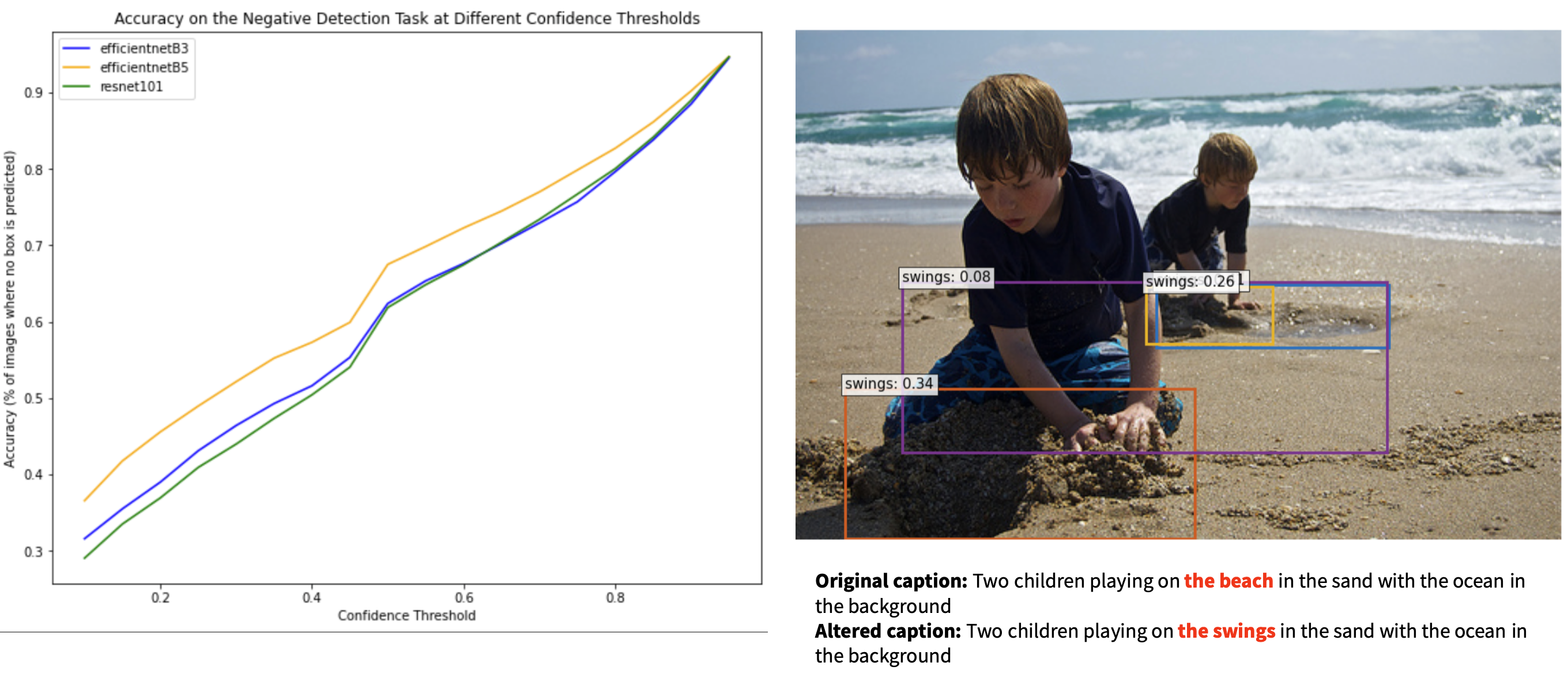

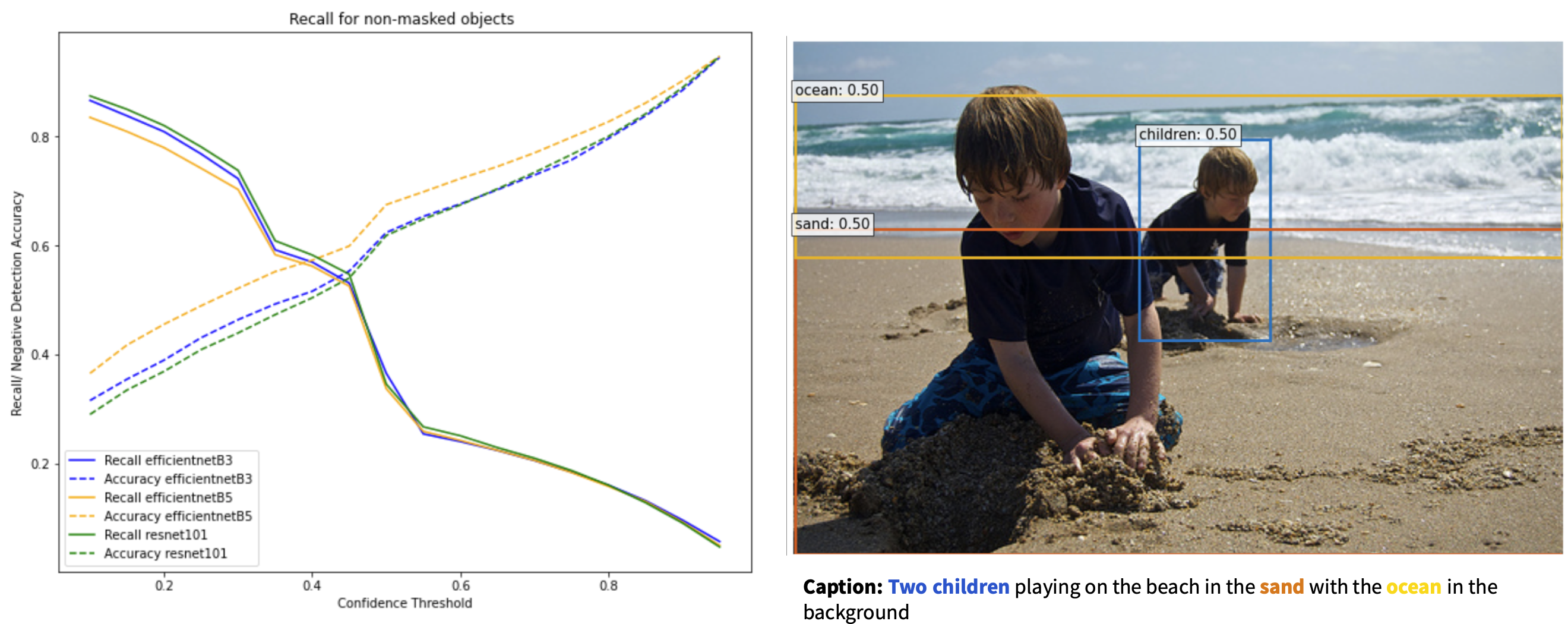

As expected, accuracy on the negative detection task (i.e., MDETR makes no prediction when the object is not in the image) increases linearly with the confidence score threshold for bounding box predictions.
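One way to compute negative-detection accuracy at a given threshold is sketched below. It assumes that, for each negative caption, only the highest box confidence matters, and that a box counts as a prediction when its score is strictly above the threshold; both are assumptions, and `negative_accuracy` is a hypothetical name rather than the repository's code.

```python
def negative_accuracy(max_scores, threshold):
    """Fraction of negative captions for which no predicted box scores above `threshold`.

    max_scores: for each negative caption, the highest confidence among the
    model's predicted boxes (use 0.0 if the model returned no boxes).
    """
    if not max_scores:
        raise ValueError("max_scores is empty")
    correct = sum(1 for s in max_scores if s <= threshold)
    return correct / len(max_scores)
```

Because raising the threshold can only turn more captions into "no prediction", this accuracy is non-decreasing in the threshold, consistent with the trend described above.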

Our primary goal is to test the negative detection task, but we still want the models to identify objects that are in the image. We count a prediction as correct if the IoU between a predicted box and the target box is > 0.5. As we increase the score cutoff for bounding box predictions, recall on objects present in the image drops quickly. A confidence threshold of ~0.45 appears to balance recall and negative-detection accuracy, with both at ~55%.
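The recall criterion above (a target counts as found if some kept prediction has IoU > 0.5 with it) and the search for a balancing threshold can be sketched as follows. This is a minimal sketch under stated assumptions: boxes are `(x1, y1, x2, y2)` tuples, a prediction is kept when its score is strictly above the cutoff, and `recall_at_threshold` and `balanced_threshold` are hypothetical helpers, not the repository's evaluation code.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def recall_at_threshold(samples, threshold, iou_thresh=0.5):
    """Fraction of targets matched by a kept prediction with IoU > iou_thresh.

    samples: list of (target_box, predictions), where predictions is a list
    of (box, score) pairs; boxes with score > threshold are kept (assumption).
    """
    if not samples:
        raise ValueError("samples is empty")
    hits = 0
    for target, preds in samples:
        kept = [box for box, score in preds if score > threshold]
        if any(iou(box, target) > iou_thresh for box in kept):
            hits += 1
    return hits / len(samples)


def balanced_threshold(thresholds, recall_fn, neg_acc_fn):
    """Pick the threshold where recall and negative-detection accuracy are closest."""
    return min(thresholds, key=lambda t: abs(recall_fn(t) - neg_acc_fn(t)))
```

Sweeping `balanced_threshold` over a grid of cutoffs, with `recall_fn` and `neg_acc_fn` evaluated on the same model outputs, is one way to locate a crossover point like the ~0.45 reported above.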