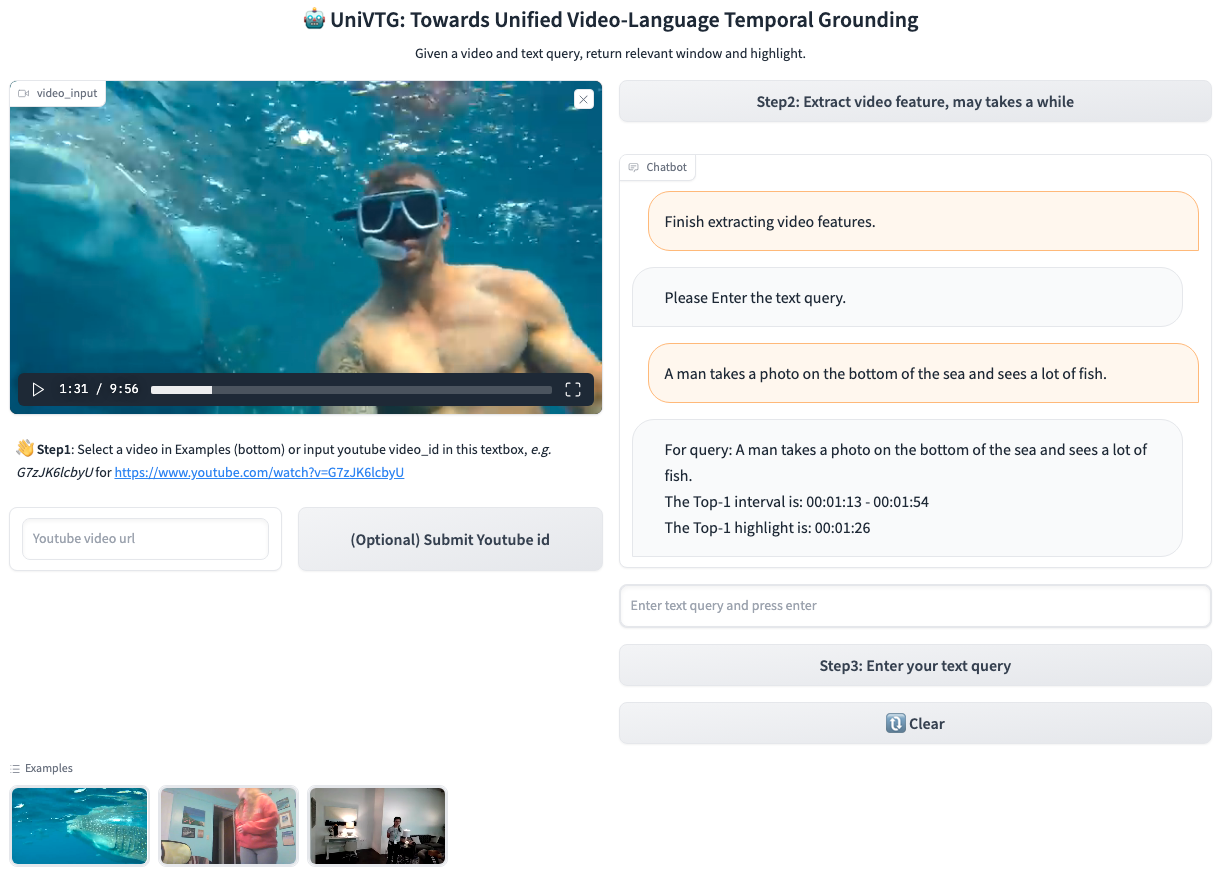

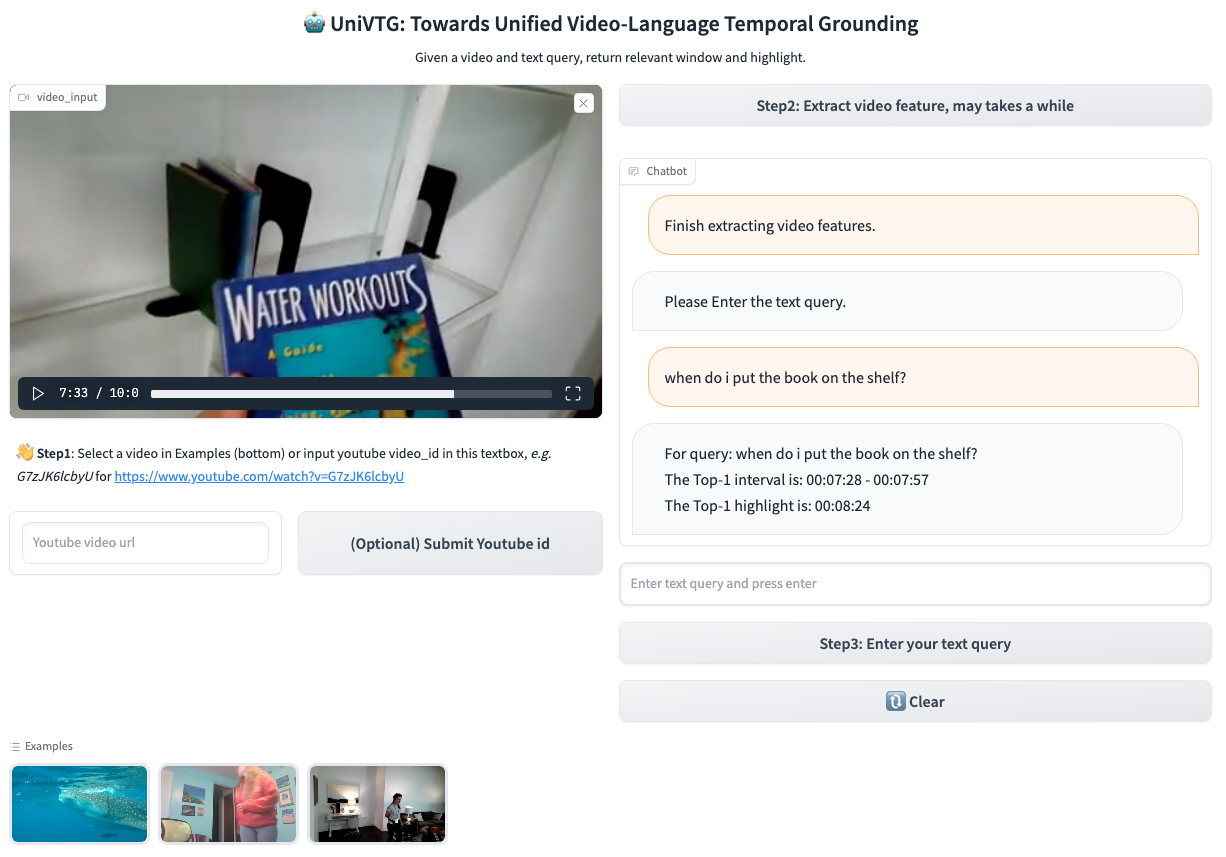

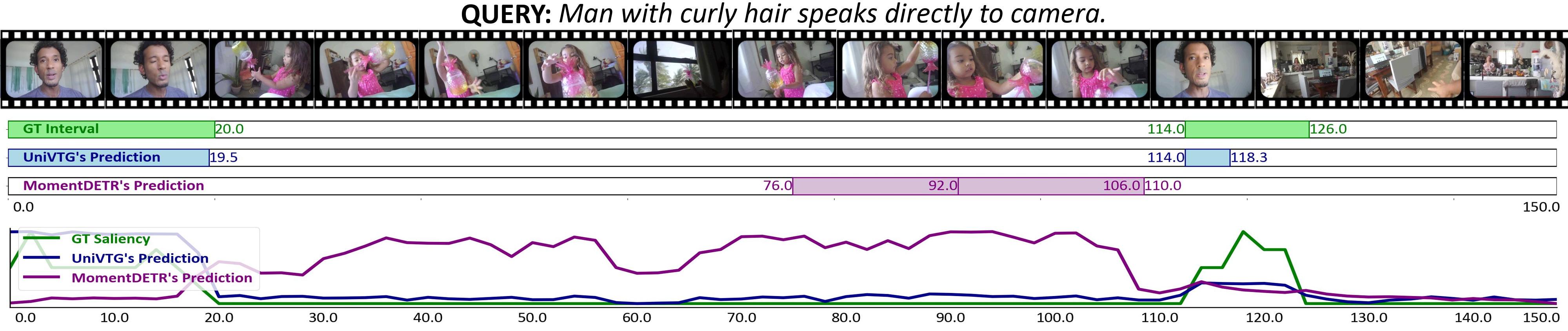

TL;DR: The first video temporal grounding pretraining model, unifying diverse temporal annotations to power moment retrieval (interval), highlight detection (curve), and video summarization (point).
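As a rough illustration of what unifying interval, curve, and point annotations could look like, the sketch below maps each label type onto per-clip targets. The function names, the 2-second clip length, and the target layout are assumptions made for this example; they are not the repository's actual data format.

```python
from typing import List, Optional, Tuple

# Hypothetical per-clip target: (foreground flag, offsets to interval start/end).
ClipTarget = Tuple[int, Optional[Tuple[float, float]]]


def interval_to_clips(start: float, end: float, num_clips: int,
                      clip_len: float = 2.0) -> List[ClipTarget]:
    """Interval label -> per-clip foreground flag and boundary offsets."""
    targets = []
    for i in range(num_clips):
        center = (i + 0.5) * clip_len  # clip midpoint in seconds
        inside = start <= center <= end
        offsets = (center - start, end - center) if inside else None
        targets.append((int(inside), offsets))
    return targets


def curve_to_clips(scores: List[float]) -> List[float]:
    """Curve label -> per-clip saliency, min-max scaled to [0, 1]."""
    lo, hi = min(scores), max(scores)
    if hi == lo:
        return [0.0 for _ in scores]
    return [(s - lo) / (hi - lo) for s in scores]


def point_to_clips(timestamp: float, num_clips: int,
                   clip_len: float = 2.0) -> List[int]:
    """Point label -> one-hot flag for the clip that contains the timestamp."""
    index = min(int(timestamp // clip_len), num_clips - 1)
    return [int(i == index) for i in range(num_clips)]
```

With these toy helpers, all three annotation types end up as lists of the same length (one entry per clip), which is the property that lets a single model consume them.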

- [2023.10.15] Upload the CLIP teacher scripts to create scalable pseudo annotations.

- [2023.8.22] Clean up the code, add training/inference instructions, and upload all downstream checkpoints.

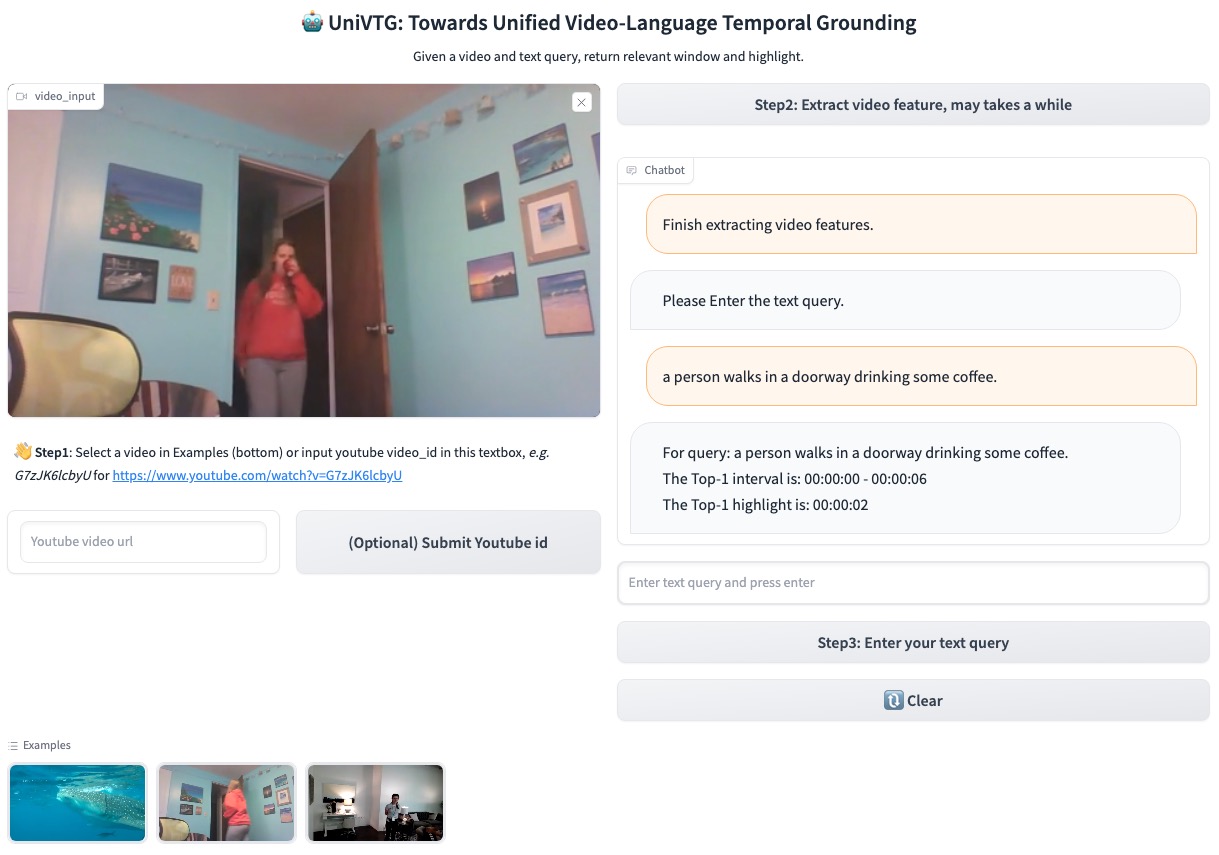

- [2023.8.6] Create the Hugging Face Space demo!

- [2023.7.31] We release the arXiv paper, codes, checkpoints, and gradio demo.

- Connect UniVTG with LLM e.g., ChatGPT.

- Upload all downstream checkpoints.

- Upload all pretraining checkpoints.

To power practical usage, we release the following checkpoints:

These checkpoints run on a single GPU with less than 4 GB of memory and are highly efficient, taking less than 1 second to perform temporal grounding even on a 10-minute video.

| Video Enc. | Text Enc. | Pretraining | Fine-tuning | Checkpoints |
|---|---|---|---|---|
| CLIP-B/16 | CLIP-B/16 | 4M | - | Google Drive |
| CLIP-B/16 | CLIP-B/16 | 4M | QVHL + Charades + NLQ + TACoS + ActivityNet + DiDeMo | Google Drive |

Download the checkpoint and put it in the results/omni directory.

Download the example videos from here and put them under examples/

Run python3 main_gradio.py --resume ./results/omni/model_best.ckpt
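The three demo steps above can be checked before launching. The sketch below is a hypothetical helper (not part of the repository) that assembles the command from the paths given above and reports which inputs are still missing.

```python
import sys
from pathlib import Path
from typing import List, Tuple


def build_demo_command(
    ckpt: str = "./results/omni/model_best.ckpt",
    examples_dir: str = "examples",
) -> Tuple[List[str], List[str]]:
    """Return the demo command and a list of inputs that do not exist yet."""
    # Only the paths named in the steps above are checked.
    missing = [str(p) for p in (Path(ckpt), Path(examples_dir)) if not p.exists()]
    command = [sys.executable, "main_gradio.py", "--resume", ckpt]
    return command, missing
```

If `missing` comes back empty, the returned list can be passed to `subprocess.run(command, check=True)`; otherwise it names what still needs to be downloaded.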

Please follow the instructions in install.md to set up the environment and datasets.

Download the checkpoints listed in model.md to reproduce the benchmark results.

We use Slurm to run jobs; if you do not use Slurm, you may need to slightly modify the code to fit your environment.
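One possible way to adapt a launcher to non-Slurm environments is to read the process layout from Slurm's environment variables when they exist and fall back to single-process defaults otherwise. The sketch below is an assumption about how such an adaptation might look; the function name is hypothetical, and the fallback variables (`RANK`, `WORLD_SIZE`, `LOCAL_RANK`) follow the convention used by common PyTorch launchers rather than anything confirmed in this repository.

```python
import os
from typing import Dict, Mapping, Optional, Union


def resolve_dist_settings(
    env: Optional[Mapping[str, str]] = None,
) -> Dict[str, Union[str, int]]:
    """Pick rank/world size from Slurm if present, else from local defaults."""
    env = os.environ if env is None else env
    if "SLURM_PROCID" in env:
        # Variables that Slurm sets for each task it launches.
        return {
            "launcher": "slurm",
            "rank": int(env["SLURM_PROCID"]),
            "world_size": int(env.get("SLURM_NTASKS", "1")),
            "local_rank": int(env.get("SLURM_LOCALID", "0")),
        }
    # Assumed fallback: a single local process unless a launcher says otherwise.
    return {
        "launcher": "local",
        "rank": int(env.get("RANK", "0")),
        "world_size": int(env.get("WORLD_SIZE", "1")),
        "local_rank": int(env.get("LOCAL_RANK", "0")),
    }
```

Passing an explicit `env` mapping makes the behavior easy to check on a machine without Slurm.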

Large-scale pretraining: bash scripts/pretrain.sh

Multi-datasets co-training: bash scripts/cotrain.sh

Pass --resume to initialize the model from pretrained weights. Refer to our model zoo for detailed parameter settings.

Training: bash scripts/qvhl_pretrain.sh

Pass --eval_init and --n_epoch=0 to evaluate the checkpoint selected by --resume.
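To make the interaction of these flags concrete, here is a minimal sketch that assumes an argparse-style entry point. Only the flag names come from the instructions above; the parser, the default epoch count, and the `plan_run` helper are hypothetical and may differ from the actual training script.

```python
import argparse
from typing import List


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(description="Toy model of the training flags")
    parser.add_argument("--resume", type=str, default=None,
                        help="checkpoint used to initialize the model")
    parser.add_argument("--eval_init", action="store_true",
                        help="evaluate once before any training")
    # The default of 200 is a placeholder chosen for this sketch.
    parser.add_argument("--n_epoch", type=int, default=200,
                        help="number of training epochs")
    return parser


def plan_run(argv: List[str]) -> List[str]:
    """Describe what a run with these flags would do, in order."""
    args = build_parser().parse_args(argv)
    steps = []
    if args.resume:
        steps.append(f"load weights from {args.resume}")
    if args.eval_init:
        steps.append("evaluate before training")
    if args.n_epoch > 0:
        steps.append(f"train for {args.n_epoch} epochs")
    return steps
```

Under these assumptions, `plan_run(["--resume", "model_best.ckpt", "--eval_init", "--n_epoch=0"])` yields a load step and an evaluation step with no training, which is the evaluation-only behavior described above.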

Inference: bash scripts/qvhl_inference.sh

- Download the Open Images V6 class list from https://storage.googleapis.com/openimages/v6/oidv6-class-descriptions.csv.

- Convert it to JSON with python3 teacher/csv2json.py, then extract the textual class features with python3 teacher/label2feature.py.

- (Before this, you should have extracted the features of the video.) Run the script to generate pseudo labels: python3 teacher/clip2labels.py
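The steps above can be pictured with a small, dependency-free sketch. It does not reproduce the scripts in teacher/: the JSON layout, the function names, and the cosine-similarity scoring are assumptions for illustration, and the toy vectors stand in for real CLIP features.

```python
import csv
import io
import json
import math
from typing import Dict, List, Sequence, Tuple


def class_csv_to_json(csv_text: str) -> str:
    """Convert 'id,name' rows into a JSON object mapping id -> name."""
    classes = {}
    for row in csv.reader(io.StringIO(csv_text)):
        # Skip malformed rows and a header row, if one is present.
        if len(row) < 2 or row[0] == "LabelName":
            continue
        classes[row[0]] = row[1]
    return json.dumps(classes, indent=2)


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def pseudo_labels(
    clip_features: List[Sequence[float]],
    class_features: Dict[str, Sequence[float]],
    top_k: int = 1,
) -> List[List[Tuple[float, str]]]:
    """For each clip, keep the top_k class names ranked by cosine similarity."""
    labels = []
    for feat in clip_features:
        scored = sorted(
            ((cosine(feat, vec), name) for name, vec in class_features.items()),
            reverse=True,
        )
        labels.append(scored[:top_k])
    return labels
```

In this toy version, each clip receives its best-matching class name together with a similarity score, which is one plausible shape for a pseudo annotation.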

If you want to draw visualizations like those in our paper, you can run python3 plot/qvhl.py to generate the corresponding figures by providing the prediction JSON files (which you can download from the Model Zoo).

If you find our work helpful, please cite our paper.

@misc{lin2023univtg,

title={UniVTG: Towards Unified Video-Language Temporal Grounding},

author={Kevin Qinghong Lin and Pengchuan Zhang and Joya Chen and Shraman Pramanick and Difei Gao and Alex Jinpeng Wang and Rui Yan and Mike Zheng Shou},

year={2023},

eprint={2307.16715},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

This repo is maintained by Kevin. Questions and discussions are welcome via [email protected] or by opening an issue.

This codebase is based on moment_detr, HERO_Video_Feature_Extractor, and UMT.

We thank the authors for their open-source contributions.