-

Today I studied the concept of GANs (Generative Adversarial Networks).

-

Implemented a DCGAN (Deep Convolutional Generative Adversarial Network) on the MNIST dataset to generate handwritten digits.

-

I would love to explore the applications of GANs in the upcoming days.

-

Link to the notebook. Click here.

Deep Convolutional Generative Adversarial Network (Tensorflow doc)

What is Generative Adversarial Networks GAN?

-

Studied various regularization techniques in order to handle overfitting.

-

Completed Week 1 assignment of the Andrew Ng Deep Learning course.

-

Explored various optimization algorithms such as gradient descent with momentum, RMSprop and Adam.

-

Completed Week 2 assignment of the same course.

-

Course link : Click here
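
Note to self: a minimal sketch of the three update rules on a toy 1-D loss, f(w) = (w - 3)^2. This is not the course's assignment code. The toy loss, the function names and the hyperparameter defaults are my own assumptions for illustration.

```python
import math


def grad(w):
    # Gradient of the toy loss f(w) = (w - 3)^2 (hypothetical example loss).
    return 2.0 * (w - 3.0)


def momentum(w, steps=500, lr=0.1, beta=0.9):
    # Gradient descent with momentum: step along a moving average of gradients.
    v = 0.0
    for _ in range(steps):
        v = beta * v + (1 - beta) * grad(w)
        w -= lr * v
    return w


def rmsprop(w, steps=2000, lr=0.01, beta=0.9, eps=1e-8):
    # RMSprop: scale each step by a moving average of squared gradients.
    s = 0.0
    for _ in range(steps):
        g = grad(w)
        s = beta * s + (1 - beta) * g * g
        w -= lr * g / (math.sqrt(s) + eps)
    return w


def adam(w, steps=2000, lr=0.01, beta1=0.9, beta2=0.999, eps=1e-8):
    # Adam: momentum plus RMSprop-style scaling, with bias correction.
    v = 0.0
    s = 0.0
    for t in range(1, steps + 1):
        g = grad(w)
        v = beta1 * v + (1 - beta1) * g
        s = beta2 * s + (1 - beta2) * g * g
        v_hat = v / (1 - beta1 ** t)
        s_hat = s / (1 - beta2 ** t)
        w -= lr * v_hat / (math.sqrt(s_hat) + eps)
    return w
```

Starting from `w = 0.0`, all three should end up close to the minimum at 3. With a constant learning rate, RMSprop tends to hover around the minimum rather than settle exactly on it.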

-

Implemented a DCGAN (Deep Convolutional Generative Adversarial Network) on the face dataset to generate human faces.

-

The generated images were not up to the mark and the model would need some modification.

-

The dataset is available Here

-

Link to the notebook is available here

-

Learnt about the strategies used in the industry for structuring machine learning projects.

-

Read about terms like avoidable bias, variance and Bayes error.

-

Read about Transfer Learning, Multi-task learning and end-to-end deep learning.

-

Completed course 3 of the Deep Learning series by Andrew Ng.
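
Note to self: a small sketch of how I understand the bias/variance diagnosis. Avoidable bias is the gap between training error and Bayes error, and variance is the gap between dev error and training error. The function name and the example error rates are made up for illustration.

```python
def diagnose(bayes_error, train_error, dev_error):
    # Avoidable bias: how far training error is from the best achievable error.
    avoidable_bias = train_error - bayes_error
    # Variance: how much worse the model does on unseen (dev) data.
    variance = dev_error - train_error
    # Work on whichever gap is larger first.
    focus = "bias" if avoidable_bias > variance else "variance"
    return avoidable_bias, variance, focus


# Hypothetical error rates: 1% Bayes, 8% train, 10% dev.
bias, var, focus = diagnose(0.01, 0.08, 0.10)
print(focus)
```

In this made-up case the training error is far from the Bayes error, so reducing bias (a bigger model, longer training) looks more promising than reducing variance.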

-

Today I studied the various clustering algorithms used in ML.

-

The list includes : K-means, Mean-shift, Density-Based Spatial Clustering of Applications with Noise (DBSCAN), Gaussian Mixture Models (GMM) and Agglomerative Hierarchical Clustering.

-

Analysed the pros and cons of each algorithm and the applications of each of them.

-

Looking forward to implementing them in the coming days.

-

Today I started course 4 of the Deep Learning Series by deeplearning.ai

-

Week 1 turned out to be a quick recap of Convolutional Neural Networks (CNNs).

-

The topics covered today included padding, strides, convolutions and MaxPooling.

-

The course is available Here.
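
Note to self: padding and stride decide the output size of a convolution or pooling layer. A tiny sketch of the usual formula, floor((n + 2p - f) / s) + 1, with a helper name of my own:

```python
def conv_output_size(n, f, p=0, s=1):
    # n: input height/width, f: filter size, p: padding, s: stride.
    return (n + 2 * p - f) // s + 1


print(conv_output_size(6, 3))        # "valid" convolution, no padding
print(conv_output_size(6, 3, p=1))   # "same" convolution for a 3x3 filter
print(conv_output_size(7, 3, s=2))   # strided convolution
print(conv_output_size(4, 2, s=2))   # 2x2 max pooling with stride 2
```

The same formula applies to max pooling, since pooling also slides a window of size f with stride s over the input.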

-

Today I worked on converting black-and-white images to color images.

-

Used OpenCV and Deep Learning to implement the program.

-

The model used was pre-trained with the Caffe deep learning framework on the ImageNet dataset.

-

You can refer to the paper here and to the documentation here

-

The results were plausible.

-

Today I dived deeper into the background of CNN.

-

I wrote the code for it to understand what happens behind the scenes.

-

It's always good to know about the theory and code of the pre-defined functions used directly by us.

-

I implemented the model on the 'signs' dataset.

-

Today I started learning about the basics of the PyTorch deep learning framework.

-

Looked up the differences between TensorFlow and PyTorch.

-

Used the framework to build a model on the University of California car dataset.

-

Today I started Week 2 of the fourth course in the Deep Learning specialization.

-

Various architectures like ResNet, AlexNet and Inception were discussed in detail.

-

Read about the effectiveness of 1x1 convolution.

-

Would soon read the research papers on these architectures.
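
Note to self: a rough sketch of why a 1x1 convolution helps, counting multiplications for a 5x5 "same" convolution with and without a 1x1 bottleneck. The layer sizes (28x28x192 input, 32 output channels, 16 bottleneck channels) are illustrative values and the helper name is my own.

```python
def conv_mults(h, w, c_in, c_out, f):
    # Multiplications for a "same" convolution producing an h x w x c_out output
    # from c_in input channels with f x f filters.
    return h * w * c_out * f * f * c_in


# Direct 5x5 convolution: 28x28x192 -> 28x28x32
direct = conv_mults(28, 28, 192, 32, 5)

# 1x1 bottleneck to 16 channels, then the 5x5 convolution
bottleneck = conv_mults(28, 28, 192, 16, 1) + conv_mults(28, 28, 16, 32, 5)

print(direct, bottleneck)
```

With these sizes the bottleneck version needs roughly a tenth of the multiplications, because the 1x1 layer shrinks the channel dimension before the expensive 5x5 layer.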

-

Today I trained a model on the CIFAR-10 dataset using PyTorch.

-

The accuracy was not up to the mark since my primary focus was on understanding the framework.

-

Would tweak the hyperparameters and increase the number of epochs to achieve higher accuracy.

-

Would use PyTorch on different datasets as well in the coming days.

-

You can find the notebook here.

-

Today I studied the required mathematics for machine learning.

-

The topics included Linear Algebra, Multivariate Calculus, Probability and Calculus.

-

The YouTube video for it is available here.

-

Today I worked on the analysis of Mall Customer Segmentation data on Kaggle.

-

The analysis involved the comparison between parameters like Customer age, salary and gender.

-

The K-means algorithm was used to group customers into clusters on the basis of their shopping traits.

-

The notebook is available here.
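
Note to self: a bare-bones K-means sketch in plain Python, to remember the assign/update loop. This is not the code from the notebook. The toy (income, spending score) points and all function names are made up for illustration.

```python
import random


def squared_distance(p, q):
    return sum((a - b) ** 2 for a, b in zip(p, q))


def nearest(p, centroids):
    # Index of the centroid closest to point p.
    return min(range(len(centroids)), key=lambda i: squared_distance(p, centroids[i]))


def kmeans(points, k, iters=100, seed=0):
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k distinct data points
    for _ in range(iters):
        # Assignment step: put every point in the cluster of its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            clusters[nearest(p, centroids)].append(p)
        # Update step: move each centroid to the mean of its cluster
        # (an empty cluster keeps its old centroid).
        new_centroids = [
            tuple(sum(axis) / len(members) for axis in zip(*members)) if members else centroids[i]
            for i, members in enumerate(clusters)
        ]
        if new_centroids == centroids:
            break  # converged
        centroids = new_centroids
    labels = [nearest(p, centroids) for p in points]
    return centroids, labels


# Hypothetical (annual income, spending score) pairs.
customers = [(15, 80), (16, 85), (17, 78), (90, 15), (88, 20), (92, 10)]
centroids, labels = kmeans(customers, k=2)
print(labels)
```

On this toy data the low-income/high-spending customers and the high-income/low-spending customers should land in separate clusters. Real data would also need feature scaling and a way to choose k, such as the elbow method.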

-

Today I read the theory of time series analysis and its applications.

-

I was fascinated to learn that time series analysis takes a different approach from conventional ML algorithms.

-

The applications of time series really intrigued me, and I would like to implement it in the coming days.

-

Relevant links.

7 Ways Time Series Forecasting Differs from Machine Learning

-

Completed assignment 1 of the SHALA2020 course.

-

The assignment was an introduction to important libraries like NumPy, Pandas and Matplotlib.

-

The notebook is available here

-

Revised the statistics concepts assigned under the pre-work category of the SHALA course.

-

It consisted of topics like measures of central tendency, histograms and statistics fundamentals.

-

Eventually took a quiz on the related topics.

-

Relevant links.

-

Completed assignment 2 of the IITB Shala course.

-

Plotted and analysed data using histograms, boxplots and pie charts.

-

The notebook can be accessed here.

-

Predicted airline traffic for the next 3 years using time series analysis with fbProphet.

-

Used the Markov Chain Monte Carlo (MCMC) method to generate forecasts.

-

The model shows both the seasonality and trend in the data.

-

You can check out the notebook here.

-

Predicted milk production for the next 3 years using time series analysis with fbProphet.

-

Used the Markov Chain Monte Carlo (MCMC) method to generate forecasts.

-

The model shows both the seasonality and trend in the data.

-

You can check out the notebook here.

-

Read the theory of different types of graphs and their uses.

-

Studied the differences between the types of graphs and charts.

-

Learnt several visualization practices.

-

Relevant links.

-

Completed assignment 2 of the IITB Shala course.

-

The assignment consisted of different kinds of charts and graphs that can be made using libraries like Pandas, Matplotlib and Seaborn.

-

Explored various other visualization techniques as well.

-

You can access the assignment here.

-

Read about a lot of intermediate statistics concepts.

-

Topics included Maximum likelihood estimation, sufficient statistics, null hypothesis testing, t-test and Wilcoxon rank test.

-

Would implement these concepts in the future.

Relevant Links:

-

Completed assignment 4 of the SHALA IITB course.

-

With this, module 1 (Data Science) of the course has been completed.

-

Computed the likelihood and log likelihood from samples that were drawn from an exponential distribution.

-

Performed a two-sample t-test on samples from unknown distributions and found the critical value.

-

Notebook is available here.
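
Note to self: a small sketch of the likelihood and log-likelihood for an exponential distribution with rate `lam`. This is not the assignment code. The sample values and function names are made up for illustration.

```python
import math


def log_likelihood(samples, lam):
    # For the density lam * exp(-lam * x): log L = n * log(lam) - lam * sum(x).
    return len(samples) * math.log(lam) - lam * sum(samples)


def likelihood(samples, lam):
    # Product of densities; underflows quickly for large n, hence the log version.
    return math.exp(log_likelihood(samples, lam))


def mle_rate(samples):
    # Setting d(log L)/d(lam) = 0 gives lam_hat = n / sum(x) = 1 / sample mean.
    return len(samples) / sum(samples)


samples = [0.5, 1.0, 1.5]  # hypothetical draws
lam_hat = mle_rate(samples)
print(lam_hat, log_likelihood(samples, lam_hat))
```

The log-likelihood is easier to work with because the product of densities becomes a sum, and its maximum sits at the same rate as the maximum of the likelihood.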

-

Started Week 3 of the CNN course by deeplearning.ai

-

Read about object localization, object and landmark detection and non-max suppression.

-

Studied the YOLO algorithm and implemented it in the week's assignment.

-

Was able to complete the assignment and detect cars.
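
Note to self: a plain-Python sketch of intersection over union (IoU) and greedy non-max suppression, the post-processing step used after YOLO-style detection. This is not the assignment's implementation. The box format (x1, y1, x2, y2), the threshold and the example boxes are my own assumptions.

```python
def iou(a, b):
    # Boxes are (x1, y1, x2, y2) with x1 < x2 and y1 < y2.
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, iou_threshold=0.5):
    # Visit boxes from highest to lowest score; keep a box only if it does not
    # overlap too much with any box already kept.
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


# Hypothetical detections: the first two boxes cover the same car.
boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(non_max_suppression(boxes, scores))
```

The second box overlaps the first with an IoU of about 0.68, so it is dropped, while the third box is far away and survives.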

-

Started Week 4 of the CNN deeplearning.ai course

-

The topic for the week was art generation with neural style transfer.

-

Understood the two types of cost functions, i.e. the content cost function and the style cost function.

-

Completed the assignment for art generation.
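
Note to self: a plain-Python sketch of the two costs as I understood them. Activations are represented here as a list of channels, each a flat list of n_H * n_W values. That layout, the function names and the tiny example are my own assumptions, and the assignment itself works on tensors.

```python
def content_cost(a_c, a_g):
    # Squared difference between content and generated activations,
    # scaled by 1 / (4 * n_H * n_W * n_C).
    n_c, n_hw = len(a_c), len(a_c[0])
    sq = sum((x - y) ** 2 for cc, cg in zip(a_c, a_g) for x, y in zip(cc, cg))
    return sq / (4 * n_hw * n_c)


def gram(a):
    # Gram (style) matrix: dot product between every pair of channels.
    return [[sum(x * y for x, y in zip(ci, cj)) for cj in a] for ci in a]


def layer_style_cost(a_s, a_g):
    # Squared difference between Gram matrices of style and generated
    # activations, scaled by 1 / (4 * n_C^2 * (n_H * n_W)^2).
    n_c, n_hw = len(a_s), len(a_s[0])
    gs, gg = gram(a_s), gram(a_g)
    sq = sum((gs[i][j] - gg[i][j]) ** 2 for i in range(n_c) for j in range(n_c))
    return sq / (4 * n_c ** 2 * n_hw ** 2)


a = [[1.0, 2.0], [3.0, 4.0]]      # 2 channels, 2 positions (made-up values)
zeros = [[0.0, 0.0], [0.0, 0.0]]
print(content_cost(a, zeros), layer_style_cost(a, zeros))
```

The content cost compares activations position by position, while the style cost only compares channel correlations, so it ignores where in the image a texture appears.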

-

Read about one-shot learning and its application in face recognition.

-

Understood the concepts of Siamese networks and triplet loss.

-

Implemented face recognition and verification in the assignment.

-

With this, I completed Course 4 of the deeplearning.ai specialization.
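
Note to self: a minimal sketch of triplet loss on plain Python lists, max(d(A, P) - d(A, N) + alpha, 0) with squared Euclidean distance. The margin value, the verification threshold, the toy embeddings and the function names are my own assumptions.

```python
def squared_distance(u, v):
    return sum((a - b) ** 2 for a, b in zip(u, v))


def triplet_loss(anchor, positive, negative, alpha=0.2):
    # Push the anchor-positive distance below the anchor-negative distance
    # by at least the margin alpha.
    pos = squared_distance(anchor, positive)
    neg = squared_distance(anchor, negative)
    return max(pos - neg + alpha, 0.0)


def verify(embedding, reference, threshold=0.7):
    # Face verification: same person if the embeddings are close enough.
    return squared_distance(embedding, reference) < threshold


anchor, positive = [0.0, 0.0], [0.0, 1.0]   # made-up 2-D "embeddings"
print(triplet_loss(anchor, positive, [3.0, 4.0]))  # easy negative, far away
print(triplet_loss(anchor, positive, [0.0, 1.0]))  # hard negative, as close as the positive
```

An easy negative contributes zero loss, which is why training needs hard triplets where the negative is about as close to the anchor as the positive.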

-

Read about Decision Trees and Random Forests, for both regression and classification.

-

Took a quiz on the topic and implemented the concepts in the SHALA ML assignment 1.

-

Predicted the attrition of a company's employees using a classifier.

-

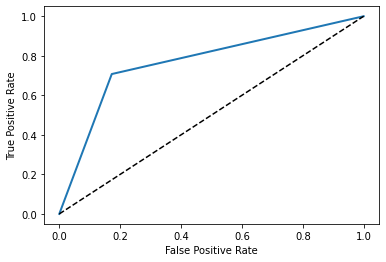

Made a classifier using Logistic Regression to predict the survival of a passenger in the Titanic dataset.

-

Reached 79.3% accuracy, plotted the ROC curve and calculated the roc_auc_score.

-

Found out that Jack Dawson couldn't survive whereas Rose made it. Sigh!

-

The notebook can be found here.
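
Note to self: what the ROC AUC number means, as a plain-Python sketch. It is the probability that a randomly chosen positive gets a higher score than a randomly chosen negative, with ties counting as half. This is not the notebook code. The labels and scores below are made up, and in practice scikit-learn's `roc_auc_score` does this for me.

```python
def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def roc_auc(y_true, y_score):
    # Compare every (positive, negative) pair of scores.
    pos = [s for y, s in zip(y_true, y_score) if y == 1]
    neg = [s for y, s in zip(y_true, y_score) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative label")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


y_true = [0, 0, 1, 1]               # hypothetical survival labels
y_score = [0.1, 0.4, 0.35, 0.8]     # hypothetical predicted probabilities
y_pred = [1 if s >= 0.5 else 0 for s in y_score]
print(accuracy(y_true, y_pred), roc_auc(y_true, y_score))
```

Accuracy depends on the 0.5 cut-off, while AUC only depends on how the scores rank positives against negatives, so the two can tell different stories about the same classifier.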

-

Made several classifiers to predict passenger survival on the Titanic.

-

Applied Logistic Regression, Decision Trees, Random Forest, Gradient Boosting and XGBoost algorithms.

-

Corrected the anomalies in the logistic regression analysis performed the previous day.