A curated list of resources dedicated to reinforcement learning applied to cyber security. The list includes only work that uses reinforcement learning; general machine learning methods applied to cyber security are not included.

For other related curated lists, see:

Environments

|

CSLE: The Cyber Security Learning Environment

|

|

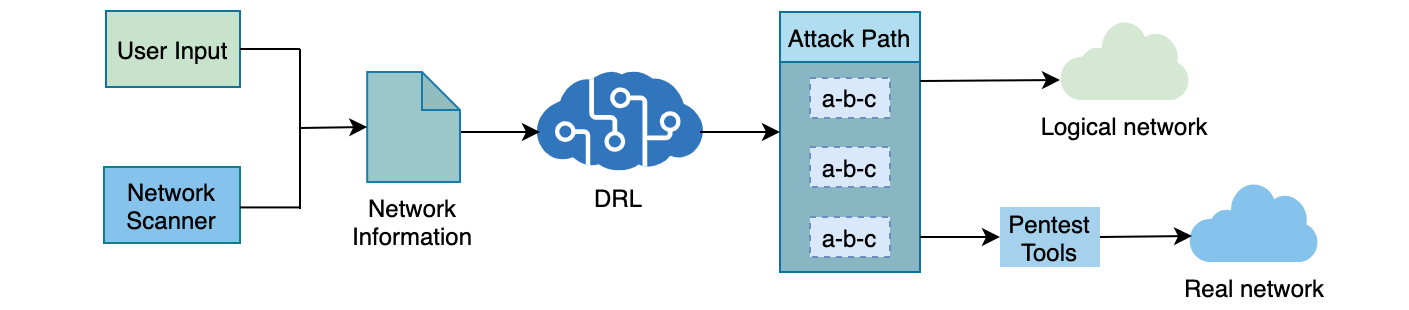

AutoPentest-DRL: Automated Penetration Testing Using Deep Reinforcement Learning

|

|

|

NASimEmu

|

|

gym-idsgame

|

|

CyberBattleSim

|

|

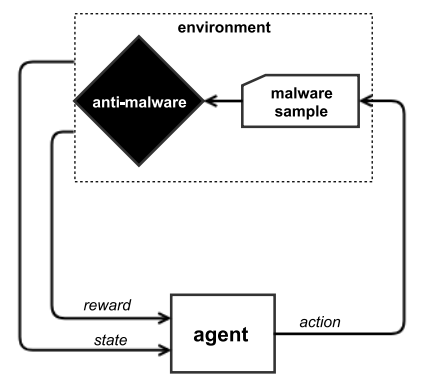

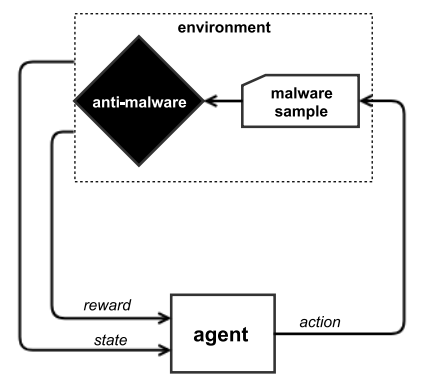

gym-malware

|

|

malware-rl

|

|

gym-flipit

|

|

gym-threat-defense

|

|

gym-nasim |

|

gym-optimal-intrusion-response

|

|

sql_env |

|

cage-challenge-1

|

cage-challenge-2

|

cage-challenge-3

|

|

ATMoS |

|

MAB-malware |

|

Autonomous Security Analysis and Penetration Testing framework (ASAP) |

|

Yawning Titan

|

|

Cyborg

|

|

FARLAND (github repository missing)

|

|

SecureAI

|

|

CYST

|

|

CLAP: Curiosity-Driven Reinforcment Learning Automatic Penetration Testing Agent

|

|

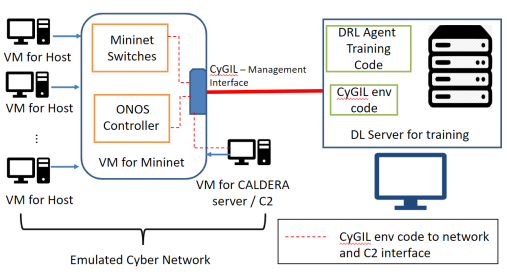

CyGIL: A Cyber Gym for Training Autonomous Agents over Emulated Network Systems

|

|

BRAWL

|

|

DeterLab: Cyber-Defense Technology Experimental Research Laboratory

|

|

|

Mininet creates a realistic virtual network, running real kernel, switch and application code, on a single machine (VM, cloud or native), in seconds, with a single command. |

|

VINE: A Cyber Emulation Environment for MTD Experimentation |

|

CRATE Exercise Control – A cyber defense exercise management and support tool |

|

Galaxy: A Network Emulation Framework for Cybersecurity tool |

Papers

- (2023) Automated Cyber Defence: A Review

- (2022) The Confluence of Networks, Games, and Learning: A Game-Theoretic Framework for Multiagent Decision Making Over Networks

- (2022) Cyber-security and reinforcement learning — A brief survey

- (2022) Blockchain and Federated Deep Reinforcement Learning Based Secure Cloud-Edge-End Collaboration in Power IoT

- (2022) Deep Reinforcement Learning for Cybersecurity Threat Detection and Protection: A Review

- (2022) Research and Challenges of Reinforcement Learning in Cyber Defense Decision-Making for Intranet Security

- (2021) Reinforcement Learning for Feedback-Enabled Cyber Resilience

- (2021) Prospective Artificial Intelligence Approaches for Active Cyber Defence

- (2019) Deep Reinforcement Learning for Cyber Security

- (2023) The Cyber Security Learning Environment (CSLE) v.0.2.0 Demo

- (2022) A System for Interactive Examination of Learned Security Policies (VIDEO)

- (2023) Intelligent Security Aware Routing: Using Model-Free Reinforcement Learning

- (2023) When Moving Target Defense Meets Attack Prediction in Digital Twins: A Convolutional and Hierarchical Reinforcement Learning Approach

- (2023) Out of the Cage: How Stochastic Parrots Win in Cyber Security Environments

- (2023) Deep Reinforcement Learning for Intelligent Penetration Testing Path Design

- (2023) Social Engineering Attack-Defense Strategies Based on Reinforcement Learning

- (2023) Real-Time Defensive Strategy Selection via Deep Reinforcement Learning

- (2023) CyberForce: A Federated Reinforcement Learning Framework for Malware Mitigation

- (2023) Simulating all archetypes of SQL injection vulnerability exploitation using reinforcement learning agents

- (2023) Research on active defense decision-making method for cloud boundary networks based on reinforcement learning of intelligent agent

- (2023) Adversarial Deep Reinforcement Learning for Cyber Security in Software Defined Networks

- (2023) Using POMDP-based Approach to Address Uncertainty-Aware Adaptation for Self-Protecting Software

- (2023) EIReLaND: Evaluating and Interpreting Reinforcement-Learning-based Network Defenses

- (2023) SDN/NFV-based framework for autonomous defense against slow-rate DDoS attacks by using reinforcement learning

- (2023) Whole Campaign Emulation with Reinforcement Learning for Cyber Test

- (2023) Neuroevolution for Autonomous Cyber Defense

- (2023) Reinforcement Learning-Based Attack Graph Analysis for Wastewater Treatment Plant

- (2023) QL vs. SARSA: Performance Evaluation for Intrusion Prevention Systems in Software-Defined IoT Networks

- (2023) TSGS: Two-stage security game solution based on deep reinforcement learning for Internet of Things

- (2023) Security-aware Resource Allocation Scheme Based on DRL in Cloud-Edge-Terminal Cooperative Vehicular Network

- (2023) Network Intrusion Detection System using Reinforcement learning

- (2023) Unified Emulation-Simulation Training Environment for Autonomous Cyber Agents

- (2023) Learning Near-Optimal Intrusion Responses Against Dynamic Attackers

- (2023) A Curriculum Framework for Autonomous Network Defense using Multi-agent Reinforcement Learning

- (2023) Enhancing Situation Awareness in Beyond Visual Range Air Combat with Reinforcement Learning-based Decision Support

- (2023) A Reinforcement Learning Approach to Undetectable Attacks against Automatic Generation Control

- (2023) Digital Twins for Security Automation

- (2023) Inroads into Autonomous Network Defence using Explained Reinforcement Learning

- (2023) Automated Adversary-in-the-Loop Cyber-Physical Defense Planning

- (2023) RLAuth: A Risk-based Authentication System using Reinforcement Learning

- (2023) SQIRL: Grey-Box Detection of SQL Injection Vulnerabilities Using Reinforcement Learning

- (2023) Dual Reinforcement Learning based Attack Path Prediction for 5G Industrial Cyber-Physical Systems

- (2023) Detecting State of Charge False Reporting Attacks via Reinforcement Learning Approach

- (2023) Learning to Defend by Attacking (and Vice-Versa): Transfer of Learning in Cybersecurity Games

- (2023) NASimEmu: Network Attack Simulator & Emulator for Training Agents Generalizing to Novel Scenarios

- (2023) A Collaborative Stealthy DDoS Detection Method based on Reinforcement Learning at the Edge of the Internet of Things

- (2023) An Intelligent SDWN Routing Algorithm Based on Network Situational Awareness and Deep Reinforcement Learning

- (2023) Trojan Playground: A Reinforcement Learning Framework for Hardware Trojan Insertion and Detection

- (2023) Decentralized Anomaly Detection in Cooperative Multi-Agent Reinforcement Learning

- (2023) Evolved Prevention Strategies for 6G Networks through Stochastic Games and Reinforcement Learning

- (2023) Cyber Attack Detection Using Bellman Optimality Equation in Reinforcement Learning

- (2023) Greybox Penetration Testing on Cloud Access Control with IAM Modeling and Deep Reinforcement Learning

- (2023) A Multiagent CyberBattleSim for RL Cyber Operation Agents

- (2023) Reinforcement Learning Solution for Cyber-Physical Systems Security Against Replay Attacks

- (2023) AIRS: Explanation for Deep Reinforcement Learning based Security Applications

- (2023) SSQLi: A Black-Box Adversarial Attack Method for SQL Injection Based on Reinforcement Learning

- (2023) On the use of Reinforcement Learning for Attacking and Defending Load Frequency Control

- (2023) An Optimal Active Defensive Security Framework for the Container-Based Cloud with Deep Reinforcement Learning

- (2023) AutoCAT: Reinforcement Learning for Automated Exploration of Cache-Timing Attacks

- (2023) Applying Reinforcement Learning for Enhanced Cybersecurity against Adversarial Simulation

- (2023) Offline RL+CKG: A hybrid AI model for cybersecurity tasks

- (2023) Learning automated defense strategies using graph-based cyber attack simulations

- (2023) Cyber Automated Network Resilience Defensive Approach against Malware Images

- (2023) Energy scheduling for DoS attack over multi-hop networks: Deep reinforcement learning approach

- (2023) Cybersecurity as a Tic-Tac-Toe Game Using Autonomous Forwards (Attacking) And Backwards (Defending) Penetration Testing in a Cyber Adversarial Artificial Intelligence System

- (2023) Deep Reinforcement Learning for Cyber System Defense under Dynamic Adversarial Uncertainties

- (2023) Catch Me If You Can: Improving Adversaries in Cyber-Security With Q-Learning Algorithms

- (2023) Security Analysis of Cyber-Physical Systems Using Reinforcement Learning

- (2023) Beyond von Neumann Era: Brain-inspired Hyperdimensional Computing to the Rescue

- (2023) Increasing attacker engagement on SSH honeypots using semantic embeddings of cyber-attack patterns and deep reinforcement learning

- (2023) Towards Dynamic Capture-The-Flag Training Environments For Reinforcement Learning Offensive Security Agents

- (2023) Leveraging Deep Reinforcement Learning for Automating Penetration Testing in Reconnaissance and Exploitation Phase

- (2023) HAXSS: Hierarchical Reinforcement Learning for XSS Payload Generation

- (2023) A Transfer Double Deep Q Network Based DDoS Detection Method for Internet of Vehicles

- (2022) Deep Reinforcement Learning for FlipIt Security Game

- (2022) DRAGON: Deep Reinforcement Learning for Autonomous Grid Operation and Attack Detection

- (2022) A Model-Free Approach to Intrusion Response Systems

- (2022) Reinforcement Learning Agents for Simulating Normal and Malicious Actions in Cyber Range Scenarios

- (2022) Sequential Topology Attack of Supply Chain Networks Based on Reinforcement Learning

- (2022) Defend to Defeat: Limiting Information Leakage in Defending against Advanced Persistent Threats

- (2022) How to Attack and Defend NextG Radio Access Network Slicing with Reinforcement Learning

- (2022) Knowledge Guided Two-player Reinforcement Learning for Cyber Attacks and Defenses

- (2022) Beyond CAGE: Investigating Generalization of Learned Autonomous Network Defense Policies

- (2022) Bridging Automated to Autonomous Cyber Defense: Foundational Analysis of Tabular Q-Learning

- (2022) Cascaded Reinforcement Learning Agents for Large Action Spaces in Autonomous Penetration Testing

- (2022) Model-Free Deep Reinforcement Learning in Software-Defined Networks

- (2022) Hierarchical reinforcement learning guidance with threat avoidance

- (2022) Exposing Surveillance Detection Routes via Reinforcement Learning, Attack Graphs, and Cyber Terrain

- (2022) Cognitive Models of Dynamic Decisions in Autonomous Intelligent Cyber Defense

- (2022) Optimizing cybersecurity incident response decisions using deep reinforcement learning

- (2022) Robust Moving Target Defense against Unknown Attacks: A Meta-Reinforcement Learning Approach

- (2022) Learning Games for Defending Advanced Persistent Threats in Cyber Systems

- (2022) IEEE P2668-Compliant Multi-Layer IoT-DDoS Defense System Using Deep Reinforcement Learning

- (2022) Privacy-Enhanced Intrusion Detection and Defense for Cyber-Physical Systems: A Deep Reinforcement Learning Approach

- (2022) DeepThrottle: Deep Reinforcement Learning for Router Throttling to Defend Against DDoS Attack in SDN

- (2022) Breakthrough to Adaptive and Cost-Aware Hardware-Assisted Zero-Day Malware Detection: A Reinforcement Learning-Based Approach

- (2022) Mitigating Jamming Attack in 5G Heterogeneous Networks: A Federated Deep Reinforcement Learning Approach

- (2022) Deep Reinforcement Learning based Evasion Generative Adversarial Network for Botnet Detection

- (2022) Adaptive threat mitigation in SDN using improved D3QN

- (2022) A Comprehensive Survey on Security Attacks to Edge Server of IoT Devices through Reinforcement Learning

- (2022) Smart Grid Worm Detection Based on Deep Reinforcement Learning

- (2022) Deep reinforcement learning based IRS-assisted mobile edge computing under physical-layer security

- (2022) Reinforcement Learning for Intrusion Detection: More Model Longness and Fewer Updates

- (2022) AutoDefense: Reinforcement Learning Based Autoreactive Defense Against Network Attacks

- (2022) ProAPT: Projection of APT Threats with Deep Reinforcement Learning

- (2022) Reinforced Transformer Learning for VSI-DDoS Detection in Edge Clouds

- (2022) H4rm0ny: A Competitive Zero-Sum Two-Player Markov Game for Multi-Agent Learning on Evasive Malware Generation and Detection

- (2022) Reinforcement Learning for Hardware Security: Opportunities, Developments, and Challenges

- (2022) Attrition: Attacking Static Hardware Trojan Detection Techniques Using Reinforcement Learning

- (2022) Deep Reinforcement Learning in the Advanced Cybersecurity Threat Detection and Protection

- (2022) ReCEIF: Reinforcement Learning-Controlled Effective Ingress Filtering

- (2022) AutoCAT: Reinforcement Learning for Automated Exploration of Cache-Timing Attacks

- (2022) GPDS: A multi-agent deep reinforcement learning game for anti-jamming secure computing in MEC network

- (2022) Reinforcement Learning based Adversarial Malware Example Generation Against Black-Box Detectors

- (2022) SAC-AP: Soft Actor Critic based Deep Reinforcement Learning for Alert Prioritization

- (2022) How to Mitigate DDoS Intelligently in SD-IoV: A Moving Target Defense Approach

- (2022) ReLFA: Resist Link Flooding Attacks via Renyi Entropy and Deep Reinforcement Learning in SDN-IoT

- (2022) An Artificial Intelligence-Enabled Framework for Optimizing the Dynamic Cyber Vulnerability Management Process

- (2022) Eavesdropping Game Based on Multi-Agent Deep Reinforcement Learning

- (2022) A Hidden Attack Sequences Detection Method Based on Dynamic Reward Deep Deterministic Policy Gradient

- (2022) Security State Estimation for Cyber-Physical Systems against DoS Attacks via Reinforcement Learning and Game Theory

- (2022) Developing Optimal Causal Cyber-Defence Agents via Cyber Security Simulation

- (2022) Enabling intrusion detection systems with dueling double deep Q-learning

- (2022) Multi-Agent Deep Reinforcement Learning-Driven Mitigation of Adverse Effects of Cyber-Attacks on Electric Vehicle Charging Station

- (2022) XSS Adversarial Example Attacks Based on Deep Reinforcement Learning

- (2022) Analyzing Multi-Agent Reinforcement Learning and Coevolution in Cybersecurity

- (2022) AlphaSOC: Reinforcement Learning-based Cybersecurity Automation for Cyber-Physical Systems

- (2022) Online Cyber-Attack Detection in the Industrial Control System: A Deep Reinforcement Learning Approach

- (2022) Detecting Cyber Attacks: A Reinforcement Learning Based Intrusion Detection System

- (2022) Robust Enhancement of Intrusion Detection Systems using Deep Reinforcement Learning and Stochastic Game

- (2022) irs-partition: An Intrusion Response System utilizing Deep Q-Networks and system partitions

- (2022) Defensive deception framework against reconnaissance attacks in the cloud with deep reinforcement learning

- (2022) Captcha me if you can: Imitation Games with Reinforcement Learning

- (2022) Deep-Reinforcement-Learning-Based QoS-Aware Secure Routing for SDN-IoT

- (2022) A generic scheme for cyber security in resource constraint network using incomplete information game

- (2022) A Layered Reference Model for Penetration Testing with Reinforcement Learning and Attack Graphs

- (2022) A flexible SDN-based framework for slow-rate DDoS attack mitigation by using deep reinforcement learning

- (2022) Learning Security Strategies through Game Play and Optimal Stopping

- (2022) Resilient Optimal Defensive Strategy of Micro-Grids System via Distributed Deep Reinforcement Learning Approach Against FDI Attack

- (2022) Data-driven Cyber-attack Detection of Intelligent Attacks in Islanded DC Microgrids

- (2022) Multiple Domain Cyberspace Attack and Defense Game Based on Reward Randomization Reinforcement Learning

- (2022) Cyber threat response using reinforcement learning in graph-based attack simulations

- (2022) Intrusion Prevention through Optimal Stopping

- (2022) Learning to Play an Adaptive Cyber Deception Game

- (2022) Neural Fictitious Self-Play for Radar Anti-Jamming Dynamic Game with Imperfect Information

- (2022) A Reinforcement Learning Approach for Defending Against Multi-Scenario Load Redistribution Attacks

- (2022) A Proactive Eavesdropping Game in MIMO systems Based on Multi-Agent Deep Reinforcement Learning

- (2022) FEAR: Federated Cyber-Attack Reaction in Distributed Software-Defined Networks with Deep Q-Network

- (2022) EvadeRL: Evading PDF Malware Classifiers with Deep Reinforcement Learning

- (2022) Link: Black-Box Detection of Cross-Site Scripting Vulnerabilities Using Reinforcement Learning

- (2022) MERLIN - Malware Evasion with Reinforcement LearnINg

- (2022) DeepAir: Deep Reinforcement Learning for Adaptive Intrusion Response in Software-Defined Networks

- (2022) DroidRL: Reinforcement Learning Driven Feature Selection for Android Malware Detection

- (2022) MAB-Malware: A Reinforcement Learning Framework for Attacking Static Malware Classifiers

- (2022) Behaviour-Diverse Automatic Penetration Testing: A Curiosity-Driven Multi-Objective Deep Reinforcement Learning Approach

- (2022) Safe Exploration in Wireless Security: A Safe Reinforcement Learning Algorithm with Hierarchical Structure

- (2022) Discovering Exfiltration Paths Using Reinforcement Learning with Attack Graphs

- (2022) Multi-Agent Reinforcement Learning for Decentralized Resilient Secondary Control of Energy Storage Systems against DoS Attacks

- (2021) Network defense decision-making based on a stochastic game system and a deep recurrent Q-network

- (2021) Discovering reflected cross-site scripting vulnerabilities using a multiobjective reinforcement learning environment

- (2021) Enhancing the insertion of NOP instructions to obfuscate malware via deep reinforcement learning

- (2021) Automating post-exploitation with deep reinforcement learning

- (2021) Moving Target Defense as a Proactive Defense Element for Beyond 5G

- (2021) Network Resilience Under Epidemic Attacks: Deep Reinforcement Learning Network Topology Adaptations

- (2021) An Intrusion Response Approach for Elastic Applications Based on Reinforcement Learning

- (2021) Reinforcement Learning-assisted Threshold Optimization for Dynamic Honeypot Adaptation to Enhance IoBT Networks Security

- (2021) Reinforcement Learning-based Hierarchical Seed Scheduling for Greybox Fuzzing

- (2021) SquirRL: Automating Attack Analysis on Blockchain Incentive Mechanisms with Deep Reinforcement Learning

- (2021) Reinforcement Learning for the Problem of Detecting Intrusion in a Computer System

- (2021) Timing Strategy for Active Detection of APT Attack Based on FlipIt Model and Q-learning Method

- (2021) Collaborative Multi-agent Reinforcement Learning for Intrusion Detection

- (2021) ATMoS+: Generalizable Threat Mitigation in SDN Using Permutation Equivariant and Invariant Deep Reinforcement Learning

- (2021) Network Security Defense Decision-Making Method Based on Stochastic Game and Deep Reinforcement Learning

- (2021) Solving Large-Scale Extensive-Form Network Security Games via Neural Fictitious Self-Play

- (2021) An Efficient Parallel Reinforcement Learning Approach to Cross-Layer Defense Mechanism in Industrial Control Systems

- (2021) SDN-based Moving Target Defense using Multi-agent Reinforcement Learning

- (2021) Reinforcement Learning for Industrial Control Network Cyber Security Orchestration

- (2021) Automating Privilege Escalation with Deep Reinforcement Learning

- (2021) Multi-Agent Reinforcement Learning Framework in SDN-IoT for Transient Load Detection and Prevention

- (2021) Crown Jewels Analysis using Reinforcement Learning with Attack Graphs

- (2021) Deep Q-Learning based Reinforcement Learning Approach for Network Intrusion Detection

- (2021) Deep-Reinforcement-Learning-Based Intrusion Detection in Aerial Computing Networks

- (2021) Deep Reinforcement Learning for Securing Software Defined Industrial Networks with Distributed Control Plane

- (2021) Autonomous network cyber offence strategy through deep reinforcement learning

- (2021) CyGIL: A Cyber Gym for Training Autonomous Agents over Emulated Network Systems

- (2021) Constraints Satisfiability Driven Reinforcement Learning for Autonomous Cyber Defense

- (2021) Curious SDN for network attack mitigation

- (2021) Catch Me If You Learn: Real-Time Attack Detection and Mitigation in Learning Enabled CPS

- (2021) SyzVegas: Beating Kernel Fuzzing Odds with Reinforcement Learning

- (2021) Network Environment Design for Autonomous Cyberdefense

- (2021) CybORG: A Gym for the Development of Autonomous Cyber Agents

- (2021) SQL Injections and Reinforcement Learning: An Empirical Evaluation of the Role of Action Structure

- (2021) Towards Autonomous Defense of SDN Networks Using MuZero Based Intelligent Agent

- (2021) Defense Against Advanced Persistent Threats in Smart Grids: A Reinforcement Learning Approach

- (2021) Deep hierarchical reinforcement agents for automated penetration testing

- (2021) Adversarial Attack and Defense on Graph-based IoT Botnet Detection Approach

- (2021) Simulating a Logistics Enterprise Using an Asymmetrical Wargame Simulation with Soar Reinforcement Learning and Coevolutionary Algorithms

- (2021) Deep Reinforcement Learning for Mitigating Cyber-Physical DER Voltage Unbalance Attacks

- (2021) Mixed Initiative Balance of Human-Swarm Teaming in Surveillance via Reinforcement learning

- (2021) Proximal Policy Based Deep Reinforcement Learning Approach for Swarm Robots

- (2021) Using Deep Reinforcement Learning to Evade Web Application Firewalls

- (2021) Sequential Node Attack of Complex Networks based on Q-learning Method

- (2021) Learning Intrusion Prevention Policies through Optimal Stopping

- (2021) Using Cyber Terrain in Reinforcement Learning for Penetration Testing

- (2021) Reinforcement learning based self-adaptive moving target defense against DDoS attacks

- (2021) Modeling, Detecting, and Mitigating Threats Against Industrial Healthcare Systems: A Combined Software Defined Networking and Reinforcement Learning Approach

- (2021) Lightweight IDS For UAV Networks: A Periodic Deep Reinforcement Learning-based Approach

- (2021) DESOLATER: Deep Reinforcement Learning-Based Resource Allocation and Moving Target Defense Deployment Framework

- (2021) RAIDER: Reinforcement-aided Spear Phishing Detector

- (2021) DDoS Mitigation Based on Space-Time Flow Regularities in IoV: A Feature Adaption Reinforcement Learning Approach

- (2021) Power system structure optimization based on reinforcement learning and sparse constraints under DoS attacks in cloud environments

- (2021) Network Abnormal Traffic Detection Model Based on Semi-Supervised Deep Reinforcement Learning

- (2021) An adaptive honeypot using Q-Learning with severity analyzer

- (2021) Game-Theoretic Actor–Critic-Based Intrusion Response Scheme (GTAC-IRS) for Wireless SDN-Based IoT Networks

- (2021) A Reinforcement Learning Approach for Dynamic Information Flow Tracking Games for Detecting Advanced Persistent Threats

- (2021) Deep Reinforcement Learning for Backup Strategies against Adversaries

- (2021) A Secure Learning Control Strategy via Dynamic Camouflaging for Unknown Dynamical Systems under Attacks

- (2020) Learning and Planning in the Feature Deception Problem

- (2020) Machine Learning Cyberattack and Defense Strategies

- (2020) Reinforcement Learning for Attack Mitigation in SDN-enabled Networks

- (2020) Per-Host DDoS Mitigation by Direct-Control Reinforcement Learning

- (2020) Game Theory and Reinforcement Learning Based Secure Edge Caching in Mobile Social Networks

- (2020) A New Black Box Attack Generating Adversarial Examples Based on Reinforcement Learning

- (2020) Deep Reinforcement Adversarial Learning Against Botnet Evasion Attacks

- (2020) Deep Reinforcement Learning for Adaptive Cyber Defense and Attacker’s Pattern Identification

- (2020) Reinforcement Learning Based Approach for Flip Attack Detection

- (2020) Reinforcement Learning in FlipIt

- (2020) CPSS LR-DDoS Detection and Defense in Edge Computing Utilizing DCNN Q-Learning

- (2020) Multi-agent Reinforcement Learning in Bayesian Stackelberg Markov Games for Adaptive Moving Target Defense

- (2020) An Intelligent Deployment Policy for Deception Resources Based on Reinforcement Learning

- (2020) Defense Against Advanced Persistent Threats: Optimal Network Security Hardening Using Multi-stage Maze Network Game

- (2020) Automated Adversary Emulation for Cyber-Physical Systems via Reinforcement Learning

- (2020) DRL-FAS: A Novel Framework Based on Deep Reinforcement Learning for Face Anti-Spoofing

- (2020) Q-Bully: A Reinforcement Learning based Cyberbullying Detection Framework

- (2020) Application-Layer DDoS Defense with Reinforcement Learning

- (2020) DQ-MOTAG: Deep Reinforcement Learning-based Moving Target Defense Against DDoS Attacks

- (2020) A Hybrid Game Theory and Reinforcement Learning Approach for Cyber-Physical Systems Security

- (2020) Automated Post-Breach Penetration Testing through Reinforcement Learning

- (2020) DeepBLOC: A Framework for Securing CPS through Deep Reinforcement Learning on Stochastic Games

- (2020) Deep Reinforcement Learning for DER Cyber-Attack Mitigation

- (2020) Adaptive Cyber Defense Against Multi-Stage Attacks Using Learning-Based POMDP

- (2020) Using Knowledge Graphs and Reinforcement Learning for Malware Analysis

- (2020) Autonomous Security Analysis and Penetration Testing

- (2020) POMDP + Information-Decay: Incorporating Defender's Behaviour in Autonomous Penetration Testing

- (2020) ATMoS: Autonomous Threat Mitigation in SDN using Reinforcement Learning

- (2020) Modeling Penetration Testing with Reinforcement Learning Using Capture-the-Flag Challenges: Trade-offs between Model-free Learning and A Priori Knowledge

- (2020) Finding Effective Security Strategies through Reinforcement Learning and Self-Play

- (2020) AFRL: Adaptive federated reinforcement learning for intelligent jamming defense in FANET

- (2020) Reinforcement Learning for Efficient Network Penetration Testing

- (2020) The Agent Web Model -- Modelling web hacking for reinforcement learning

- (2020) Stochastic Dynamic Information Flow Tracking Game using Supervised Learning for Detecting Advanced Persistent Threats

- (2020) Reinforcement Learning Based PHY Authentication for VANETs

- (2020) Deep Reinforcement Learning for Cybersecurity Assessment of Wind Integrated Power Systems

- (2020) Smart Security Audit: Reinforcement Learning with a Deep Neural Network Approximator

- (2020) Quickest Detection of Advanced Persistent Threats: A Semi-Markov Game Approach

- (2020) Distributed Reinforcement Learning for Cyber-Physical System With Multiple Remote State Estimation Under DoS Attacker

- (2020) Secure Crowdsensing in 5G Internet of Vehicles: When Deep Reinforcement Learning Meets Blockchain

- (2020) Deep Reinforcement Learning based Intrusion Detection System for Cloud Infrastructure

- (2020) Application of deep reinforcement learning to intrusion detection for supervised problems

- (2019) A game-theoretic method based on Q-learning to invalidate criminal smart contracts

- (2019) A Performance Evaluation of Deep Reinforcement Learning for Model-Based Intrusion Response

- (2019) Deep Q-Learning and Particle Swarm Optimization for Bot Detection in Online Social Networks

- (2019) Finding Needles in a Moving Haystack: Prioritizing Alerts with Adversarial Reinforcement Learning

- (2019) Evaluation of Reinforcement Learning-Based False Data Injection Attack to Automatic Voltage Control

- (2019) Study of Learning of Power Grid Defense Strategy in Adversarial Stage Game

- (2019) Learning to Cope with Adversarial Attacks

- (2019) Learning Distributed Cooperative Policies for Security Games via Deep Reinforcement Learning

- (2019) An Efficient Reinforcement Learning-Based Botnet Detection approach

- (2019) Strategic Learning for Active, Adaptive, and Autonomous Cyber Defense

- (2019) QFlip: An Adaptive Reinforcement Learning Strategy for the FlipIt Security Game

- (2019) Solving Cyber Alert Allocation Markov Games with Deep Reinforcement Learning

- (2019) Adaptive Honeypot Engagement Through Reinforcement Learning of Semi-Markov Decision Processes

- (2019) Detecting Phishing Websites through Deep Reinforcement Learning

- (2019) Adversarial Deep Reinforcement Learning based Adaptive Moving Target Defense

- (2019) Autonomous Penetration Testing using Reinforcement Learning

- (2019) A Multistage Game in Smart Grid Security: A Reinforcement Learning Solution

- (2019) Automating Penetration Testing using Reinforcement Learning

- (2019) Reinforcement Learning-Based DoS Mitigation in Software Defined Networks

- (2019) Adversarial attack and defense in reinforcement learning-from AI security view

- (2019) A Learning-Based Solution for an Adversarial Repeated Game in Cyber–Physical Power Systems

- (2019) Reinforcement Learning for Cyber-Physical Security Assessment of Power Systems

- (2019) Empowering Reinforcement Learning on Big Sensed Data for Intrusion Detection

- (2019) Cyber-Attack Recovery Strategy for Smart Grid Based on Deep Reinforcement Learning

- (2019) Deep Reinforcement Learning for Partially Observable Data Poisoning Attack in Crowdsensing Systems

- (2019) Adaptive Alert Management for Balancing Optimal Performance among Distributed CSOCs using Reinforcement Learning

- (2018) Simulating SQL Injection Vulnerability Exploitation Using Q-Learning Reinforcement Learning Agents

- (2018) Security in Mobile Edge Caching with Reinforcement Learning

- (2018) Detection of online phishing email using dynamic evolving neural network based on reinforcement learning

- (2018) A reinforcement learning approach for attack graph analysis

- (2018) Reinforcement Learning for Autonomous Defence in Software-Defined Networking

- (2018) Learning to Evade Static PE Machine Learning Malware Models via Reinforcement Learning

- (2018) Autonomic Computer Network Defence Using Risk State and Reinforcement Learning

- (2018) Reinforcement Learning for Intelligent Penetration Testing

- (2018) Autonomous Intelligent Cyber-defense Agent (AICA) Reference Architecture

- (2018) Deep reinforcement learning based optimal defense for cyber-physical system in presence of unknown cyber-attack

- (2018) Adversarial Reinforcement Learning for Observer Design in Autonomous Systems under Cyber Attacks

- (2018) Machine learning for autonomous cyber defense

- (2018) Online Cyber-Attack Detection in Smart Grid: A Reinforcement Learning Approach

- (2018) Deep Reinforcement Learning based Smart Mitigation of DDoS Flooding in Software-Defined Networks

- (2018) Off-Policy Q-learning Technique for Intrusion Response in Network Security

- (2018) UAV Relay in VANETs Against Smart Jamming With Reinforcement Learning

- (2018) A Game-Theoretical Approach to Cyber-Security of Critical Infrastructures Based on Multi-Agent Reinforcement Learning

- (2018) Robotics CTF (RCTF), a playground for robot hacking

- (2018) NIDSRL: Network Based Intrusion Detection System Using Reinforcement Learning

- (2018) An IRL Approach for Cyber-Physical Attack Intention Prediction and Recovery

- (2018) QRASSH - A Self-Adaptive SSH Honeypot Driven by Q-Learning

- (2018) Using Reinforcement Learning to Conceal Honeypot Functionality

- (2018) Improving adaptive honeypot functionality with efficient reinforcement learning parameters for automated malware

- (2018) Enhancing Machine Learning Based Malware Detection Model by Reinforcement Learning

- (2017) Network Defense Strategy Selection with Reinforcement Learning and Pareto Optimization

- (2017) Adversarial Reinforcement Learning in a Cyber Security Simulation

- (2017) Detecting Stealthy Botnets in a Resource-Constrained Environment using Reinforcement Learning

- (2017) Q-learning Based Vulnerability Analysis of Smart Grid against Sequential Topology Attacks

- (2017) Multi-agent Reinforcement Learning Based Cognitive Anti-jamming

- (2017) Reinforcement Learning Based Mobile Offloading for Cloud-Based Malware Detection

- (2017) A Secure Mobile Crowdsensing Game With Deep Reinforcement Learning

- (2017) Online Algorithms for Adaptive Cyber Defense on Bayesian Attack Graphs

- (2016) Markov Security Games: Learning in Spatial Security Problems

- (2016) Dynamic Scheduling of Cybersecurity Analysts for Minimizing Risk Using Reinforcement Learning

- (2016) Balancing Security and Performance for Agility in Dynamic Threat Environments

- (2016) Reinforcement Learning Based Anti-jamming with Wideband Autonomous Cognitive Radios

- (2016) PHY-Layer Spoofing Detection With Reinforcement Learning in Wireless Networks

- (2015) Application of reinforcement learning for security enhancement in cognitive radio networks

- (2015) Power control with reinforcement learning in cooperative cognitive radio networks against jamming

- (2015) Game Theory with Learning for Cyber Security Monitoring

- (2015) Spoofing Detection with Reinforcement Learning in Wireless Networks

- (2015) Mobile Cloud Offloading for Malware Detections with Learning

- (2014) Reinforcement Learning Algorithms for Adaptive Cyber Defense against Heartbleed

- (2014) Cooperative game theoretic approach using fuzzy Q-learning for detecting and preventing intrusions in wireless sensor networks

- (2014) Q-Learning: From Computer Network Security to Software Security

- (2013) Multiagent Router Throttling: Decentralized Coordinated Response Against DDoS Attacks

- (2013) Hybrid Learning in Stochastic Games and Its Application in Network Security

- (2013) Competing Mobile Network Game: Embracing Antijamming and Jamming Strategies with Reinforcement Learning

- (2012) Intrusion Detection System using Log Files and Reinforcement Learning

- (2012) Anti-jamming in Cognitive Radio Networks Using Reinforcement Learning Algorithms

- (2011) An Anti-jamming Strategy for Channel Access in Cognitive Radio Networks

- (2011) Distributed strategic learning with application to network security

- (2010) Dynamic policy-based IDS configuration

- (2008) Reinforcement Learning for Vulnerability Assessment in Peer-to-Peer Networks

- (2007) Defending DDoS Attacks Using Hidden Markov Models and Cooperative Reinforcement Learning

- (2006) An intrusion detection game with limited observations

- (2005) A Reinforcement Learning Approach for Host-Based Intrusion Detection Using Sequences of System Calls

- (2005) Multi-agent reinforcement learning for intrusion detection

- (2000) Next Generation Intrusion Detection: Autonomous Reinforcement Learning of Network Attacks

- (2023) Detecting Complex Cyber Attacks Using Decoys with Online Reinforcement Learning

- (2022) Anomaly Detection in Competitive Multiplayer Games

- (2022) Secure Automated and Autonomous Systems

- (2014) Distributed Reinforcement Learning for Network Intrusion Response

- (2009) Multi-Agent Reinforcement Learning for Intrusion Detection

- (2023) Competitive Reinforcement Learning for Autonomous Cyber Operations

- (2023) Learning Cyber Defence Tactics from Scratch with Cooperative Multi-Agent Reinforcement Learning

- (2022) Automating exploitation of SQL injection with reinforcement learning

- (2022) Self-Play Reinforcement Learning for Finding Intrusion Prevention Strategies

- (2022) Reinforcement Learning-aided Dynamic Analysis of Evasive Malware

- (2021) Intrusion Detection Based on Reinforcement Learning

- (2021) Bayesian Reinforcement Learning Methods for Network Intrusion Prevention

- (2019) Learning to Hack

- (2018) Autonomous Penetration Testing using Reinforcement Learning

- (2018) Analysis of Network Intrusion Detection System with Machine Learning Algorithms (Deep Reinforcement Learning Algorithm)

- (2022) Simulating Network Lateral Movements through the CyberBattleSim Web Platform

- (2018) Autonomous Penetration Testing using Reinforcement Learning

- (2023) Learning Near-Optimal Intrusion Responses Against Dynamic Attackers

- (2022) Autonomous Network Defence using Reinforcement Learning

- (2022) Intrusion Prevention through Optimal Stopping

- (2021) Learning Intrusion Prevention Policies through Optimal Stopping

↑ Books

- (2021) Game Theory and Machine Learning for Cyber Security (Chapter 5 on RL)

- (2019) Reinforcement Learning for Cyber-Physical Systems with Cybersecurity Case Studies

- (2010) Network Security: A Decision and Game-Theoretic Approach

↑ Blogposts

- (2021) Gamifying machine learning for stronger security and AI models

- (2021) Automating Cyber-Security With Reinforcement Learning

- (2021) Towards a method for computing effective intrusion prevention policies using reinforcement learning

↑ Talks

- (2023) Uplifting Cyber Defense

- (2023) Learning Near-Optimal Intrusion Response for Large-Scale IT Infrastructures via Decomposition

- (2023) Reinforcement Learning for Autonomous Cyber Defense

- (2023) Digital Twins and Reinforcement Learning for Security Automation

- (2023) Automation of digital crime investigation using Reinforcement Learning (RL)

- (2023) Applying Multi-Agent Reinforcement Learning (MARL) in a Cyber Wargame Engine

- (2023) Intrusion Response through Optimal Stopping

- (2022) Inroads in Autonomous Network Defence using Explained Reinforcement Learning (CAMLIS 2022)

- (2022) The Journey to The Self-Driving SOC

- (2022) Applications of Deep Reinforcement Learning for Cyber Security

- (2022) CNSM 2022, Adapting Security Policies in Dynamic IT Environments - Hammar & Stadler

- (2022) Self-Learning Systems for Cyber Defense

- (2022) Poster: Study of Intelligent Cyber Range Simulation using Reinforcement Learning

- (2022) Paper Study - Survey of Reinforcement Learning for Cyber Security

- (2022) The Role of Artificial Intelligence in Cyber-Defence | AI & Cybersecurity | Vincent Lenders

- (2022) Learning Security Strategies through Game Play and Optimal Stopping - Hammar & Stadler

- (2022) Reinforcement Learning for Complex Security Games and Beyond

- (2022) NOMS22 Demo - A System for Interactive Examination of Learned Security Policies - Hammar & Stadler

- (2022) Reinforcement Learning Applications: Cyber Security

- (2021) Artificial Intelligence Applications to Cybersecurity (AI ATAC) Prize Challenges I&II

- (2021) NordSec 2021 - SQL Injections and Reinforcement Learning

- (2021) Deep hierarchical reinforcement agents for automated penetration testing

- (2021) USENIX Security '21 - SyzVegas: Beating Kernel Fuzzing Odds with Reinforcement Learning

- (2021) CyGIL: A Cyber Gym for Training Autonomous Agents over Emulated Network Systems

- (2021) Simulating a Logistics Enterprise Using an Asymmetrical Wargame Simulation with Soar Reinforcement Learning and Coevolutionary Algorithms

- (2021) Incorporating Deception into CyberBattleSim for Autonomous Defense

- (2021) CybORG: A Gym for the Development of Autonomous Cyber Agents

- (2021) Defending the Cyber Front with AI - CyCon 2021

- (2021) Informing Autonomous Deception Systems with Cyber Expert Performance Data

- (2021) ACD 2021 Keynote - Prof. George Cybenko - Attrition in Adaptive Cyber Defense

- (2021) Reinforcement learning approaches on intrusion detection

- (2021) Applying Deep Reinforcement Learning (DRL) in a Cyber Wargaming Engine

- (2021) Automated Penetration Testing using Reinforcement Learning

- (2021) Training an Autonomous Pentester with Deep RL

- (2021) Learning Intrusion Prevention Policies Through Optimal Stopping

- (2020) Finding Effective Security Strategies through Reinforcement Learning and Self-Play

- (2020) Autonomous Security Analysis and Penetration Testing (ASAP) - Ankur Chowdhary

- (2020) Autonomous Security Analysis and Penetration Testing: A reinforcement learning approach

- (2020) Artificial Intelligence based Autonomous Penetration Testing

- (2019) Cost-Efficient Malware Detection Using Deep Reinforcement Learning

- (2019) Trying to Make Meterpreter into an Adversarial Example

- (2019) A Reinforcement Learning Framework for Smart, Secure, and Efficient Cyber-Physical Autonomy

- (2019) Adaptive Honeypot Engagement through Reinforcement Learning of Semi-Markov Decision Processes

- (2018) Autonomous Cyber Defense: AI and the Immune System Approach

- (2018) Bonware to the Rescue: the Future Autonomous Cyber Defense Agents | Dr Alexander Kott | CAMLIS 2018

- (2018) CSIAC Webinar - Learning to Win: Making the Case for Autonomous Cyber Security Solutions

↑ Miscellaneous

- (2023) Proceedings of the 2nd Workshop on Adaptive Cyber Defense

- (2023) GameSec '23: 14th Conference on Decision and Game Theory for Security (GameSec-23)

- (2023) AISec '23: 16th ACM Workshop on Artificial Intelligence and Security

- (2022) AI for Cyber Defence Mailing List

- (2022) AI for Cyber Defence (AICD) research centre - Alan Turing Institute

- (2022) DARPA's CASTLE: Cyber Agents for Security Testing and Learning Environments using Reinforcement Learning

- (2022) AISec '22: 15th ACM Workshop on Artificial Intelligence and Security

- (2022) ICML Workshop on Machine Learning for Cybersecurity

- (2022) AAAI Workshop on Artificial Intelligence for Cyber Security (AICS)

- (2022) ECMLPKDD Workshop on Machine Learning for Cybersecurity (MLCS)

- (2021) IJCAI First International Workshop on Adaptive Cyber Defense

- (2021) ICONIP Workshop on Artificial Intelligence for Cyber Security (AICS)

- (2021) ECMLPKDD Workshop on Machine Learning for Cybersecurity (MLCS)

- (2021) Self-Learning AI

- (2021) AI/ML for Cybersecurity: Challenges, Solutions, and Novel Ideas at SIAM Data Mining 2021

- (2020) ECMLPKDD Workshop on Machine Learning for Cybersecurity (MLCS)

- (2020) Self-Learning Systems for Cyber Defense

- (2020) Workshop on Artificial Intelligence for Cyber Security (AICS)

- (2019) ECMLPKDD Workshop on Machine Learning for Cybersecurity (MLCS)

- (2019) Workshop on Artificial Intelligence for Cyber Security (AICS)

Contributions are very welcome. Please use Github issues and pull requests.

Thanks for all your contributions and for keeping this project up to date.

Creative Commons

(C) 2021-2023